Methods for Gastrointestinal Endoscopy Quantification: A Focus on Hands and Fingers Kinematics

Abstract

1. Introduction

2. Related work

2.1. Direct Observation

- Scope handling: (1) Exhibits good control of the head and shaft of the colonoscope at all times; and (2) Demonstrates ability to use all scope functions (buttons/biopsy channel) whilst maintaining a stable hold on the colonoscope. Minimizes external looping in the shaft of the instrument.

- Tip control: (1) Integrated technique, i.e., the ability to combine tip and torque steering to accurately control the tip of the colonoscope and maneuver it in the correct direction; and (2) Individual components: tip steering, torque steering and luminal awareness.

- Air management: Appropriate insufflation and suction of air to minimize over-distension of the bowel while maintaining adequate views.

- Loop management: Uses appropriate techniques (tip and torque steering, withdrawal, position change) to minimize and prevent loop formation.

2.2. Pressure and Force Measurement

- Shergill et al. (2009) evaluated upper-extremity musculoskeletal load [15]. In particular, they measured right-thumb pinch force and downward force, as well as muscle activity in both the left and right forearms. They found high left wrist extensor muscle activity and high right-thumb pinch forces, exceeding the threshold levels known to increase the risk of injury [18]. These experiments were extended in 2021, again collecting data on thumb pinch force and forearm muscle loads [18]. Differences between male and female endoscopists were observed at the biomechanical level.

- A wireless device called the Colonoscopy Force Monitor (CFM) was used to measure the manipulation patterns and the force applied by the hands and fingers during the insertion and withdrawal of the tube during a colonoscopy [6,19]. This device was able to capture the forces exerted on the endoscope: linear force (push/pull, or axial) and radial force (torque). A sensor on the hose of the colonoscope was also used in [26] for the real-time measurement of the force and torque applied to the colonoscope by the hands and fingers. This work was not focused on training or injury prevention but on supporting the endoscopist by providing information about the force and posture of the distal end of the endoscope to avoid bowel perforation and looping. The authors refer to previous designs, identifying some issues, particularly that they are bulky and demand two-handed operation.
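As a rough illustration of how push/pull force and torque recordings of this kind might be summarized, the following Python sketch computes peak and mean values per direction. The sign conventions (positive axial force for push, positive torque for clockwise), the units, and the function name `summarize_force` are assumptions made for illustration; they do not reflect the actual data format of the CFM or of the sensor in [26].

```python
from statistics import mean


def summarize_force(axial_n, torque_ncm):
    """Summarize hypothetical force samples recorded on the endoscope tube.

    axial_n:    axial force samples in newtons; positive = push,
                negative = pull (assumed convention).
    torque_ncm: torque samples in N*cm; positive = clockwise,
                negative = counterclockwise (assumed convention).
    """
    # Split each signal by direction and work with magnitudes.
    push = [f for f in axial_n if f > 0]
    pull = [-f for f in axial_n if f < 0]
    cw = [t for t in torque_ncm if t > 0]
    ccw = [-t for t in torque_ncm if t < 0]

    def stats(samples):
        # Return (peak, mean); zeros when the direction never occurs.
        if not samples:
            return 0.0, 0.0
        return max(samples), mean(samples)

    summary = {}
    for name, samples in (("push", push), ("pull", pull),
                          ("cw", cw), ("ccw", ccw)):
        peak, avg = stats(samples)
        summary[f"peak_{name}"] = peak
        summary[f"mean_{name}"] = avg
    return summary


if __name__ == "__main__":
    print(summarize_force([1.0, 3.0, -2.0, 0.0], [0.5, -1.5]))
```

Separating the signal by direction keeps insertion (push) and withdrawal (pull) loads apart, which is how the cited works describe them.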

2.3. Motion Quantification

- In [23,24], right wrist posture and movements were analyzed using a magnetic motion-tracking device. Endoscopists wore a right arm sleeve and glove that were custom-made for this study. The hypothesis of these studies was that the range of wrist movement (mid, center, extreme, and out) for each wrist degree of freedom (DoF: flexion/extension, abduction/adduction, pronation/supination) might decrease as experience in colonoscopy is acquired. It was concluded that fellows spent significantly less time in an extreme range of wrist movements at the end of the study compared to the baseline evaluation.

- Microsoft Kinect™ was used in [10] to measure the technical skills of endoscopists. Seven metrics were analyzed to find discriminative motion patterns between novice and experienced endoscopists: hand distance from the gurney, the number of times the right hand was used to control the small wheel of the colonoscope, the angulation of the elbows, the position of the hands in relation to body posture, the angulation of the body posture in relation to the anus, the mean distance between the hands, and the percentage of time the hands were close to each other.

- In [28], a motion-tracking setup to measure wrist and elbow joint motions is described, together with several wrist and elbow motion metrics. For each wrist, the axes of flexion/extension and abduction/adduction motions were analyzed. For each elbow, the axes of flexion/extension and supination/pronation movements were analyzed. For each joint, the number of times the joint entered extreme ranges of motion, as well as the total time spent in extreme ranges of motion, were computed.

- In [29,30], flexible wearable sensors placed on the dorsum of both hands and the dorsal section of both forearms (2/3 of the distance from wrist to elbow) were used to compare differences in movement between novices and experts. Three-dimensional coordinates along the length of the endoscope were taken using a magnetic endoscope imaging system called ScopeGuide (UPD-3, Olympus, Tokyo, Japan), which includes electromagnetic coils along the length of the endoscope.
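The extreme-range metrics mentioned above (number of entries into an extreme range and total time spent there) can be sketched as follows. This is a minimal Python illustration under stated assumptions: the angle limits, the sampling rate, and the function name `extreme_range_metrics` are hypothetical, and the cited studies may define their ranges differently.

```python
def extreme_range_metrics(angles_deg, sample_rate_hz, lower_deg, upper_deg):
    """Count entries into, and time spent in, the extreme range of one joint axis.

    A sample is treated as 'extreme' when it lies outside the interval
    [lower_deg, upper_deg]. These limits are illustrative assumptions.
    Returns (number_of_entries, seconds_in_extreme_range).
    """
    entries = 0
    extreme_samples = 0
    was_extreme = False
    for angle in angles_deg:
        is_extreme = angle < lower_deg or angle > upper_deg
        if is_extreme:
            extreme_samples += 1
            # A new entry is counted only on the transition into the range.
            if not was_extreme:
                entries += 1
        was_extreme = is_extreme
    return entries, extreme_samples / sample_rate_hz


if __name__ == "__main__":
    # Hypothetical wrist flexion/extension trace sampled at 10 Hz.
    trace = [0, 10, 50, 55, 20, -45, 0]
    print(extreme_range_metrics(trace, 10, -40, 40))
```

Counting transitions rather than samples keeps the two metrics independent: a single long excursion yields one entry but a large time value.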

2.4. Other Quantifications

3. Hand and Finger Kinematics Quantification

3.1. Hand and Finger Kinematics

3.2. Quantification Technologies

3.2.1. Sensor-Based Technology

3.2.2. Vision-Based Technology

3.3. Smart Gloves

3.3.1. Manus VR Prime II

3.3.2. MoCap Pro

3.4. Camera-Based Systems

4. Experimentation

4.1. Motion Capture Devices

4.2. The Colonoscopy Process

4.3. Aspects Evaluated of the Two Proposed Capture Systems

- Installation (complicated/time/limited license);

- Calibration (more or less complex and fragile);

- Battery (limitation in measurements/Autonomy);

- Communication (Bluetooth or cable);

- Ease of use (difficulty due to gender or hand size);

- Naturalness (naturalness of use during the handling of tools).

5. Results

5.1. Set Up Process

5.1.1. Facility

5.1.2. Connection

5.1.3. Calibration

5.1.4. Data Treatment

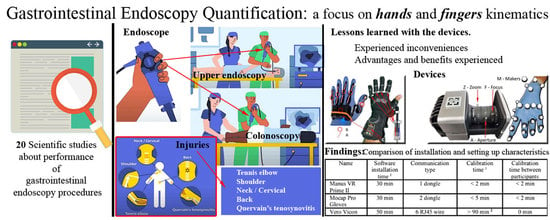

5.2. Lessons Learned with the Devices

5.2.1. Manus VR Prime II Gloves

Experienced Inconveniences

- Rigid gloves prevent the natural mobility of the hand and therefore are more uncomfortable.

- Each purchased unit comes in a single size, so several units are needed to cover different hand sizes.

- Raw data cannot be extracted directly for further analysis.

- Does not exchange finger movement data with other measurement devices such as Xsens. To do that, the Prime Xsens version is necessary.

Advantages and Benefits Experienced

- Relatively good battery capacity, allowing 40 min of measurement.

- Bluetooth wireless communication enables remote operation.

- Support for incorporating external position trackers.

- Data are recorded in Manus's own encrypted format.

- Data can be exported as FBX.

5.2.2. Stretchsense MoCap Pro Gloves

Experienced Inconveniences

- Poorly adaptable to smaller hands; in this specific case, the fingertips are covered, which is inconvenient for the specialist.

- Calibration is performed pose by pose, a slower process than with Manus, whose calibration is more sequential.

- Difficulty putting on the gloves, requiring help from a partner.

- Adduction/abduction movements are not captured well and therefore are not clearly reflected in the hand model.

- The bar graph that shows the movements is very small and does not allow the variation of the data to be appreciated.

Advantages and Benefits Experienced

- Very flexible and therefore comfortable to wear, allowing for more natural measurements.

- Approximately 3 h of use at full capacity.

- Offers alternatives for transmitting and/or storing the captured data, such as SD memory.

- Allows the captured raw data to be extracted directly in CSV format, with quaternion output.
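To illustrate how quaternion output in CSV form could be turned into a more interpretable quantity, the sketch below computes the rotation angle of each frame relative to the first frame. The column names (`w`, `x`, `y`, `z`) and the function name are hypothetical; the real export layout of the gloves is not described here and would need to be checked against the vendor documentation.

```python
import csv
import io
import math


def rotation_angles_deg(csv_text):
    """Return, per frame, the rotation angle (degrees) relative to frame 0.

    Assumes a CSV with one orientation quaternion per row in columns
    'w', 'x', 'y', 'z' (a hypothetical layout used for illustration).
    """
    quats = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        q = [float(row[key]) for key in ("w", "x", "y", "z")]
        # Normalize so that small export rounding errors do not bias the angle.
        norm = math.sqrt(sum(c * c for c in q))
        quats.append([c / norm for c in q])
    if not quats:
        return []

    reference = quats[0]
    angles = []
    for q in quats:
        # |dot| handles the q / -q ambiguity (both encode the same rotation).
        dot = abs(sum(a * b for a, b in zip(reference, q)))
        dot = min(1.0, dot)  # guard against values slightly above 1
        angles.append(math.degrees(2.0 * math.acos(dot)))
    return angles


if __name__ == "__main__":
    sample = "w,x,y,z\n1,0,0,0\n0.7071067811865476,0.7071067811865476,0,0\n"
    print(rotation_angles_deg(sample))
```

The angle between two unit quaternions is used here because it is independent of the rotation axis, which makes it a convenient first check before mapping data onto a full hand model.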

5.2.3. Vero Vicon

Experienced Inconveniences

- Very delicate calibration owing to the sensitivity of the cameras.

- Assembly and preparation of the capture scenario take more than 1 h.

- Raw data captured in its own session format and not directly analyzable.

- Requires a prior study of marker placement for later modeling and data interpretation.

- The markers are the essential elements to monitor, and sometimes they do not allow for a natural movement of fingers and hand in certain exercises.

Advantages and Benefits Experienced

- Unlimited battery capacity.

- Very high precision in data capture with a maximum average error of 1 mm.

- Allows raw data to be extracted in CSV format, with quaternion output.

- Allows pose data to be supplied automatically over VRPN. VRPN clients that are able to make use of velocity and acceleration data can use this information directly from Tracker’s VRPN output rather than having to calculate this on the client side. This is useful if, for example, you want to use Vicon data within a dead reckoning algorithm or other prediction algorithms to estimate poses at a time that may or may not coincide with a Vicon frame.
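The dead reckoning idea mentioned above can be sketched with a constant-acceleration model: given the position, velocity and acceleration of a marker or segment at the last received frame, the position a short time later is extrapolated. This is a generic kinematic sketch in Python, not Vicon or VRPN code; the function name and the tuple-based data layout are assumptions.

```python
def predict_position(position, velocity, acceleration, dt):
    """Extrapolate a 3D position dt seconds after the last known frame.

    Uses the constant-acceleration model p + v*dt + 0.5*a*dt^2 on each axis.
    All inputs are (x, y, z) tuples in consistent units (assumed layout).
    """
    return tuple(
        p + v * dt + 0.5 * a * dt * dt
        for p, v, a in zip(position, velocity, acceleration)
    )


if __name__ == "__main__":
    # Last frame: at z = 1 m, moving along x at 1 m/s, accelerating along y.
    print(predict_position((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), 0.5))
```

Having velocity and acceleration supplied by the capture system, as described above, avoids differentiating noisy position data on the client side before applying such a model.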

6. Discussion

- None of the analyzed devices offers integrated analysis of these movements and their correlation with musculoskeletal injuries.

- The two types of gloves studied do not specify a precision range, which calls into question the validity of the data for scientific study and the quality of the results.

- The markers distributed over the hand cause two important problems: (i) occlusion, which causes gaps and, therefore, a loss of information about marker trajectory and position; and (ii) difficulty in manipulating the tool, which hinders the maneuver.

- Very few studies focus on the analysis of hand modeling, due in part to the fact that the hand is an extremely complex part of the body.

- Make data transmission compatible in both directions so that the precision of the cameras is used to correct a problem they have with occlusions. In addition, the analysis and post-processing of data from both points of view, that of the hands and body posture, would allow for obtaining more significant conclusions.

- Design personalized gloves that are highly adapted to the contour and silhouette of the hand, not interfering and not hindering the fine manipulation of microsensors such as Polhemus Micro Sensors. Therefore, the design of a glove with an array of infrared LEDs is proposed, similar to the array fitted on the Vero Vicon calibration stick.

- Hand modeling integrated with deep learning, to speed up processing and to fill the gaps produced by the occlusion of the markers or, failing that, of the infrared LED array integrated in the previously proposed glove.

- Standardized data analysis that integrates data types such as quaternions, which are recorded by these devices, avoiding intermediate filtering and pre-processing for subsequent reproduction and simulation of mobility. Such simulation is already offered by free software such as OpenSim, which is recognized by the scientific community.
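To make the occlusion problem discussed above concrete, the following Python sketch fills gaps in a marker trajectory by linear interpolation between the last visible frame before a gap and the first visible frame after it. This is a deliberately simple baseline, not the deep-learning approach proposed above; the representation of occluded frames as `None` and the function name `fill_gaps` are assumptions for illustration.

```python
def fill_gaps(track):
    """Fill occlusion gaps in a marker trajectory by linear interpolation.

    track: list of (x, y, z) tuples, with None for frames in which the
    marker was occluded (assumed representation). Gaps at the very start
    or end cannot be interpolated and are left as None.
    """
    filled = list(track)
    n = len(filled)
    i = 0
    while i < n:
        if filled[i] is None:
            start = i - 1  # index of the last visible frame before the gap
            end = i
            while end < n and filled[end] is None:
                end += 1   # index of the first visible frame after the gap
            if start >= 0 and end < n:
                a, b = filled[start], filled[end]
                span = end - start
                for k in range(i, end):
                    t = (k - start) / span
                    filled[k] = tuple(pa + t * (pb - pa) for pa, pb in zip(a, b))
            i = end
        else:
            i += 1
    return filled


if __name__ == "__main__":
    trajectory = [None, (0.0, 0.0, 0.0), None, (2.0, 4.0, 6.0), None]
    print(fill_gaps(trajectory))
```

Linear interpolation is only reasonable for short gaps; for longer occlusions during fast finger movements it will flatten the real motion, which is the motivation for model-based or learned reconstruction.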

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rana, M.; Mittal, V. Wearable Sensors for Real-Time Kinematics Analysis in Sports: A Review. IEEE Sens. J. 2021, 21, 1187–1207.

- Chen, S.; Lach, J.; Lo, B.; Yang, G.Z. Toward Pervasive Gait Analysis With Wearable Sensors: A Systematic Review. IEEE J. Biomed. Health Inform. 2016, 20, 1521–1537.

- Xu, Q.; Nwe, T.L.; Guan, C. Cluster-Based Analysis for Personalized Stress Evaluation Using Physiological Signals. IEEE J. Biomed. Health Inform. 2015, 19, 275–281.

- Valenza, G.; Nardelli, M.; Lanata, A.; Gentili, C.; Bertschy, G.; Paradiso, R.; Scilingo, E.P. Wearable Monitoring for Mood Recognition in Bipolar Disorder Based on History-Dependent Long-Term Heart Rate Variability Analysis. IEEE J. Biomed. Health Inform. 2014, 18, 1625–1635.

- Rutter, M.D.; Senore, C.; Bisschops, R.; Domagk, D.; Valori, R.; Kaminski, M.F.; Spada, C.; Bretthauer, M.; Bennett, C.; Bellisario, C.; et al. The European Society of Gastrointestinal Endoscopy Quality Improvement Initiative: Developing performance measures. Endoscopy 2015, 48, 81–89.

- Korman, L.Y.; Egorov, V.; Tsuryupa, S.; Corbin, B.; Anderson, M.; Sarvazyan, N.; Sarvazyan, A. Characterization of forces applied by endoscopists during colonoscopy by using a wireless colonoscopy force monitor. Gastrointest. Endosc. 2010, 71, 327–334.

- Spier, B.J.; Durkin, E.T.; Walker, A.J.; Foley, E.; Gaumnitz, E.A.; Pfau, P.R. Surgical resident’s training in colonoscopy: Numbers, competency, and perceptions. Surg. Endosc. 2010, 24, 2556–2561.

- Lee, S.H.; Chung, I.K.; Kim, S.J.; Kim, J.O.; Ko, B.M.; Hwangbo, Y.; Kim, W.H.; Park, D.H.; Lee, S.K.; Park, C.H.; et al. An adequate level of training for technical competence in screening and diagnostic colonoscopy: A prospective multicenter evaluation of the learning curve. Gastrointest. Endosc. 2008, 67, 683–689.

- Walsh, C.M. In-training gastrointestinal endoscopy competency assessment tools: Types of tools, validation and impact. Best Pract. Res. Clin. Gastroenterol. 2016, 30, 357–374.

- Svendsen, M.B. Using motion capture to assess colonoscopy experience level. World J. Gastrointest. Endosc. 2014, 6, 193.

- Ekkelenkamp, V.E. Patient comfort and quality in colonoscopy. World J. Gastroenterol. 2013, 19, 2355.

- Buschbacher, R. Overuse Syndromes Among Endoscopists. Endoscopy 1994, 26, 539–544.

- Liberman, A.S.; Shrier, I.; Gordon, P.H. Injuries sustained by colorectal surgeons performing colonoscopy. Surg. Endosc. 2005, 19, 1606–1609.

- Hansel, S.L.; Crowell, M.D.; Pardi, D.S.; Bouras, E.P.; DiBaise, J.K. Prevalence and Impact of Musculoskeletal Injury Among Endoscopists. J. Clin. Gastroenterol. 2009, 43, 399–404.

- Shergill, A.K.; Asundi, K.R.; Barr, A.; Shah, J.N.; Ryan, J.C.; McQuaid, K.R.; Rempel, D. Pinch force and forearm-muscle load during routine colonoscopy: A pilot study. Gastrointest. Endosc. 2009, 69, 142–146.

- Shah, S.G.; Thomas-Gibson, S.; Brooker, J.C.; Suzuki, N.; Williams, C.B.; Thapar, C.; Saunders, B.P. Use of video and magnetic endoscope imaging for rating competence at colonoscopy: Validation of a measurement tool. Gastrointest. Endosc. 2002, 56, 568–573.

- Battevi, N.; Menoni, O.; Cosentino, F.; Vitelli, N. Digestive endoscopy and risk of upper limb biomechanical overload. Med. Lav. 2009, 100, 171–177.

- Shergill, A.K.; Rempel, D.; Barr, A.; Lee, D.; Pereira, A.; Hsieh, C.M.; McQuaid, K.; Harris-Adamson, C. Biomechanical risk factors associated with distal upper extremity musculoskeletal disorders in endoscopists performing colonoscopy. Gastrointest. Endosc. 2021, 93, 704–711.

- Korman, L.Y.; Brandt, L.J.; Metz, D.C.; Haddad, N.G.; Benjamin, S.B.; Lazerow, S.K.; Miller, H.L.; Greenwald, D.A.; Desale, S.; Patel, M.; et al. Segmental increases in force application during colonoscope insertion: Quantitative analysis using force monitoring technology. Gastrointest. Endosc. 2012, 76, 867–872.

- Obstein, K.L.; Patil, V.D.; Jayender, J.; Estépar, R.S.J.; Spofford, I.S.; Lengyel, B.I.; Vosburgh, K.G.; Thompson, C.C. Evaluation of colonoscopy technical skill levels by use of an objective kinematic-based system. Gastrointest. Endosc. 2011, 73, 315–321.

- Browne, A.; O’Sullivan, L. A medical hand tool physical interaction evaluation approach for prototype testing using patient care simulators. Appl. Ergon. 2012, 43, 493–500.

- Arnold, M.; Ghosh, A.; Doherty, G.; Mulcahy, H.; Steele, C.; Patchett, S.; Lacey, G. Towards Automatic Direct Observation of Procedure and Skill (DOPS) in Colonoscopy. In Proceedings of the International Conference on Computer Vision Theory and Applications, Barcelona, Spain, 21–24 February 2013; pp. 48–53.

- Mohankumar, D.; Garner, H.; Ruff, K.; Ramirez, F.C.; Fleischer, D.; Wu, Q.; Santello, M. Characterization of right wrist posture during simulated colonoscopy: An application of kinematic analysis to the study of endoscopic maneuvers. Gastrointest. Endosc. 2014, 79, 480–489.

- Ratuapli, S.K.; Ruff, K.C.; Ramirez, F.C.; Wu, Q.; Mohankumar, D.; Santello, M.; Fleischer, D.E. Kinematic analysis of wrist motion during simulated colonoscopy in first-year gastroenterology fellows. Endosc. Int. Open 2015, 3, E621–E626.

- Nerup, N.; Preisler, L.; Svendsen, M.B.S.; Svendsen, L.B.; Konge, L. Assessment of colonoscopy by use of magnetic endoscopic imaging: Design and validation of an automated tool. Gastrointest. Endosc. 2015, 81, 548–554.

- Zheng, C.; Qian, Z.; Zhou, K.; Liu, H.; Lv, D.; Zhang, W. A novel sensor for real-time measurement of force and torque of colonoscope. In Proceedings of the IECON 2017—43rd Annual Conference of the IEEE Industrial Electronics Society, Beijing, China, 29 October–1 November 2017; pp. 3265–3269.

- Velden, E. Determining the Optimal Endoscopy Movements for Training and Assessing Psychomotor Skills. Master’s Thesis, University of Twente, Enschede, The Netherlands, 2018.

- Holden, M.S.; Wang, C.N.; MacNeil, K.; Church, B.; Hookey, L.; Fichtinger, G.; Ungi, T. Objective assessment of colonoscope manipulation skills in colonoscopy training. Int. J. Comput. Assist. Radiol. Surg. 2018, 13, 105–114.

- Levine, P.; Rahemi, H.; Nguyen, H.; Lee, H.; Krill, J.T.; Najafi, B.; Patel, K. 765 use of wearable sensors to assess stress response in endoscopy training. Gastrointest. Endosc. 2018, 87, AB110–AB111.

- Krill, J.T.; Levine, P.; Nguyen, H.; Zahiri, M.; Wang, C.; Najafi, B.; Patel, K. Mo1086 the use of wearable sensors to assess biomechanics of novice and experienced endoscopists on a colonoscopy simulator. Gastrointest. Endosc. 2019, 89, AB442–AB443.

- Vilmann, A.S.; Lachenmeier, C.; Svendsen, M.B.S.; Søndergaard, B.; Park, Y.S.; Svendsen, L.B.; Konge, L. Using computerized assessment in simulated colonoscopy: A validation study. Endosc. Int. Open 2020, 8, E783–E791.

- He, W.; Bryns, S.; Kroeker, K.; Basu, A.; Birch, D.; Zheng, B. Eye gaze of endoscopists during simulated colonoscopy. J. Robot. Surg. 2020, 14, 137–143.

- Fulton, M.J.; Prendergast, J.M.; DiTommaso, E.R.; Rentschler, M.E. Comparing Visual Odometry Systems in Actively Deforming Simulated Colon Environments. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4988–4995.

- Walsh, C.M.; Ling, S.C.; Khanna, N.; Cooper, M.A.; Grover, S.C.; May, G.; Walters, T.D.; Rabeneck, L.; Reznick, R.; Carnahan, H. Gastrointestinal Endoscopy Competency Assessment Tool: Development of a procedure-specific assessment tool for colonoscopy. Gastrointest. Endosc. 2014, 79, 798–807.

- Barton, J.R.; Corbett, S.; van der Vleuten, C.P.; Programme, E.B.C.S. The validity and reliability of a Direct Observation of Procedural Skills assessment tool: Assessing colonoscopic skills of senior endoscopists. Gastrointest. Endosc. 2012, 75, 591–597.

- O’Sullivan, G. Formative DOPS for Colonoscopy and Flexible Sigmoidoscopy. 2016. Available online: https://www.hse.ie/eng/about/who/acute-hospitals-division/clinical-programmes/endoscopy-programme/programme-documents/competency-model-for-skills-training-in-gi-endoscopy-in-ireland.pdf (accessed on 17 October 2022).

- Medina Gonzalez, C.; Benet Rodríguez, M.; Marco Martínez, F. El complejo articular de la muñeca: Aspectos anatofisiológicos y biomecánicos, características, clasificación y tratamiento de la fractura distal del radio. Medisur 2016, 14, 430–446.

- Jarus, T.; Poremba, R. Hand Function Evaluation: A Factor Analysis Study. Am. J. Occup. Ther. 1993, 47, 439–443.

- Carroll, D. A quantitative test of upper extremity function. J. Chronic Dis. 1965, 18, 479–491.

- Sollerman, C.; Ejeskär, A. Sollerman Hand Function Test: A Standardised Method and its Use in Tetraplegic Patients. Scand. J. Plast. Reconstr. Surg. Hand Surg. 1995, 29, 167–176.

- Wolf, S.L.; Catlin, P.A.; Ellis, M.; Archer, A.L.; Morgan, B.; Piacentino, A. Assessing Wolf Motor Function Test as Outcome Measure for Research in Patients After Stroke. Stroke 2001, 32, 1635–1639.

- Caeiro-Rodríguez, M.; Otero-González, I.; Mikic-Fonte, F.A.; Llamas-Nistal, M. A Systematic Review of Commercial Smart Gloves: Current Status and Applications. Sensors 2021, 21, 2667.

- Turk, M.; Athitsos, V. Gesture Recognition. In Computer Vision; Springer International Publishing: Cham, Switzerland, 2020; pp. 1–6.

- Ma, Z.; Ben-Tzvi, P. Design and Optimization of a Five-Finger Haptic Glove Mechanism. J. Mech. Robot. 2015, 7, 3–4.

- Stoppa, M.; Chiolerio, A. Wearable Electronics and Smart Textiles: A Critical Review. Sensors 2014, 14, 11957–11992.

- Dong, W.; Yang, L.; Fortino, G. Stretchable Human Machine Interface Based on Smart Glove Embedded With PDMS-CB Strain Sensors. IEEE Sens. J. 2020, 20, 8073–8081.

- Li, Y.; Zheng, C.; Liu, S.; Huang, L.; Fang, T.; Li, J.X.; Xu, F.; Li, F. Smart Glove Integrated with Tunable MWNTs/PDMS Fibers Made of a One-Step Extrusion Method for Finger Dexterity, Gesture, and Temperature Recognition. ACS Appl. Mater. Interfaces 2020, 12, 23764–23773.

- Rautaray, S.S.; Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 2015, 43, 1–54.

- Beddiar, D.R.; Nini, B.; Sabokrou, M.; Hadid, A. Vision-based human activity recognition: A survey. Multimed. Tools Appl. 2020, 79, 30509–30555.

- Cheng, H.; Yang, L.; Liu, Z. Survey on 3D Hand Gesture Recognition. IEEE Trans. Circuits Syst. Video Technol. 2016, 26, 1659–1673.

- Vuletic, T.; Duffy, A.; Hay, L.; McTeague, C.; Campbell, G.; Grealy, M. Systematic literature review of hand gestures used in human computer interaction interfaces. Int. J. Hum. Comput. Stud. 2019, 129, 74–94.

- Chen, W.; Yu, C.; Tu, C.; Lyu, Z.; Tang, J.; Ou, S.; Fu, Y.; Xue, Z. A Survey on Hand Pose Estimation with Wearable Sensors and Computer-Vision-Based Methods. Sensors 2020, 20, 1074.

- Alam, M.; Samad, M.D.; Vidyaratne, L.; Glandon, A.; Iftekharuddin, K.M. Survey on Deep Neural Networks in Speech and Vision Systems. Neurocomputing 2020, 417, 302–321.

- Sienicki, E.; Bansal, V.; Doucet, J.J. Small Intestine, Appendix, and Colorectal. In Surgical Critical Care and Emergency Surgery; Wiley: Hoboken, NJ, USA, 2022; pp. 403–414.

- Cai, S.; Jin, Z.; Zeng, P.; Yang, L.; Yan, Y.; Wang, Z.; Shen, Y.; Guo, S. Structural optimization and in vivo evaluation of a colorectal stent with anti-migration and anti-tumor properties. Acta Biomater. 2022. Available online: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9445364/pdf/fonc-12-972454.pdf (accessed on 17 October 2022).

- Hussain, S.; Zhou, Y.; Liu, R.; Pauli, E.; Haluck, R.; Fell, B.; Moore, J. Evaluation of Endoscope Control Assessment System. In Proceedings of the 2022 Design of Medical Devices Conference, Minneapolis, MN, USA, 17–19 April 2022; Volume 84815.

- Wangmar, J. To Participate or Not: Decision-Making and Experiences of Individuals Invited to Screening for Colorectal Cancer. Ph.D. Thesis, Karolinska Institutet, Stockholm, Sweden, 2022.

- Lin, M.R.; Lee, W.J.; Huang, S.M. Quaternion-based machine learning on topological quantum systems. arXiv 2022, arXiv:2209.14551.

- Rettig, O.; Müller, S.; Strand, M. A Marker Based Optical Measurement Procedure to Analyse Robot Arm Movements and Its Application to Improve Accuracy of Industrial Robots. In Proceedings of the International Conference on Intelligent Autonomous Systems, Zagreb, Croatia, 8 April 2022; Springer: Cham, Switzerland, 2022; pp. 551–562.

- Gholami, M.; Choobineh, A.; Abdoli-Eramaki, M.; Dehghan, A.; Karimi, M.T. Investigating the Effect of Keyboard Distance on the Posture and 3D Moments of Wrist and Elbow Joints among Males Using OpenSim. Appl. Bionics Biomech. 2022, 2022, 1–10.

- Siegel, J.H. Risk of Repetitive-Use Syndromes and Musculoskeletal Injuries. Tech. Gastrointest. Endosc. 2007, 9, 200–204.

- Shah, S.Z.; Rehman, S.T.; Khan, A.; Hussain, M.M.; Ali, M.; Sarwar, S.; Abid, S. Ergonomics of gastrointestinal endoscopies: Musculoskeletal injury among endoscopy physicians, nurses, and technicians. World J. Gastrointest. Endosc. 2022, 14, 142–152.

| Publication | Measurement Method/Devices | Entity | What Is Measured | Participants and Procedures |
|---|---|---|---|---|
| [16] Shah et al., 2002 | Observation (in video) | Upper limbs | Manipulation skills of endoscope controls. Endoscope insertion tube manipulation. Depth of insertion | 18 endoscopists; 22 colonoscopies |
| [17] Battevi et al., 2009 | Observation (in video) | Upper limbs | Frequency of technical actions per minute. The awkward posture of the hand continuously moving in a pinch of the endoscopic wheels. For the left arm, the static posture to bear instruments. Fine movements of left-hand fingers. Right elbow flexion–extension movements. Wrist postures in ulnar deviation of the right thumb. | 6 endoscopists; 4 gastroscopies; 4 colonoscopies |
| [15] Shergill et al., 2009; [18] Shergill et al., 2021 | Pressure: tactile pad; Force: surface EMG | Right thumb; Right and left forearm bilateral muscles | Right thumb pinch force; Forearm muscle activity | 6 endoscopists, 30 colonoscopies; 7 experienced, 9 trainees, total: 49 real col. |
| [6] Korman et al., 2010; [19] Korman et al., 2012 | Pressure: load cells | Endoscope tube | Push/pull force; Torque: clockwise and counterclockwise | 3 experienced endoscopists, 9 real col.; 12 endoscopists, 2–4 real col. |
| [20] Obstein et al., 2011 | Kinematics: electromagnetic 6D motion sensors | Endoscope tube | Path length, flex, velocity, acceleration, jerk, tip angulation, angular velocity, rotation and curvature of the endoscope. A kinematic score is calculated for the endoscopists | 9 novice endoscopists; 4 experienced ones; a physically simulated col. |
| [21] Browne and O’Sullivan, 2012 | Kinematics: electrogoniometers; Force: surface EMG | Wrist; Dominant forearm | Wrist posture (flexion/extension); Muscle activity | 10 novice endoscopists; 5 (physically simulated stomach wall biopsy) |
| [22] Arnold et al., 2013 | Kinematics: motion sensors | Endoscope tube | Longitudinal displacement; Circular displacement | 25 colonoscopies |
| [10] Svendsen and Hillingsø, 2014 | Kinematics: Microsoft Kinect™ | Body | Level of the left hand below the z-axis. Level of the right hand below the z-axis. Distance between hands. Distance shoulder-hand. Angulation of right elbow. Angulation of left elbow. Angulation of shoulders to the anus | 10 experienced; 11 novices; a physically simulated col. |
| [23] Mohankumar et al., 2014; [24] Ratuapli et al., 2015 | Kinematics: magnetic tracker (Polhemus Fastrak) | Wrist | Wrist posture (flexion/extension, abduction/adduction, pronation/supination); Wrist motion range (mid, center, extreme, and out) for each wrist DoF (flexion/extension, abduction/adduction, pronation/supination) | 12 endoscopists, 2 VR simulated col.; 5 first-year fellows, 4 VR simulated col. |
| [25] Nerup et al., 2015 | Magnetic endoscope imaging | Endoscope tube | Colonoscopy Progression Score (CoPS); A map of progression in colonoscopy | 11 novices; 10 experienced; a physically simulated col. |
| [26] Zheng et al., 2017 | Force: resistance-strain | Endoscope tube | Axial force: push and pull force; Torque: clockwise and counterclockwise | - |
| [27] Velden, 2018 | Kinematics: IMU trackers (Xsens); Kinematics: magnetic; Webcam and color band; External camera; Kinematics: vision (M. Kinect 2) | Body; Endoscope tube; End. wheel rotation; Endoscopic view | 23 body segments: pelvis, L5, L3, T12, T8, neck, head, right and left shoulder, upper arm, forearm, hand, upper leg, lower leg, foot, and toe; Translation and torque; Angle of the two endoscope wheels; Insertion, retroflexion and inspection, duodenal intubation, and retraction; Head | 9 endoscopists (3 beginners and 6 experts); 26 upper gastrointestinal procedures |
| [28] Holden et al., 2018 | Kinematics: electromagnetic 3D motion sensors | Hand, arm and forearm (wrist and elbow joints) | The total path length of hands, number of discrete hand motions, wrist motions (flexion/extension, abduction/adduction), elbow motions (flexion/extension, supination/pronation), number of times in extreme ranges of motion. | 22 novice, 8 experienced; a physically simulated col. (wooden bench-top model) |
| [29] Levine et al., 2018; [30] Krill et al., 2019 | Kinematics: motion trackers (Biostamp RC™) | Left and right forearms; Forehead | 3D linear accelerations; Angular velocity | 19 gastroenterologists, a VR simulated col.; 30 novices, 18 experienced |
| [31] Vilmann et al., 2020 | Kinematics: motion sensor | Endoscope tube | 3D data: travel length, tip progression, chase efficiency, shaft movement without tip progression, looping | 12 novices, 12 experienced; 2 physically simulated col. |
| [32] He et al., 2020 | Eye gaze tracking (Tobii X2-60) | Eye gaze | Scope disorientation. Eye gaze fixation. Eye gaze saccade | 20 novices, 6 experienced; VR simulated |
| [33] Fulton et al., 2021 | Kinematics: magnetic tracker (Polhemus Patriot) | Endoscope tube | Translation trajectory and rotation of the endoscope | Physically simulated col. |

| Name | Software Installation Time ¹ | Communication Type | Initial Calibration Time | Session Calibration Time ¹ | Calibration Time between Participants |
|---|---|---|---|---|---|
| Manus VR Prime II | 30 min | 1 dongle | <1 min | <2 min | <2 min |
| Mocap Pro Gloves | 30 min | 2 dongles | <1 min | <5 min | <2 min |
| Vero Vicon v2.2 | 50 min | 6 RJ45 wires | 90 min ² | <5 min | 0 min |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Otero-González, I.; Caeiro-Rodríguez, M.; Rodriguez-D’Jesus, A. Methods for Gastrointestinal Endoscopy Quantification: A Focus on Hands and Fingers Kinematics. Sensors 2022, 22, 9253. https://doi.org/10.3390/s22239253
