A Comparison of Methods for Determining Forest Composition from High-Spatial-Resolution Remotely Sensed Imagery

Abstract

1. Introduction

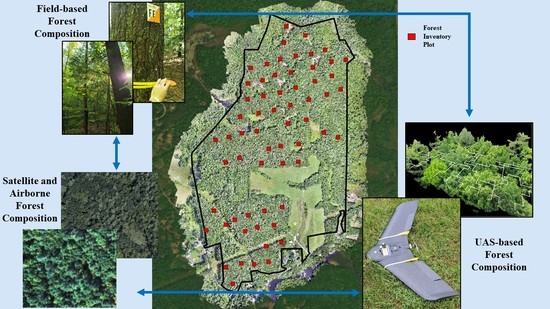

2. Materials and Methods

2.1. Study Areas

2.2. Field Reference Data

2.3. Remotely Sensed Imagery

2.4. Classification Scheme

- White pine: any forested land surface dominated by tree species whose overstory canopy is more than 70% eastern white pine by basal area per unit area.

- Hemlock: any forested land surface dominated by tree species whose overstory canopy is more than 70% eastern hemlock by basal area per unit area.

- Mixed conifer: any forested land surface dominated by tree species in which coniferous species other than white pine or eastern hemlock (or a combined mixture of these species) make up more than 66% of the overstory canopy by basal area per unit area.

- Mixed forests: any forested land surface dominated by tree species, comprising a heterogeneous mixture of deciduous and coniferous species, each making up more than 20% of basal area per unit area. Important species associations include eastern white pine and northern red oak (Quercus rubra), red maple (Acer rubrum), white ash (Fraxinus americana, L.), eastern hemlock, and birches.

- Red maple: any forested land surface dominated by tree species whose overstory canopy is more than 50% red maple by basal area per unit area.

- Oak: any forested land surface dominated by tree species whose overstory canopy is more than 50% white oak (Quercus alba, L.), black oak (Quercus velutina, Lam.), northern red oak, or a mixture of these by basal area per unit area.

- American beech: any forested land surface dominated by tree species whose overstory canopy is more than 25% American beech by basal area per unit area. When this condition is met, the class takes precedence over the other hardwood classes listed here.

- Mixed hardwoods: any forested land surface dominated by tree species in which deciduous species other than red maple, oak, or American beech (or a combined mixture of these species) make up more than 66% of the overstory canopy by basal area per unit area.

- Early successional: any forested land surface dominated by tree species whose overstory composition is highly distinct, including areas dominated by early successional species such as paper birch (Betula papyrifera, Marsh.), white ash (Fraxinus americana), or aspen (Populus spp.).
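The thresholds in this scheme can be read as an ordered set of rules. The sketch below is one possible reading, not the authors' procedure. The species-group codes, the order in which the rules are tested, the 50% cut-off for early successional plots (`es_threshold`), and the choice to count early successional species as deciduous are all assumptions made for illustration.

```python
# Hypothetical species-group codes (not defined in the text): WP = eastern white
# pine, EH = eastern hemlock, OC = other conifers, AB = American beech,
# RM = red maple, OAK = oaks, MH = other hardwoods, ES = early successional.
CONIFERS = ("WP", "EH", "OC")
DECIDUOUS = ("AB", "RM", "OAK", "MH", "ES")  # assumption: ES counts as deciduous


def classify_plot(pct, es_threshold=50.0):
    """Return a composition class for one plot.

    pct maps species-group codes to percent of overstory basal area.
    The rule order and es_threshold are assumptions, not taken from the text.
    """
    def share(code):
        return pct.get(code, 0.0)

    conifer = sum(share(code) for code in CONIFERS)
    deciduous = sum(share(code) for code in DECIDUOUS)

    if share("WP") > 70:
        return "White pine"
    if share("EH") > 70:
        return "Hemlock"
    if conifer > 66:
        return "Mixed conifer"
    if share("ES") > es_threshold:  # assumed cut-off; the scheme gives none
        return "Early successional"
    if share("AB") > 25:  # beech is tested before the other hardwood classes
        return "American beech"
    if share("RM") > 50:
        return "Red maple"
    if share("OAK") > 50:
        return "Oak"
    if deciduous > 66:
        return "Mixed hardwoods"
    if conifer > 20 and deciduous > 20:
        return "Mixed forest"
    return "Unclassified"


print(classify_plot({"WP": 87.5, "EH": 6.3, "AB": 6.3}))  # White pine
print(classify_plot({"AB": 30.0, "OAK": 55.0, "RM": 15.0}))  # American beech
```

The second call shows the beech precedence rule: the plot is more than 50% oak, yet it is labelled American beech because beech exceeds 25%.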

2.5. Forest Composition from Visual Interpretation

Accuracy/Uncertainty in Visual Interpretation

2.6. Forest Composition from Digital Classification

2.6.1. Image Segmentation and Tree Detection

2.6.2. Automated Classifications

3. Results

3.1. Accuracy/Uncertainty in Visual Interpretation

3.2. Image Segmentation and Tree Detection

3.3. Digital Classifications

4. Discussion

4.1. Analysis of Visual Interpretation Uncertainty

4.2. Analysis of Digital Classifications

4.3. Future Perspectives

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Classification Features

| Classification Features | | |
|---|---|---|
| Spectral | Greenness; mean of red band; mean of green band; mean of blue band; mean of NIR; HIS transformation | SD red band; SD green band; SD blue band; SD NIR band |
| Texture | GLCM homogeneity; GLCM contrast; GLCM dissimilarity; GLCM entropy | GLCM mean; GLCM correlation; GLDV mean; GLDV contrast |
| Geometric | Area (m²); border index; border length; length/width; roundness | Compactness; asymmetry; density; radius of longest ellipsoid; radius of shortest ellipsoid; shape index |

HIS = hue, intensity, saturation; GLCM = gray-level co-occurrence matrix; GLDV = gray-level difference vector; *NAIP imagery only; Greenness =
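To make the GLCM texture features concrete, the following sketch computes four of them for a tiny gray-level image. It uses the common Haralick-style definitions with a single pixel offset. The function names `glcm` and `texture_features` are hypothetical, and the software used in the study may define or normalise these measures differently.

```python
import math
from collections import Counter


def glcm(image, dx=1, dy=0):
    """Symmetric, normalised co-occurrence probabilities for one pixel offset."""
    counts = Counter()
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # count both directions (symmetric GLCM)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}


def texture_features(p):
    """Contrast, dissimilarity, homogeneity and entropy of a normalised GLCM."""
    return {
        "contrast": sum(v * (i - j) ** 2 for (i, j), v in p.items()),
        "dissimilarity": sum(v * abs(i - j) for (i, j), v in p.items()),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for (i, j), v in p.items()),
        "entropy": -sum(v * math.log(v) for v in p.values()),
    }


# A two-level image in which every horizontal neighbour pair differs by one level.
striped = [[0, 1], [1, 0]]
print(texture_features(glcm(striped)))
```

A perfectly uniform image gives zero contrast and a homogeneity of one, while the striped example gives a contrast of one and a homogeneity of 0.5. These measures describe exactly this kind of difference between smooth and rough canopy segments.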

Appendix A.2. Visual Interpretation Uncertainty

| Plot-Level Visual Interpretation Accuracy for High-Resolution Remotely Sensed Data Sources | | | |
|---|---|---|---|
| | Google Earth | NAIP | UAS |
| 9 Composition Classes | 24.51% | 25.25% | 41.67% |
| 4 Composition Classes | 39.95% | 39.46% | 51.96% |

Appendix A.3. Automated Classification

| | | Field (Reference) Data | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | WP | EH | OC | AB | RM | OAK | OH | ES | TOTAL | USER'S ACCURACY |
| UAS Imagery Using the CART Classifier | WP | 25 | 4 | 7 | 4 | 2 | 1 | 5 | 0 | 48 | 52.08% |
| | EH | 2 | 7 | 2 | 6 | 1 | 6 | 2 | 6 | 32 | 21.88% |
| | OC | 9 | 2 | 12 | 2 | 2 | 5 | 6 | 3 | 41 | 29.27% |
| | AB | 2 | 2 | 1 | 11 | 4 | 5 | 7 | 2 | 34 | 32.35% |
| | RM | 1 | 6 | 5 | 2 | 16 | 11 | 2 | 7 | 50 | 32.0% |
| | OAK | 2 | 5 | 8 | 4 | 7 | 30 | 12 | 4 | 72 | 41.67% |
| | OH | 4 | 5 | 1 | 3 | 6 | 9 | 2 | 4 | 35 | 5.7% |
| | ES | 1 | 4 | 2 | 3 | 6 | 1 | 4 | 7 | 28 | 25.0% |
| | TOTAL | 46 | 35 | 38 | 35 | 45 | 68 | 40 | 33 | 110/340 | |
| | PRODUCER'S ACCURACY | 54.35% | 20.0% | 31.58% | 31.43% | 35.56% | 44.12% | 5.0% | 21.21% | OVERALL ACCURACY 32.35% | |

| | | Field (Reference) Data | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | | WP | EH | OC | AB | RM | OAK | OH | ES | TOTAL | USER'S ACCURACY |
| UAS Imagery Using the RF Classifier | WP | 36 | 4 | 10 | 3 | 1 | 1 | 4 | 3 | 62 | 58.01% |
| | EH | 0 | 10 | 0 | 6 | 2 | 3 | 2 | 3 | 26 | 38.46% |
| | OC | 2 | 2 | 18 | 2 | 3 | 7 | 1 | 1 | 36 | 50.0% |
| | AB | 1 | 3 | 1 | 13 | 1 | 3 | 4 | 1 | 27 | 48.15% |
| | RM | 0 | 2 | 1 | 3 | 22 | 3 | 2 | 6 | 39 | 56.41% |
| | OAK | 1 | 9 | 5 | 5 | 10 | 48 | 13 | 8 | 99 | 48.48% |
| | OH | 6 | 4 | 2 | 1 | 3 | 2 | 12 | 2 | 32 | 37.5% |
| | ES | 0 | 1 | 1 | 2 | 3 | 1 | 2 | 9 | 19 | 47.37% |
| | TOTAL | 46 | 35 | 38 | 35 | 45 | 68 | 40 | 33 | 168/340 | |
| | PRODUCER'S ACCURACY | 78.26% | 28.57% | 47.37% | 37.14% | 48.89% | 70.59% | 30.0% | 27.27% | OVERALL ACCURACY 49.41% | |
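The summary figures in these error matrices follow from simple ratios. Overall accuracy is the diagonal sum over the grand total, user's accuracy divides each diagonal cell by its row (map) total, and producer's accuracy divides it by its column (reference) total. The sketch below recomputes them for the RF matrix. The function name `accuracy_report` is hypothetical, and the code assumes every row and column total is non-zero.

```python
def accuracy_report(matrix, labels):
    """matrix[i][j] = samples mapped as labels[i] whose reference class is labels[j]."""
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    users = {labels[i]: matrix[i][i] / sum(matrix[i]) for i in range(n)}
    producers = {
        labels[j]: matrix[j][j] / sum(matrix[i][j] for i in range(n))
        for j in range(n)
    }
    return {"overall": correct / total, "users": users, "producers": producers}


LABELS = ["WP", "EH", "OC", "AB", "RM", "OAK", "OH", "ES"]

# Rows are the RF map classes, columns the field reference classes.
RF_MATRIX = [
    [36, 4, 10, 3, 1, 1, 4, 3],
    [0, 10, 0, 6, 2, 3, 2, 3],
    [2, 2, 18, 2, 3, 7, 1, 1],
    [1, 3, 1, 13, 1, 3, 4, 1],
    [0, 2, 1, 3, 22, 3, 2, 6],
    [1, 9, 5, 5, 10, 48, 13, 8],
    [6, 4, 2, 1, 3, 2, 12, 2],
    [0, 1, 1, 2, 3, 1, 2, 9],
]

report = accuracy_report(RF_MATRIX, LABELS)
print(f"overall accuracy: {report['overall']:.2%}")
print(f"producer's accuracy, WP: {report['producers']['WP']:.2%}")
print(f"user's accuracy, OAK: {report['users']['OAK']:.2%}")
```

The same function can be applied to any of the other error matrices by swapping in its cell counts and class labels.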

| Visual Interpretation Sample (Inventory Plot) Sizes | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| WP | EH | MC | MF | OAK | RM | AB | MH | ES |
| 85 | 10 | 44 | 131 | 40 | 23 | 10 | 37 | 28 |
| Conifer | MF | Deciduous | ES | | | | | |
| 139 | 131 | 110 | 28 | | | | | |
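The four-class sample sizes are consistent with summing the nine-class plot counts by broad group. The sketch below shows that aggregation. The mapping in `GROUPS` is inferred from the totals in the table and is not stated explicitly in the text, so treat it as an assumption.

```python
# Plot counts per nine-class label, copied from the table above.
PLOTS_9 = {"WP": 85, "EH": 10, "MC": 44, "MF": 131, "OAK": 40,
           "RM": 23, "AB": 10, "MH": 37, "ES": 28}

# Assumed mapping from the nine classes to the four broad classes.
GROUPS = {"WP": "Conifer", "EH": "Conifer", "MC": "Conifer",
          "MF": "MF",
          "OAK": "Deciduous", "RM": "Deciduous", "AB": "Deciduous", "MH": "Deciduous",
          "ES": "ES"}


def collapse(counts, groups):
    """Sum per-class counts into their broad groups."""
    out = {}
    for label, n in counts.items():
        out[groups[label]] = out.get(groups[label], 0) + n
    return out


plots_4 = collapse(PLOTS_9, GROUPS)
print(plots_4)
```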

| | | Field (Reference) Data | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | WP | EH | MC | MF | AB | RM | OAK | MH | ES | TOTAL | USER'S ACCURACY |
| UAS Visual Interpretation | WP | 51 | 1 | 17 | 13 | 0 | 1 | 0 | 1 | 1 | 85 | 60.0% |
| | EH | 2 | 1 | 1 | 3 | 1 | 0 | 0 | 1 | 0 | 9 | 11.11% |
| | MC | 5 | 3 | 3 | 9 | 0 | 1 | 0 | 1 | 2 | 24 | 12.5% |
| | MF | 22 | 2 | 20 | 65 | 2 | 8 | 14 | 12 | 8 | 153 | 42.48% |
| | AB | 0 | 0 | 2 | 0 | 3 | 0 | 0 | 1 | 0 | 6 | 50.0% |
| | RM | 0 | 0 | 0 | 3 | 1 | 5 | 3 | 3 | 0 | 15 | 33.3% |
| | OAK | 2 | 0 | 1 | 10 | 1 | 1 | 17 | 10 | 4 | 46 | 36.96% |
| | MH | 2 | 3 | 0 | 25 | 2 | 5 | 6 | 6 | 3 | 52 | 11.54% |
| | ES | 1 | 0 | 0 | 3 | 0 | 2 | 0 | 2 | 10 | 18 | 55.56% |
| | TOTAL | 85 | 10 | 44 | 131 | 10 | 23 | 40 | 37 | 28 | 161/408 | |
| | PRODUCER'S ACCURACY | 60.0% | 10.0% | 6.8% | 49.62% | 40.0% | 21.74% | 42.50% | 16.22% | 35.71% | OVERALL ACCURACY 39.46% | |

Rows: UAS Visual Interpretation. Columns: Field (Reference) Data.

| | C | MF | D | ES | TOTAL | USER'S ACCURACY |
|---|---|---|---|---|---|---|
| C | 84 | 26 | 3 | 3 | 116 | 72.41% |
| MF | 44 | 65 | 38 | 8 | 155 | 41.94% |
| D | 10 | 38 | 63 | 7 | 118 | 53.39% |
| ES | 1 | 2 | 6 | 10 | 19 | 52.63% |
| TOTAL | 139 | 131 | 110 | 28 | 222/408 | |
| PRODUCER'S ACCURACY | 60.43% | 49.62% | 57.27% | 35.71% | OVERALL ACCURACY 54.44% | |

Unmanned Aerial Systems (UAS) Visual Interpretation Uncertainty: 9 Composition Classes

| Field Data | Field-Based Composition (%) | J-1 | J-2 | J-3 | H-1 | H-2 | H-3 |
|---|---|---|---|---|---|---|---|
| WP | 87.5% WP, 6.3% EH, 6.3% AB | WP | MC | MC | WP | MC | MC |
| WP | 75% WP, 12.5% RM, 12.5% MH | WP | MC | MC | WP | MC | MC |
| WP | 83.3% WP, 8.3% OAK, 8.3% ES | MF | MF | MF | WP | MF | MF |
| WP | 91.7% WP, 8.3% RM | WP | MC | WP | WP | MC | WP |
| EH | 75% EH, 25% WP | WP | WP | WP | MC | WP | WP |
| EH | 90% EH, 10% ES | EH | MF | MF | EH | EH | MC |
| EH | 85.7% EH, 14.3% ES | EH | WP | EH | MC | MF | EH |
| EH | 85.7% EH, 14.3% ES | MF | MC | EH | EH | EH | MC |
| MC | 41.7% EH, 41.7% WP, 8% RM, 8% MH | MF | MC | EH | MC | WP | MF |
| MC | 44.4% WP, 33.3% EH, 22.2% BB | EH | MC | EH | MC | MC | EH |
| MC | 69.23% WP, 15.4% ES, 7.7% MH, 7.7% OAK | WP | WP | MC | WP | WP | WP |
| MC | 45.5% WP, 27.3% EH, 27.3% OAK | MC | MF | WP | WP | MF | MC |
| MF | 60% EH, 40% ES | EH | MF | MF | EH | EH | MF |
| MF | 50% WP, 33.3% OAK, 8.3% MH, 8.3% RM | MH | MF | MF | MF | MF | MC |
| MF | 54.5% WP, 45.5% OAK | MF | WP | WP | MF | MC | MC |
| MF | 62.5% WP, 37.5% OAK | OAK | MF | MH | MF | OAK | MH |
| OAK | 81.2% OAK, 18.2% AB | OAK | MH | MH | OAK | OAK | MH |
| OAK | 66.7% OAK, 33.3% MH | MH | MH | MH | OAK | MF | EH |
| OAK | 60% OAK, 20% RM, 20% WP | OAK | MH | MH | MH | MF | OAK |
| OAK | 66.7% OAK, 33.3% EH | OAK | OAK | RM | OAK | OAK | OAK |
| RM | 100% RM | MH | RM | MH | RM | MH | EH |
| RM | 50% RM, 50% MH | ES | ES | ES | WP | ES | RM |
| RM | 77.8% RM, 11.1% EH, 11.1% OAK | MH | RM | RM | RM | RM | AB |
| RM | 60% RM, 20% WP, 20% OAK | MF | OAK | OAK | RM | RM | MH |
| AB | 44.4% OAK, 33% AB, 22.2% EH | AB | OAK | OAK | AB | MH | EH |
| AB | 33.3% MH, 25% AB, 16.7% EH, 16.7% RM, 16.7% ES | AB | ES | AB | AB | AB | MH |
| AB | 66.7% AB, 33.3% OAK | MF | MH | MH | AB | MH | RM |
| AB | 40% AB, 20% RM, 20% MH, 20% ES | RM | MH | OAK | AB | OAK | OAK |
| MH | 50% MH, 25% OAK, 25% EH | MH | MH | MF | OAK | MH | MF |
| MH | 33.3% MH, 22.2% OAK, 22.2% ES, 11.1% EH, 11.1% RM | OAK | MH | MH | OAK | MC | MH |
| MH | 37.5% RM, 25% MC, 25% ES, 12.5% OAK | OAK | MH | OAK | MF | MH | MF |
| MH | 50% RM, 16.7% EH, 16.7% ES, 16.7% MH | MF | AB | AB | MF | AB | MH |
| ES | 100% ES | ES | EH | ES | MF | EH | EH |
| ES | 100% ES | MH | ES | AB | MF | ES | ES |
| ES | 100% ES | OAK | MH | MH | MF | MH | MH |
| ES | 100% ES | ES | ES | ES | WP | MH | ES |

Unmanned Aerial Systems (UAS) Visual Interpretation Uncertainty: 4 Composition Classes

| Field Data | Field-Based Composition (%) | J-1 | J-2 | J-3 | H-1 | H-2 | H-3 |
|---|---|---|---|---|---|---|---|
| Coniferous | 87.5% WP, 6.3% EH, 6.3% AB | C | C | C | C | C | C |
| Coniferous | 75% WP, 12.5% RM, 12.5% MH | C | C | C | C | C | C |
| Coniferous | 83.3% WP, 8.3% OAK, 8.3% ES | MF | MF | MF | C | MF | MF |
| Coniferous | 91.7% WP, 8.3% RM | C | C | C | C | C | C |
| Coniferous | 75% EH, 25% WP | C | C | C | C | C | C |
| Coniferous | 90% EH, 10% ES | C | MF | MF | C | C | C |
| Coniferous | 85.7% EH, 14.3% ES | C | C | C | C | MF | C |
| Coniferous | 85.7% EH, 14.3% ES | MF | C | C | C | C | C |
| Coniferous | 41.7% EH, 41.7% WP, 8% RM, 8% MH | MF | C | C | C | C | MF |
| Coniferous | 44.4% WP, 33.3% EH, 22.2% BB | C | C | C | C | C | C |
| Coniferous | 69.23% WP, 15.4% ES, 7.7% MH, 7.7% OAK | C | C | C | C | C | C |
| Coniferous | 45.5% WP, 27.3% EH, 27.3% OAK | C | MF | C | C | MF | C |
| MF | 60% EH, 40% ES | C | MF | MF | C | C | MF |
| MF | 50% WP, 33.3% OAK, 8.3% MH, 8.3% RM | D | MF | MF | MF | MF | C |
| MF | 54.5% WP, 45.5% OAK | MF | C | C | MF | C | C |
| MF | 62.5% WP, 37.5% OAK | D | MF | D | MF | D | D |
| Deciduous | 81.2% OAK, 18.2% AB | D | D | D | D | D | D |
| Deciduous | 66.7% OAK, 33.3% MH | D | D | D | D | MF | C |
| Deciduous | 60% OAK, 20% RM, 20% WP | D | D | D | D | MF | D |
| Deciduous | 66.7% OAK, 33.3% EH | D | D | D | D | D | D |
| Deciduous | 100% RM | D | D | D | D | D | C |
| Deciduous | 50% RM, 50% MH | ES | ES | ES | C | ES | D |
| Deciduous | 77.8% RM, 11.1% EH, 11.1% OAK | D | D | D | D | D | D |
| Deciduous | 60% RM, 20% WP, 20% OAK | MF | D | D | D | D | D |
| Deciduous | 44.4% OAK, 33% AB, 22.2% EH | D | D | D | D | D | C |
| Deciduous | 33.3% MH, 25% AB, 16.7% EH, 16.7% RM, 16.7% ES | D | ES | D | D | D | D |
| Deciduous | 66.7% AB, 33.3% OAK | MF | D | D | D | D | D |
| Deciduous | 40% AB, 20% RM, 20% MH, 20% ES | D | D | D | D | D | D |
| Deciduous | 50% MH, 25% OAK, 25% EH | D | D | MF | D | D | MF |
| Deciduous | 33.3% MH, 22.2% OAK, 22.2% ES, 11.1% EH, 11.1% RM | D | D | D | D | C | D |
| Deciduous | 37.5% RM, 25% MC, 25% ES, 12.5% OAK | D | D | D | MF | D | MF |
| Deciduous | 50% RM, 16.7% EH, 16.7% ES, 16.7% MH | MF | D | D | MF | D | D |
| ES | 100% ES | ES | C | ES | MF | C | C |
| ES | 100% ES | D | ES | D | MF | ES | ES |
| ES | 100% ES | D | D | D | MF | D | D |
| ES | 100% ES | ES | ES | ES | C | D | ES |
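
One way to read the uncertainty table is to ask, for each plot, how many of the six interpretation columns (J-1 to H-3) match the field class and which label was chosen most often. The sketch below does this for five rows copied from the four-class table. The helper `summarize` and the two summary measures are illustrative assumptions, not a procedure described by the authors.

```python
from collections import Counter

# (field class, calls in columns J-1, J-2, J-3, H-1, H-2, H-3) for five plots
# copied from the four-class table; "Coniferous" and "Deciduous" are
# abbreviated to C and D to match the interpretation labels.
PLOTS = [
    ("C", ["C", "C", "C", "C", "C", "C"]),
    ("C", ["MF", "MF", "MF", "C", "MF", "MF"]),
    ("MF", ["MF", "C", "C", "MF", "C", "C"]),
    ("D", ["ES", "ES", "ES", "C", "ES", "D"]),
    ("ES", ["ES", "C", "ES", "MF", "C", "C"]),
]


def summarize(field_class, calls):
    """Share of calls matching the field class, plus the most frequent call."""
    matches = sum(call == field_class for call in calls)
    majority, votes = Counter(calls).most_common(1)[0]
    return {
        "agreement": matches / len(calls),
        "majority": majority,
        "majority_votes": votes,
    }


summaries = [summarize(field, calls) for field, calls in PLOTS]
for (field, _), summary in zip(PLOTS, summaries):
    print(field, summary)
```

A plot where the majority label differs from the field class, such as the second row here, shows the interpretations agreeing with one another while disagreeing with the reference data.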

| Correct Detection | Over-Detection (Commission Error) | Under-Detection (Omission Error) | Total |
|---|---|---|---|
| 85 | 132 | 14 | 231 |
| 36.80% | 57.14% | 6.1% | Overall Detection Accuracy: 93.9% |
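
The percentages in the detection table follow from the three counts. The sketch below recomputes them, assuming that overall detection accuracy means the share of reference trees that were detected at all (correct plus over-detected). The table does not state that definition, but it is consistent with the reported 93.9%.

```python
# Counts copied from the detection table above.
correct, over_detected, under_detected = 85, 132, 14
total = correct + over_detected + under_detected


def percent(count, denominator):
    """Express a count as a percentage of the denominator."""
    return 100.0 * count / denominator


rates = {
    "correct": percent(correct, total),
    "commission": percent(over_detected, total),
    "omission": percent(under_detected, total),
}

# Assumed definition: every tree that was not missed counts as detected.
overall_detection = percent(correct + over_detected, total)

print(total, {name: round(value, 2) for name, value in rates.items()})
print(round(overall_detection, 1))
```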

Individual Tree Reference Data Sample Sizes

| | WP | EH | OC | ES | OH | OAK | RM | AB |
|---|---|---|---|---|---|---|---|---|
| NAIP | 97 | 76 | 90 | 79 | 77 | 135 | 95 | 77 |
| UAS | 102 | 77 | 85 | 74 | 88 | 152 | 97 | 77 |

Rows: UAS Imagery Using the SVM Classifier. Columns: Field (Reference) Data.

| | WP | EH | OC | AB | RM | OAK | OH | ES | TOTAL | USER'S ACCURACY |
|---|---|---|---|---|---|---|---|---|---|---|
| WP | 36 | 4 | 6 | 0 | 1 | 1 | 7 | 2 | 57 | 63.16% |
| EH | 2 | 16 | 3 | 13 | 4 | 6 | 3 | 7 | 54 | 29.63% |
| OC | 5 | 1 | 20 | 1 | 2 | 2 | 3 | 2 | 36 | 55.56% |
| AB | 0 | 6 | 2 | 14 | 2 | 2 | 3 | 3 | 32 | 43.75% |
| RM | 1 | 2 | 0 | 1 | 18 | 2 | 4 | 3 | 31 | 58.06% |
| OAK | 0 | 4 | 2 | 4 | 7 | 49 | 13 | 9 | 88 | 55.68% |
| OH | 2 | 1 | 4 | 0 | 6 | 4 | 6 | 1 | 24 | 25.0% |
| ES | 0 | 1 | 1 | 2 | 4 | 2 | 1 | 6 | 17 | 35.29% |
| TOTAL | 46 | 35 | 38 | 35 | 44 | 68 | 40 | 33 | 165/339 | |
| PRODUCER'S ACCURACY | 78.26% | 45.71% | 52.63% | 40.0% | 40.91% | 72.06% | 15.0% | 18.18% | OVERALL ACCURACY 48.67% | |
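
The accuracy figures in an error matrix come from its row totals, column totals, and diagonal. The sketch below recomputes them for the SVM matrix above, with rows as the classified labels and columns as the field reference. The function name `accuracy_report` is illustrative, and the code is a plain restatement of the standard definitions rather than the authors' own processing.

```python
CLASSES = ["WP", "EH", "OC", "AB", "RM", "OAK", "OH", "ES"]

# Cell counts copied from the SVM error matrix above
# (rows: classified label, columns: field reference label).
MATRIX = [
    [36, 4, 6, 0, 1, 1, 7, 2],
    [2, 16, 3, 13, 4, 6, 3, 7],
    [5, 1, 20, 1, 2, 2, 3, 2],
    [0, 6, 2, 14, 2, 2, 3, 3],
    [1, 2, 0, 1, 18, 2, 4, 3],
    [0, 4, 2, 4, 7, 49, 13, 9],
    [2, 1, 4, 0, 6, 4, 6, 1],
    [0, 1, 1, 2, 4, 2, 1, 6],
]


def accuracy_report(matrix):
    """Return (user's, producer's, overall) accuracy as fractions."""
    size = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(size)) for j in range(size)]
    diagonal = [matrix[i][i] for i in range(size)]
    # User's accuracy: correct / everything mapped to that class (row total).
    users = [d / t for d, t in zip(diagonal, row_totals)]
    # Producer's accuracy: correct / everything truly in that class (column total).
    producers = [d / t for d, t in zip(diagonal, col_totals)]
    overall = sum(diagonal) / sum(row_totals)
    return users, producers, overall


users, producers, overall = accuracy_report(MATRIX)
for name, u, p in zip(CLASSES, users, producers):
    print(f"{name}: user's {u:.2%}, producer's {p:.2%}")
print(f"overall {overall:.2%}")
```

The same function applies to any square error matrix in this section, provided rows and columns are listed in the same class order.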

Rows: UAS Imagery Using the RF Classifier. Columns: Field (Reference) Data.

| | C | D | ES | TOTAL | USER'S ACCURACY |
|---|---|---|---|---|---|
| C | 86 | 18 | 18 | 122 | 70.49% |
| D | 27 | 126 | 34 | 187 | 67.38% |
| ES | 6 | 8 | 11 | 25 | 44.0% |
| TOTAL | 119 | 152 | 63 | 229/334 | |
| PRODUCER'S ACCURACY | 72.27% | 82.89% | 17.46% | OVERALL ACCURACY 68.56% | |

Individual Tree Classification Accuracies using the RF classifier, UAS Imagery, and 8 and 4 Composition Classes.

| | 55% Training/45% Testing | 55% Training/45% Testing with Feature Reduction | 65% Training/35% Testing | Out-of-Bag (OOB) Validation |
|---|---|---|---|---|
| Minimum Sample Size | 30 per Class | 30 per Class | 26 per Class | Permutations of 3% from the total |
| Average Accuracy 8 Classes | 45.84% | 46.67% | 43.07% | 45.84% |
| Average Accuracy 4 Classes | 64.01% | 70.48% | 65.36% | 65.51% |
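
The split percentages determine how many reference trees per class are held out for testing. The sketch below applies a per-class split to the UAS sample sizes from the individual tree reference table, assuming simple rounding to the nearest tree. The rounding rule and the name `holdout_counts` are assumptions, not details given in the text.

```python
# UAS individual-tree reference sample sizes, copied from the table above.
uas_samples = {
    "WP": 102, "EH": 77, "OC": 85, "ES": 74,
    "OH": 88, "OAK": 152, "RM": 97, "AB": 77,
}


def holdout_counts(samples, test_fraction):
    """Per-class number of testing samples, rounded to the nearest tree (assumed rule)."""
    return {name: round(n * test_fraction) for name, n in samples.items()}


split_45 = holdout_counts(uas_samples, 0.45)
split_35 = holdout_counts(uas_samples, 0.35)

print(split_45, sum(split_45.values()))
print(min(split_35.values()))
```

With a 45% holdout these counts sum to 339 trees and coincide with the column totals of the SVM error matrix. With a 35% holdout the smallest class (ES) keeps 26 testing trees, in line with the minimum sample size listed for the 65%/35% split.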

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fraser, B.T.; Congalton, R.G. A Comparison of Methods for Determining Forest Composition from High-Spatial-Resolution Remotely Sensed Imagery. Forests 2021, 12, 1290. https://doi.org/10.3390/f12091290