Due Diligence for Deforestation-Free Supply Chains with Copernicus Sentinel-2 Imagery and Machine Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Areas

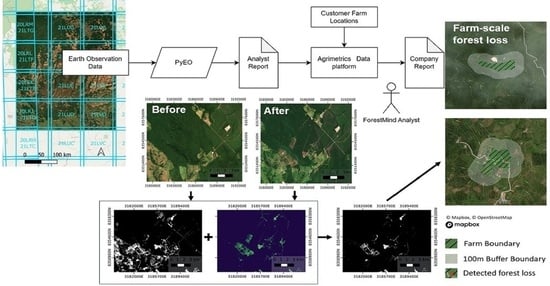

2.2. Software Development and Image Analysis

2.3. Model Training

2.4. Pilot Operational Application

3. Results

3.1. Median Image Composite Creation

3.2. Near-Real-Time Image Query and Download Functionality

3.3. Random Forest Classifications

3.4. Post-Classification Change Detection

3.5. dNDVI Change Thresholding to Create Hybrid Change Detections

3.6. Time-Series Analysis and Aggregation into the Analyst Report

- Layer 1 ‘First_Change_Date’: The acquisition date of the Sentinel-2 image in which a change of interest (i.e., forest loss) was first detected. This is expressed as the number of days since 1 January 2000;

- Layer 2 ‘Total_Change_Detection_Count’: The number of times a change was detected for each pixel since the First_Change_Date;

- Layer 3 ‘Total_NoChange_Detection_Count’: The number of times no change was detected for each pixel since the First_Change_Date;

- Layer 4 ‘Total_Classification_Count’: The total number of times when a land cover class was identified for each pixel, taking into account partial satellite orbit coverage and cloud cover;

- Layer 5 ‘Percentage_Change_Detection’: The computed ratio of Layer 2 to Layer 4 expressed as a percentage. This indicates the consistency of a detected change once it has first been detected and thus the confidence that it is a permanent change rather than, for example, seasonal agricultural variation or periodic flooding;

- Layer 6 ‘Change_Detection_Decision’: A computed binary layer set to 1 where a pixel passes the change detection threshold, so that regions of significant change can be rapidly identified across the large spatial extent of a tile. Currently, the decision criterion is ((Layer 2 >= 5) and (Layer 5 >= 50)), i.e., at least five land cover changes of interest were detected and the change was present in at least 50% of the images in which the pixel was classified;

- Layer 7 ‘Change_Detection_Date_Mask’: The First_Change_Date values retained only where the change decision criterion is met, computed as the product of Layer 1 and Layer 6. This makes regions where land use change is expanding over time easier to identify across the large spatial extent of a tile.
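The seven layers above can be derived from a per-pixel time series. The NumPy sketch below is illustrative only and is not taken from the pyeo package. The function name `aggregate_report` and its arguments are hypothetical. It assumes that per-image forest loss detections (`change`) and a mask of pixels that received a land cover class (`valid`, False outside the orbit footprint or under cloud) are already stacked as boolean arrays of shape (time, rows, cols). It also assumes that the first detection itself counts towards Layer 2, and that 1 January 2000 is day 0.

```python
import numpy as np

EPOCH = np.datetime64("2000-01-01", "D")  # Layer 1 counts days from here (assumed day 0)


def aggregate_report(change, valid, dates, min_changes=5, min_pct=50.0):
    """Hypothetical sketch of the seven analyst-report layers.

    change: bool (T, H, W), True where forest loss was detected in image t
    valid:  bool (T, H, W), True where a land cover class was assigned in image t
    dates:  acquisition dates, length T, sorted ascending
    """
    change = change & valid  # only count detections on classified pixels
    days = (np.asarray(dates, dtype="datetime64[D]") - EPOCH).astype(np.int64)
    ever = change.any(axis=0)
    first = change.argmax(axis=0)  # index of the first detection (0 if none)
    layer1 = np.where(ever, days[first], 0)

    # Images acquired on or after each pixel's first detection
    t = np.arange(len(days))[:, None, None]
    since = (t >= first) & ever

    layer2 = (change & since).sum(axis=0)
    layer3 = (valid & ~change & since).sum(axis=0)
    layer4 = valid.sum(axis=0)
    # Percentage of classified images showing change; 0 where never classified
    layer5 = np.divide(100.0 * layer2, layer4,
                       out=np.zeros(layer2.shape), where=layer4 > 0)
    layer6 = ((layer2 >= min_changes) & (layer5 >= min_pct)).astype(np.uint8)
    layer7 = layer1 * layer6
    return {
        "First_Change_Date": layer1,
        "Total_Change_Detection_Count": layer2,
        "Total_NoChange_Detection_Count": layer3,
        "Total_Classification_Count": layer4,
        "Percentage_Change_Detection": layer5,
        "Change_Detection_Decision": layer6,
        "Change_Detection_Date_Mask": layer7,
    }
```

Layer 4 counts every classified image, not only those after the first detection. As a result, Layer 5 as defined here is lower for changes that first appear late in the time series. The sketch follows the layer definitions as written.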

3.7. Validation of the Forest Loss Detections

3.8. Independent Validation of Farm-Scale Change Detection Accuracy

- PyEO Forest Loss—this study, University of Leicester (7 February 2019–22 February 2021);

- Global Forest Loss—University of Maryland (2017–2020).

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Class Number | Description |
|---|---|
| 1 | Primary forest |
| 2 | Plantation forest |
| 3 | Bare soil |
| 4 | Crops |
| 5 | Grassland |
| 6 | Open water |
| 7 | Burn scar |
| 8 | Cloud |
| 9 | Cloud shadow |
| 10 | Haze |
| 11 | Sparse woodland |
| 12 | Dense woodland |
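The class scheme above can feed a post-classification change rule. Below is a minimal sketch of a hybrid rule in the spirit of Sections 3.4 and 3.5, which combines a class transition with a dNDVI threshold. The grouping of classes into forest and non-forest and the -0.2 dNDVI threshold are illustrative assumptions, not values taken from the study. Cloud, cloud shadow and haze (classes 8 to 10) are left out of both groups, so they never trigger a detection. Open water (6) and sparse woodland (11) are also excluded as ambiguous. The function name is hypothetical.

```python
import numpy as np

# Illustrative class groupings (assumed, not from the study)
FOREST = [1, 2, 12]          # primary forest, plantation forest, dense woodland
NON_FOREST = [3, 4, 5, 7]    # bare soil, crops, grassland, burn scar
# Classes 6 (water), 8-10 (cloud, shadow, haze) and 11 (sparse woodland)
# belong to neither group, so they can never produce a detection.


def hybrid_forest_loss(class_before, class_after, ndvi_before, ndvi_after,
                       dndvi_threshold=-0.2):
    """Flag pixels whose class moves from forest to non-forest AND whose
    NDVI falls by more than |dndvi_threshold| between the two dates."""
    transition = np.isin(class_before, FOREST) & np.isin(class_after, NON_FOREST)
    dndvi = np.asarray(ndvi_after, dtype=float) - np.asarray(ndvi_before, dtype=float)
    return transition & (dndvi < dndvi_threshold)
```

Requiring both conditions suppresses class-map flips that are not accompanied by a real loss of vegetation, at the cost of missing transitions with only a small NDVI drop.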

| Granule ID |
|---|
| T21LTD |
| T21LTE |
| T21LTF |
| T21LTG |
| T21LUD |
| T21LUE |
| T21LUF |
| T21LUG |
| T21LVD |
| T21LVE |
| T21LVF |
| T21LVG |
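The twelve granule IDs above follow the Military Grid Reference System (MGRS) tiling used for Sentinel-2 products. Each ID is a "T" prefix, a two-digit UTM zone, a latitude-band letter and a two-letter 100 km square. A small parser (the helper names are hypothetical) confirms that all twelve tiles fall in grid zone 21L, which spans 60°W to 54°W and 16°S to 8°S. The bounds function ignores the irregular zones around Norway and Svalbard.

```python
import re

# "T" + UTM zone (01-60) + latitude band (C-X, no I/O) + 100 km square ID
_TILE = re.compile(r"^T(\d{2})([C-HJ-NP-X])([A-HJ-NP-Z][A-HJ-NP-V])$")
_BANDS = "CDEFGHJKLMNPQRSTUVWX"  # 8-degree latitude bands from 80S northwards


def parse_granule(tile_id):
    """Split a Sentinel-2 tile ID into (zone, latitude band, 100 km square)."""
    m = _TILE.match(tile_id)
    if m is None:
        raise ValueError(f"not a Sentinel-2 tile ID: {tile_id!r}")
    zone, band, square = m.groups()
    return int(zone), band, square


def grid_zone_bounds(zone, band):
    """(west, east, south, north) in degrees for a UTM grid zone."""
    west = -180 + 6 * (zone - 1)
    south = -80 + 8 * _BANDS.index(band)
    north = 84 if band == "X" else south + 8  # band X is 12 degrees tall
    return west, west + 6, south, north


TILES = ["T21LTD", "T21LTE", "T21LTF", "T21LTG", "T21LUD", "T21LUE",
         "T21LUF", "T21LUG", "T21LVD", "T21LVE", "T21LVF", "T21LVG"]
print({parse_granule(t)[:2] for t in TILES}, grid_zone_bounds(21, "L"))
```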

| Predicted Class ↓ / Reference Class → | 1 | 3 | 4 | 5 | 11 | 12 | UA |
|---|---|---|---|---|---|---|---|
| 1 | 84,955 | 2 | 210 | 56 | 4,650 | 9 | 94.5% |
| 3 | 0 | 87,159 | 1,092 | 109 | 1,017 | 524 | 96.9% |
| 4 | 73 | 1,332 | 85,147 | 1,977 | 611 | 1,250 | 94.2% |
| 5 | 53 | 93 | 2,000 | 11,005 | 959 | 272 | 76.5% |
| 11 | 4,730 | 650 | 374 | 545 | 84,214 | 87 | 93.0% |
| 12 | 53 | 871 | 2,958 | 534 | 508 | 4,110 | 45.5% |
| PA | 94.5% | 96.7% | 92.8% | 77.4% | 91.6% | 65.7% | OA = 92.8% |
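User's accuracy (UA) is each diagonal count divided by its row total. Producer's accuracy (PA) is each diagonal count divided by its column total. Overall accuracy (OA) is the diagonal sum divided by the grand total. The sketch below recomputes these from the counts in the table, and the results agree with the reported UA, PA and OA to within 0.1 percentage points. The helper name is hypothetical.

```python
import numpy as np

CLASSES = [1, 3, 4, 5, 11, 12]
# Rows: predicted class; columns: reference class (counts from the table above)
CM = np.array([
    [84955,     2,   210,    56,  4650,    9],
    [    0, 87159,  1092,   109,  1017,  524],
    [   73,  1332, 85147,  1977,   611, 1250],
    [   53,    93,  2000, 11005,   959,  272],
    [ 4730,   650,   374,   545, 84214,   87],
    [   53,   871,  2958,   534,   508, 4110],
])


def accuracy_summary(cm):
    """User's accuracy per row, producer's accuracy per column, overall accuracy."""
    cm = np.asarray(cm, dtype=float)
    correct = np.diag(cm)
    ua = correct / cm.sum(axis=1)
    pa = correct / cm.sum(axis=0)
    oa = correct.sum() / cm.sum()
    return ua, pa, oa


ua, pa, oa = accuracy_summary(CM)
for c, u, p in zip(CLASSES, ua, pa):
    print(f"class {c:>2}: UA {100 * u:5.1f}%  PA {100 * p:5.1f}%")
print(f"OA {100 * oa:.1f}%")
```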

| Class | Band | Min | Max | Mean | Stdev |
|---|---|---|---|---|---|
| 1 | 2 | 74 | 668 | 207.49 | 31.83 |
| 1 | 3 | 130 | 1130 | 398.40 | 57.63 |
| 1 | 4 | 86 | 1352 | 229.00 | 49.20 |
| 1 | 8 | 912 | 5144 | 2726.92 | 371.26 |
| 3 | 2 | 211 | 2113 | 477.09 | 216.21 |
| 3 | 3 | 391 | 2655 | 741.56 | 279.71 |
| 3 | 4 | 323 | 3348 | 1034.24 | 453.30 |
| 3 | 8 | 1160 | 4087 | 2246.23 | 632.23 |
| 4 | 2 | 203 | 1872 | 415.48 | 97.21 |
| 4 | 3 | 411 | 2334 | 724.29 | 145.90 |
| 4 | 4 | 267 | 2837 | 621.16 | 241.67 |
| 4 | 8 | 1723 | 5684 | 3644.23 | 646.96 |
| 5 | 2 | 184 | 771 | 429.65 | 106.77 |
| 5 | 3 | 340 | 1129 | 771.52 | 154.28 |
| 5 | 4 | 214 | 1393 | 746.34 | 237.52 |
| 5 | 8 | 1742 | 4003 | 2813.10 | 311.96 |
| 11 | 2 | 146 | 626 | 300.66 | 55.23 |
| 11 | 3 | 298 | 914 | 536.95 | 62.15 |
| 11 | 4 | 176 | 1214 | 430.51 | 145.68 |
| 11 | 8 | 1530 | 4324 | 2468.49 | 289.34 |
| 12 | 2 | 189 | 906 | 466.51 | 99.30 |
| 12 | 3 | 361 | 1336 | 774.15 | 133.53 |
| 12 | 4 | 225 | 1798 | 815.29 | 240.96 |
| 12 | 8 | 1320 | 4628 | 2897.72 | 362.87 |
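Bands 2, 3, 4 and 8 are the Sentinel-2 blue, green, red and near-infrared (NIR) bands. The class means above therefore give a rough idea of the NDVI contrast that dNDVI thresholding (Section 3.5) relies on. The sketch computes NDVI from the class-mean red and NIR values. The NDVI of mean reflectances is not the same as the mean NDVI of the training pixels, so these numbers are indicative only.

```python
# Mean reflectance of each class in band 4 (red) and band 8 (NIR), from the table above
CLASS_MEANS = {
    1: (229.00, 2726.92),    # primary forest
    3: (1034.24, 2246.23),   # bare soil
    4: (621.16, 3644.23),    # crops
    5: (746.34, 2813.10),    # grassland
    11: (430.51, 2468.49),   # sparse woodland
    12: (815.29, 2897.72),   # dense woodland
}


def ndvi(red, nir):
    """Normalised difference vegetation index; a common scale factor cancels."""
    return (nir - red) / (nir + red)


class_ndvi = {c: ndvi(red, nir) for c, (red, nir) in CLASS_MEANS.items()}
dndvi_forest_to_soil = class_ndvi[3] - class_ndvi[1]
for c, v in class_ndvi.items():
    print(f"class {c:>2}: NDVI of mean reflectance = {v:.3f}")
print(f"primary forest -> bare soil dNDVI = {dndvi_forest_to_soil:.3f}")
```

On these means, primary forest gives an NDVI of about 0.85 and bare soil about 0.37, a dNDVI of roughly -0.48. Crops (about 0.71) and sparse woodland (about 0.70) are almost indistinguishable, which suggests that an NDVI difference alone may miss some woodland-to-cropland conversions.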

| Guatemala (OA = 86.3%, κ = 0.71) | No Change | Change | User Accuracy |
|---|---|---|---|
| No Change | 193 | 7 | 96.5% |
| Change | 48 | 152 | 76.0% |
| Producer Accuracy | 80.1% | 95.6% | |

| Mato Grosso, Brazil (OA = 85.5%, κ = 0.72) | No Change | Change | User Accuracy |
|---|---|---|---|
| No Change | 187 | 13 | 93.5% |
| Change | 45 | 155 | 77.5% |
| Producer Accuracy | 80.6% | 92.3% | |
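In these validation matrices, User Accuracy is a row-wise ratio, which implies map classes in rows and reference classes in columns. On that reading, OA, Cohen's kappa and the commission and omission rates (1 - UA and 1 - PA) follow directly from the counts. Computed this way, unweighted kappa is 0.725 for Guatemala and 0.71 for Mato Grosso. These differ slightly from the κ values in the table headers (0.71 and 0.72), so the reported values may have been derived differently. The helper name is hypothetical.

```python
import numpy as np


def agreement(cm):
    """OA, Cohen's kappa and per-class commission/omission rates for a
    confusion matrix with map classes in rows and reference classes in columns."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    rows, cols = cm.sum(axis=1), cm.sum(axis=0)
    p_o = diag.sum() / n                 # observed agreement (overall accuracy)
    p_e = (rows * cols).sum() / n ** 2   # agreement expected by chance
    kappa = (p_o - p_e) / (1 - p_e)
    commission = 1 - diag / rows         # 1 - user's accuracy
    omission = 1 - diag / cols           # 1 - producer's accuracy
    return p_o, kappa, commission, omission


# Classes ordered [No Change, Change]; counts from the tables above
guatemala = [[193, 7], [48, 152]]
mato_grosso = [[187, 13], [45, 155]]
for name, cm in [("Guatemala", guatemala), ("Mato Grosso", mato_grosso)]:
    oa, k, com, om = agreement(cm)
    print(f"{name}: OA {oa:.3f}, kappa {k:.3f}, "
          f"change commission {com[1]:.3f}, change omission {om[1]:.3f}")
```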

| Period | Total Number of Farms | Deforestation-Free Farms | Farms with Deforestation < 0.1 ha | Farms with Deforestation > 0.1 ha |
|---|---|---|---|---|
| Jan 2020–Jan 2022 (Baseline Update) | 263 | 155 | 94 | 14 |
| Jan 2022–Aug 2022 | 263 | 149 | 113 | 1 |
| Jan 2020–Aug 2022 (Whole Monitoring Period) | 263 | 105 | 136 | 22 |
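The farm categories in this table depend on the area of detected forest loss inside each farm boundary. The minimal sketch below makes several assumptions. The boundary is taken to be rasterised onto the same 10 m grid as the forest loss layer, so each pixel is 0.01 ha and 0.1 ha equals 10 pixels. A farm with exactly 0.1 ha of loss is placed in the upper category. The function name and arguments are hypothetical.

```python
import numpy as np

PIXEL_AREA_M2 = 10 * 10   # assumed 10 m Sentinel-2 pixels (0.01 ha each)
THRESHOLD_M2 = 1_000      # 0.1 ha


def farm_category(loss_mask, farm_mask):
    """Assign a farm to one of the three table categories.

    loss_mask: bool raster of confirmed forest loss for the period
    farm_mask: bool raster of the same shape, True inside the farm boundary
    """
    # Integer square metres avoid floating-point ambiguity at the 0.1 ha boundary
    loss_m2 = int(np.count_nonzero(loss_mask & farm_mask)) * PIXEL_AREA_M2
    if loss_m2 == 0:
        return "deforestation-free"
    if loss_m2 < THRESHOLD_M2:
        return "deforestation < 0.1 ha"
    return "deforestation >= 0.1 ha"  # exactly 0.1 ha is placed here (assumption)
```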

| Dataset | Overall Accuracy | Rate of Commission | Rate of Omission |
|---|---|---|---|
| PyEO forest loss (soy, Brazil) | 83% | 18% | 1% |
| PyEO forest loss (coffee, Guatemala) | 80% | 21% | 3% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Reading, I.; Bika, K.; Drakesmith, T.; McNeill, C.; Cheesbrough, S.; Byrne, J.; Balzter, H. Due Diligence for Deforestation-Free Supply Chains with Copernicus Sentinel-2 Imagery and Machine Learning. Forests 2024, 15, 617. https://doi.org/10.3390/f15040617
