A Review on Clustering Techniques: Creating Better User Experience for Online Roadshow

Abstract

:1. Introduction

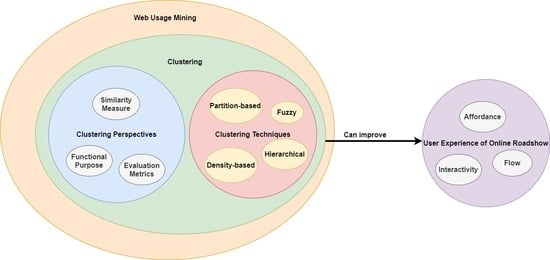

- In [10,11,12,13], the clustering techniques are generally discussed without focusing on any specific application, while our paper specifically discusses the clustering techniques used in web usage mining. In [10], greater focuses are on time series clustering, similarity measures, and evaluation metrics. In [11,13], both papers exclude the review of fuzzy-based clustering techniques.

2. Clustering Techniques

2.1. Partition-Based Clustering

- Step 1.

- Randomly select centroids for each cluster.

- Step 2.

- Calculate the distance of all data points to the centroids and assign them to the closest cluster.

- Step 3.

- Get the new centroids of each cluster by taking the mean of all data points in the cluster.

- Step 4.

- Repeat steps 2 and 3 until all the points converged and the centroids stop moving.

- Step 1.

- Randomly select k random points out of the data points as medoids.

- Step 2.

- Calculate the distance of all data points to the medoids and assign them to the closest cluster.

- Step 3.

- Randomly select one non-medoid point and recalculate the cost.

- Step 4.

- Swap the medoid with the non-medoid point as the new medoid point if the swap reduces the cost.

- Step 5.

- Repeat steps 2 to 4 until all the points converge and the medoid point stop moving.

2.2. Hierarchical Clustering

- Step 1.

- Draw a random sample and partition it.

- Step 2.

- Partially cluster the partitions.

- Step 3.

- Eliminate the outliers.

- Step 4.

- Cluster the partial clusters, shrinking representative towards the centroid.

- Step 5.

- Label the data.

- Step 1.

- Construct a k-NN graph.

- Step 2.

- Partition the graph to produce equal-sized partitions and minimize the number of edges cut using a partitioning algorithm.

- Step 3.

- Merge the partitioned clusters whose relative interconnectivity and relative closeness are above some user-specified thresholds.

- Step 1.

- Determine the number of clusters, k.

- Step 2.

- Pick a cluster to split.

- Step 3.

- Find two sub-clusters using k-means clustering (bisecting step).

- Step 4.

- Repeat step 3 to take the split with the least total sum of squared error (SSE) until the list of clusters is k.

2.3. Density-Based Clustering

- Step 1.

- Determine the value of minPts and eps.

- Step 2.

- Randomly select a starting data point. If there are at least minPts within a radius of eps to the starting data point, then the points are part of the same cluster. Otherwise the point is considered a noise.

- Step 3.

- Repeat step 2 until all the points are visited.

2.4. Fuzzy Clustering

- Step 1.

- Determine the number of clusters, c.

- Step 2.

- Randomly initialize the membership value of the clusters.

- Step 3.

- Calculate the value of centroid and update the membership value.

- Step 4.

- Repeat step 3 until the objective function is less than a threshold value.

3. Discussion

3.1. Similarity Measure

3.2. Evaluation Metrics

3.3. Functional Purpose

3.4. Case Study: Online Roadshow

4. Conclusions

5. Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Leow, K.R.; Leow, M.C.; Ong, L.Y. Online Roadshow: A New Model for the Next-Generation Digital Marketing. In Proceedings of the Future Technologies Conference, Vancouver, BC, Canada, 28–29 October 2021. [Google Scholar]

- Unger, R.; Chandler, C. A Project Guide to UX Design: For User Experience Designers in the Field or in the Making; New Riders: Indianapolis, IN, USA, 2012. [Google Scholar]

- Choi, M.W. A study on the application of user experience to ICT-based advertising. Int. J. Pure Appl. Math. 2018, 120, 5571–5586. [Google Scholar]

- Brajnik, G.; Gabrielli, S. A review of online advertising effects on the user experience. Int. J. Hum. Comput. Interact. 2010, 26, 971–997. [Google Scholar] [CrossRef] [Green Version]

- Pucillo, F.; Cascini, G. A framework for user experience, needs and affordances. Des. Stud. 2014, 35, 160–179. [Google Scholar] [CrossRef]

- Ivancsy, R.; Kovacs, F. Clustering Techniques Utilized in Web Usage Mining. In Proceedings of the 5th WSEAS International Conference on Artificial Intelligence, Knowledge Engineering and Data Bases, Madrid, Spain, 15–17 February 2006; Volume 2006, pp. 237–242. [Google Scholar]

- Cooley, R.; Mobasher, B.; Srivastava, J. Web Mining: Information and Pattern Discovery on the World Wide Web. In Proceedings of the Ninth IEEE International Conference on Tools with Artificial Intelligence, Newport Beach, CA, USA, 3–8 November 1997; pp. 558–567. [Google Scholar] [CrossRef]

- Etzioni, O. The World-Wide Web: Quagmire or gold mine? Commun. ACM 1996, 39, 65–68. [Google Scholar] [CrossRef]

- Jafari, M.; SoleymaniSabzchi, F.; Jamali, S. Extracting Users’ Navigational Behavior from Web Log Data: A Survey. J. Comput. Sci. Appl. 2013, 1, 39–45. [Google Scholar] [CrossRef]

- Aghabozorgi, S.; Seyed Shirkhorshidi, A.; Ying Wah, T. Time-series clustering—A decade review. Inf. Syst. 2015, 53, 16–38. [Google Scholar] [CrossRef]

- Rai, P.; Singh, S. A Survey of Clustering Techniques. Int. J. Comput. Appl. 2010, 7, 1–5. [Google Scholar] [CrossRef]

- Saxena, A.; Prasad, M.; Gupta, A.; Bharill, N.; Patel, O.P.; Tiwari, A.; Er, M.J.; Ding, W.; Lin, C.T. A review of clustering techniques and developments. Neurocomputing 2017, 267, 664–681. [Google Scholar] [CrossRef] [Green Version]

- Popat, S.K.; Emmanuel, M. Review and comparative study of clustering techniques. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 805–812. [Google Scholar]

- Shirkhorshidi, A.S.; Aghabozorgi, S.; Wah, T.Y.; Herawan, T. Big Data Clustering: A Review. In Proceedings of the 14th International Conference on Computational Science and Its Applications, Guimarães, Portugal, 30 June–3 July 2014; Murgante, B., Misra, S., Rocha, A.M.A.C., Torre, C., Rocha, J.G., Falcão, M.I., Taniar, D., Apduhan, B.O., Gervasi, O., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 707–720. [Google Scholar]

- Kameshwaran, K.; Malarvizhi, K. Survey on clustering techniques in data mining. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 2272–2276. [Google Scholar]

- Kaur, R.; Kaur, S. A Review: Techniques for Clustering of Web Usage Mining. Int. J. Sci. Res. 2012, 3, 1541–1545. [Google Scholar]

- Dehariya, V.K.; Shrivastava, S.K.; Jain, R.C. Clustering of Image Data Set using k-Means and Fuzzy k-Means Algorithms. In Proceedings of the 2010 International Conference on Computational Intelligence and Communication Networks, Bhopal, India, 26–28 November 2010; IEEE: Piscataway, NJ, USA; pp. 386–391. [Google Scholar]

- da Cruz Nassif, L.F.; Hruschka, E.R. Document Clustering for Forensic Analysis: An Approach for Improving Computer Inspection. In IEEE Transactions on Information Forensics and Security; IEEE: Piscataway, NJ, USA, 2012; Volume 8, pp. 46–54. [Google Scholar]

- Ahmad, H.; Zubair Islam, M.; Ali, R.; Haider, A.; Kim, H. Intelligent Stretch Optimization in Information Centric Networking-Based Tactile Internet Applications. Appl. Sci. 2021, 11, 7351. [Google Scholar] [CrossRef]

- Haider, A.; Khan, M.A.; Rehman, A.; Rahman, M.; Kim, H.S. A Real-Time Sequential Deep Extreme Learning Machine Cybersecurity Intrusion Detection System. Comput. Mater. Contin. 2021, 66, 1785–1798. [Google Scholar] [CrossRef]

- Äyrämö, S.; Kärkkäinen, T. Introduction to Partitioning-Based Clustering Methods with a Robust Example; Reports of the Department of Mathematical Information Technology. Series C, Software engineering and computational intelligence; No. C. 1/2006; Department of Mathematical Information Technology, University of Jyväskylä: Jyväskylä, Finland, 2006; pp. 1–34. [Google Scholar] [CrossRef]

- El Aissaoui, O.; El Madani El Alami, Y.; Oughdir, L.; El Allioui, Y. Integrating Web Usage Mining for an Automatic Learner Profile Detection: A Learning Styles-Based Approach. In Proceedings of the International Conference on Intelligent Systems and Computer Vision (ISCV), Fez, Morocco, 2–4 April 2018; IEEE: Piscataway, NJ, USA; pp. 1–6. [Google Scholar]

- Kaur, S.; Rashid, E.M. Web news mining using Back Propagation Neural Network and clustering using k-Means algorithm in big data. Indian J. Sci. Technol. 2016, 9. [Google Scholar] [CrossRef]

- Kathuria, A.; Jansen, B.J.; Hafernik, C.; Spink, A. Classifying the user intent of web queries using k-Means clustering. Internet Res. 2010, 20, 563–581. [Google Scholar] [CrossRef]

- Nasser, M.; Salim, N.; Hamza, H.; Saeed, F. Clustering web users for reductions the internet traffic load and users access cost based on k-Means algorithm. Int. J. Eng. Technol. 2018, 7, 3162–3169. [Google Scholar] [CrossRef]

- Chatterjee, R.P.; Deb, K.; Banerjee, S.; Das, A.; Bag, R. Web Mining Using k-Means Clustering and Latest Substring Association Rule for E-Commerce. J. Mech. Contin. Math. Sci. 2019, 14, 28–44. [Google Scholar] [CrossRef]

- Poornalatha, G.; Raghavendra, P.S. Web User Session Clustering using Modified k-Means Algorithm. In Advances in Computing and Communications; Springer: Berlin/Heidelberg, Germany, 2011; Volume 191, pp. 243–252. [Google Scholar] [CrossRef]

- Selvakumar, K.; Ramesh, L.S.; Kannan, A. Enhanced k-Means clustering algorithm for evolving user groups. Indian J. Sci. Technol. 2015, 8. [Google Scholar] [CrossRef] [Green Version]

- Alguliyev, R.M.; Aliguliyev, R.M.; Abdullayeva, F.J. PSO+k-Means algorithm for anomaly detection in big data. Stat. Optim. Inf. Comput. 2019, 7, 348–359. [Google Scholar] [CrossRef]

- Patel, R.; Kansara, A. Web pages recommendation system based on k-medoid clustering method. Int. J. Adv. Eng. Res. Dev. 2015, 2, 745–751. [Google Scholar]

- Ansari, Z.A. Web User Session Cluster Discovery Based on k-Means and k-Medoids Techniques. Int. J. Comput. Sci. Eng. Technol. 2014, 5, 1105–1113. [Google Scholar]

- Sengottuvelan, P.; Gopalakrishnan, T. Efficient Web Usage Mining Based on K-Medoids Clustering Technique. Int. J. Comput. Inf. Eng. 2015, 9, 998–1002. [Google Scholar] [CrossRef]

- Ji, W.T.; Guo, Q.J.; Zhong, S.; Zhou, E. Improved k-Medoids Clustering Algorithm under Semantic Web. In Advances in Intelligent Systems Research; Trans Tech Publications Ltd.: Stafa-Zurich, Switzerland, 2013. [Google Scholar]

- Shinde, S.K.; Kulkarni, U.V. Hybrid Personalized Recommender System using Fast k-Medoids Clustering Algorithm. J. Adv. Inf. Technol. 2011, 2, 152–158. [Google Scholar] [CrossRef]

- Rani, Y.; Rohil, H. A study of hierarchical clustering algorithms. Int. J. Inf. Comput. Technol. 2015, 3, 1115–1122. [Google Scholar]

- Dhanalakshmi, P.; Ramani, K. Clustering of users on web log data using Optimized CURE Clustering. HELIX 2018, 7, 2018–2024. [Google Scholar] [CrossRef]

- Kumble, N.; Tewari, V. Improved CURE Clustering Algorithm using Shared Nearest Neighbour Technique. Int. J. Emerg. Trends Eng. Res. 2021, 9, 151–157. [Google Scholar] [CrossRef]

- Karypis, G.; Kumar, V. Multilevel k-Way Hypergraph Partitioning. In Proceedings of the 36th Annual Design Automation Conference (DAC 1999), New Orleans, LA, USA, 21–25 June 1999; Hindawi: London, UK, 1999; pp. 343–348. [Google Scholar]

- Prasanth, A.; Hemalatha, M. Chameleon clustering algorithm with semantic analysis algorithm for efficient web usage mining. Int. Rev. Comput. Softw. 2015, 10, 529–535. [Google Scholar] [CrossRef]

- Prasanth, A.; Valsala, S. Semantic Chameleon Clustering Analysis Algorithm with Recommendation Rules for Efficient Web Usage Mining. In Proceedings of the 9th IEEE-GCC Conference and Exhibition (GCCCE 2017), Manama, Bahrain, 8–11 May 2017; IEEE: Piscataway, NJ, USA; pp. 1–9. [Google Scholar]

- Abirami, K.; Mayilvahanan, P. Performance Analysis of k-Means and Bisecting k-Means Algorithms in Weblog Data. Int. J. Emerg. Technol. Eng. Res. 2016, 4, 119–124. [Google Scholar]

- Patil, R.; Khan, A. Bisecting k-Means for Clustering Web Log data. Int. J. Comput. Appl. 2015, 116, 36–41. [Google Scholar] [CrossRef]

- Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In KDD-96 Proceedings; AAAI: Menlo Park, CA, USA, 1996; Volume 96, pp. 226–231. [Google Scholar]

- Langhnoja, S.G.; Barot, M.P.; Mehta, D.B. Web Usage Mining using Association Rule Mining on Clustered Data for Pattern Discovery. Int. J. Data Min. Tech. Appl. 2013, 2, 141–150. [Google Scholar]

- Ansari, Z.A. Discovery of web user session clusters using dbscan and leader clustering techniques. Int. J. Res. Appl. Sci. Eng. Technol. 2014, 2, 209–217. [Google Scholar]

- Khan, M.M.R.; Siddique, M.A.B.; Arif, R.B.; Oishe, M.R. ADBSCAN: Adaptive Density-Based Spatial Clustering of Applications with Noise for Identifying Clusters with Varying Densities. In Proceedings of the 4th International Conference on Electrical Engineering and Information Communication Technology (iCEEiCT), Dhaka, Bangladesh, 13–15 September 2018; IEEE: Piscataway, NJ, USA; pp. 107–111. [Google Scholar]

- Huang, Y.; Huang, W.; Xiang, X.; Yan, J. An empirical study of personalized advertising recommendation based on DBSCAN clustering of sina weibo user-generated content. Procedia Comput. Sci. 2021, 183, 303–310. [Google Scholar] [CrossRef]

- Xie, P.; Zhang, L.; Wang, Y.; Li, Q. Application of An Improved DBSCAN Algorithm in Web Text Mining. In Proceedings of the International Workshop on Cloud Computing and Information Security (CCIS), Shanghai, China, 9–11 November 2013; Atlantis Press: Amsterdam, The Netherlands; pp. 400–403. [Google Scholar]

- Udantha, M.; Ranathunga, S.; Dias, G. Modelling Website User Behaviors by Combining the EM and DBSCAN Algorithms. In Proceedings of the Moratuwa Engineering Research Conference (MERCon), Moratuwa, Sri Lanka, 5–6 April 2016; IEEE: Piscataway, NJ, USA; pp. 168–173. [Google Scholar]

- Zhou, K.; Fu, C.; Yang, S. Fuzziness parameter selection in fuzzy c-means: The perspective of cluster validation. Sci. China Inf. Sci. 2014, 57. [Google Scholar] [CrossRef] [Green Version]

- Torra, V. On the Selection of m for Fuzzy c-Means. In Proceedings of the 2015 Conference of the International Fuzzy Systems Association and the European Society for Fuzzy Logic and Technology, Gijón, Asturias, Spain, 30 June–3 July 2015. [Google Scholar] [CrossRef] [Green Version]

- Lingras, P.; Yan, R.; West, C. Fuzzy C-Means Clustering of Web Users for Educational Sites. In Advances in Artificial Intelligence; Xiang, Y., Chaib-draa, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; pp. 557–562. [Google Scholar]

- Agarwal, A.; Saxena, A. An approach for improving page search by clustering with reference to web log data in R. Int. J. Sci. Technol. Res. 2020, 9, 2832–2838. [Google Scholar]

- Chandel, G.S.; Patidar, K. A Result Evolution Approach for Web usage mining using Fuzzy C-Mean Clustering Algorithm. Int. J. Comput. Sci. Netw. Secur. 2016, 16, 135–140. [Google Scholar]

- Ali, W.; Alrabighi, M. Web Users Clustering Based on Fuzzy C-MEANS. VAWKUM Trans. Comput. Sci. 2016, 4, 51–59. [Google Scholar] [CrossRef]

- Suresh, K.; Mohana, R.M.; Reddy, A.R.M.; Subrmanyam, A. Improved FCM Algorithm for Clustering on Web Usage Mining. In Proceedings of the International Conference on Computer and Management (CAMAN), Wuhan, China, 19–21 May 2011; IEEE: Piscataway, NJ, USA; pp. 11–14. [Google Scholar]

- Niware, D.K.; Chaturvedi, S.K. Web Usage Mining through Efficient Genetic Fuzzy C-Means. Int. J. Comput. Sci. Netw. Secur. (IJCSNS 2015) 2014, 14, 113. [Google Scholar]

- Cobos, C.; Mendoza, M.; Manic, M.; Leon, E.; Herrera-Viedma, E. Clustering of web search results based on an Iterative Fuzzy C-means Algorithm and Bayesian Information Criterion. In Proceedings of the 2013 Joint IFSA World Congress and NAFIPS Annual Meeting (IFSA/NAFIPS), Edmonton, AB, Canada, 24–28 June 2013; IEEE: Piscataway, NJ, USA; pp. 507–512. [Google Scholar]

- Chitraa, V.; Thanamani, A.S. Web Log Data Analysis by Enhanced Fuzzy C Means Clustering. Int. J. Comput. Sci. Appl. 2014, 4, 81–95. [Google Scholar] [CrossRef] [Green Version]

- Pan, Y.; Zhang, L.; Li, Z. Mining event logs for knowledge discovery based on adaptive efficient fuzzy Kohonen clustering network. Knowl. Based Syst. 2020, 209, 106482. [Google Scholar] [CrossRef]

- Zheng, W.; Mo, S.; Duan, P.; Jin, X. An Improved Pagerank Algorithm Based on Fuzzy C-Means Clustering and Information Entropy. In Proceedings of the 2017 3rd IEEE International Conference on Control Science and Systems Engineering (ICCSSE), Beijing, China, 17–19 August 2017; pp. 615–618. [Google Scholar]

- Anwar, S.; Rohmat, C.L.; Basysyar, F.M.; Wijaya, Y.A. Clustering of internet network usage using the K-Medoid method. In Proceedings of the Annual Conference on Computer Science and Engineering Technology (AC2SET 2020), Medan, Indonesia, 23 September 2020; IOP Publishing: Bristol, UK, 2021; Volume 1088. [Google Scholar]

- Santhisree, K.; Damodaram, A. Cure: Clustering on Sequential Data for Web Personalization: Tests and Experimental Results. Int. J. Comput. Sci. Commun. 2011, 2, 101–104. [Google Scholar]

- Gupta, U.; Patil, N. Recommender system based on Hierarchical Clustering algorithm Chameleon. In Proceedings of the IEEE International Advance Computing Conference (IACC), Banglore, India, 12–13 June 2015; IEEE: Piscataway, NJ, USA; pp. 1006–1010. [Google Scholar]

- Kumar, T.V.; Guruprasad, S. Clustering of Web Usage Data using Chameleon Algorithm. Int. J. Innov. Res. Comput. Commun. Eng. 2014, 2, 4533–4540. [Google Scholar]

- Deepali, B.A.; Chaudhari, J. A New Bisecting k-Means algorithm for Inferring User Search Goals Engine. Int. J. Sci. Res. 2014, 3, 515–521. [Google Scholar]

- Santhisree, K.; Damodaram, A. SSM-DBSCAN and SSM-OPTICS: Incorporating a new similarity measure for Density based Clustering of Web usage data. Int. J. Comput. Sci. Eng. 2011, 3, 3170–3184. [Google Scholar]

- Chen, B.; Jiang, T.; Chen, L. Weblog Fuzzy Clustering Algorithm based on Convolutional Neural Network. Microprocess. Microsyst. 2020, 103420. [Google Scholar] [CrossRef]

- Shivaprasad, G.; Reddy, N.V.S.; Acharya, U.D.; Aithal, P.K. Neuro-Fuzzy Based Hybrid Model for Web Usage Mining. Procedia Comput. Sci. 2015, 54, 327–334. [Google Scholar] [CrossRef] [Green Version]

- Hasija, H.; Chaurasia, D. Recommender System with Web Usage Mining Based on Fuzzy c Means and Neural Networks. In Proceedings of the 2015 1st International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 4–5 September 2015; IEEE: Piscataway, NJ, USA; pp. 768–772. [Google Scholar]

- Halkidi, M.; Batistakis, Y.; Vazirgiannis, M. On Clustering Validation Techniques. J. Intell. Inf. Syst. 2001, 17, 107–145. [Google Scholar] [CrossRef]

- Davies, D.L.; Bouldin, D.W. A Cluster Separation Measure. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: Piscataway, NJ, USA, 1979; Volume PAMI-1, pp. 224–227. [Google Scholar]

- Hubert, L.; Schultz, J. Quadratic Assignment as a General Data Analysis Strategy. Br. J. Math. Stat. Psychol. 1976, 29, 190–241. [Google Scholar] [CrossRef]

- Rokach, L.; Maimon, O. Clustering Methods. In Data Mining and Knowledge Discovery Handbook; Maimon, O., Rokach, L., Eds.; Springer: Boston, MA, USA, 2005; pp. 321–352. ISBN 978-0-387-25465-4. [Google Scholar]

- Rosenberg, A.; Hirschberg, J. V-measure: A Conditional Entropy-Based External Cluster Evaluation Measure. In Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL), Prague, Czech Republic, 28–30 June 2007; pp. 410–420. [Google Scholar]

- Dunn, J.C. Well-Separated Clusters and Optimal Fuzzy Partitions. J. Cybern. 1974, 4, 95–104. [Google Scholar] [CrossRef]

- Petrovic, S. A Comparison between the Silhouette Index and the Davies-Bouldin Index in Labelling IDS Clusters. In Proceedings of the 11th Nordic Workshop on Secure IT-systems, Linköping, Sweden, 19–20 October 2006; pp. 53–64. [Google Scholar]

- Palacio-Niño, J.-O.; Berzal, F. Evaluation Metrics for Unsupervised Learning Algorithms. arXiv 2019, arXiv:1905.05667. [Google Scholar]

- Xu, Y.; Lee, M.J. Identifying Personas in Online Shopping Communities. Multimodal Technol. Interact. 2020, 4, 19. [Google Scholar] [CrossRef]

| Clustering Technique | Similarity Measure | Evaluation Metric | Functional Purpose |

|---|---|---|---|

| k-means | Cosine similarity [25], Euclidean distance [23,24,26]. | Residual SSE [25], Accuracy [22,23], Percentage error [24], Precision [22,26], Recall [22], F-measure [22]. | User group discovery [25], Page categorization [23], Web queries categorization [24], Page recommendation [26], Web personalization [22]. |

| Improved k-means | Cosine similarity [28], Euclidean distance [29], Variable Length Vector Distance * [27]. | Jaccard index [27], Purity [29], Entropy [29], Dunn index [29], Silhouette index [29]. | User group categorization [28], User group discovery [27], Anomaly detection [29]. |

| k-medoids | Euclidean distance [31,32], Cosine similarity [30], Hamming distance [30]. | DB index [31,62], C index [31], SSE [31], Percentage of recommendation quality [30]. | User group discovery [31,62], Page recommendation [30], Web personalization [32]. |

| Improved k-medoids | Euclidean distance [33,34]. | Accuracy [33,34], Recall [33]. | Page categorization [33], Page recommendation [34]. |

| CURE | Euclidean distance [63], Jaccard similarity [63], Projected Euclidean distance [63], Cosine similarity [63], Fuzzy similarity [63]. | Inter-cluster distance [63], Intra-cluster distance [63]. | Web personalization [63]. |

| Improved CURE | Manhattan distance [36], Euclidean distance [37]. | Precision [36], Recall [36], Accuracy [36]. | User group discovery [36]. |

| CHAMELEON | - | MAE [64]. | User group discovery [65], Page recommendation [64]. |

| Improved CHAMELEON | - | Precision [39,40], Recall [39,40], F-measure [40], R-measure [40]. | Page categorization [39], Web personalization [40]. |

| Bisecting k-means | Cosine similarity [66]. | Accuracy [41,42], Classified AP (CAP) * [66]. | User group discovery [41], Web queries categorization [66], Intrusion detection [42]. |

| DBSCAN | Euclidean distance [45] | DB index [45], C index [31]. | User group discovery [44,45]. |

| Improved DBSCAN | SSM * [67]. | V-measure [49], Intra-cluster distance [49], Accuracy [47,48], Recall [47], F-measure [47], intra-cluster distance [67]. | User group discovery [49,67], Page categorization [48], Web personalization [47]. |

| FCM | Euclidean distance [52,53,61,68], Manhattan distance [53]. | Error rate [68,69], Accuracy [54,61,68,70], SSE [55], MAE [70], Inter-cluster distance [53], Intra-cluster distance [53], Recall [68], F-measure [68], Snew * [60]. | User group categorization [52], Page recommendation [53,70], Web personalization [68], User group discovery [54,55,69], Improvement of PageRank algorithm * [61], Improvement of Kohonen clustering * [60]. |

| Improved FCM | Euclidean distance [57], Cosine similarity [58]. | Rand index [59], SSE [59], Error rate [57], Precision [58], Recall [58], F-measure [58], Accuracy [58]. | User group discovery [56,57,59], Web queries categorization [58]. |

| Similarity Measure | Partition-Based | Hierarchical | Density-Based | Fuzzy | ||

|---|---|---|---|---|---|---|

| k-Means | k-Medoids | CURE | Bisecting k-Means | DBSCAN | FCM | |

| Cosine similarity | 2 | 1 | 1 | 1 | 0 | 1 |

| Euclidean distance | 4 | 4 | 2 | 0 | 1 | 5 |

| Fuzzy similarity | 0 | 0 | 1 | 0 | 0 | 0 |

| Hamming distance | 0 | 1 | 0 | 0 | 0 | 0 |

| Jaccard similarity | 0 | 0 | 1 | 0 | 0 | 0 |

| Manhattan distance | 0 | 0 | 1 | 0 | 0 | 1 |

| (New) SSM | 0 | 0 | 0 | 0 | 1 | 0 |

| (New) VLVD | 1 | 0 | 0 | 0 | 0 | 0 |

| Evaluation Metrics | Equations |

|---|---|

| DB index [72] | where nc is the number of clusters, i and j are cluster labels, diam(ci) and diam(cj) are diameters of clusters, d(ci, cj) is the average distance between the clusters. |

| C index [73] | where S is the sum of distances over all pairs of objects from the same cluster, Smin is the sum of the of the m smallest distances out of all pairs of objects, and Smax is the sum of the m largest distances out of all pairs of objects (let m be the number of pairs of objects). |

| SSE [74] | where n is the number of clusters, c is the number of points, is the data point, and is the centroid cluster. |

| V measure [75] | where h is the homogeneity, c is the completeness and is a weight factor that can be adjusted. |

| Dunn index [76] | where i and j are the cluster labels, k is the number of clusters, is the dissimilarity value of cluster ci and cj, and is the diameter/intra-cluster distance of the cluster. |

| Silhouette index [77] | where n is the total number of points, is the average distance between point i and all the other points in its own cluster, and is the average distance between point i and all the other points in other clusters. |

| MAE/Error rate [68] | where n is the total number of points, is the actual cluster label and is the predicted cluster label. |

| Accuracy [78] | where TP is true positive, TN is true negative, FP is false positive, and FN is false negative. |

| Rand index [74] | where TP is true positive, TN is true negative, FP is false positive, and FN is false negative. |

| Jaccard index [78] | where TP is true positive, FP is false positive, and FN is false negative. |

| Recall [74] | where TP is true positive, and FN is false negative. |

| Precision [74] | where TP is true positive, and FP is false positive. |

| F-measure [78] | where is a weight factor that can be adjusted, P is the precision value, and R is the recall value. |

| Purity [78] | where N is the number of points, j is the cluster label, is a cluster. |

| Entropy [78] | where c is the number of clusters, is the probability of a point in the cluster i is being classified as class j. |

| R-measure [40] | where R is the points in the clusters. |

| Evaluation Metrics | Number of Applications | Range | Clustering Quality |

|---|---|---|---|

| Intra-cluster distance | 4 | 0 to +∞ | Distance ↓ |

| SSE | 4 | 0 to +∞ | SSE ↓ |

| DB index | 3 | −∞ to +∞ | Index ↓ |

| C index | 2 | 0 to 1 | Index ↓ |

| Inter-cluster distance | 2 | 0 to +∞ | Distance ↑ |

| Dunn index | 1 | 0 to +∞ | Index ↑ |

| Silhouette index | 1 | −1 to +1 | Index ↑ |

| (New) Snew | 1 | 0 to +∞ | Index ↓ |

| Evaluation Metrics | Number of Applications | Clustering Quality |

|---|---|---|

| Accuracy | 15 | Accuracy ↑ |

| Recall | 9 | Recall ↑ |

| Precision | 8 | Precision ↑ |

| Error rate/MAE | 6 | Error rate/MAE ↓ |

| F-measure | 5 | F-measure ↑ |

| Entropy | 1 | Entropy ↓ |

| Jaccard index | 1 | Index ↑ |

| Purity | 1 | Purity ↑ |

| R-measure | 1 | R-measure ↑ |

| Rand index | 1 | Rand index ↑ |

| V-measure | 1 | V-measure ↑ |

| Functional Purpose | Ways to Improve User Experience | Clustering Techniques |

|---|---|---|

| User group discovery | Discovering the type of users on the website helps to segment the users based on different behavioral patterns. When the user groups of the website are known, developers can improve the website so that it can be catered to different user groups. | DBSCAN [44,45,49,67], FCM [54,55,56,57,59,69], k-medoids [31,62], CHAMELEON [65], bisecting k-means [41], CURE [36], k-means [25,27] |

| User group categorization | Categorizing of users into groups of similar interests helps the developers to improve the recommendation system in the website so that it can suggest web pages to users to sustain their interest. | FCM [52], k-means [28] |

| Page categorization | Clustering of the web pages groups the web pages into similar content types or themes. Developers can improve the design of the website so that the users can access the pages conveniently based on its content type. | DBSCAN [48], k-medoids [33], CHAMELEON [39], k-means [23] |

| Web queries categorization | Classifying a web search query to one or more categories based on the topics enables users to easily find their interested topic. Users will feel more comfortable and in control when navigating the website. | k-means [24], bisecting k-means [66], FCM [58] |

| Page recommendation | Providing suggestions of web pages based on similar user group behavior helps to reduce the time spent for the users to search for web pages. | k-means [26], k-medoids [30,34], CHAMELEON [64], FCM [53,70] |

| Web personalization | Customization of the web pages is based on the user’s past browsing activities on the website. A personalized user interface elements based on their preferences allow the users to interact in a familiar environment. | CURE [63], DBSCAN [47], k-medoids [32], FCM [68], CHAMELEON [40], k-means [22] |

| Purpose | Partition-Based | Hierarchical | Density-Based | Fuzzy | |||

|---|---|---|---|---|---|---|---|

| k-Means | k-Medoids | CURE | CHAMELEON | Bisecting k-Means | DBSCAN | FCM | |

| User group discovery | 2 | 2 | 1 | 1 | 1 | 4 | 6 |

| User group categorization | 1 | 0 | 0 | 0 | 0 | 0 | 1 |

| Page categorization | 1 | 1 | 0 | 1 | 0 | 1 | 0 |

| Web queries categorization | 1 | 0 | 0 | 0 | 1 | 0 | 1 |

| Page recommendation | 1 | 2 | 0 | 1 | 0 | 0 | 2 |

| Web personalization | 1 | 1 | 1 | 1 | 0 | 2 | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lim, Z.-Y.; Ong, L.-Y.; Leow, M.-C. A Review on Clustering Techniques: Creating Better User Experience for Online Roadshow. Future Internet 2021, 13, 233. https://doi.org/10.3390/fi13090233

Lim Z-Y, Ong L-Y, Leow M-C. A Review on Clustering Techniques: Creating Better User Experience for Online Roadshow. Future Internet. 2021; 13(9):233. https://doi.org/10.3390/fi13090233

Chicago/Turabian StyleLim, Zhou-Yi, Lee-Yeng Ong, and Meng-Chew Leow. 2021. "A Review on Clustering Techniques: Creating Better User Experience for Online Roadshow" Future Internet 13, no. 9: 233. https://doi.org/10.3390/fi13090233

APA StyleLim, Z. -Y., Ong, L. -Y., & Leow, M. -C. (2021). A Review on Clustering Techniques: Creating Better User Experience for Online Roadshow. Future Internet, 13(9), 233. https://doi.org/10.3390/fi13090233