Accuracy Assessment Measures for Object Extraction from Remote Sensing Images

Abstract

1. Introduction

2. Methodology

2.1. Object Matching

2.2. Area-Based Accuracy Measures

2.3. Number-Based Accuracy Measures

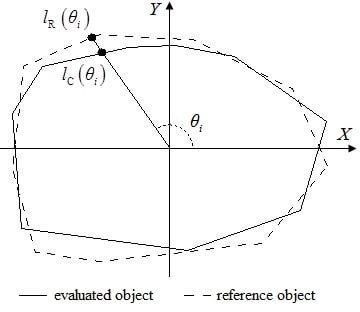

2.4. Feature Similarity-Based Accuracy Measures

2.5. Distance-Based Accuracy Measures

3. Experimental Results and Analysis

3.1. Data Description

3.2. Object Extraction

3.3. Evaluation of Object Extraction Accuracy Using Different Measures

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Class | Correctness (%) | Completeness (%) | Quality (%) |
|---|---|---|---|
| Water | 93.55 | 89.09 | 83.94 |
| Building | 76.34 | 83.84 | 66.55 |
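
The three columns in the table above can be related with a short sketch. It assumes the conventional area-based definitions, which this excerpt does not spell out: correctness = TP / (TP + FP), completeness = TP / (TP + FN), and quality = TP / (TP + FP + FN), where TP, FP and FN are the correctly extracted, falsely extracted and missed areas. The function names and the example areas are hypothetical.

```python
def area_measures(tp, fp, fn):
    """Return (correctness, completeness, quality) as fractions.

    Assumed definitions (not stated in the source excerpt):
      correctness  = TP / (TP + FP)
      completeness = TP / (TP + FN)
      quality      = TP / (TP + FP + FN)
    tp, fp, fn are areas (e.g. pixel counts or square metres).
    """
    correctness = tp / (tp + fp)
    completeness = tp / (tp + fn)
    quality = tp / (tp + fp + fn)
    return correctness, completeness, quality


def quality_from_rates(correctness, completeness):
    """Derive quality from the other two measures.

    Under the assumed definitions,
      1/quality = 1/correctness + 1/completeness - 1.
    """
    return 1.0 / (1.0 / correctness + 1.0 / completeness - 1.0)


if __name__ == "__main__":
    # Hypothetical areas, for illustration only.
    print(area_measures(tp=80.0, fp=20.0, fn=20.0))

    # Consistency check against the reported table values.
    for name, corr, comp in [("Water", 0.9355, 0.8909),
                             ("Building", 0.7634, 0.8384)]:
        print(name, round(100 * quality_from_rates(corr, comp), 2))
```

Under these assumed definitions, the quality values derived from the reported correctness and completeness agree with the Quality column to within rounding, which suggests the table follows the same convention.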

| Class | Threshold | Correct Number | Correct Rate (%) | False Rate (%) | Missing Rate (%) |
|---|---|---|---|---|---|
| Water | 0.90 | 38 | 56.72 | 43.28 | 46.48 |
| Water | 0.85 | 55 | 82.09 | 17.91 | 22.54 |
| Water | 0.80 | 63 | 94.03 | 5.97 | 11.27 |
| Building | 0.90 | 4 | 9.30 | 90.70 | 90.48 |
| Building | 0.85 | 21 | 48.84 | 51.16 | 50.00 |
| Building | 0.80 | 31 | 72.09 | 27.91 | 26.19 |
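
A minimal sketch of how the rates in the table above could be computed from object counts. It assumes that the correct rate is the share of extracted objects that are correctly matched, that the false rate is its complement, and that the missing rate is the share of reference objects without a correct match. These definitions are not given in this excerpt. The totals used for the water class (67 extracted, 71 reference objects) are also not stated in the source: they are back-calculated from the reported rates and should be treated as assumptions.

```python
def number_based_rates(n_correct, n_extracted, n_reference):
    """Return (correct_rate, false_rate, missing_rate) in percent.

    Assumed definitions (not stated in the source excerpt):
      correct rate = n_correct / n_extracted
      false rate   = 1 - correct rate
      missing rate = 1 - n_correct / n_reference
    """
    correct_rate = n_correct / n_extracted
    false_rate = 1.0 - correct_rate
    missing_rate = 1.0 - n_correct / n_reference
    return 100 * correct_rate, 100 * false_rate, 100 * missing_rate


if __name__ == "__main__":
    # Totals below are inferred from the reported percentages, not reported.
    N_EXTRACTED_WATER = 67
    N_REFERENCE_WATER = 71
    for threshold, n_correct in [(0.90, 38), (0.85, 55), (0.80, 63)]:
        rates = number_based_rates(n_correct, N_EXTRACTED_WATER, N_REFERENCE_WATER)
        print(threshold, [round(r, 2) for r in rates])
```

Lowering the matching threshold only raises the number of objects counted as correct, so under these definitions the correct rate rises while the false and missing rates fall, which is the trend the table shows.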

| Class | Index | Size Similarity (%) | Improved Size Similarity (%) | Matching Similarity (%) |
|---|---|---|---|---|
| Water | Area | 84.86 | 82.57 | 77.09 |
| Water | Perimeter | 89.12 | 87.88 | 58.92 |
| Water | Area and perimeter | 86.28 | 84.34 | 71.04 |
| Building | Area | 79.19 | 73.74 | 66.21 |
| Building | Perimeter | 81.16 | 78.55 | 55.55 |
| Building | Area and perimeter | 79.85 | 75.34 | 62.66 |

| Class | Shape Similarity (%) | Improved Shape Similarity (%) |
|---|---|---|
| Water | 85.50 | 85.90 |
| Building | 76.46 | 77.99 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cai, L.; Shi, W.; Miao, Z.; Hao, M. Accuracy Assessment Measures for Object Extraction from Remote Sensing Images. Remote Sens. 2018, 10, 303. https://doi.org/10.3390/rs10020303
