A Hybrid Color Mapping Approach to Fusing MODIS and Landsat Images for Forward Prediction

Abstract

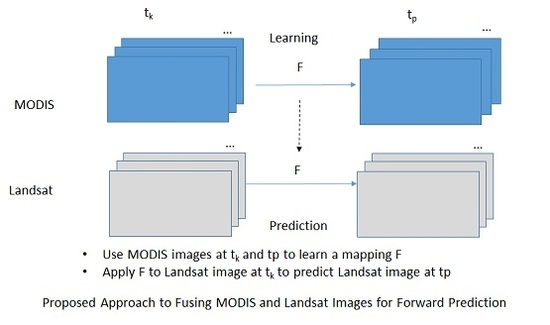

1. Introduction

2. Materials and Methods

2.1. A Simple Motivating Example of Our Approach

2.2. Proposed Approach Based on Hybrid Color Mapping (HCM)

2.3. Evaluation Metrics

- Absolute Difference (AD). The AD of two vectorized images $x$ (ground truth) and $\hat{x}$ (prediction) is defined as $\mathrm{AD}(x,\hat{x}) = \frac{1}{Z}\sum_{i=1}^{Z} \lvert x_i - \hat{x}_i \rvert$, where $Z$ is the number of pixels in each image. The ideal value of AD is 0 if the prediction is perfect.

- RMSE (Root Mean Squared Error). The RMSE of two vectorized images $x$ (ground truth) and $\hat{x}$ (prediction) is defined as $\mathrm{RMSE}(x,\hat{x}) = \sqrt{\frac{1}{Z}\sum_{i=1}^{Z} (x_i - \hat{x}_i)^2}$, where $Z$ is the number of pixels in each image. The ideal value of RMSE is 0 if the prediction is perfect.

- CC (Cross-Correlation). We used the code from the Open Remote Sensing website (https://openremotesensing.net/). The ideal value of CC is 1 if the prediction is perfect.

- ERGAS (Erreur Relative Globale Adimensionnelle de Synthèse). We used the code from [23]. For a band, ERGAS is defined as $\mathrm{ERGAS}(x,\hat{x}) = 100\, d\, \frac{\mathrm{RMSE}(x,\hat{x})}{\mu}$. Here $d$ is a constant that depends on the resolution, and $\mu$ is the mean of the ground truth image. The ideal value of ERGAS is 0 if a prediction algorithm flawlessly reconstructs the Landsat bands.

- SSIM (Structural Similarity). SSIM is a metric that reflects the structural similarity between two images; its equation can be found in [9]. The ideal value of SSIM is 1 for perfect prediction.

- SAM (Spectral Angle Mapper) [23]. The spectral angle mapper measures the angle between two spectral vectors, one from the ground truth and one from the prediction. The ideal value of SAM is 0 for perfect reconstruction.
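The metric definitions above can be illustrated with a minimal, dependency-free Python sketch. It is not the Open Remote Sensing code or the toolbox of [23] that produced the tables, and all function names are hypothetical.

Several choices in the sketch are assumptions:

- CC is taken to be the Pearson correlation coefficient.
- The ERGAS resolution constant `d` is supplied by the caller.
- The multiband ERGAS uses the common root-mean-square aggregation over bands.
- SAM is computed per pixel on the spectral vectors, averaged over pixels, and reported in degrees.

SSIM is omitted because its windowed definition does not fit a short sketch.

```python
import math
from typing import List, Sequence

# Hypothetical helpers illustrating the metric definitions; not the code
# used to produce the tables in this paper.


def _check_pair(x: Sequence[float], y: Sequence[float]) -> None:
    """Ground truth x and prediction y must be non-empty and equally long."""
    if len(x) == 0 or len(x) != len(y):
        raise ValueError("images must be non-empty vectors of equal length")


def absolute_difference(x: Sequence[float], y: Sequence[float]) -> float:
    """AD: mean absolute difference over the Z pixels (0 is ideal)."""
    _check_pair(x, y)
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)


def rmse(x: Sequence[float], y: Sequence[float]) -> float:
    """RMSE: square root of the mean squared difference (0 is ideal)."""
    _check_pair(x, y)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


def cross_correlation(x: Sequence[float], y: Sequence[float]) -> float:
    """CC, assumed here to be the Pearson correlation coefficient (1 is ideal)."""
    _check_pair(x, y)
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def ergas_band(x: Sequence[float], y: Sequence[float], d: float) -> float:
    """Single-band ERGAS = 100 * d * RMSE / mean(ground truth) (0 is ideal)."""
    mu = sum(x) / len(x)
    return 100.0 * d * rmse(x, y) / mu


def ergas_overall(xs: List[Sequence[float]], ys: List[Sequence[float]], d: float) -> float:
    """Assumed multiband ERGAS: 100 * d * sqrt(mean over bands of (RMSE_k / mu_k)^2)."""
    ratios = [rmse(x, y) / (sum(x) / len(x)) for x, y in zip(xs, ys)]
    return 100.0 * d * math.sqrt(sum(r * r for r in ratios) / len(ratios))


def sam_degrees(xs: List[Sequence[float]], ys: List[Sequence[float]]) -> float:
    """Mean spectral angle in degrees; xs[k][i] is band k at pixel i (0 is ideal)."""
    angles = []
    for i in range(len(xs[0])):
        u = [band[i] for band in xs]  # ground-truth spectrum at pixel i
        v = [band[i] for band in ys]  # predicted spectrum at pixel i
        nu = math.sqrt(sum(a * a for a in u))
        nv = math.sqrt(sum(b * b for b in v))
        if nu == 0.0 or nv == 0.0:
            continue  # the angle is undefined for an all-zero spectrum
        cos_t = sum(a * b for a, b in zip(u, v)) / (nu * nv)
        # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
        angles.append(math.degrees(math.acos(max(-1.0, min(1.0, cos_t)))))
    if not angles:
        raise ValueError("no pixel has a non-zero spectrum")
    return sum(angles) / len(angles)
```

Consider a prediction equal to the ground truth plus a constant offset of 1, such as `[1.0, 2.0, 3.0, 4.0]` against `[2.0, 3.0, 4.0, 5.0]`. It gives AD = RMSE = 1 but CC = 1. This shows that CC alone is insensitive to a constant bias, and AD and RMSE complement it.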

3. Results

3.1. Data Set 1: Scene Contents Are Homogeneous

3.2. Data Set 2: Scene Contents Are Heterogeneous

4. Discussions

4.1. Additional Simulation Studies Using Synthetic Data to Address 16:1 Resolution Concern for HCM

4.2. Performance of HCM for Applications with 25:1 Resolution Difference

4.3. Performance of HCM for Applications with 30:1 Resolution Difference

4.4. Necessity and Importance of Having Diverse Methods for Image Fusion

4.5. Combine HCM with Image Clustering

4.5.1. Example in Homogeneous Area

4.5.2. Example in Heterogeneous Area

4.6. General Comments and Observations

5. Conclusions

Author Contributions

Conflicts of Interest

References

- Gao, F.; Masek, J.; Schwaller, M.; Hall, F. On the blending of the Landsat and MODIS surface reflectance: Predicting daily Landsat surface reflectance. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2207–2218.

- Gao, F.; Anderson, M.; Zhang, X.; Yang, Z.; Alfieri, J.; Kustas, B.; Mueller, R.; Johnson, D.; Prueger, J. Mapping crop progress at field scales using Landsat and MODIS imagery. Remote Sens. Environ. 2017, 188, 9–25.

- Hilker, T.; Wulder, M.A.; Coops, N.C.; Linke, J.; McDermid, G.; Masek, J.G.; Gao, F.; White, J.C. A new data fusion model for high spatial- and temporal-resolution mapping of forest disturbance based on Landsat and MODIS. Remote Sens. Environ. 2009, 113, 1613–1627.

- Zhu, X.; Chen, J.; Gao, F.; Chen, X.; Masek, J.G. An enhanced spatial and temporal adaptive reflectance fusion model for complex heterogeneous regions. Remote Sens. Environ. 2010, 114, 2610–2623.

- Addesso, P.; Conte, R.; Longo, M.; Restaino, R.; Vivone, G. Sequential Bayesian methods for resolution enhancement of TIR image sequences. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 233–243.

- Addesso, P.; Conte, R.; Longo, M.; Restaino, R.; Vivone, G. A sequential Bayesian procedure for integrating heterogeneous remotely sensed data for irrigation management. In Proceedings of the Remote Sensing for Agriculture, Ecosystems, and Hydrology XIV, Edinburgh, UK, 19 October 2012; Volume 8531, p. 85310C.

- Agam, N.; Kustas, W.P.; Anderson, M.C.; Li, F.; Neale, C.M. A vegetation index based technique for spatial sharpening of thermal imagery. Remote Sens. Environ. 2007, 107, 545–558.

- Gao, F.; Hilker, T.; Zhu, X.; Anderson, M.; Masek, J.; Wang, P.; Yang, Y. Fusing Landsat and MODIS Data for Vegetation Monitoring. IEEE Geosci. Remote Sens. Mag. 2015, 3, 47–60.

- Zhu, X.; Helmer, E.; Gao, F.; Liu, D.; Chen, J.; Lefsky, M. A flexible spatiotemporal method for fusing satellite images with different resolutions. Remote Sens. Environ. 2016, 172, 165–177.

- Hazaymeh, K.; Hassan, Q.K. Spatiotemporal image-fusion model for enhancing temporal resolution of Landsat-8 surface reflectance images using MODIS images. J. Appl. Remote Sens. 2015, 9, 096095.

- Roy, D.P.; Ju, J.; Lewis, P.; Schaaf, C.; Gao, F.; Hansen, M.; Lindquist, E. Multi-temporal MODIS–Landsat data fusion for relative radiometric normalization, gap filling, and prediction of Landsat data. Remote Sens. Environ. 2008, 112, 3112–3130.

- Fu, D.; Chen, B.; Wang, J.; Zhu, X.; Hilker, T. An improved image fusion approach based on enhanced spatial and temporal the adaptive reflectance fusion model. Remote Sens. 2013, 5, 6346–6360.

- Wu, M.; Niu, Z.; Wang, C.; Wu, C.; Wang, L. Use of MODIS and Landsat time series data to generate high-resolution temporal synthetic Landsat data using a spatial and temporal reflectance fusion model. J. Appl. Remote Sens. 2012, 6, 063507.

- Zhang, W.; Li, A.; Jin, H.; Bian, J.; Zhang, Z.; Lei, G.; Qin, Z.; Huang, C. An enhanced spatial and temporal data fusion model for fusing Landsat and MODIS surface reflectance to generate high temporal Landsat-like data. Remote Sens. 2013, 5, 5346–5368.

- Huang, B.; Song, H. Spatiotemporal reflectance fusion via sparse representation. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3707–3716.

- Song, H.; Huang, B. Spatiotemporal satellite image fusion through one-pair image learning. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1883–1896.

- Zhou, J.; Kwan, C.; Budavari, B. Hyperspectral image super-resolution: A hybrid color mapping approach. J. Appl. Remote Sens. 2016, 10, 035024.

- Kwan, C.; Budavari, B.; Dao, M.; Ayhan, B.; Bell, J.F. Pansharpening of Mastcam images. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Kwan, C.; Ayhan, B.; Budavari, B. Fusion of THEMIS and TES for Accurate Mars Surface Characterization. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Kwan, C.; Budavari, B.; Bovik, A.C.; Marchisio, G. Blind Quality Assessment of Fused WorldView-3 Images by Using the Combinations of Pansharpening and Hypersharpening Paradigms. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1835–1839.

- Balgopal, R. Applications of the Frobenius Norm Criterion in Multivariate Analysis; University of Alabama: Tuscaloosa, AL, USA, 1996.

- Matrix Calculus. Available online: http://www.psi.toronto.edu/matrix/calculus.html (accessed on 20 November 2017).

- Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A Critical Comparison among Pansharpening Algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.

- Alparone, L.; Baronti, S.; Garzelli, A.; Nencini, F. A global quality measurement of pan-sharpened multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 313–317.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi-/hyper-spectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Gao, F.; Masek, J.; Wolfe, R.; Huang, C. Building consistent medium resolution satellite data set using moderate resolution imaging spectroradiometer products as reference. J. Appl. Remote Sens. 2010, 4, 043526.

- Vermote, E.F.; El Saleous, N.Z.; Justice, C.O. Atmospheric correction of MODIS data in the visible to middle infrared: First results. Remote Sens. Environ. 2002, 83, 97–111.

| Image | Day 128 mean | Day 128 standard deviation | Day 144 mean | Day 144 standard deviation |
|---|---|---|---|---|
| MODIS | 0.085 | 0.057 | 0.090 | 0.064 |
| Landsat | 0.089 | 0.058 | 0.090 | 0.066 |

| Image | Day 214 mean | Day 214 standard deviation | Day 246 mean | Day 246 standard deviation |
|---|---|---|---|---|
| MODIS | 0.137 | 0.149 | 0.135 | 0.124 |
| Landsat | 0.139 | 0.155 | 0.133 | 0.125 |

HCM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0076 | 0.01 | 0.9699 | 9.71 × 10⁻⁸ | 0.9679 | 0.4638 | 0.888 | 0.836 | 0.9174 |
| Red | 0.0043 | 0.006 | 0.9475 | 1.07 × 10⁻⁷ | 0.9815 | 1.0247 | 0.8498 | | |
| Green | 0.0045 | 0.006 | 0.9114 | 1.11 × 10⁻⁷ | 0.9858 | 0.9485 | 0.751 | | |
| Blue | 0.004 | 0.0052 | 0.8524 | 1.06 × 10⁻⁷ | 0.9827 | 1.1754 | 0.6183 | | |
| SW1 | 0.0068 | 0.01 | 0.9814 | 3.77 × 10⁻³ | 0.9712 | 0.5571 | 0.9251 | | |
| SW2 | 0.0065 | 0.0094 | 0.9705 | 1.48 × 10⁻¹ | 0.9674 | 0.9163 | 0.8817 | | |

STARFM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0089 | 0.0316 | 0.7645 | 1.55 × 10⁻¹ | 0.9593 | 1.4716 | 0.8642 | 0.8152 | 3.3102 |
| Red | 0.0047 | 0.0172 | 0.6703 | 4.49 × 10⁻² | 0.9802 | 2.9705 | 0.8474 | | |
| Green | 0.0048 | 0.0188 | 0.479 | 5.43 × 10⁻² | 0.9849 | 2.9963 | 0.7551 | | |
| Blue | 0.0044 | 0.0185 | 0.385 | 5.27 × 10⁻² | 0.9807 | 4.1505 | 0.6119 | | |
| SW1 | 0.0083 | 0.0293 | 0.8614 | 1.35 × 10⁻¹ | 0.9635 | 1.6291 | 0.9039 | | |
| SW2 | 0.0069 | 0.0253 | 0.8279 | 2.25 × 10⁻¹ | 0.9602 | 2.4753 | 0.8641 | | |

FSDAF

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0086 | 0.015 | 0.9266 | 7.99 × 10⁻⁸ | 0.9599 | 0.6919 | 0.8722 | 0.8197 | 1.382 |
| Red | 0.0048 | 0.0086 | 0.8825 | 8.78 × 10⁻⁸ | 0.9782 | 1.4573 | 0.8385 | | |
| Green | 0.0047 | 0.0084 | 0.7775 | 9.21 × 10⁻⁸ | 0.984 | 1.3224 | 0.7515 | | |
| Blue | 0.0047 | 0.0083 | 0.6743 | 8.78 × 10⁻⁸ | 0.9791 | 1.8207 | 0.5995 | | |
| SW1 | 0.0082 | 0.015 | 0.9565 | 3.64 × 10⁻³ | 0.9622 | 0.8283 | 0.9114 | | |
| SW2 | 0.007 | 0.0122 | 0.949 | 1.31 × 10⁻¹ | 0.9613 | 1.178 | 0.8758 | | |

STI-FM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.008 | 0.0113 | 0.9607 | 1.02 × 10⁻⁷ | 0.9632 | 0.5206 | 0.8806 | 0.8224 | 1.1434 |
| Red | 0.0045 | 0.0071 | 0.9198 | 1.03 × 10⁻⁷ | 0.9793 | 1.2185 | 0.8425 | | |
| Green | 0.0044 | 0.0066 | 0.8715 | 1.20 × 10⁻⁷ | 0.9853 | 1.0468 | 0.7574 | | |
| Blue | 0.004 | 0.0061 | 0.7568 | 1.08 × 10⁻⁷ | 0.9799 | 1.3997 | 0.5827 | | |
| SW1 | 0.0079 | 0.0117 | 0.9741 | 7.21 × 10⁻² | 0.9641 | 0.6524 | 0.9164 | | |
| SW2 | 0.0072 | 0.0107 | 0.9622 | 1.95 × 10⁻¹ | 0.9606 | 1.0359 | 0.8716 | | |

HCM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0149 | 0.0229 | 0.9331 | 1.07 × 10⁻⁷ | 0.908 | 0.8809 | 0.7828 | 0.7238 | 1.392 |
| Red | 0.0053 | 0.0083 | 0.8489 | 1.32 × 10⁻⁴ | 0.9667 | 1.8552 | 0.6499 | | |
| Green | 0.0051 | 0.0074 | 0.8872 | 1.08 × 10⁻⁷ | 0.979 | 1.2187 | 0.6578 | | |
| Blue | 0.0041 | 0.0057 | 0.7791 | 1.14 × 10⁻⁷ | 0.9785 | 1.5306 | 0.4733 | | |
| SW1 | 0.0082 | 0.0135 | 0.9604 | 5.51 × 10⁻² | 0.959 | 0.8261 | 0.861 | | |
| SW2 | 0.0077 | 0.0116 | 0.9299 | 3.80 × 10⁻¹ | 0.956 | 1.4902 | 0.744 | | |

STARFM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0155 | 0.0243 | 0.9249 | 5.42 × 10⁻³ | 0.8963 | 0.9374 | 0.7507 | 0.6566 | 4.9979 |
| Red | 0.0056 | 0.0168 | 0.627 | 3.69 × 10⁻² | 0.9606 | 3.7372 | 0.6232 | | |
| Green | 0.005 | 0.0069 | 0.9026 | 1.11 × 10⁻⁷ | 0.9811 | 1.1404 | 0.6538 | | |
| Blue | 0.0042 | 0.0061 | 0.785 | 0.0001 | 0.977 | 1.6074 | 0.4669 | | |
| SW1 | 0.0088 | 0.0315 | 0.8387 | 0.1979 | 0.9526 | 1.9218 | 0.8555 | | |
| SW2 | 0.014 | 0.0813 | 0.4725 | 1.2764 | 0.9185 | 11.3554 | 0.6756 | | |

FSDAF

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0161 | 0.024 | 0.9290 | 8.82 × 10⁻⁸ | 0.8891 | 0.9228 | 0.7460 | 0.7119 | 1.3660 |
| Red | 0.0055 | 0.0085 | 0.8465 | 1.32 × 10⁻⁴ | 0.9645 | 1.8914 | 0.6331 | | |
| Green | 0.0052 | 0.0071 | 0.8920 | 9.04 × 10⁻⁸ | 0.9808 | 1.1767 | 0.6516 | | |
| Blue | 0.0049 | 0.0062 | 0.7824 | 9.33 × 10⁻⁸ | 0.9764 | 1.6191 | 0.4542 | | |
| SW1 | 0.0100 | 0.0125 | 0.9652 | 5.12 × 10⁻² | 0.9558 | 0.7622 | 0.8761 | | |
| SW2 | 0.0083 | 0.0111 | 0.9356 | 2.35 × 10⁻¹ | 0.9511 | 0.7622 | 0.7733 | | |

STI-FM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0219 | 0.0403 | 0.7629 | 1.15 × 10⁻⁷ | 0.8777 | 1.5255 | 0.6898 | 0.6672 | 1.9131 |
| Red | 0.0059 | 0.0095 | 0.7765 | 1.85 × 10⁻³ | 0.9525 | 2.1092 | 0.6087 | | |
| Green | 0.0059 | 0.0096 | 0.7337 | 1.17 × 10⁻⁷ | 0.9644 | 1.5609 | 0.6051 | | |
| Blue | 0.0044 | 0.0078 | 0.5346 | 0 | 0.9622 | 2.1301 | 0.4146 | | |
| SW1 | 0.0112 | 0.0230 | 0.8793 | 0.0972 | 0.9362 | 1.3756 | 0.8102 | | |
| SW2 | 0.0089 | 0.0147 | 0.8825 | 0.3051 | 0.9379 | 1.8564 | 0.7184 | | |

HCM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0078 | 0.0113 | 0.9821 | 1.50 × 10⁻¹ | 0.9718 | 0.4632 | 0.9403 | 0.8291 | 0.9943 |
| Red | 0.0030 | 0.0042 | 0.9395 | 2.60 × 10⁻² | 0.9848 | 1.2648 | 0.8047 | | |
| Green | 0.0026 | 0.0035 | 0.9471 | 7.93 × 10⁻⁴ | 0.9899 | 0.7675 | 0.7970 | | |
| Blue | 0.0030 | 0.0040 | 0.8326 | 1.09 × 10⁻⁷ | 0.9839 | 1.4608 | 0.5031 | | |
| SW1 | 0.0055 | 0.0083 | 0.9828 | 1.11 × 10⁰ | 0.9769 | 0.5793 | 0.9240 | | |
| SW2 | 0.0045 | 0.0065 | 0.9748 | 2.21 × 10⁰ | 0.9766 | 0.9337 | 0.8708 | | |

STARFM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0105 | 0.0414 | 0.8313 | 4.24 × 10⁻¹ | 0.9482 | 1.7106 | 0.8982 | 0.7376 | 6.9867 |
| Red | 0.0038 | 0.0280 | 0.4243 | 1.44 × 10⁻¹ | 0.9781 | 8.5351 | 0.7662 | | |
| Green | 0.0031 | 0.0164 | 0.5508 | 4.63 × 10⁻² | 0.9863 | 3.6126 | 0.7692 | | |
| Blue | 0.0033 | 0.0062 | 0.7186 | 3.44 × 10⁻³ | 0.9824 | 2.1397 | 0.5094 | | |
| SW1 | 0.0165 | 0.1021 | 0.5782 | 2.41 × 10⁰ | 0.9408 | 7.8226 | 0.8298 | | |
| SW2 | 0.0102 | 0.0743 | 0.458 | 2.52 × 10⁰ | 0.9437 | 11.5312 | 0.7689 | | |

FSDAF

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0083 | 0.0123 | 0.9787 | 1.49 × 10⁻¹ | 0.9651 | 0.5048 | 0.9314 | 0.8027 | 1.0761 |
| Red | 0.0032 | 0.0045 | 0.9347 | 2.08 × 10⁻² | 0.9835 | 1.3653 | 0.7770 | | |
| Green | 0.0028 | 0.0040 | 0.9314 | 7.93 × 10⁻⁴ | 0.9886 | 0.8838 | 0.7665 | | |
| Blue | 0.0035 | 0.0046 | 0.8392 | 8.98 × 10⁻⁸ | 0.9808 | 1.5633 | 0.4958 | | |
| SW1 | 0.0061 | 0.0093 | 0.9782 | 9.56 × 10⁻¹ | 0.9723 | 0.6613 | 0.9089 | | |
| SW2 | 0.0050 | 0.0071 | 0.9703 | 2.06 × 10⁰ | 0.972 | 0.9996 | 0.8414 | | |

STI-FM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0097 | 0.0139 | 0.9728 | 1.77 × 10⁻¹ | 0.9576 | 0.5706 | 0.9184 | 0.7565 | 1.4495 |
| Red | 0.0042 | 0.0056 | 0.9006 | 2.60 × 10⁻² | 0.9699 | 1.6338 | 0.6923 | | |
| Green | 0.0040 | 0.0053 | 0.9097 | 7.93 × 10⁻⁴ | 0.9767 | 1.1121 | 0.6672 | | |
| Blue | 0.0053 | 0.0067 | 0.5534 | 1.09 × 10⁻⁷ | 0.9572 | 2.7313 | 0.2374 | | |
| SW1 | 0.0065 | 0.0096 | 0.9767 | 1.18 × 10⁰ | 0.9696 | 0.6715 | 0.9088 | | |
| SW2 | 0.0055 | 0.0081 | 0.9614 | 2.22 × 10⁰ | 0.9656 | 1.1400 | 0.8340 | | |

HCM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0740 | 0.0945 | 0.2210 | 9.98 × 10⁻⁸ | 0.4816 | 1.8595 | 0.2341 | 0.5901 | 3.4322 |
| Red | 0.0119 | 0.0175 | 0.6700 | 1.04 × 10⁻⁷ | 0.8732 | 5.1146 | 0.4439 | | |
| Green | 0.0093 | 0.0116 | 0.7712 | 1.04 × 10⁻⁷ | 0.9441 | 2.3015 | 0.4824 | | |
| Blue | 0.0095 | 0.0119 | 0.7846 | 1.24 × 10⁻⁷ | 0.8984 | 4.9286 | 0.2943 | | |
| SW1 | 0.0212 | 0.0283 | 0.7789 | 2.73 × 10⁻³ | 0.8565 | 1.2631 | 0.6754 | | |
| SW2 | 0.0221 | 0.0288 | 0.6564 | 5.73 × 10⁻³ | 0.8198 | 3.1785 | 0.4630 | | |

STARFM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0650 | 0.0857 | 0.2737 | 5.39 × 10⁻² | 0.5057 | 1.6615 | 0.1906 | 0.5031 | 11.5752 |
| Red | 0.0182 | 0.0803 | 0.1716 | 1.03 × 10⁰ | 0.8331 | 24.7442 | 0.4145 | | |
| Green | 0.0092 | 0.0232 | 0.4432 | 5.62 × 10⁻² | 0.9426 | 4.3045 | 0.5394 | | |
| Blue | 0.0085 | 0.0226 | 0.3576 | 5.74 × 10⁻² | 0.9261 | 7.2917 | 0.4620 | | |
| SW1 | 0.0236 | 0.0434 | 0.6450 | 1.35 × 10⁻¹ | 0.8358 | 1.9414 | 0.6305 | | |
| SW2 | 0.0312 | 0.0794 | 0.3244 | 7.93 × 10⁻¹ | 0.7151 | 8.6564 | 0.3562 | | |

FSDAF

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0572 | 0.0732 | 0.4771 | 8.12 × 10⁻⁸ | 0.5774 | 1.4157 | 0.261 | 0.6253 | 3.0218 |
| Red | 0.0129 | 0.0190 | 0.6737 | 8.61 × 10⁻⁸ | 0.8503 | 5.1000 | 0.4873 | | |
| Green | 0.0090 | 0.0113 | 0.7889 | 8.36 × 10⁻⁸ | 0.9444 | 2.1736 | 0.5351 | | |
| Blue | 0.0068 | 0.0089 | 0.7962 | 1.05 × 10⁻⁷ | 0.9475 | 2.6787 | 0.5365 | | |
| SW1 | 0.0220 | 0.0291 | 0.7705 | 1.82 × 10⁻³ | 0.8482 | 1.3084 | 0.6457 | | |
| SW2 | 0.0274 | 0.0342 | 0.5922 | 2.60 × 10⁻³ | 0.7486 | 3.6338 | 0.4224 | | |

STI-FM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0623 | 0.0791 | 0.2939 | 9.51 × 10⁻⁸ | 0.5474 | 1.5191 | 0.1750 | 0.4610 | 3.1275 |
| Red | 0.0110 | 0.0199 | 0.4640 | 1.10 × 10⁻⁷ | 0.8718 | 5.2349 | 0.2323 | | |
| Green | 0.0084 | 0.0131 | 0.6456 | 1.27 × 10⁻⁷ | 0.9370 | 2.2967 | 0.3297 | | |
| Blue | 0.0091 | 0.0134 | 0.6248 | 1.15 × 10⁻⁷ | 0.9031 | 5.4819 | 0.1646 | | |
| SW1 | 0.0174 | 0.0245 | 0.7495 | 2.60 × 10⁻³ | 0.8791 | 1.0360 | 0.6897 | | |
| SW2 | 0.0145 | 0.0277 | 0.5489 | 5.21 × 10⁻³ | 0.8400 | 2.9161 | 0.3632 | | |

HCM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0257 | 0.0353 | 0.8700 | 1.06 × 10⁻⁷ | 0.8437 | 0.7735 | 0.7937 | 0.7732 | 1.9607 |
| Red | 0.0107 | 0.0167 | 0.7977 | 1.05 × 10⁻⁷ | 0.9140 | 3.4840 | 0.6125 | | |
| Green | 0.0087 | 0.0126 | 0.7876 | 1.13 × 10⁻⁷ | 0.9476 | 1.9139 | 0.5692 | | |
| Blue | 0.0046 | 0.0070 | 0.8804 | 1.12 × 10⁻⁷ | 0.9743 | 1.8618 | 0.7254 | | |
| SW1 | 0.0113 | 0.0170 | 0.8793 | 3.64 × 10⁻³ | 0.9364 | 0.7327 | 0.8441 | | |
| SW2 | 0.0112 | 0.0175 | 0.8456 | 9.11 × 10⁻³ | 0.9246 | 1.6148 | 0.7769 | | |

STARFM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0306 | 0.0539 | 0.7734 | 1.44 × 10⁻¹ | 0.8176 | 1.1639 | 0.7522 | 0.7701 | 4.3193 |
| Red | 0.0091 | 0.0306 | 0.4349 | 1.16 × 10⁻¹ | 0.9419 | 5.4824 | 0.6861 | | |
| Green | 0.0087 | 0.0296 | 0.3512 | 1.15 × 10⁻¹ | 0.9606 | 4.0248 | 0.6216 | | |
| Blue | 0.0049 | 0.0279 | 0.3113 | 1.15 × 10⁻¹ | 0.9772 | 6.7902 | 0.7572 | | |
| SW1 | 0.0117 | 0.0375 | 0.6222 | 1.45 × 10⁻¹ | 0.9353 | 1.5759 | 0.8376 | | |
| SW2 | 0.0111 | 0.0357 | 0.5597 | 1.46 × 10⁻¹ | 0.9271 | 3.1603 | 0.7723 | | |

FSDAF

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0261 | 0.0358 | 0.8756 | 8.70 × 10⁻⁸ | 0.848 | 0.7723 | 0.7903 | 0.7823 | 1.3975 |
| Red | 0.0080 | 0.0119 | 0.8325 | 8.64 × 10⁻⁸ | 0.9455 | 2.1292 | 0.6969 | | |
| Green | 0.0073 | 0.0096 | 0.8285 | 9.36 × 10⁻⁸ | 0.9635 | 1.3114 | 0.6521 | | |
| Blue | 0.0048 | 0.0068 | 0.8908 | 9.50 × 10⁻⁸ | 0.9758 | 1.5796 | 0.7368 | | |
| SW1 | 0.0105 | 0.0160 | 0.8771 | 1.17 × 10⁻³ | 0.9383 | 0.6711 | 0.8502 | | |
| SW2 | 0.0101 | 0.0158 | 0.8450 | 4.69 × 10⁻³ | 0.9315 | 1.3832 | 0.7875 | | |

STI-FM

| Band | AD | RMSE | CC | SAM | SSIM | ERGAS | Q2N | Overall Q2N | Overall ERGAS |
|---|---|---|---|---|---|---|---|---|---|
| NIR | 0.0268 | 0.0364 | 0.8540 | 1.08 × 10⁻⁷ | 0.8398 | 0.7923 | 0.7766 | 0.7489 | 1.5995 |
| Red | 0.0095 | 0.0136 | 0.7710 | 1.18 × 10⁻⁷ | 0.9366 | 2.4225 | 0.6135 | | |
| Green | 0.0095 | 0.0118 | 0.7414 | 1.07 × 10⁻⁷ | 0.9567 | 1.5699 | 0.5094 | | |
| Blue | 0.0043 | 0.0064 | 0.8785 | 1.13 × 10⁻⁷ | 0.9784 | 1.5804 | 0.7420 | | |
| SW1 | 0.0133 | 0.0180 | 0.8651 | 1.30 × 10⁻³ | 0.9396 | 0.7362 | 0.7986 | | |
| SW2 | 0.0106 | 0.0168 | 0.8252 | 5.47 × 10⁻³ | 0.9275 | 1.4820 | 0.7692 | | |

| Method | RMSE | CC | SAM | SSIM |
|---|---|---|---|---|
| Landsat 1 | 0.0850 | 0.8370 | 0.0290 | 0.9190 |
| FSDAF | 0.0240 | 0.9860 | 0.0050 | 0.9110 |
| HCM | 0.0320 | 0.9770 | 0.0110 | 0.9460 |

Cluster 50 × 50

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0111 | 0.9573 | 0.0100 | 0.9699 |
| Red | 0.0060 | 0.9348 | 0.0060 | 0.9475 |
| Green | 0.0050 | 0.8999 | 0.0060 | 0.9114 |

Cluster 20 × 20

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0110 | 0.9580 | 0.0100 | 0.9699 |
| Red | 0.0059 | 0.9353 | 0.0060 | 0.9475 |
| Green | 0.0050 | 0.9023 | 0.0060 | 0.9114 |

Cluster 5 × 5

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0105 | 0.9615 | 0.0100 | 0.9699 |
| Red | 0.0059 | 0.9372 | 0.0060 | 0.9475 |
| Green | 0.0048 | 0.9086 | 0.0060 | 0.9114 |

Cluster 50 × 50

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0359 | 0.8695 | 0.0353 | 0.8700 |
| Red | 0.0171 | 0.7948 | 0.0167 | 0.7977 |
| Green | 0.0124 | 0.7857 | 0.0126 | 0.7876 |
| Blue | 0.0082 | 0.8724 | 0.0070 | 0.8804 |
| SW1 | 0.0171 | 0.8789 | 0.0170 | 0.8793 |
| SW2 | 0.0178 | 0.8444 | 0.0175 | 0.8456 |

Cluster 20 × 20

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0360 | 0.8687 | 0.0353 | 0.8700 |
| Red | 0.0170 | 0.7959 | 0.0167 | 0.7977 |
| Green | 0.0124 | 0.7858 | 0.0126 | 0.7876 |
| Blue | 0.0081 | 0.8729 | 0.0070 | 0.8804 |
| SW1 | 0.0171 | 0.8785 | 0.0170 | 0.8793 |
| SW2 | 0.0178 | 0.8443 | 0.0175 | 0.8456 |

Cluster 5 × 5

| Band | Cluster-based HCM RMSE | Cluster-based HCM CC | Local-based HCM RMSE | Local-based HCM CC |
|---|---|---|---|---|
| NIR | 0.0369 | 0.8603 | 0.0353 | 0.8700 |
| Red | 0.0173 | 0.7885 | 0.0167 | 0.7977 |
| Green | 0.0125 | 0.7791 | 0.0126 | 0.7876 |
| Blue | 0.0080 | 0.8725 | 0.0070 | 0.8804 |
| SW1 | 0.0173 | 0.8757 | 0.0170 | 0.8793 |
| SW2 | 0.0179 | 0.8403 | 0.0175 | 0.8456 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kwan, C.; Budavari, B.; Gao, F.; Zhu, X. A Hybrid Color Mapping Approach to Fusing MODIS and Landsat Images for Forward Prediction. Remote Sens. 2018, 10, 520. https://doi.org/10.3390/rs10040520
