A Novel Affine and Contrast Invariant Descriptor for Infrared and Visible Image Registration

Abstract

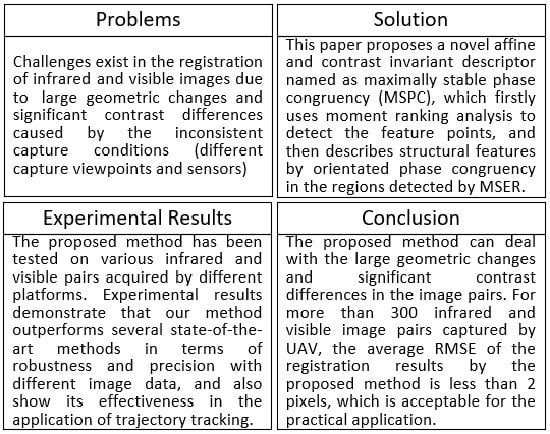

1. Introduction

2. Related Works

3. Methodology

3.1. Salient Feature Points Detection

- (1) Compute the moment analysis equations at each point in the image from the phase congruency value determined at each orientation.

- (2) Obtain the minimum moment matrix and the principal axis matrix from these moments.

- (1) Compute the minimum moment matrix at each point in the input image using Equations (2)–(6).

- (2) To ensure the significance of the feature points, obtain candidate feature points by thresholding the minimum moment matrix, where the threshold is the mean of the values larger than 0.1 and is therefore adaptive to the matrix.

- (3) To distribute the feature points uniformly, apply non-maximum suppression to the candidates within a local neighborhood.

- (4) Build the significance ranking space by sorting the remaining positions by their corresponding minimum moment values, from maximum to minimum.

- (5) Select the top-ranked entries of the significance ranking space as the salient feature points (SFP).
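The selection steps above can be sketched as follows. This is a minimal illustration rather than the authors' implementation. It assumes the per-orientation phase congruency images are already available as an array `pc_stack`, and it uses the standard moment analysis of phase congruency due to Kovesi for the minimum moment and principal axis. The strict `>` comparison, the suppression window `radius`, and the count `top_n` are hypothetical choices, since their values are not stated in this text.

```python
import numpy as np


def minimum_moment(pc_stack, thetas):
    """Moment analysis of phase congruency (standard Kovesi form, assumed here).

    pc_stack: array (K, H, W), phase congruency per orientation.
    thetas:   array (K,), orientation angles in radians.
    Returns the minimum moment map and the principal axis map, both (H, W).
    """
    cos_t = np.cos(thetas)[:, None, None]
    sin_t = np.sin(thetas)[:, None, None]
    a = np.sum((pc_stack * cos_t) ** 2, axis=0)
    b = 2.0 * np.sum((pc_stack * cos_t) * (pc_stack * sin_t), axis=0)
    c = np.sum((pc_stack * sin_t) ** 2, axis=0)
    min_moment = 0.5 * (c + a - np.sqrt(b ** 2 + (a - c) ** 2))
    principal_axis = 0.5 * np.arctan2(b, a - c)
    return min_moment, principal_axis


def select_salient_points(min_moment, floor=0.1, radius=1, top_n=100):
    """Adaptive threshold, non-maximum suppression, then significance ranking."""
    strong = min_moment[min_moment > floor]
    if strong.size == 0:
        return []
    threshold = strong.mean()  # mean of the values above the 0.1 floor
    h, w = min_moment.shape
    padded = np.pad(min_moment, radius, mode="constant",
                    constant_values=-np.inf)
    # Local maximum over a (2*radius+1)^2 window, built from shifted views.
    local_max = np.full((h, w), -np.inf)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    keep = (min_moment > threshold) & (min_moment >= local_max)
    rows, cols = np.nonzero(keep)
    # Rank surviving positions from the largest minimum moment downwards.
    order = np.argsort(-min_moment[rows, cols], kind="stable")[:top_n]
    return [(int(rows[i]), int(cols[i])) for i in order]
```

A point with strong responses at every orientation (corner-like) receives a large minimum moment, whereas a point that responds at a single orientation (edge-like) receives a value near zero, which is why ranking on this quantity favors well-localized points.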

3.2. Maximally Stable Phase Congruency Descriptor

3.2.1. Structural Features Extraction

- (1) Compute the phase congruency images at the different orientations, together with the principal axis matrix, from the input image using Equations (2)–(7).

- (2) To capture the significance of the structural features across the image as fully as possible, construct the structural features image (SFI) from the orientation-specific phase congruency images according to the principal axis matrix. The value at each position in the SFI is expressed in terms of the phase congruency image corresponding to each orientation.
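One possible reading of the construction above is sketched below. The exact selection rule is not preserved in this text, so the rule used here is an assumption: at each pixel, the SFI takes the phase congruency value from the orientation closest to the principal axis. The names `pc_stack`, `thetas`, and `principal_axis` are hypothetical.

```python
import numpy as np


def structural_features_image(pc_stack, thetas, principal_axis):
    """Assumed rule: per pixel, keep the PC value at the orientation
    nearest to the principal axis.

    pc_stack:       array (K, H, W), phase congruency per orientation.
    thetas:         array (K,), orientation angles in radians.
    principal_axis: array (H, W), principal axis angle in radians.
    """
    # Orientations are periodic with period pi, so wrap the difference
    # into [-pi/2, pi/2) before taking its magnitude.
    diff = principal_axis[None, :, :] - thetas[:, None, None]
    dist = np.abs((diff + np.pi / 2) % np.pi - np.pi / 2)
    nearest = np.argmin(dist, axis=0)  # (H, W) index of the closest orientation
    return np.take_along_axis(pc_stack, nearest[None, :, :], axis=0)[0]
```

Wrapping the angular difference matters because an axis near π is close to the orientation 0, not far from it.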

3.2.2. Affine Invariant Structural Descriptor

- (1) Compute the scale and orientation using Equations (14)–(20) for each feature point extracted by MSFPE.

- (2) Estimate the coarse rectangular shape of the feature point’s neighborhood using Equation (21).

- (3) Obtain the fine elliptical region for the feature point by applying MSER to the coarse rectangular region on the SFI obtained from Equation (11).

- (4) Normalize the elliptical region to a circular region according to its long axis to ensure the affine invariance of the descriptor.

- (5) Calculate the weighted statistical histogram over four orientations from the structural feature values in the circular region, with a weight computed for each orientation.

- (6) Normalize the orientation histogram to form the descriptor.
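Steps (4) to (6) above can be illustrated with a short sketch. It is an assumption-laden example, not the paper's formulation: the ellipse is described by hypothetical parameters `semi_major`, `semi_minor`, and rotation `phi`; each pixel is assumed to vote into one of four orientation bins with its structural feature value as the weight; and L2 normalization is assumed because the normalization formula is not preserved in this text.

```python
import numpy as np


def ellipse_to_circle(semi_major, semi_minor, phi):
    """2x2 map sending an origin-centred ellipse to a circle of radius semi_major."""
    c, s = np.cos(phi), np.sin(phi)
    rot = np.array([[c, -s], [s, c]])  # ellipse axes -> image axes
    stretch = np.diag([1.0, semi_major / semi_minor])  # lengthen the short axis
    # Rotate into the ellipse frame, stretch, then rotate back.
    return rot @ stretch @ rot.T


def orientation_descriptor(values, bins, n_bins=4):
    """Sum structural feature values per orientation bin, then L2-normalize.

    values: float array of structural feature values in the circular region.
    bins:   non-negative integer array of the same shape, one bin index per pixel.
    """
    hist = np.bincount(bins.ravel(), weights=values.ravel(), minlength=n_bins)
    norm = np.linalg.norm(hist)
    return hist / norm if norm > 0 else hist
```

Stretching along the short axis up to the long axis leaves the long-axis scale unchanged, so two views of the same region related by an affine distortion map to the same circle up to rotation.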

3.3. Registration Using the MSPC Descriptor

- (1) Compute the phase congruency images using Log-Gabor filters over the scales and orientations from infrared and visible images, respectively.

- (2) Extract the salient feature points based on the moment analysis of the phase congruency images by the MSFPE algorithm proposed in Section 3.1.

- (3) Construct the structural features using the multi-orientation phase congruency by the SFE algorithm presented in Section 3.2.

- (4) Generate the descriptors for the salient feature points using the construction algorithm of the MSPC designed in Section 3.2.

- (5) Find the matching points via the minimization of the Euclidean distances between the descriptors and refine the matching with random sample consensus (RANSAC).

- (6) Obtain the transformation from the matching and achieve the image registration.
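Steps (5) and (6) above can be sketched with nearest-neighbor matching followed by a basic RANSAC loop. The sketch assumes an affine transformation model, which this section does not state explicitly. The function names and the parameters `n_iters`, `tol`, and `seed` are hypothetical.

```python
import numpy as np


def match_descriptors(desc_a, desc_b):
    """For each row of desc_a, index of the nearest row of desc_b (Euclidean)."""
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    return np.argmin(dists, axis=1)


def fit_affine(src, dst):
    """Least-squares 2x3 affine matrix M such that dst ~= [src, 1] @ M.T."""
    design = np.hstack([src, np.ones((len(src), 1))])  # (N, 3)
    solution, *_ = np.linalg.lstsq(design, dst, rcond=None)  # (3, 2)
    return solution.T


def ransac_affine(src, dst, n_iters=500, tol=2.0, seed=0):
    """Return (affine matrix, inlier mask) from putative matches src[i] <-> dst[i]."""
    rng = np.random.default_rng(seed)
    design = np.hstack([src, np.ones((len(src), 1))])
    best_mask = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        # Three correspondences determine an affine transformation.
        sample = rng.choice(len(src), size=3, replace=False)
        model = fit_affine(src[sample], dst[sample])
        residuals = np.linalg.norm(design @ model.T - dst, axis=1)
        mask = residuals < tol
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < 3:
        raise ValueError("RANSAC found fewer than three inliers")
    # Refit on all inliers of the best hypothesis.
    return fit_affine(src[best_mask], dst[best_mask]), best_mask
```

In use, `match_descriptors` would pair infrared and visible descriptors, the matched point coordinates would be passed as `src` and `dst`, and the returned matrix would be used to warp one image onto the other.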

4. Experimental Results and Analysis

4.1. Comparative Experiments

4.2. Validity Verification Experiments

4.3. Applied Experiments

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Metric | Image Pairs | MM-SURF | FVS-DR | LFI | MRLSD | HOPC | Our Method |
|---|---|---|---|---|---|---|---|
| Precision | (a) | 40.72 | 75.36 | 80.22 | 85.58 | 87.13 | 91.85 |
| Precision | (b) | 35.14 | 77.81 | 82.56 | 88.72 | 93.37 | 97.78 |
| Precision | (c) | 22.31 | 73.30 | 77.28 | 82.15 | 91.26 | 96.65 |
| Precision | (d) | 9.84 | 69.81 | 75.95 | 78.31 | 81.54 | 90.21 |
| Repeatability | (a) | 10.83 | 20.48 | 28.47 | 35.19 | 32.24 | 39.60 |
| Repeatability | (b) | 5.77 | 14.63 | 25.23 | 33.64 | 35.79 | 42.80 |
| Repeatability | (c) | 3.23 | 11.12 | 21.41 | 20.33 | 23.82 | 26.00 |
| Repeatability | (d) | 2.18 | 6.42 | 15.52 | 19.97 | 17.82 | 24.80 |

| Image Pairs | MM-SURF | FVS-DR | LFI | MRLSD | HOPC | Our Method |
|---|---|---|---|---|---|---|
| (a) | 2.61 | 2.44 | 3.54 | 1.57 | 2.11 | 0.82 |
| (b) | 3.36 | 2.88 | 2.72 | ---- | 3.63 | 1.23 |
| (c) | 4.68 | 3.39 | 3.66 | 2.35 | 4.55 | 0.76 |
| (d) | 3.97 | 3.73 | 4.19 | 2.56 | 4.62 | 0.58 |
| (e) | ---- | ---- | 5.57 | 3.12 | ---- | 1.37 |
| (f) | ---- | ---- | 4.81 | 3.38 | 2.26 | 1.41 |

| Method | MM-SURF | FVS-DR | LFI | MRLSD | HOPC | Our Method |
|---|---|---|---|---|---|---|
| Run time | 0.8 s | 1.85 s | 2.8 s | 2.5 s | 15.8 s | 2.1 s |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, X.; Ai, Y.; Zhang, J.; Wang, Z. A Novel Affine and Contrast Invariant Descriptor for Infrared and Visible Image Registration. Remote Sens. 2018, 10, 658. https://doi.org/10.3390/rs10040658
