Unsupervised Classification Algorithm for Early Weed Detection in Row-Crops by Combining Spatial and Spectral Information

Abstract

1. Introduction

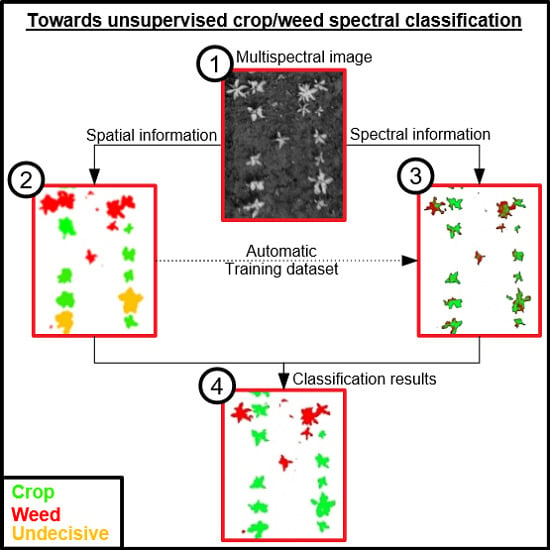

- (1) A classification method is developed that combines spectral and spatial information and uses the spatial information to automatically build the training dataset for a supervised classifier, without requiring any manual selection of soil, crop, or weed pixels; and

- (2) The contribution of the spatial information alone, the spectral information alone, and the combination of spectral and spatial information is analyzed with respect to classification quality for a set of images captured in sugar beet and maize fields.
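To make contribution (1) concrete, here is a minimal Python sketch, assuming each vegetation pixel carries a spectral feature vector and a flag from the spatial method saying whether it lies inside a crop row. The text here does not name the supervised classifier, so a nearest-centroid rule stands in for it; the function names and the two-band sample values are hypothetical.

```python
from statistics import fmean


def build_training_set(features, in_row):
    """Label vegetation pixels from spatial information only.

    features -- one spectral feature vector (tuple of band values) per vegetation pixel
    in_row   -- True if the spatial method placed that pixel inside a crop row
    Assumed rule: in-row pixels become crop samples, inter-row pixels weed samples.
    """
    if len(features) != len(in_row):
        raise ValueError("features and in_row must have the same length")
    return [(f, "crop" if row else "weed") for f, row in zip(features, in_row)]


def train_nearest_centroid(samples):
    """Stand-in supervised classifier: the mean spectral vector of each class."""
    by_class = {}
    for f, label in samples:
        by_class.setdefault(label, []).append(f)
    return {
        label: tuple(fmean(band) for band in zip(*vectors))
        for label, vectors in by_class.items()
    }


def predict(centroids, f):
    """Return the class whose centroid is nearest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((x - c) ** 2 for x, c in zip(f, centroids[label])),
    )


if __name__ == "__main__":
    pixels = [(0.10, 0.60), (0.12, 0.58), (0.30, 0.40), (0.28, 0.42)]
    in_row = [True, True, False, False]
    centroids = train_nearest_centroid(build_training_set(pixels, in_row))
    print(predict(centroids, (0.13, 0.57)))  # crop
```

No pixel is labelled by hand: the row geometry supplies the labels and the spectral classifier generalises them. Under this assumed rule, any weed growing inside a row enters the training set labelled as crop, so the labels are noisy by construction.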

2. Materials and Methods

2.1. Site Description and Data Collection

2.1.1. Experimental Sites

2.1.2. Field Data Acquisition

2.1.3. Multispectral Imagery Acquisition

2.2. Data Processing and Analysis

2.2.1. Algorithm Based on Spatial Information

2.2.2. Algorithm Based on Spectral Information

- $\mathrm{class}_i(k)$ is 1 when pixel $i$ is from class $k$;

- $\mathrm{class}_i(k)$ is 0 when pixel $i$ is not from class $k$;

- is the belonging rate of the connected component in class $k$;

- $k$ is crop or weed;

- is the number of pixels in the connected component; and

- is the estimated vegetation rate of pixel $i$.
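The equation these terms belong to is not given in this text. The sketch below assumes one plausible form: each pixel of a connected component votes for its spectral class with a weight equal to its vegetation rate, normalised by the component's total vegetation rate, i.e. $\tau_k = \sum_{i=1}^{N} \mathrm{class}_i(k)\, v_i \,/\, \sum_{i=1}^{N} v_i$, where $\tau_k$ and $v_i$ are hypothetical names for the belonging rate and the vegetation rate. This is an assumption, not the authors' published equation, and the threshold used to flag a component as indecisive is likewise hypothetical.

```python
def belonging_rates(classes, veg_rates, labels=("crop", "weed")):
    """Hypothetical belonging rate of one connected component to each class.

    classes   -- per-pixel class labels from the spectral classifier
    veg_rates -- per-pixel estimated vegetation rate (0..1)
    Assumed form: sum of veg_rate over pixels of class k, divided by the
    sum of veg_rate over all N pixels of the component.
    """
    if len(classes) != len(veg_rates):
        raise ValueError("classes and veg_rates must have the same length")
    total = sum(veg_rates)
    if total == 0:
        raise ValueError("component contains no vegetation")
    return {
        k: sum(v for c, v in zip(classes, veg_rates) if c == k) / total
        for k in labels
    }


def component_label(rates, threshold=0.8):
    """Return (best class, decisive?); threshold is a hypothetical parameter."""
    label = max(rates, key=rates.get)
    return label, rates[label] >= threshold


if __name__ == "__main__":
    rates = belonging_rates(["crop", "crop", "weed"], [1.0, 0.5, 0.5])
    print(rates)                   # {'crop': 0.75, 'weed': 0.25}
    print(component_label(rates))  # ('crop', False)
```

A component whose best rate falls below the threshold would be a candidate for the "indecisive" crop and weed map described in Section 2.2.3.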

2.2.3. Weed Detection Procedure Combining Spatial and Spectral Methods

- crop and weed map: pixels inside or outside the crop rows;

- indecisive crop and weed map: pixels classified as crop or weed with less certainty, obtained from steps 3 to 5 described in Figure 4; and

- row map.

- inter-row pixels are classified as weed (i.e., from the spatial method); and

- the classes of in-row pixels come from the spectral method results.
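The combination rule listed above can be sketched as follows, assuming the maps are stored as same-sized 2D lists: a boolean row map from the spatial method, a per-pixel "crop"/"weed" label from the spectral method, and a boolean vegetation mask. The function name and the use of `None` for soil pixels are illustrative choices, not part of the source.

```python
def combine_maps(row_map, spectral_map, vegetation):
    """Merge spatial and spectral results pixel by pixel.

    row_map      -- True where the spatial method detected a crop row
    spectral_map -- 'crop' or 'weed' as predicted by the spectral method
    vegetation   -- True where the pixel is vegetation (False = soil)
    Returns 'crop', 'weed', or None (soil) for every pixel.
    """
    combined = []
    for row_line, label_line, veg_line in zip(row_map, spectral_map, vegetation):
        out = []
        for in_row, label, is_veg in zip(row_line, label_line, veg_line):
            if not is_veg:
                out.append(None)    # soil: no crop/weed label
            elif not in_row:
                out.append("weed")  # inter-row vegetation -> weed (spatial rule)
            else:
                out.append(label)   # in-row vegetation -> spectral result
        combined.append(out)
    return combined


if __name__ == "__main__":
    row_map = [[True, False], [True, False]]
    spectral_map = [["crop", "crop"], ["weed", "weed"]]
    vegetation = [[True, True], [True, False]]
    print(combine_maps(row_map, spectral_map, vegetation))
    # [['crop', 'weed'], ['weed', None]]
```

The spatial rule overrides the spectral label only outside the rows, so the spectral classifier is relied upon exactly where row geometry cannot separate crop from weed.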

2.2.4. Crop and Weed Detection Quality

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Acknowledgements

Conflicts of Interest


| Situations | | |
|---|---|---|
| Results on images of sugar beet field | 0.89 | 0.74 |
| Results on images of maize field | 0.86 | 0.84 |
| Results on all images | 0.88 | 0.79 |

| Situations | [–] | [] | | |
|---|---|---|---|---|
| Results on images of sugar beet field | 0.94 | 0.67 | [0.01–0.09] | [0.02–0.14] |
| Results on images of maize field | 0.76 | 0.83 | [0.01–0.05] | [0–0.06] |
| Results on all images | 0.85 | 0.75 | [0.01–0.09] | [0–0.14] |

| Situations | [–] | [–] | | |
|---|---|---|---|---|
| Results on images of sugar beet field | 0.92 | 0.81 | [0.01–0.04] | [0–0.06] |
| Results on images of maize field | 0.74 | 0.97 | [0.02–0.04] | [0–0.01] |
| Results on all images | 0.83 | 0.89 | [0.01–0.04] | [0–0.06] |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Louargant, M.; Jones, G.; Faroux, R.; Paoli, J.-N.; Maillot, T.; Gée, C.; Villette, S. Unsupervised Classification Algorithm for Early Weed Detection in Row-Crops by Combining Spatial and Spectral Information. Remote Sens. 2018, 10, 761. https://doi.org/10.3390/rs10050761
