Animal Detection Using Thermal Images and Its Required Observation Conditions

Abstract

1. Introduction

2. Methods

2.1. Applicable Evaluation

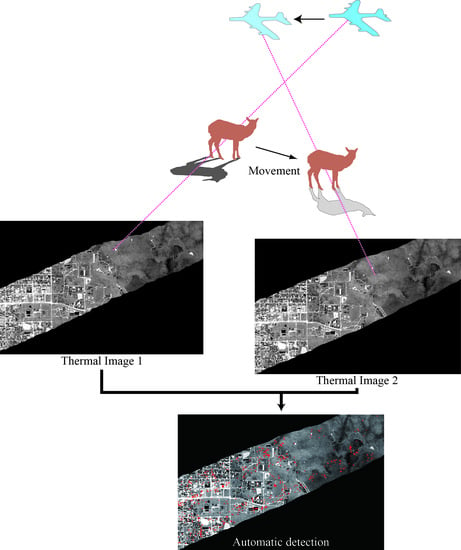

2.2. Airborne Platform Imagery

2.3. Methods for the Ground Experiment

2.4. Methods for the Unmanned Air Vehicle (UAV) Experiment

- Detection of moving targets: It is necessary to confirm that the DWA algorithm can automatically extract moving targets from thermal UAV images.

- Detection error of non-moving objects: The DWA algorithm was designed to avoid the extraction of non-moving objects. Accordingly, it is necessary to confirm that the DWA algorithm does not extract non-moving objects that only change in shape in thermal images.

- A walking human: A standing human on the road was photographed by a hovering UAV. The same walking human on the road was photographed again by the hovering UAV after the position of the UAV was moved.

- A standing human and dog that only change their poses: A standing human and dog on the grass were photographed by the hovering UAV. After they changed only their poses and the UAV was moved to a new position, they were photographed again by the hovering UAV.

2.5. Outline of the Computer-Aided Detection of Moving Wild Animals (DWA) Algorithm

2.6. Application of the Method to Thermal Images

- (1) Make binary images: Initially, binary images are constructed using the Laplacian histogram method [16] with a moving window function, the P-tile method [17], and the Otsu method [18] to extract objects whose surface temperature is higher than that of their surroundings. Binarization is performed only for pixels within the possible surface temperature range of sika deer. In this study, we set a threshold surface temperature range of 15.0–20.0 °C, based on a previous study showing that the surface temperature of sika deer in airborne thermal images was 17.0–18.0 °C [14].

- (2) Edge detection: Although many regions have surface temperatures higher than their surroundings, the change in surface temperature across them is typically gradual. The contours of sika deer, however, are more distinct. Therefore, edge detection using the Laplacian filter is performed.

- (3) Extract only overlapping objects: To integrate the results of (1) and (2), we extract only objects that overlap between (1) and (2).

- (4) Classify moving animal candidates according to area: We reject extracted objects whose size differs from that of the target objects in the thermal images, such as cars, artifacts, and humans. In this study, we set a threshold size range of 3–25 pixels, because the pixel resolution of the airborne thermal images is 40 cm and 50 cm and the head and body length of sika deer is 90–190 cm [6].

- (5) Compare two images: If part of a candidate object overlaps in different images, or if the surface temperature of the candidate object is almost equal for the same pixels in different images, the candidate is rejected and is not considered a moving object. In this study, we set a threshold surface temperature gap of 0 °C. Furthermore, we changed the threshold surface temperature gap from 0 to 0.5 °C, according to the gap in the average surface temperatures in each image.
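The five steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the authors' implementation: a single global Otsu threshold stands in for the combination of the Laplacian histogram, P-tile, and Otsu methods with a moving window; `edge_thresh` and the default `gap` are hypothetical parameters; the two temperature images (in °C) are assumed to be already co-registered; and "almost equal surface temperature" is interpreted as a per-pixel absolute difference no larger than `gap`.

```python
from collections import deque

import numpy as np


def otsu_threshold(values, bins=64):
    """Otsu's method: pick the histogram split with maximal between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                      # pixel count at or below each bin
    w1 = w0[-1] - w0                          # pixel count above each bin
    s0 = np.cumsum(hist * centers)
    m0 = s0 / np.maximum(w0, 1)               # mean of the lower class
    m1 = (s0[-1] - s0) / np.maximum(w1, 1)    # mean of the upper class
    between = w0 * w1 * (m0 - m1) ** 2
    return edges[1:][np.argmax(between)]      # upper edge of the best split bin


def laplacian(img):
    """4-neighbour Laplacian filter with edge-replicated borders."""
    p = np.pad(img, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * img


def label_components(mask):
    """Label 8-connected regions of a boolean mask by breadth-first search."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        count += 1
        labels[start] = count
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            for rr in range(max(r - 1, 0), min(r + 2, mask.shape[0])):
                for cc in range(max(c - 1, 0), min(c + 2, mask.shape[1])):
                    if mask[rr, cc] and not labels[rr, cc]:
                        labels[rr, cc] = count
                        queue.append((rr, cc))
    return labels, count


def candidates(temp, t_range=(15.0, 20.0), area_range=(3, 25), edge_thresh=1.0):
    """Steps (1)-(4): binarize, detect edges, keep overlapping objects, filter by area."""
    in_range = (temp >= t_range[0]) & (temp <= t_range[1])
    if not in_range.any():
        return []
    hot = in_range & (temp > otsu_threshold(temp[in_range]))    # step (1), simplified
    edges = np.abs(laplacian(temp)) > edge_thresh               # step (2), assumed threshold
    labels, n = label_components(hot)
    kept = []
    for k in range(1, n + 1):
        obj = labels == k
        has_edge = (obj & edges).any()                          # step (3)
        if has_edge and area_range[0] <= obj.sum() <= area_range[1]:  # step (4)
            kept.append(obj)
    return kept


def moving_objects(temp_a, temp_b, gap=0.5, **kwargs):
    """Step (5): reject candidates that overlap, or keep their temperature, in the other image."""
    other = np.zeros(temp_b.shape, dtype=bool)
    for obj in candidates(temp_b, **kwargs):
        other |= obj
    moving = []
    for obj in candidates(temp_a, **kwargs):
        overlaps = (obj & other).any()
        same_temp = np.all(np.abs(temp_a[obj] - temp_b[obj]) <= gap)
        if not overlaps and not same_temp:
            moving.append(obj)
    return moving


# Synthetic scene: one warm object that moves and one that stays in place.
image_a = np.full((20, 20), 15.5)
image_b = image_a.copy()
image_a[3:5, 3:6] = 18.0       # moving object, first position
image_b[3:5, 10:13] = 18.0     # moving object, second position
image_a[12:14, 12:15] = 18.0   # static warm object
image_b[12:14, 12:15] = 18.0
print(len(moving_objects(image_a, image_b)))  # 1
```

In this toy scene the static warm object is rejected because it overlaps itself in the second image, while the object that changed position is kept. Real imagery would need the moving-window binarization described in step (1) and a registration step before the comparison.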

3. Results

3.1. Applicability Results

3.2. Airborne Examination Results

4. Discussion

- The spatial resolution must be finer than one-fifth of the body length of the target species to automatically extract targets from remote sensing images.

- Objects under tree crowns do not appear in aerial images. The possibility of extracting moving wild animals decreases as the area of tree crowns in an image increases. Although the number of extracted moving wild animals must be corrected using the proportion of forest for population estimates, this correction is not necessary to track population change when the number of extracted animals is used as a population index.

- Wild animals exhibit well-defined activity patterns, such as sleeping, foraging, migration, feeding, and resting. To extract moving wild animals, the target species should be moving when the survey is conducted.

- When shooting intervals are too long, targets can move out of the area of overlap between two images. In contrast, when shooting intervals are too short, targets cannot be extracted because the movement distance in a given interval must be longer than the body length. Thus, shooting intervals should be decided after surveying the movement speed of the target species during the observation period.
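The resolution and shooting-interval conditions above can be turned into a small planning calculation. The sketch below is only illustrative: the function names are hypothetical, the upper bound assumes the worst case in which a target crosses the full length of the overlap area in a straight line, and the speed and overlap length in the example are made-up values rather than figures from this study.

```python
def max_pixel_size(body_length_m):
    """Coarsest usable spatial resolution: one-fifth of the target's body length."""
    return body_length_m / 5.0


def shooting_interval_bounds(body_length_m, speed_m_s, overlap_length_m):
    """Return (shortest, longest) shooting interval in seconds.

    shortest: the target must travel more than one body length between images.
    longest:  the target must not leave the overlap area (assumed worst case:
              it travels the full overlap length in a straight line).
    """
    if speed_m_s <= 0:
        raise ValueError("the target species must be moving (speed > 0)")
    return body_length_m / speed_m_s, overlap_length_m / speed_m_s


# Hypothetical example: 1.5 m body length, 1.0 m/s walking speed, 200 m overlap.
pixel = max_pixel_size(1.5)
shortest, longest = shooting_interval_bounds(1.5, 1.0, 200.0)
print(f"pixel size finer than {pixel:.2f} m, interval between {shortest:.1f} s and {longest:.1f} s")
```

If the shortest interval exceeds the longest one, no interval satisfies both conditions for that speed and overlap, which is why the movement speed needs to be surveyed first.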

5. Conclusions

- Pairs of thermal images should be obtained using the same observation geometry.

- The spatial resolution must be finer than one-fifth of the body length of the target species.

- Wild animals exhibit well-defined activity patterns, such as sleeping, foraging, migration, feeding, and resting. To extract moving wild animals, the target species should be moving when the survey is conducted.

- When shooting intervals are too long, targets can move out of the area of overlap between two images. In contrast, when shooting intervals are too short, targets cannot be extracted because the movement distance in a given interval must be longer than the body length. Thus, shooting intervals should be decided after surveying the movement speed of the target species during the observation period.

- The shooting interval causes a thermal gap between the two images because of radiative cooling. Furthermore, the rate of cooling is related to thermal conductivity, which differs according to material. Therefore, we strongly recommend that shooting be performed in the early morning.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Oishi, Y. The use of remote sensing to reduce friction between human and wild animals and sustainably manage biodiversity. J. Remote Sens. Soc. Jpn. 2016, 36, 152–155 (In Japanese with English Abstract).

- [2] Watanabe, T.; Okuyama, M.; Fukamachi, K. A review of Japan’s environmental policies for Satoyama and Satoumi landscape restoration. Glob. Environ. Res. 2012, 16, 125–135.

- [3] Annual Report on Food, Agriculture and Rural Area in Japan FY 2015. Available online: http://www.maff.go.jp/e/data/publish/attach/pdf/index-34.pdf (accessed on 17 August 2017).

- [4] Adaptive Management. Available online: http://www2.usgs.gov/sdc/doc/DOI-Adaptive ManagementTechGuide.pdf (accessed on 21 August 2017).

- [5] Lancia, R.A.; Braun, C.E.; Collopy, M.W.; Dueser, R.D.; Kie, J.G.; Martinka, C.J.; Nichols, J.D.; Nudds, T.D.; Porath, W.R.; Tilghman, N.G. ARM! For the future: Adaptive resource management in the wildlife profession. Wildl. Soc. Bull. 1996, 24, 436–442.

- [6] Ohdachi, S.D.; Ishibashi, Y.; Iwasa, M.A.; Saito, T. The Wild Mammals of Japan; Shoukadoh Book Sellers: Kyoto, Japan, 2009; ISBN 9784879746269.

- [7] Oishi, Y.; Matsunaga, T.; Nakasugi, O. Automatic detection of the tracks of wild animals in the snow in airborne remote sensing images and its use. J. Remote Sens. Soc. Jpn. 2010, 30, 19–30 (In Japanese with English Abstract).

- [8] Oishi, Y.; Matsunaga, T. Support system for surveying moving wild animals in the snow using aerial remote-sensing images. Int. J. Remote Sens. 2014, 35, 1374–1394.

- [9] Kissell, R.E., Jr.; Tappe, P.A. Assessment of thermal infrared detection rates using white-tailed deer surrogates. J. Ark. Acad. Sci. 2004, 58, 70–73.

- [10] Chretien, L.; Theau, J.; Menard, P. Visible and Thermal Infrared Remote Sensing for the Detection of White-tailed Deer Using an Unmanned Aerial System. Wildl. Soc. Bull. 2016, 40, 181–191.

- [11] Christiansen, P.; Steen, K.A.; Jorgensen, R.N.; Karstoft, H. Automated detection and recognition of wildlife using thermal cameras. Sensors 2014, 14, 13778–13793.

- [12] Terletzky, P.A.; Ramsey, R.D. Comparison of three techniques to identify and count individual animals in aerial imagery. J. Signal Inf. Process. 2016, 7, 123–135.

- [13] Maps and Geospatial Information. Available online: http://www.gsi.go.jp/kiban/index.html (accessed on 4 December 2017).

- [14] Tamura, A.; Miyasaka, S.; Yoshida, N.; Unome, S. New approach of data acquisition by state of the art airborne sensor: Detection of wild animal by airborne thermal sensor system. J. Adv. Surv. Technol. 2016, 108, 38–49 (In Japanese).

- [15] Steen, K.A.; Villa-Henriksen, A.; Therkildsen, O.R.; Green, O. Automatic detection of animals in mowing operations using thermal cameras. Sensors 2012, 12, 7587–7597.

- [16] Weszka, J.S.; Nagel, R.N.; Rosenfeld, A. A threshold selection technique. IEEE Trans. Comput. 1974, C-23, 1322–1326.

- [17] Doyle, W. Operation useful for similarity-invariant pattern recognition. J. Assoc. Comput. Mach. 1962, 9, 259–267.

- [18] Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, SMC-9, 62–66.

- [19] Li, Y.; Zhang, Y.; Yu, J.; Tan, Y.; Tian, J.; Ma, J. A novel spatio-temporal saliency approach for robust dim moving target detection from airborne infrared image sequences. Inf. Sci. 2016, 369, 548–563.

- [20] Li, Y.; Zhang, Y. Robust infrared small target detection using local steering kernel reconstruction. Pattern Recognit. 2018, 77, 113–125.

- [21] Takeda, H.; Farsiu, S.; Milanfar, P. Kernel regression for image processing and reconstruction. IEEE Trans. Image Process. 2007, 16, 346–366.

- [22] Imamoglu, N.; Zhang, C.; Shimoda, W.; Fang, Y.; Shi, B. Saliency Detection by Forward and Backward Cues in Deep-CNN. In Proceedings of the Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 July 2017.

| | The Number of Sika Deer Identified by Visual Inspection | The Number of Sika Deer Extracted by the Proposed Method | |
|---|---|---|---|
| Total | 357 | 849 | |
| Moving sika deer | 299 | 225 | Producer’s accuracy 75.3% |
| Stopping sika deer | 155 | | |
| Not in an overlapping area | 58 | | |
| | | | User’s accuracy 29.3% |
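The producer's accuracy in the table above can be reproduced from the two moving-deer counts. The sketch below assumes the usual definition (correctly extracted targets divided by the reference targets identified by visual inspection); the function name is hypothetical. It covers only the producer's accuracy, because the table does not itemize the counts behind the reported user's accuracy.

```python
def producers_accuracy(correctly_extracted, reference_total):
    """Percentage of reference targets that the method extracted."""
    return 100.0 * correctly_extracted / reference_total


# 225 moving sika deer extracted out of 299 identified by visual inspection.
print(round(producers_accuracy(225, 299), 1))  # 75.3
```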

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Oishi, Y.; Oguma, H.; Tamura, A.; Nakamura, R.; Matsunaga, T. Animal Detection Using Thermal Images and Its Required Observation Conditions. Remote Sens. 2018, 10, 1050. https://doi.org/10.3390/rs10071050
