Figure 1.

Location of study area and experimental design: (a) location of study area in China; (b) map showing Changping District in Beijing City; (c) design of treatments and images of the ground-measurement field acquired with a high-definition digital camera mounted on an unmanned aerial vehicle (UAV).

Figure 2.

UAV-UHD hyperspectral images and corresponding crop height on (a,b) 21 April; (c,d) 26 April; and (e,f) 13 May 2015.

Figure 3.

Digital camera images and corresponding crop height on (a,b) 21 April; (c,d) 26 April; and (e,f) 13 May 2015.

Figure 4.

Averaged hyperspectral reflectance spectra, averaged DN values, and crop height at the three growth stages: (a) G-hyperspectral; (b) UHD-hyperspectral; (c) calibrated DC-DN values; (d) G-height; (e) UHD-height; (f) DC-height. G- indicates data measured with the ground-based ASD spectrometer and measuring stick; UHD- indicates data measured with the UHD 185 mounted on the UAV; and DC- indicates data measured with the digital camera mounted on the UAV.

Figure 5.

Pearson correlation coefficients between VIs, crop height (H), AGB, and LAI: (a) G-VIs; (b) UHD-VIs; (c) DC-VIs.

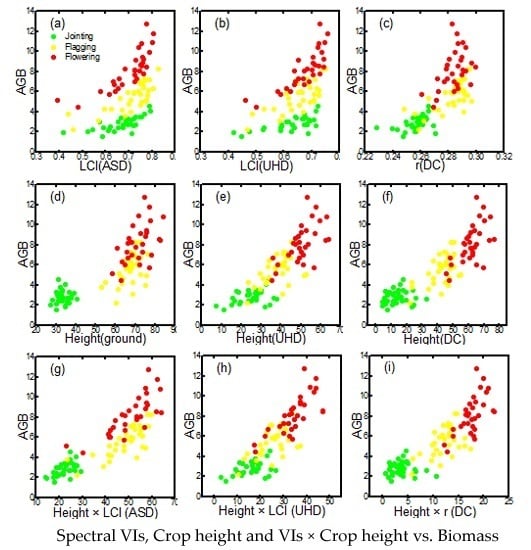

Figure 6.

Relationship between best VIs and AGB: (a) G-LCI; (b) UHD-LCI; (c) DC-r; (d) G-height; (e) UHD-height; (f) DC-height; (g) G-height × LCI; (h) UHD-height × LCI; (i) DC-height × r.

Figure 7.

Relationship between best VIs and LAI: (a) G-LCI; (b) UHD-LCI; (c) DC-B; (d) G-height; (e) UHD-height; (f) DC-height; (g) G-height × LCI; (h) UHD-height × LCI; (i) DC-height × B.

Figure 8.

Relationship between the predicted and measured winter wheat AGB (t/ha): (a) G-height, VIs, and PLSR; (b) G-height, VIs, and RF; (c) G-VIs and PLSR; (d) G-VIs and RF; (e) UHD-height, VIs, and PLSR; (f) UHD-height, VIs, and RF; (g) UHD-VIs and PLSR; (h) UHD-VIs and RF; (i) DC-height, VIs, and PLSR; (j) DC-height, VIs, and RF; (k) DC-VIs and PLSR; (l) DC-VIs and RF (validation dataset, mean AGB = 4.58 t/ha).

Figure 9.

Relationship between predicted and measured winter wheat LAI (m²/m²): (a) G-height, VIs, and PLSR; (b) G-height, VIs, and RF; (c) G-VIs and PLSR; (d) G-VIs and RF; (e) UHD-height, VIs, and PLSR; (f) UHD-height, VIs, and RF; (g) UHD-VIs and PLSR; (h) UHD-VIs and RF; (i) DC-height, VIs, and PLSR; (j) DC-height, VIs, and RF; (k) DC-VIs and PLSR; (l) DC-VIs and RF (validation dataset, mean LAI = 3.57 m²/m²).

Figure 10.

Above-ground biomass (t/ha) maps produced from UAV-UHD and UAV-DC images using PLSR: (a) UHD on 21 April; (b) UHD on 26 April; (c) UHD on 13 May; (d) DC on 21 April; (e) DC on 26 April; and (f) DC on 13 May. Note: UHD- indicates data measured with the snapshot hyperspectral sensor mounted on the UAV; and DC- indicates data measured with the digital camera mounted on the UAV.

Figure 11.

Leaf area index (m²/m²) maps produced from UAV-UHD and UAV-DC images using PLSR: (a) UHD on 21 April; (b) UHD on 26 April; (c) UHD on 13 May; (d) DC on 21 April; (e) DC on 26 April; and (f) DC on 13 May. Note: UHD- indicates data measured with the snapshot hyperspectral sensor mounted on the UAV; and DC- indicates data measured with the digital camera mounted on the UAV.

Table 1.

Sensor parameters and abbreviations used in this study.

| Platform | Ground | UAV | UAV |
|---|---|---|---|
| Sensor type | Spectrometer | Snapshot spectrometer | Digital camera |
| Sensor name | ASD FieldSpec 3 | UHD 185 | Sony DSC–QX100 |
| Field of view | 25° | 19° | 64° |
| Image size | - | 1000 × 1000 | 5472 × 3648 |
| Working height | 1.3 m | 50 m | 50 m |
| Spectral information | 350–2500 nm | 450–950 nm | R, G, B |
| Original spectral resolution | 3 nm @ 700 nm; 8.5 nm @ 1400 nm; 6.5 nm @ 2100 nm | 8 nm @ 532 nm | - |
| Data spectral resolution | 1 nm | 4 nm | Red, green, and blue bands |
| Image spatial resolution | - | 2.16 × 2.43 cm | 1.11 × 1.11 cm |
| Height | Steel tape ruler | Photogrammetry method | Photogrammetry method |
| Crop height abbreviation | G-height | UHD-height | DC-height |

Table 2.

Summary of bands and vegetation indices (VIs) used in this study.

| Type | VIs (high-definition digital camera) | Equation | VIs (hyperspectral sensor) | Equation |
|---|---|---|---|---|
| Spectral information | R | DNr | B460 | 460 nm of hyperspectral reflectance |
| | G | DNg | B560 | 560 nm of hyperspectral reflectance |
| | B | DNb | B670 | 670 nm of hyperspectral reflectance |
| | r | R/(R + G + B) | B800 | 800 nm of hyperspectral reflectance |
| | g | G/(R + G + B) | BGI | B460/B560 |
| | b | B/(R + G + B) | NDVI | (B800 − B670)/(B800 + B670) |
| | B/R | B/R | LCI | (B850 − B710)/(B850 + B670) |
| | B/G | B/G | NPCI | (B670 − B460)/(B670 + B460) |
| | R/G | R/G | EVI2 | 2.5 × (B800 − B670)/(B800 + 2.4 × B670 + 1) |
| | EXR | 1.4 × r − g | OSAVI | 1.16 × (B800 − B670)/(B800 + B670 + 0.16) |
| | VARI | (g − r)/(g + r − b) | SPVI | 0.4 × (3.7 × (B800 − B670) − 1.2 × \|B530 − B670\|) |
| | GRVI | (g − r)/(g + r) | MCARI | ((B700 − B670) − 0.2 × (B700 − B560))/(B700/B670) |
| DSM information | Crop height | Photogrammetry method | Crop height | Photogrammetry method |
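
As an illustration of how the equations in Table 2 translate into code, the following is a minimal Python sketch covering the digital-camera indices and several of the hyperspectral indices. The function names, the dictionary-based input, and the sample values are assumptions made for this example; they are not taken from the study, and the formulas are copied as printed in the table.

```python
def dc_indices(R, G, B):
    """Digital-camera indices from calibrated DN values (Table 2, left half).

    R, G, B are the red, green, and blue DN values of one pixel or plot mean.
    """
    total = R + G + B
    r, g, b = R / total, G / total, B / total  # normalized band values
    return {
        "r": r, "g": g, "b": b,
        "B/R": B / R, "B/G": B / G, "R/G": R / G,
        "EXR": 1.4 * r - g,
        "VARI": (g - r) / (g + r - b),
        "GRVI": (g - r) / (g + r),
    }


def hyperspectral_indices(refl):
    """Selected hyperspectral indices (Table 2, right half).

    refl maps a wavelength in nm to reflectance; this input layout is
    hypothetical and chosen only to keep the example short.
    """
    b460, b560, b670 = refl[460], refl[560], refl[670]
    b710, b800, b850 = refl[710], refl[800], refl[850]
    return {
        "BGI": b460 / b560,
        "NDVI": (b800 - b670) / (b800 + b670),
        "LCI": (b850 - b710) / (b850 + b670),  # denominator as printed in Table 2
        "NPCI": (b670 - b460) / (b670 + b460),
        "EVI2": 2.5 * (b800 - b670) / (b800 + 2.4 * b670 + 1),
        "OSAVI": 1.16 * (b800 - b670) / (b800 + b670 + 0.16),
    }


# Made-up sample values, for illustration only.
dc = dc_indices(100.0, 150.0, 50.0)
hs = hyperspectral_indices(
    {460: 0.04, 560: 0.08, 670: 0.05, 710: 0.20, 800: 0.45, 850: 0.45}
)
print(dc["GRVI"], hs["NDVI"])
```

SPVI and MCARI are left out of the sketch to keep it short; they follow the same pattern using the 530, 560, 670, and 700 nm bands.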

Table 3.

Descriptive statistics of above-ground biomass (AGB, t/ha), leaf area index (LAI, m²/m²), and crop height (cm) in the study area.

| Dataset | Period | Crop Variable | Samples | Min | Mean | Max | Standard Deviation | Coefficient of Variation (%) |
|---|---|---|---|---|---|---|---|---|
| Calibration | Jointing | AGB | 32 | 1.45 | 2.76 | 4.52 | 0.67 | 24.28 |
| Calibration | Jointing | LAI | 32 | 2.27 | 3.94 | 5.87 | 0.88 | 22.34 |
| Calibration | Jointing | Height | 32 | 27.33 | 33.55 | 41.00 | 3.16 | 9.42 |
| Calibration | Flagging | AGB | 32 | 2.19 | 5.48 | 8.26 | 2.11 | 38.50 |
| Calibration | Flagging | LAI | 32 | 1.30 | 4.11 | 8.81 | 1.47 | 35.77 |
| Calibration | Flagging | Height | 32 | 53.33 | 67.19 | 76.33 | 6.44 | 9.58 |
| Calibration | Flowering | AGB | 32 | 4.39 | 8.07 | 12.73 | 1.88 | 23.30 |
| Calibration | Flowering | LAI | 32 | 1.24 | 3.24 | 5.89 | 1.13 | 34.88 |
| Calibration | Flowering | Height | 32 | 58.00 | 71.38 | 84.33 | 6.62 | 9.27 |
| Validation | Jointing | AGB | 16 | 1.20 | 2.16 | 3.05 | 0.50 | 23.15 |
| Validation | Jointing | LAI | 16 | 1.69 | 2.98 | 4.39 | 0.68 | 22.82 |
| Validation | Jointing | Height | 16 | 25.67 | 29.18 | 34.33 | 2.11 | 7.23 |
| Validation | Flagging | AGB | 16 | 2.21 | 4.36 | 6.01 | 1.24 | 28.44 |
| Validation | Flagging | LAI | 16 | 1.72 | 4.33 | 6.63 | 1.53 | 35.33 |
| Validation | Flagging | Height | 16 | 53.33 | 62.37 | 70.66 | 5.12 | 8.21 |
| Validation | Flowering | AGB | 16 | 3.41 | 7.21 | 10.56 | 1.98 | 27.46 |
| Validation | Flowering | LAI | 16 | 1.34 | 3.39 | 5.53 | 1.27 | 37.46 |
| Validation | Flowering | Height | 16 | 55.00 | 69.60 | 82.33 | 7.50 | 10.78 |
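
The coefficient of variation in the last column of Table 3 is the standard deviation expressed as a percentage of the mean. A minimal Python sketch of that calculation follows; the function name is an assumption made for this example, and the inputs are the rounded values from the first row (calibration, jointing, AGB).

```python
def coefficient_of_variation(std_dev, mean):
    """Return the coefficient of variation in percent (100 * SD / mean)."""
    return 100.0 * std_dev / mean


# Rounded standard deviation and mean from the first row of Table 3.
cv = coefficient_of_variation(0.67, 2.76)
print(round(cv, 2))  # 24.28
```

Because the tabulated means and standard deviations are themselves rounded, recomputing other rows this way may differ from the printed value in the last digit.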

Table 4.

Relationship between crop parameters and spectra acquired during different growing stages.

| Stage | Crop Parameter | VIs | Equation | R² | MAE | RMSE |
|---|---|---|---|---|---|---|
| Jointing | AGB | G-MCARI | | 0.66 | 0.30 | 0.39 |
| Jointing | AGB | DC-G | | 0.64 | 0.32 | 0.42 |
| Jointing | AGB | UHD-LCI | | 0.50 | 0.38 | 0.49 |
| Jointing | LAI | G-MCARI | −374.23x + 8.406 | 0.62 | 0.44 | 0.54 |
| Jointing | LAI | DC-G | | 0.63 | 0.47 | 0.60 |
| Jointing | LAI | UHD-LCI | | 0.45 | 0.51 | 0.68 |
| Flagging | AGB | G-LCI | | 0.67 | 0.75 | 0.84 |
| Flagging | AGB | DC-b | | 0.57 | 0.86 | 0.97 |
| Flagging | AGB | UHD-LCI | | 0.58 | 2.61 | 2.85 |
| Flagging | LAI | G-LCI | | 0.78 | 0.88 | 0.55 |
| Flagging | LAI | DC-b | 13327 | 0.76 | 0.88 | 0.47 |
| Flagging | LAI | UHD-NPCI | | 0.74 | 0.92 | 0.51 |
| Flowering | AGB | G-SPVI | | 0.67 | 0.87 | 1.12 |
| Flowering | AGB | DC-b | | 0.74 | 0.86 | 1.19 |
| Flowering | AGB | UHD-BGI | | 0.67 | 0.93 | 1.18 |
| Flowering | LAI | G-SPVI | | 0.72 | 0.55 | 0.66 |
| Flowering | LAI | DC-b | | 0.81 | 0.47 | 0.65 |
| Flowering | LAI | UHD-MCARI | | 0.72 | 0.51 | 0.67 |
| Total for three stages | AGB | G-LCI | | 0.26 | 1.88 | 2.25 |
| Total for three stages | AGB | DC-r | | 0.67 | 1.19 | 1.71 |
| Total for three stages | AGB | UHD-LCI | | 0.30 | 1.84 | 2.18 |
| Total for three stages | LAI | G-LCI | | 0.59 | 0.67 | 0.88 |
| Total for three stages | LAI | DC-B | | 0.55 | 0.70 | 0.90 |
| Total for three stages | LAI | UHD-LCI | | 0.46 | 0.80 | 1.01 |
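
For readers who want to reproduce this kind of single-variable analysis, the sketch below fits a straight line of a crop parameter against one VI and reports R², MAE, and RMSE. It is a minimal illustration under stated assumptions: the section does not say how R² was computed, so the coefficient of determination is assumed here, and the data values and function names are invented for the example.

```python
from math import sqrt


def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_metrics(y_true, y_pred):
    """Return R2 (coefficient of determination), MAE, and RMSE."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return {"R2": 1.0 - ss_res / ss_tot, "MAE": mae, "RMSE": sqrt(ss_res / n)}


# Hypothetical VI values and AGB measurements (t/ha), for illustration only.
vi = [0.1, 0.2, 0.3, 0.4]
agb = [1.0, 2.1, 2.9, 4.0]

slope, intercept = fit_line(vi, agb)
predicted = [slope * v + intercept for v in vi]
metrics = fit_metrics(agb, predicted)
print(slope, intercept, metrics)
```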

Table 5.

Relationship between crop parameters and crop height in different growing stages.

| Stage | Crop Parameter | Height | Equation | R² | MAE | RMSE |
|---|---|---|---|---|---|---|
| Jointing | AGB | G-height | | 0.02 | 0.54 | 0.67 |
| Jointing | AGB | DC-height | | 0.03 | 0.56 | 0.67 |
| Jointing | AGB | UHD-height | | 0.17 | 0.51 | 0.62 |
| Jointing | LAI | G-height | | 0.01 | 0.68 | 0.88 |
| Jointing | LAI | DC-height | | 0.08 | 0.68 | 0.89 |
| Jointing | LAI | UHD-height | | 0.12 | 0.63 | 0.83 |
| Flagging | AGB | G-height | | 0.24 | 0.96 | 1.25 |
| Flagging | AGB | DC-height | | 0.30 | 1.12 | 1.36 |
| Flagging | AGB | UHD-height | | 0.29 | 0.96 | 1.20 |
| Flagging | LAI | G-height | | 0.33 | 0.98 | 1.34 |
| Flagging | LAI | DC-height | | 0.40 | 1.00 | 1.34 |
| Flagging | LAI | UHD-height | | 0.42 | 0.96 | 1.25 |
| Flowering | AGB | G-height | | 0.27 | 1.30 | 1.61 |
| Flowering | AGB | DC-height | | 0.36 | 1.26 | 1.61 |
| Flowering | AGB | UHD-height | | 0.38 | 1.21 | 1.53 |
| Flowering | LAI | G-height | | 0.22 | 0.78 | 1.00 |
| Flowering | LAI | DC-height | | 0.33 | 0.76 | 0.97 |
| Flowering | LAI | UHD-height | | 0.33 | 0.74 | 0.94 |
| Total for three stages | AGB | G-height | | 0.73 | 1.08 | 1.49 |
| Total for three stages | AGB | DC-height | | 0.73 | 1.00 | 1.29 |
| Total for three stages | AGB | UHD-height | | 0.71 | 1.04 | 1.39 |
| Total for three stages | LAI | G-height | | 0.01 | 1.01 | 1.31 |
| Total for three stages | LAI | DC-height | | 0.01 | 1.01 | 1.31 |
| Total for three stages | LAI | UHD-height | | 0.02 | 1.01 | 1.30 |

Table 6.

Relationship between crop parameters and spectral VIs and crop height in different growing stages.

| Stage | Crop Parameter | Information | Equation | R² | MAE | RMSE |
|---|---|---|---|---|---|---|
| Jointing | AGB | G-height, MCARI | | 0.56 | 0.38 | 0.46 |
| Jointing | AGB | DC-height, G | | 0.11 | 0.55 | 0.67 |
| Jointing | AGB | UHD-height, LCI | | 0.19 | 0.50 | 0.61 |
| Jointing | LAI | G-height, MCARI | | 0.47 | 0.54 | 0.65 |
| Jointing | LAI | DC-height, G | | 0.01 | 0.67 | 0.88 |
| Jointing | LAI | UHD-height, LCI | | 0.16 | 0.61 | 0.83 |
| Flagging | AGB | G-height, LCI | | 0.61 | 0.72 | 0.91 |
| Flagging | AGB | DC-height, b | | 0.30 | 1.09 | 1.27 |
| Flagging | AGB | UHD-height, LCI | | 0.38 | 0.98 | 1.20 |
| Flagging | LAI | G-height, LCI | | 0.79 | 0.60 | 0.86 |
| Flagging | LAI | DC-height, b | | 0.43 | 1.07 | 1.41 |
| Flagging | LAI | UHD-height, NPCI | | 0.68 | 0.80 | 1.06 |
| Flowering | AGB | G-height, SPVI | | 0.59 | 1.00 | 1.26 |
| Flowering | AGB | DC-height, b | | 0.37 | 1.20 | 1.49 |
| Flowering | AGB | UHD-height, BGI | | 0.49 | 1.12 | 1.42 |
| Flowering | LAI | G-height, SPVI | | 0.60 | 0.65 | 0.79 |
| Flowering | LAI | DC-height, b | | 0.42 | 0.71 | 0.90 |
| Flowering | LAI | UHD-height, MCARI | | 0.71 | 0.64 | 0.84 |
| Total for three stages | AGB | G-height, LCI | | 0.81 | 0.99 | 1.32 |
| Total for three stages | AGB | DC-height, r | | 0.77 | 1.02 | 1.30 |
| Total for three stages | AGB | UHD-height, LCI | | 0.74 | 1.05 | 1.38 |
| Total for three stages | LAI | G-height, LCI | | 0.07 | 1.00 | 1.27 |
| Total for three stages | LAI | DC-height, B | | 0.04 | 1.01 | 1.29 |
| Total for three stages | LAI | UHD-height, LCI | | 0.06 | 1.00 | 1.27 |

Table 7.

Leaf area index and above-ground biomass estimates based on all vegetation indices, using partial least squares regression (PLSR) and random forest (RF) methods (calibration dataset, mean AGB = 5.44 t/ha, mean LAI = 3.77 m²/m²).

| Method | Data | AGB R² | AGB MAE (t/ha) | AGB nRMSE (%) | AGB RMSE (t/ha) | LAI R² | LAI MAE (m²/m²) | LAI nRMSE (%) | LAI RMSE (m²/m²) |
|---|---|---|---|---|---|---|---|---|---|
| RF | G-VIs | 0.93 | 0.58 | 14.14 | 0.77 | 0.94 | 0.26 | 8.75 | 0.33 |
| RF | DC-VIs | 0.93 | 0.52 | 13.22 | 0.72 | 0.94 | 0.28 | 9.55 | 0.36 |
| RF | UHD-VIs | 0.93 | 0.65 | 14.70 | 0.80 | 0.93 | 0.32 | 10.61 | 0.40 |
| PLSR | G-VIs | 0.35 | 1.75 | 38.39 | 2.09 | 0.58 | 0.64 | 22.81 | 0.86 |
| PLSR | DC-VIs | 0.61 | 1.19 | 29.76 | 1.62 | 0.50 | 0.73 | 24.67 | 0.93 |
| PLSR | UHD-VIs | 0.34 | 1.77 | 38.58 | 2.10 | 0.45 | 0.80 | 25.73 | 0.97 |
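
The nRMSE values in Table 7 appear consistent with the RMSE divided by the calibration mean given in the caption, expressed in percent. This is an inference from the numbers rather than a definition stated in this section. A minimal Python sketch under that assumption (the function name is hypothetical):

```python
def nrmse_percent(rmse, mean_observed):
    """Normalized RMSE in percent, assuming normalization by the observed mean."""
    return 100.0 * rmse / mean_observed


# RF with G-VIs, using the rounded RMSE values and the means from the caption.
agb_nrmse = nrmse_percent(0.77, 5.44)  # table reports 14.14
lai_nrmse = nrmse_percent(0.33, 3.77)  # table reports 8.75
print(round(agb_nrmse, 2), round(lai_nrmse, 2))
```

Small differences from the tabulated values are expected because the RMSE inputs used here are already rounded to two decimals.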

Table 8.

Estimates of leaf area index and above-ground biomass based on crop height combined with all vegetation indices, using partial least squares regression (PLSR) and random forest (RF) methods (calibration dataset, mean AGB = 5.44 t/ha, mean LAI = 3.77 m²/m²).

| Method | Data | AGB R² | AGB MAE (t/ha) | AGB nRMSE (%) | AGB RMSE (t/ha) | LAI R² | LAI MAE (m²/m²) | LAI nRMSE (%) | LAI RMSE (m²/m²) |
|---|---|---|---|---|---|---|---|---|---|
| RF | G-height, VIs | 0.96 | 0.40 | 10.47 | 0.57 | 0.95 | 0.24 | 8.22 | 0.31 |
| RF | DC-height, VIs | 0.96 | 0.42 | 10.10 | 0.55 | 0.94 | 0.27 | 9.54 | 0.36 |
| RF | UHD-height, VIs | 0.94 | 0.55 | 13.04 | 0.71 | 0.94 | 0.31 | 10.34 | 0.39 |
| PLSR | G-height, VIs | 0.81 | 0.83 | 20.58 | 1.12 | 0.65 | 0.56 | 20.42 | 0.77 |
| PLSR | DC-height, VIs | 0.77 | 0.95 | 22.97 | 1.25 | 0.52 | 0.72 | 24.13 | 0.91 |
| PLSR | UHD-height, VIs | 0.64 | 1.26 | 28.48 | 1.55 | 0.47 | 0.79 | 25.46 | 0.96 |