1. Introduction

Modeling radiative transfer (RT) of vegetation is a fundamental problem of land surface remote sensing. The bidirectional reflectance distribution function (BRDF) is one of the most important outputs for RT models, which can help normalize directional reflectance to more consistent nadir BRDF-adjusted surface reflectances (NBAR) products [

1,

2] or extract vegetation information [

3]. However, natural vegetation generally has significant spatial heterogeneity in both horizontal and vertical dimensions [

4], resulting in a great challenge to retrieve an accurate vegetation leaf area index (LAI) and cover fraction [

5,

6]. In mountainous areas, it is much more complex due to the three-dimensional (3D) structure of forest vegetation and terrain background [

7]. To our knowledge, there are only two widely used operational BRDF products, MCD43 of the moderate resolution imaging spectroradiometer (MODIS) [

2,

8] and the multiangle imaging spectroradiometer (MISR). Both have a spatial resolution of 500 m or 1 km. Within a domain with a size larger than 0.5 km (hereafter named the km-scale), the land surface is more likely to be heterogeneous with changing elevations or mixed land covers. Especially in mountainous areas, forest BRDF and albedo are significantly affected by the heterogeneous terrain and complex vegetation composition [

9,

10]. To simulate the combined effects of heterogeneous terrain and complex vegetation composition on MODIS or MISR BRDF products, RT models are expected to contend with a domain (or scene) size greater than 0.5 km. Although many 3D models may simulate such a large scene, there are very few that are specifically optimized to cope with the km-scale. Thus, a suitable RT model at the km-scale considering heterogeneous terrain is strongly required to deeply understand the RT process and improve the inversion accuracy of LAI and the vegetation cover fraction.

Considering the 3D nature of forest heterogeneities, there are three potential groups of RT models to be used at the km-scale: (a) the modified geometric optical reflectance models (GO) [

7,

11]; (b) classical 3D radiative transfer (3DRT) solutions [

12,

13]; (c) computer simulation models using ray tracing [

14,

15] or radiosity techniques [

16]. GO models, usually used for a flat background [

11,

17], have been improved recently on an inclined slope [

7]. However, it is still difficult to extend GO models to larger scales containing more than one slope. The 3DRT models have good mathematics and physical background, but they also have a weakness in describing the topography effect (nonflat terrain), as well as the multiple scattering processes that occur within the minimum 3D element (cells or voxels). Another limitation of 3DRT is its time-consuming feature arising from the equally defined discrete directions. The GO models have a key role in describing generalized BRDF features, and can simulate canopy reflectance reasonably well in many cases. However, they are also heavily reliant on the validity of various sets of assumptions and simplifications, and thus, are limited to relatively simple heterogeneous scenes on flat or constant slope terrains. The 3DRT models are attractive in their use of a Cartesian grid of volume cells (voxels) that reduce scene complexity but maintain a certain degree of spatial fidelity. However, there will be an inherent loss of the structural heterogeneity within the voxel resulting from averaging canopy structural properties. By contrast, computer simulation models have fewer assumptions on topography and tree structure, and have more suitability for scientific research, and are thus widely used to support sensor design [

18], model validation [

19], and assumption evaluation [

20,

21].

However, complaints of computer simulation models (both ray tracing and radiosity) have also been discussed because of their low computation efficiency from considering too much of the 3D details in the explicit descriptions of location, orientation, size, and shape of each scatterer. This limitation may hamper their expansion at the km-scale. To reduce the computation cost, a few efforts have been made recently to develop faster computer simulation models. For example, the discrete anisotropic radiative transfer (DART) model used balanced voxel size and improved its direction discretization and oversampling algorithms [

22]. The Rayspread model [

23] used the message passing interface (MPI) to construct a distributed and parallel simulation architecture and accelerate the Raytran code [

15]. To simulate realistic 3D scenes at the km-scale, the large-scale emulation system for realistic three-dimensional forest simulation (LESS) model also used parallel computation to accelerate the ray tracing code [

24]. Based on the radiosity-graphics-combined model (RGM) [

25], a subdivision method was adopted to simulate directional reflectance over a 500 m forest scene containing topography [

26]. By defining the concept of “porous object”, the radiosity applicable to a porous individual objects (RAPID) model significantly reduced the 3D scene complexity and improved the computation efficiency on directional reflectance [

16,

27].

However, most computer simulation models still focused on domain sizes less than 500 m [

19]. The km-scale RT models are very limited. These km-scale RT models, such as the LESS model [

24], are usually running on supercomputers in the laboratory to save time, which is not a convenient approach for widespread use. Desktop computers are generally inexpensive and are easier to access than supercomputers. It will be beneficial for scientists to use desktop computers if any 3D model can be identified that runs fast at the km-scale on a desktop platform with comparable accuracy to models run on supercomputers. As a result, more remote sensing users can conduct their desired simulations easily, such as assessing topographic correction accuracy [

28,

29], testing scale effects [

30] or validating large-scale BRDF models over complex terrains [

31]. Furthermore, from the completeness aspect of the RT family, km-scale models are also important to fill the missing part of the landscape scale.

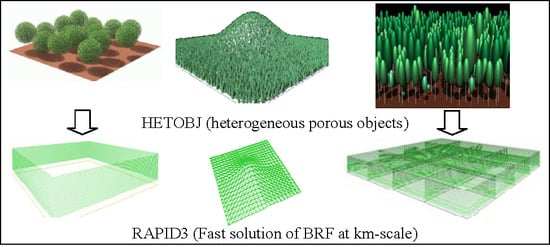

With these considerations in mind, the porous object concept is upgraded from RAPID at the tree crown scale to the heterogeneous porous object at the plot scale to accelerate RAPID for km-scale scenes, hereafter called RAPID3 version.

Section 2 introduces the history and background of radiosity and RAPID. Then, the extension method of RAPID3 on km-scale simulation is presented in

Section 3. The next section evaluates RAPID3 using the third radiation transfer model intercomparison (RAMI-3) test cases. Finally, the conclusion and future remarks comprise

Section 5.

2. Theoretical Background of RAPID

RAPID is a radiosity-based model using computer graphics methods to compute RT within and above 3D vegetated scenes from visible/near infrared (VNIR, 0.47–2.5 μm) [

16] to thermal infrared (TIR, 8–14 μm) [

32], and microwave (MV, 2.5–30 cm) parts [

33] of the electromagnetic spectrum [

16,

33].

The traditional radiosity methods [

32] use planar surfaces to create the 3D scene and solve the RT in VNIR and TIR as follows:

where

Bi or Bj is the radiosity on surface element i or j, defined as the total radiation flux density leaving that surface (the unit is Wm−2). Ei is the surface thermal emission in TIR or the light source emission in VNIR, and χi is the surface reflection or transmission coefficient which depends on the relative orientation (normal vector ) of surface i and j to each other. The Fij is the form factor or “view factor” which specifies the fraction of radiant flux leaving another surface j that reaches the surface i, and N is the number of discrete two-sided surface elements to be considered.

Equation (1) works well at small scales (e.g., crop canopies at a small domain size) with a few planar surfaces. However, a 3D scene at large scales (e.g., forest canopies at a large domain size) could have millions of surfaces, resulting in a huge memory burden and high computation time cost on computers. For example, the radiosity-graphics-based model (RGM) [

25] may crash if the surface polygon number is larger than 500,000 on a 32 bit operation system (OS). To reduce the limitation, RGM was extended to large-scale forest canopies using a subscene division method [

26]. This version has been verified in the context of the fourth RAMI (RAMI-IV) (marked as RGM2). To save time, parallel computation of subscenes was implemented using a few personal computers (PC). However, one subscene failure due to unexpected bugs would lead to the failure to merge the whole scene, which is still not ideal for users.

Thus, RAPID was developed based on RGM for large-scale forest scenes, which is considerably easier to use and faster than RGM2. RAPID is capable of simulating the bidirectional reflectance factor (BRF) over various kinds of vegetated scenes (homogeneous or heterogeneous). Unlike the thousands of polygons used in RGM2, RAPID uses dozens of porous objects to represent a group of grass or crop plants or tree crowns (see

Figure 1). Each porous object has several properties, including shape, size, thickness, LAI, leaf angle distribution (LAD), and leaf clumping conditions. These properties are used during dynamical generation of the subleaves within porous objects at run-time; hence, only view factors between porous objects (not between hundreds of small leaves) need to be calculated and stored. As a result, the model significantly reduces the huge memory requirement and long computation time of view factors for a large and realistic vegetation scene.

Seven major steps are required for RAPID to simulate the BRF:

(1) Generating a user-defined 3D scene containing porous objects using the graphical user interface (GUI) developed via C++ language, and an open graphic library (OpenGL) based on the digital elevation model (DEM), and a reference background picture and forest inventory data as inputs. Users can freely define 3D objects (tree species, crop types, buildings, and water bodies) and place them on the target regions. A parameter editor is integrated in the GUI to modify the key parameters, such as height, width, orientation, radius, and so on.

(2) Calculating sunlit fractions and irradiances from direct light of all objects using the painter algorithm, and a dynamic projection method in the solar incident direction is performed. The painter algorithm sorts all the objects in a scene by their depths to the painter and then paints them in this order. The dynamic projection generates randomly oriented and located leaves within a porous object according to its properties, including LAI, LAD, and leaf size.

(3) Calculating view fractions of sky and irradiances from diffuse light of all objects by averaging those from a few solid angles (at least 40) of the above sky (hemisphere) is performed. In each solid angle, the dynamic projection similar to (2) is used. During the dynamic projections, the overlapping fractions between any pairs of objects are recorded.

(4) Estimating view factors between all pairs of objects using their mean overlapping fractions in all solid angles, as described in step (3), is performed.

(5) Determining single scattering values of all objects using the direct irradiance results from step (2) and diffuse irradiance from step (3) is performed. The equivalent reflectance and transmittance of porous objects are also estimated from leaf reflectance and transmittance using LAD.

(6) Solving multiple scattering and finding the best radiosity values using results of (4) and (5) is performed.

(7) Calculating BRFs and rendering images in all view directions is performed. At this step, the dynamic projection repeats for each view direction. However, to decide whether a pixel is sunlit or not, the projected image in (2) is required.

The results from all steps, including the generated 3D scene, direct light fractions and output radiosity values, can be rendered as gray or color images in arbitrary view directions in the GUI.

In 2016, RAPID2 [

33] was released, including new functions of atmospheric RT by linking the vector linearized discrete ordinate radiative transfer (V-LIDORT) model [

34], DBT simulation in TIR by updating TRGM code [

35] and backscattering simulation in MV [

36], as well as LiDAR point cloud and waveform simulations [

37]. RAPID2 has been used to support comparing LAI inversion algorithms [

38], validating a new kernel-based BRDF model [

39], validating an analytic vegetation-soil-road-mixed DBT model [

40], and simulating continuous remote sensing images [

27]. Although RAPID2 is able to simulate km-scale images, it requires a few hours to run on a server (12 CPU cores), which may restrict RAPID2 to limited user groups having access to super computation power. Thus, the faster version RAPID3 is developed in this paper to specifically accelerate the simulation for km-scale scenes.

3. Model Description

RAPID3 is a downward compatible model of RGM, RAPID, and RAPID2. That means the RAPID model series can run at small scales with a few meters to large scales with kilometers. For clarity, the most suitable model at three scales was suggested to run efficiently:

Small scenes of approximately a few meters: it is better to choose RGM using realistic leaves and branches as the basic elements;

Middle scenes of approximately tens of meters: it is better to choose RAPID or RAPID2 using porous objects as the basic elements;

Large scenes with hundreds of meters to kilometers (km-scale): it is better to choose a new version specified for this scale, e.g., the RAPID3 which is fully illustrated in this paper.

The focus of this paper is to develop RAPID3 for the km-scale.

3.1. Definition of the Heterogeneous Porous Object (HETOBJ)

In RAPID, a porous object is used to represent a thin layer of leaves with a low LAI (from 0.30 to 0.40 m2m−2) and a predefined LAD. The leaves are randomly distributed in the layer without significant clumping. Therefore, this porous object can be seen as a homogeneous porous object (HOMOBJ). HOMOBJ is good to describe a tree crown layer but not sufficient to define a layer of a forest plot. If users group several HOMOBJs into one porous object, the total object number in the 3D scene will be further reduced. As a result, the computation costs will also be reduced. This grouping of HOMOBJ is the key idea for RAPID3 to simulate km-scale scene rapidly, which is named as “heterogeneous porous objects” (HETOBJ). To be precise, a HETOBJ is an object representing a thin layer of leaves or trunks with significant horizontal clumping. A HETOBJ can be seen as a collection of HOMOBJs.

Figure 2 demonstrates the concepts of HOMOBJ and HETOBJ. The major feature of HOMOBJ is the simple random distribution of leaves in it. After extension, the leaves can be heterogeneously clumped in HETOBJ, such as the row structure (

Figure 2b), clustering crowns (

Figure 2c), and random trunk layers (

Figure 2d). To describe the clumping effect in each HETOBJ, the number of clusters and the relative XY coordinates to the lower-left corner, as well as the shape and size of each cluster (e.g., the radius of a circular cluster for trees) should be given as input. Other major input parameters include LAI, LAD, crown thickness (

hcrown) or trunk length (

htrunk), and leaf length (

l).

Figure 3 shows the examples of simplifying real-world forests (a and d) into the HOMOBJ in RAPID (b and e), and then into HETOBJ in RAPID3 (c and f). In

Figure 3b, RAPID uses six HOMOBJs and one trunk to represent one tree, resulting in 36 HOMOBJs and six trunks. In

Figure 3c, the scene is further simplified as the collection of six crowns and one trunk HETOBJ. The soil polygons are kept solid in both RAPID and RAPID3. In RAPID, the soil polygon size should not be too large (the default 0.5 m,

Figure 3b) to achieve a good accuracy. However, in

Figure 3c, one soil polygon is sufficiently the same size as the HETOBJ. Finally, there are only 7 HETOBJs in

Figure 3c. Similarly, the forest stand containing 15 trees in

Figure 3d was represented by 300 HOMOBJs in

Figure 3e, which was then further simplified as only 20 HETOBJs in

Figure 3f.

To accurately parameterize each HETOBJ, two new input files, TEXTURE.IN and OBJ_CLUMP.IN, were introduced to define tree locations, radii, and radius adjustment coefficients. The TEXTURE.IN file stores the number of crown HOMOBJs within a HETOBJ, relative coordinates (X

tree, Y

tree) of each HOMOBJ center to the lower-left corner of the HETOBJ, and the maximum radius of each HOMOBJ (R

tree). It is assumed that all HOMOBJs within the HETOBJ have the same circular shape, thickness, LAI, LAD, trunk diameter (D

trunk), and optical parameters. The number of crown HOMOBJs controls the stem density. Changing the (X

tree, Y

tree) of each tree can adjust the spatial distribution of crowns within a HETOBJ. The OBJ_CLUMP.IN file defines the tree radius adjustment coefficients (

fR) that are compared to R

tree for a crown HOMOBJ within a HETOBJ (see

Figure 4). Therefore, the R

tree and

fR at different heights can control the tree crown vertical shape to be a sphere, cone, or any other user-defined profiles.

Generally, to model the forests over complex terrains, the digital elevation model (DEM) with triangles should be used. The DEM triangles are first generated as solid soil polygons. To generate trees on DEM, the forest inventory data for all trees in the DEM region should be provided, including their species, heights, positions, crown radii, crown lengths (

Hcrown), trunk lengths (

Htrunk), and LAIs. However, for each soil triangle (may be irregular), the inside trees must be separated from the outside trees. Only the contained trees are used to summarize the important structure parameters for HETOBJ, including the tree number, local positions, tree height ranges, LAI, crown radius ranges,

Hcrown, and

Htrunk. Based on these tree parameters, the soil polygon is simply replicated a few times to generate one trunk and a few crown HETOBJs by shifting the z values from zero to the top tree height contained in the HETOBJ. When z is zero, one trunk HETOBJ is created first with vertical thickness of

Htrunk. When z is larger than

Htrunk, the z shifting step is estimated as

where

fshape is 0.24 or 0.12 for ellipsoid or cone shaped crowns according to leaf volume density.

Figure 5 shows the generated 3D scenes on a single slope (a–c) and a ridge (d–f).

Figure 5a–c are similar to

Figure 3a–c, except the slope and tree numbers.

Figure 5d–e shows a forest stand with 25 cone-shape trees on a ridge (d), and its abstraction using 78 HETOBJs (e), as well as the dynamically projected object sequence ID image of (e) using the method in

Section 3.2. The two large triangles in (

Figure 5f) are the two soil polygons to compose the ridge.

3.2. Dynamic Projection of HETOBJ

In RAPID, the leaves in a HOMOBJ are dynamically generated and projected into a 2D pixel array (

Figure 6a). First, according to the basal area, LAI and leaf size parameters of a porous object, the number of leaves within the porous object, is estimated. Then, the center points, zenith angles, and azimuth angles for all leaves are randomly generated, where the center points and azimuth angles use a uniform random function; and leaf zenith angles follow the LAD function. Once randomly generated, each leaf has spatially explicit coordinates and is projected into the 2D pixel array. To observe a leaf envelope in the array, the mean leaf pixel size should be at least 9. Otherwise, the leaf will be neglected, and will not be drawn. Then, the 2D array size should be at least 3000 by 3000 for a 100 m by 100 m scene if the leaf length is 10 cm. If the scene is 1 km by 1 km, the array size will be larger than 30,000 by 30,000 (30,000 width × 30,000 height × 4 bytes = 3.6 × 10

9 bytes = 3.4 GB). Considering that at least two projection arrays (sun and viewer directions) should be used simultaneously, the memory costs of two arrays will be approximately 7 GB. Another important memory cost concerns the view factors, which exponentially increases with the scene size. As a result, the scene has to be limited to a certain size based on the random-access memory (RAM) available. Thus, the subdivision method should be used to run the km-scale scene on the PC. However, subdivision does not significantly reduce computation cost, and introduces additional complexity.

In RAPID3, instead of projecting many finite-size leaves (

Figure 6a), points (pixels) are used to render a HOMOBJ or HETOBJ (

Figure 6a–c). The point number

N is proportional to LA × G(Ω

in), where LA is the total leaf area of a HETOBJ;

G is the G-function [

41], defined as the averaged projection area of all possible leaf angles along the projection direction Ω

in:

where Ω(

θ,

φ) is the leaf orientation direction with zenith angle

θ and azimuth angle

φ;

f(

θ) is the LAD function varying with

θ, but independent of

φ. As a result, the total pixel number in

Figure 6a,b should be equal if the same size target 2D arrays are used. In other words, the gap fractions in

Figure 6a,b are the same. However, the same 2D array size can be used to project more HOMOBJs in RAPID3 as long as their gap fractions are kept constant. For example,

Figure 6c shows the projected random pixels of the scene defined in

Figure 3f.

The following paragraphs explain the projection process using a 2D array P(NX, NY) for a 3D scene with a center of (xcenter, ycenter, zcenter) and a radius (Rsphere) of a circumsphere in a direction Ω(θ, φ). The NX and NY are the size of the array P in the x and y directions.

First, the rotation transformation of the Cartesian coordinate system for any point (

x0,

y0,

z0) in the 3D scene must be performed along the direction Ω:

Second, a scaling transformation is applied to fit the rotated 3D scene to the 2D pixel array:

where

a and

b are the scaling coefficients:

At the pixel position (

xpix,

ypix), the projected polygon code (PID) will be written into the pixel value [

P(

xpix,

ypix) = PID]. To address mutual shadowing, a 2D depth array

D(NX, NY) is used to record the relative depth value [

D(

xpix,

ypix) =

zview] (distance to the sensor). If another polygon pixel has the same pixel position to the current polygon pixel, the depth value comparison will determine whether or not to overwrite the

P and

D arrays. This is similar to the commonly used

z-buffer method in computer graphics [

42]. The z-buffer approach is one solution to the visibility problem, which tests the z-depth of each surface to determine the closest (visible) surface to the sensor.

Based on the above method, together with the number of trees

Ntree, the list of tree center coordinates (

Xtree,

Ytree) and their radii (

Rtree × fR) of a HETOBJ,

N points are randomly generated within the range of tree crowns and projected into the array

P and

D. Four uniform random numbers using PID as the seed are required to generate a point, including the random tree ID (

f1), random radius (

f2), random azimuth (

f3), and random height (

f4). The PID seed initializes a pseudorandom number generator and produces the same series of random numbers for any view angle. The tree ID (TID) is the floor of

Ntree ×

f1. Finally, the 3D coordinate (

x0,

y0, z

0) of a leaf point will be

where

z(x,

y) is the plane equation of the HETOBJ bottom plane, and

h is the thickness of the HETOBJ.

3.3. Estimation of View Factors and Single Scattering

In an arbitrary incident direction Ω, all objects in a 3D scene are sorted by the

z-buffer values along the direction. Then, the objects are drawn in sequence using the

z-buffer algorithm. For example, if object

i is under another object

j,

i will be first rendered into the projection array

P. The total number of

i pixels in the direction Ω is

Ni(Ω). Then, object

j will be projected into the array

P. Since

j may overlap

i, resulting in replacement of

i with the

j value, the number of

i pixels will decrease (noted as

Ti(Ω)), and the number of overlapped pixels are recorded as

Nij(Ω). After repeating the projection for all discretized directions of the 2π hemispheric sky (discretization is the same as RAPID), the view factor between

i and

j will be

Note that each object has two sides: facing (+) or back to (−) the incident direction. Since j+ and i− will not see each other, their view factors will be zero. The Fij is in fact the view factor of j− over i+.

Since the porous object

i has a visible thickness, and the leaf points within

i have a uniform vertical distribution, some projected pixels of

i may overlap existing

i pixels, resulting in self-overlapping. Compared to HOMOBJ, the HETOBJ contains a few HOMOBJs, which increases the self-overlapping possibility. Thus, the inner multiple scattering of a HETOBJ should be considered. By recording the number of self-overlapped pixels of

i as

Nii(Ω), the inner multiple scattering is included by new inner view factors (

Fii).

Assume that the total incident light is defined as 1.0 with a diffuse fraction (

kd). The single scattering of

i+ and

i− from diffuse light will be

where

ρi and

τi are the equivalent reflectance and transmittance of the object

i defined in RAPID. The sunlit fraction of

i in the solar incident direction Ω

sun is

Then, the direct single scatterings of

i+ and

i− in the solar incident direction Ω

sun are estimated as follows:

where

θsun is the zenith angle of the solar direction; the two

fLAD are the upside (+) and downside (−) coefficients to correct the LAD effect (

f(

θ)) of the horizontal HETOBJs are

where

θ and

φ are the zenith and azimuth angle, respectively; the dot products between leaf normal Ω(

θ,

φ) and sun direction Ω

sun estimate the correction coefficient of sunlit irradiance; (1 + cos(

θ))/2 and (1 − cos(

θ))/2 represent the upward and downward view fractions for the leaf normal Ω(

θ,

φ). Combining the diffuse and direct light, the total single scattering of

i (+ or −) is expressed as

For an inclined HETOBJ, its upper hemisphere differs from that of a horizontal HETOBJ. Thus, the two

fLAD are dependent not only on the LAD, but also on the normal of the HETOBJ (Ω

i):

Based on the input leaf reflectance and transmittance (

ρsub-object and

τsub-object), the equivalent values (

ρi and

τi) of the inclined HETOBJ are modified as

3.4. Solution of Multiple Scattering

Using the single scattering values and view factors (see

Section 3.3) as inputs, Equation (1) is used to solve the average radiosity of each object (

Bi) through the Gauss–Seidel iteration method, which was well described in RAPID. Note that the inner view factor

(Fii) has been included, although without explicit indication. After iteration, the final radiosity is

It is obvious that HETOBJ is a non-Lambertian object. The anisotropy of reflectance is assumed to be mainly determined by the directionality of single scattering caused by the significant radiance difference between sunlit and shaded leaves or branches. Since the sunlit fractions of all objects are already known from dynamic projections, the sunlit and shaded radiosity of

i are estimated as follows:

In fact, the sunlit and shaded radiosity for each HETOBJ should also be angularly dependent. The isotropic average assumption in Equation (24) may cause potential errors on the BRF.

3.5. Solution of BRF of a 3D Scene

Since the total incidence is 1.0, the BRFs of a 3D scene are weighted averages of radiosity from all objects in each view direction

v (Equation (25)).

where

N is the number of all objects, including both solid facets and HETOBJs;

or

is the viewed area of a sunlit or shaded object

i that is visible to the viewer.

The weights are the fractions of viewed object areas (Ai,lit and Ai,shd) with respect to the sum of the viewed areas of all objects (the denominator in Equation (25)). In traditional radiosity models, automatic subdivision of planar facets into smaller subfacets is used to make each subfacet either sunlit or shaded. Similarly, porous objects are divided into two parts: the sunlit and shaded part. Without separating the sunlit from the shaded part, the BRF contrast (e.g., between forward and backward view directions) and hotspot effect decrease significantly. That is why the radiosity has been decomposed into sunlit and shaded parts (Equation (24)). Thus, determining whether a pixel is sunlit is the key problem for BRF synthesis.

In RAPID, affine transform equations were used to determine if a pixel with an object ID

i in the view pixel array is visible in the sun projection array or not. If visible, the pixel is sunlit; otherwise, shaded. In this way, the

or

is accurately estimated. However, by using randomly scattered pixels (

Figure 6b–c) instead of collections of subleaf pixels (

Figure 6a), the error from an affine transform for a HETOBJ increases, resulting in overestimating shaded fractions at the large view zenith angle (VZA). Only in the hot spot direction, the error is zero. In addition, affine transform equations should be built for each subleaf; thus, the computation costs with an affine transform solution and the RAM costs for coefficients storage are unacceptable for large scenes.

In RAPID3, to reduce the error, the computation and the RAM costs from the affine transform, Equation (26) is developed to directly transform the pixel coordinates (

xpix,

ypix) from the viewer direction to the sun direction (

xpix_sun,

ypix_sun). The idea is to combine the z-buffer values (

zview) and pixel positions (

xpix,

ypix) in viewer projection arrays

Pview to inverse 3D coordinates of leaf points, and then project them back into the solar projection array

Psun at location (

xpix_sun,

ypix_sun). If the polygon ID of

Pview (

xpix,

ypix) matches

Psun (

xpix_sun,

ypix_sun), this pixel is sunlit; otherwise, shaded. However, due to the round-off errors from floating point values to integer values, the pixel positions may be shifted one to two-pixel widths, thus leading to underestimated sunlit fractions.

Unlike planar facets, leaf points do not have an explicit normal vector (similar to the voxel concept in 3DRT), whose scattering is isotropic. In RT theory, a scattering phase function is required to describe the anisotropy. In this paper, RAPID was used to presimulate the sunlit fractions (

fi,∆lit) of a virtual HOMOBJ using the LAI, LAD, and thickness values of a HETOBJ

i to represent the scattering anisotropy of this HETOBJ in all directions. These sunlit fractions are used to correct the

or

in Equation (25):

5. Conclusions

By extending the porous object concept in the RAPID model from homogeneous to heterogeneous conditions, the RAPID3 model has been proposed specifically for km-scale RT simulation. It is the first 3D model focusing on a fast RT solution of vegetation canopies in mountainous areas. The major advance in the development of RAPID is the significantly lower computation time of BRF at the km-scale (approximately 13 min). The heterogeneous porous object (HETOBJ) concept is the key innovation that reduces the complexity of 3D forest scenes significantly. The corresponding radiosity method has been adapted from HOMOBJ to HETOBJ. Five RAMI-3 scenes, from homogenous canopies (HOM03/13) to discrete forest canopies (HET01, HET03, and HET05), have been used to evaluate RAPID3. Based on HET03, RAPID3 also simulated the enhanced contrast of BRF between the backward and forward directions due to topography based on the HET03 scene. This analysis demonstrated the suitability of the RAPID model to understand how the topography affects the BRF.

The major improvements on the RAPID model are as follows:

Better visibility determination: The painter algorithm has been updated as the z-buffer algorithm, which is more accurate regarding the visibility determination between large objects.

New inner view factors: The inner multiple scattering of a HETOBJ are considered using the new inner view factors (Fii), which compensated for the underestimation of multiple scattering.

Less memory costs: Random points (pixels) instead of many finite-size leaves are projected to render a HOMOBJ or HETOBJ, which reduced the memory requirement of large pixel array size for the km-scale in RAPID. Due to the z-buffer values, the affine transform used in RAPID has been replaced by a simple matrix transform equation to decide whether a pixel is sunlit or not, which reduced the memory costs related to storing the affine transform coefficients of all subleaves.

There are also two main limitations to the current version of RAPID3:

- 1.

Large errors in some cases (e.g., HET05): The mean RMSE of the simulated BRF is 0.015; however, the maximum BRF bias from ROMCREF can be up to 0.02 and 0.05 in the red and NIR band, respectively. These errors mainly originate from the sunlit fraction estimation error and the isotropic sunlit radiance assumption on HETOBJ.

- 2.

Considerable effort to generate RAPID3 scenes: The RAPID GUI has not been extended to automatically transform RAPID scenes to RAPID3 scenes. The 3D scene file (POLY.IN) and two new files (TEXTURE.IN and OBJ_CLUMP.IN) were generated in MATLAB R2012a (The Math Works, Inc.) coding for each scene.

Nevertheless, RAPID3 has great potential to contribute to the remote sensing community. In the radiosity-graphics-combined model family, the RGM, RAPID, RAPID2, and RAPID3 models are becoming a model series, being capable of simulating multiscale 3D scenes from very detailed crops and forest plots to landscapes at the km-scale. These multiscale simulation capacities have been integrated into the unique free software platform (RAPID), which should be very useful for the remote sensing community. The heterogeneous porous object (HETOBJ) concept is not only applicable to radiosity models, but also possibly to the speedup of ray tracing codes. In the future, more work will be performed to improve RAPID3, including error reduction, CPU- and GPU-based acceleration, more validation and application case studies, and GUI enhancement.