Road Centerline Extraction from Very-High-Resolution Aerial Image and LiDAR Data Based on Road Connectivity

Abstract

1. Introduction

2. Study Materials

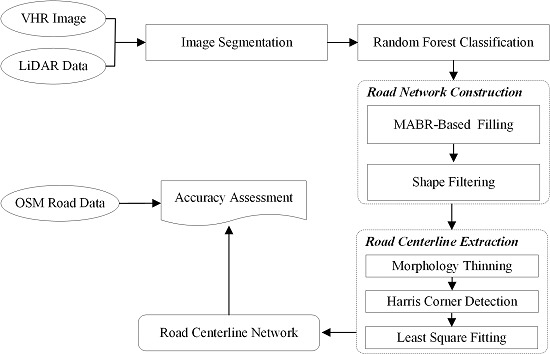

3. Methodology

3.1. Image Segmentation

3.2. Random Forest Classification

3.3. Road Network Construction

3.3.1. MABR-Based Filling

Algorithm 1. The detailed processing steps of the MABR-based filling approach.

Input: Object-based classification

Output: Complete road network

1. For the road, shadow and car classes, morphological opening is performed in turn to break small connections.
2. Label the object-based classification by connected component analysis. Lshadow, Ltree and Lcar denote the connected components of the shadow, tree and car classes, respectively.
3. Identify the adjacency relation between connected components. Ni is the number of road connected components adjacent to connected component i.
4. For each connected component i in Lshadow, Ltree and Lcar:
   - if Ni ≥ 1, extract the valid boundary pixels, create the minimum area bounding rectangle (MABR), and reassign the pixels within the MABR to the road class;
   - otherwise, continue with the next connected component.
5. Remove over-filling by taking the building and bare classes as a mask.
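
Steps 2 to 5 of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the numeric class codes, the 4-connectivity, and the function names (`components`, `convex_hull`, `mabr`, `mabr_fill`) are hypothetical. All pixels of a component stand in for the "valid boundary pixels", whose exact definition is not given in this section, and the morphological opening of step 1 is omitted. The MABR is found by testing one candidate rectangle per convex-hull edge, since a minimum-area rectangle always shares a side direction with a hull edge.

```python
import math
from collections import deque

# Hypothetical class codes; the paper does not define a numeric encoding.
OTHER, ROAD, SHADOW, TREE, CAR, BUILDING, BARE = range(7)
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity (assumed)

def components(grid, cls):
    """Step 2: 4-connected components of one class, as lists of (row, col)."""
    rows, cols = len(grid), len(grid[0])
    seen, found = set(), []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != cls or (r, c) in seen:
                continue
            seen.add((r, c))
            comp, queue = [], deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for dy, dx in NEIGHBOURS:
                    q = (y + dy, x + dx)
                    if (0 <= q[0] < rows and 0 <= q[1] < cols
                            and grid[q[0]][q[1]] == cls and q not in seen):
                        seen.add(q)
                        queue.append(q)
            found.append(comp)
    return found

def convex_hull(points):
    """Andrew's monotone chain; returns the hull vertices in order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]
    return half(pts) + half(reversed(pts))

def mabr(points):
    """Minimum area bounding rectangle via one candidate per hull edge.
    Returns the unit axis u and the extents along u and along its normal."""
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        a, b = hull[i], hull[(i + 1) % len(hull)]
        norm = math.hypot(b[0] - a[0], b[1] - a[1])
        u = ((b[0] - a[0]) / norm, (b[1] - a[1]) / norm) if norm else (1.0, 0.0)
        us = [p[0] * u[0] + p[1] * u[1] for p in hull]
        vs = [p[1] * u[0] - p[0] * u[1] for p in hull]
        area = (max(us) - min(us)) * (max(vs) - min(vs))
        if best is None or area < best[0]:
            best = (area, u, min(us), max(us), min(vs), max(vs))
    return best[1:]

def mabr_fill(grid, eps=1e-9):
    """Steps 2-5 on a 2-D list of class codes; returns a new grid."""
    road_id = {px: k for k, comp in enumerate(components(grid, ROAD)) for px in comp}
    out = [row[:] for row in grid]
    for cls in (SHADOW, TREE, CAR):
        for comp in components(grid, cls):
            # Step 3: N_i = number of distinct road components touching component i.
            n_i = len({road_id[(y + dy, x + dx)] for y, x in comp
                       for dy, dx in NEIGHBOURS if (y + dy, x + dx) in road_id})
            if n_i < 1:
                continue
            # Step 4: all component pixels stand in for the "valid boundary pixels".
            u, lo_u, hi_u, lo_v, hi_v = mabr(comp)
            for y, row in enumerate(grid):  # whole-grid scan keeps the sketch short
                for x, code in enumerate(row):
                    pu, pv = y * u[0] + x * u[1], x * u[0] - y * u[1]
                    inside = (lo_u - eps <= pu <= hi_u + eps
                              and lo_v - eps <= pv <= hi_v + eps)
                    # Step 5: building and bare pixels act as a mask.
                    if inside and code not in (BUILDING, BARE):
                        out[y][x] = ROAD
    return out
```

For example, a U-shaped shadow that interrupts a road is closed into a rectangle of road pixels, while a tree component that touches no road pixel is left unchanged. Scanning the whole grid for every component is deliberately naive; a practical version would restrict the scan to the bounding box of the rectangle's corners.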

3.3.2. Shape Filtering

3.4. Road Centerline Extraction

Algorithm 2. Road centerline extraction by the MHL approach.

Input: Complex road network

Output: Accurate road centerline network

1. Extract the initial road centerline network by morphological thinning.
2. Decompose the initial road centerline network into road centerline segments using Harris corner detection.
3. Link road centerline segments that have similar directions and are spatially adjacent.
4. Remove short road centerline segments whose length is less than the average road width.
5. Fit each road centerline segment by least-squares fitting.
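
Steps 4 and 5 of Algorithm 2 can be illustrated with a short Python sketch. It assumes that each centerline segment is an ordered list of (x, y) pixel coordinates and that the average road width is supplied as `mean_road_width`; both the data layout and the function names are hypothetical. This section does not state which least-squares variant is used, so the sketch assumes an orthogonal (total) least-squares line, which also behaves well for vertical road segments where an ordinary y = ax + b fit fails.

```python
import math

def segment_length(points):
    """Polyline length of an ordered list of (x, y) coordinates."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

def fit_line(points):
    """Orthogonal least-squares line through the points.
    Returns the centroid and a unit direction vector (principal axis)."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    sxx = sum((p[0] - cx) ** 2 for p in points)
    syy = sum((p[1] - cy) ** 2 for p in points)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points)
    # Angle of the axis of largest variance of the 2x2 scatter matrix.
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (cx, cy), (math.cos(theta), math.sin(theta))

def straighten(points):
    """Step 5: replace a segment by the span of its fitted line."""
    (cx, cy), (dx, dy) = fit_line(points)
    ts = [(p[0] - cx) * dx + (p[1] - cy) * dy for p in points]
    t0, t1 = min(ts), max(ts)
    return (cx + t0 * dx, cy + t0 * dy), (cx + t1 * dx, cy + t1 * dy)

def clean_segments(segments, mean_road_width):
    """Step 4 then step 5: drop short segments, then fit the remaining ones."""
    kept = [s for s in segments if segment_length(s) >= mean_road_width]
    return [straighten(s) for s in kept]

segments = [
    [(0, 0), (1, 1), (2, 2), (3, 3)],   # diagonal segment, length 3*sqrt(2)
    [(0, 0), (1, 0)],                   # short spur, length 1
    [(2, 0), (2, 1), (2, 2), (2, 5)],   # vertical segment, length 5
]
fitted = clean_segments(segments, mean_road_width=3.0)
```

With a mean road width of 3 pixels, the spur is discarded and the two remaining segments are each reduced to the two endpoints of their fitted line.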

3.5. Method Comparison

3.6. Accuracy Assessment

4. Results

4.1. The New York Dataset

4.2. The Vaihingen Dataset

4.3. The Guangzhou Dataset

5. Discussion

5.1. Effectiveness Analysis of Key Processes

5.2. Parameter Sensitivity Analysis

5.3. Computational Cost Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VHR | Very-High-Resolution |
| LiDAR | Light Detection and Ranging |
| MABR | minimum area bounding rectangle |
| MBR | minimum bounding rectangle |
| FNEA | fractal net evolution approach |
| DSM | digital surface model |
| nDSM | normalized digital surface model |
| DTM | digital terrain model |
| OSM | OpenStreetMap |
| SOLI | skeleton-based object linearity index |
| MHL | morphology thinning, Harris corner detection, and least square fitting |

| Method | Completeness | Correctness | Quality |
|---|---|---|---|
| Proposed method | 0.9306 | 0.9599 | 0.8810 |
| Huang’s method | 0.7939 | 0.9313 | 0.7162 |
| Miao’s method | 0.8653 | 0.8785 | 0.7737 |

| Method | Completeness | Correctness | Quality |
|---|---|---|---|
| Proposed method | 0.9047 | 0.9576 | 0.8490 |
| Huang’s method | 0.8139 | 0.8829 | 0.7007 |
| Miao’s method | 0.8817 | 0.8821 | 0.7870 |

| Method | Completeness | Correctness | Quality |
|---|---|---|---|
| Proposed method | 0.8019 | 0.9354 | 0.7522 |
| Huang’s method | 0.7314 | 0.8211 | 0.6890 |
| Miao’s method | 0.7832 | 0.8726 | 0.7169 |
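
The Completeness, Correctness and Quality columns in the tables above can be related to measured centerline lengths with a short Python sketch. The exact definitions used in the paper are not reproduced in this section, so the sketch assumes the widely used buffer-based length definitions for road extraction. The function name `road_metrics` and its four length arguments are hypothetical, and the numbers in the example are illustrative, not taken from the paper.

```python
def road_metrics(matched_ref, ref_total, matched_ext, ext_total):
    """Length-based road extraction metrics (assumed definitions).

    matched_ref: length of reference centerline lying within the buffer
                 around the extracted centerline
    ref_total:   total length of the reference centerline
    matched_ext: length of extracted centerline lying within the buffer
                 around the reference centerline
    ext_total:   total length of the extracted centerline
    """
    completeness = matched_ref / ref_total
    correctness = matched_ext / ext_total
    # Quality penalises both false extractions and missed reference roads.
    unmatched_ref = ref_total - matched_ref
    quality = matched_ext / (ext_total + unmatched_ref)
    return completeness, correctness, quality

# Illustrative lengths in metres (not from the paper).
completeness, correctness, quality = road_metrics(
    matched_ref=900.0, ref_total=1000.0, matched_ext=950.0, ext_total=1000.0
)
```

Because completeness and correctness are computed from two different matched lengths, quality cannot in general be recovered from the other two columns alone.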

| Processing stage | New York: Proposed method | New York: Huang’s method | New York: Miao’s method | Vaihingen: Proposed method | Vaihingen: Huang’s method | Vaihingen: Miao’s method |
|---|---|---|---|---|---|---|
| Image segmentation | 687 s | 846 s | --- | 529 s | 581 s | --- |
| Other processing | 1585 s | 723 s | 2143 s | 1137 s | 507 s | 1479 s |
| Total | 2272 s | 1569 s | 2143 s | 1666 s | 1088 s | 1479 s |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Z.; Zhang, X.; Sun, Y.; Zhang, P. Road Centerline Extraction from Very-High-Resolution Aerial Image and LiDAR Data Based on Road Connectivity. Remote Sens. 2018, 10, 1284. https://doi.org/10.3390/rs10081284