New Strategies for Time Delay Estimation during System Calibration for UAV-Based GNSS/INS-Assisted Imaging Systems

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Conceptual Basis of Bundle Block Adjustment

- $r_I^m$: ground coordinates of object point $I$

- $r_i^c$: vector connecting the perspective center to image point $i$, i.e., $r_i^c = [\,x_i - x_p - dist_{x_i},\; y_i - y_p - dist_{y_i},\; -c\,]^T$

- $x_p, y_p$: principal point coordinates

- $c$: principal distance

- $dist_{x_i}, dist_{y_i}$: distortion in the x and y directions for image point $i$

- $t$: time of exposure

- $r_b^m(t)$: position of the IMU body frame relative to the mapping reference frame at time $t$, derived from the GNSS/INS integration process

- $R_b^m(t)$: rotation matrix from the IMU body frame to the mapping reference frame at time $t$, derived from the GNSS/INS integration process

- $r_c^b$: lever arm from the camera to the IMU body frame

- $R_c^b$: rotation (boresight) matrix from the camera to the IMU body frame

- $\lambda(i,c,t)$: scale factor for point $i$ captured by camera $c$ at time $t$
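The quantities listed above combine in the standard GNSS/INS-assisted point positioning equation. Writing the object point as $r_I^m$, the image vector as $r_i^c$, the GNSS/INS position and attitude as $r_b^m(t)$ and $R_b^m(t)$, the lever arm as $r_c^b$, the boresight matrix as $R_c^b$ and the scale as $\lambda(i,c,t)$, it reads $r_I^m = r_b^m(t) + R_b^m(t)\,r_c^b + \lambda(i,c,t)\,R_b^m(t)\,R_c^b\,r_i^c$. A time delay matters because $r_b^m(t)$ and $R_b^m(t)$ are looked up at the recorded time tag, so any offset between that tag and the actual exposure propagates directly into $r_I^m$. The sketch below evaluates the equation for one image point. It is a minimal illustration, not the authors' implementation: all function names are hypothetical, and since $\lambda$ cannot be recovered from a single image, it assumes the object point lies on flat ground of known height so the Z row can be solved for the scale.

```python
import numpy as np

def image_vector(x, y, xp, yp, c, dist_x=0.0, dist_y=0.0):
    """r_i^c: vector from the perspective center to image point i (camera frame)."""
    return np.array([x - xp - dist_x, y - yp - dist_y, -c], dtype=float)

def point_positioning(r_b_m, R_b_m, r_c_b, R_c_b, r_i_c, scale):
    """r_I^m = r_b^m(t) + R_b^m(t) r_c^b + scale * R_b^m(t) R_c^b r_i^c."""
    return r_b_m + R_b_m @ r_c_b + scale * (R_b_m @ R_c_b @ r_i_c)

def scale_for_ground_height(r_b_m, R_b_m, r_c_b, R_c_b, r_i_c, z_ground):
    """Solve the Z row for the scale, assuming the object point lies at a
    known height z_ground (flat-terrain assumption made for this sketch)."""
    origin_z = (r_b_m + R_b_m @ r_c_b)[2]      # Z of the perspective center
    ray_z = (R_b_m @ R_c_b @ r_i_c)[2]         # Z component of the image ray
    return (z_ground - origin_z) / ray_z

# Hypothetical example: level platform 40 m above flat ground, zero lever arm,
# camera axes aligned with the body frame, image point 100 px right of the
# principal point, principal distance 1000 px.
r_b_m = np.array([0.0, 0.0, 40.0])
R_b_m = np.eye(3)
r_c_b = np.zeros(3)
R_c_b = np.eye(3)
r_i_c = image_vector(x=100.0, y=0.0, xp=0.0, yp=0.0, c=1000.0)

lam = scale_for_ground_height(r_b_m, R_b_m, r_c_b, R_c_b, r_i_c, z_ground=0.0)
ground = point_positioning(r_b_m, R_b_m, r_c_b, R_c_b, r_i_c, lam)
print(lam, ground)
```

In this example the scale is 40 m / 1000 px, so the 100 px image offset maps to 4 m on the ground. In a real adjustment the scale is eliminated by intersecting rays from several images rather than by assuming a ground height.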

3.2. Direct Approach for Time Delay Estimation

3.3. Optimal Flight Configuration for System Calibration while Considering Time Delay

3.4. Indirect Approach for Time Delay Estimation

4. Experimental Results

4.1. Data Acquisition

4.2. Dataset Description

4.3. Experimental Results and Analysis

4.3.1. DJI M200 Integrated with FLIR Duo Pro R—FLIR Thermal

4.3.2. DJI M200 Integrated with FLIR Duo Pro R—FLIR RGB

4.3.3. DJI M600 Integrated with Sony Alpha 7R (ILCE-7R)

5. Conclusions

- Two approaches, direct and indirect, were shown to estimate the time delay accurately, accommodating users both with and without the ability to modify bundle adjustment code.

- The indirect approach requires no modification of the bundle adjustment code and needs only a single bundle adjustment process.

- Optimal flight configurations for system calibration in the presence of a time delay were rigorously derived and presented.

- The two approaches were shown to be reliable across a variety of platforms and sensors.

- The direct approach achieves accuracy at approximately the level of the system's GSD.

- These accuracies were achieved without the use of ground control points.

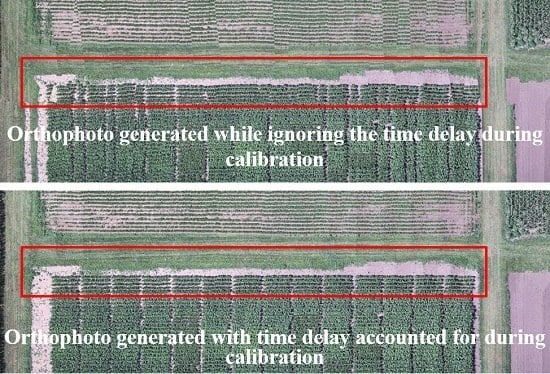

- Both the direct and indirect approaches allow accurate orthophotos to be generated from the original trajectory data.

- Both approaches were shown to handle sensors with relatively large time delays appropriately; therefore, no prior hardware modification is necessary.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| System Parameter | Image Point Location | Flying Direction | Flying Height | Linear Velocity | Angular Velocity |
|---|---|---|---|---|---|
| Lever Arm | NO | YES (except ΔZ) | NO | NO | NO |
| Boresight | YES | YES | YES | NO | NO |
| Time Delay | YES (only in the presence of angular velocities) | YES | YES (only in the presence of angular velocities) | YES | YES |
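The pattern in the table above can be checked qualitatively with a small forward simulation. The sketch below is not the authors' derivation; it assumes level flight, a nadir-looking camera whose axes coincide with the body frame, flat ground at Z = 0, constant velocity and no angular velocity. The principal distance `C` and all function names are hypothetical. It returns the computed-minus-true ground point caused by a biased lever arm, a biased boresight, or an ignored time delay `dt`, where `dt > 0` means the exposure happens after the recorded time (a convention chosen only for this illustration). Because angular velocity is excluded, the "only in the presence of angular velocities" entries are not exercised.

```python
import numpy as np

C = 1000.0  # hypothetical principal distance (pixels)

def rot_z(angle):
    """Rotation about the vertical axis: heading of a level platform (radians)."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def small_rotation(d_omega, d_phi, d_kappa):
    """First-order rotation I + [d_theta]x for small boresight errors (radians)."""
    return np.array([[1.0, -d_kappa, d_phi],
                     [d_kappa, 1.0, -d_omega],
                     [-d_phi, d_omega, 1.0]])

def ground_point(position, heading, lever_arm, boresight, image_xy):
    """Intersect the image ray with flat ground at Z = 0."""
    R = rot_z(heading)
    origin = position + R @ lever_arm
    ray = R @ boresight @ np.array([image_xy[0], image_xy[1], -C])
    lam = -origin[2] / ray[2]  # scale that brings the ray down to Z = 0
    return origin + lam * ray

def discrepancy(heading_deg, height, speed, image_xy=(0.0, 0.0),
                d_lever=(0.0, 0.0, 0.0), d_bore=(0.0, 0.0, 0.0), dt=0.0):
    """Computed minus true ground point for a biased lever arm, a biased
    boresight, or an ignored time delay dt (constant velocity only)."""
    heading = np.radians(heading_deg)
    direction = np.array([np.cos(heading), np.sin(heading), 0.0])
    recorded = np.array([0.0, 0.0, height])     # position at the recorded time
    actual = recorded + speed * dt * direction  # position at the actual exposure
    truth = ground_point(actual, heading, np.zeros(3), np.eye(3), image_xy)
    computed = ground_point(recorded, heading, np.asarray(d_lever, dtype=float),
                            small_rotation(*d_bore), image_xy)
    return computed - truth

# Representative delay magnitude (s); the sign convention need not match the paper's.
dt = -0.268
print(discrepancy(0, 40, 5.4, dt=dt), discrepancy(180, 40, 5.4, dt=dt))
```

Under these assumptions the time-delay error (about 1.45 m here) flips sign with flying direction and scales with speed but not with height; a horizontal lever-arm bias flips with direction but is height independent; and a boresight bias grows with height and, for Δκ, appears only away from the image center.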

| Sensor | Boresight Δω (°) | Boresight Δφ (°) | Boresight Δκ (°) | Lever Arm Δx (m) | Lever Arm Δy (m) | Lever Arm Δz (m) | Angular FOV (°) |
|---|---|---|---|---|---|---|---|
| FLIR—Thermal | 180 | 0 | −90 | 0.045 | −0.015 | 0.045 | 32 × 26 |
| FLIR—RGB | | | | 0.045 | 0.025 | 0.050 | 57 × 42 |
| Sony—RGB | 180 | 0 | −90 | 0.260 | 0.026 | −0.010 | 54 × 38 |

| Date | Sensor | Altitude above Ground | Ground Speed | GSD Thermal | GSD RGB | Overlap Thermal/RGB | Sidelap Thermal/RGB | Number of Flight Lines | Number of Images |
|---|---|---|---|---|---|---|---|---|---|
| July 25 2018 | FLIR Duo Pro R | 20 m | 2.7 m/s | 1.8 cm | 0.7 cm | 70/80% | 70/80% | 6 | 284 |
| July 25 2018 | FLIR Duo Pro R | 40 m | 5.4 m/s | 3.6 cm | 1.4 cm | 70/80% | 70/80% | 6 | 164 |
| Sept. 14 2018 | FLIR Duo Pro R | 20 m | 2.7 m/s | 1.8 cm | 0.7 cm | 70/80% | 70/80% | 6 | 294 |
| Sept. 14 2018 | FLIR Duo Pro R | 40 m | 5.4 m/s | 3.6 cm | 1.4 cm | 70/80% | 70/80% | 6 | 168 |
| May 05 2019 | Sony A7R | 20 m | 2.7 m/s | - | 0.28 cm | 70% | 82% | 6 | 198 |
| May 05 2019 | Sony A7R | 40 m | 5.4 m/s | - | 0.56 cm | 70% | 82% | 6 | 116 |

| Sensor | Estimated c (pixel) | Estimated $x_p$ (pixel) | Estimated $y_p$ (pixel) | Estimated k1 (pixel⁻²) | Estimated k2 (pixel⁻⁴) | Estimated p1 (pixel⁻¹) | Estimated p2 (pixel⁻²) |
|---|---|---|---|---|---|---|---|
| Thermal—FLIR | 1131.96 | | | 3.015 × 10⁻⁷ | 9.998 × 10⁻¹⁴ | −1.992 × 10⁻⁶ | 2.302 × 10⁻⁶ |
| RGB—FLIR | 4122.26 | | | −2.429 × 10⁻⁸ | −1.250 × 10⁻¹⁵ | 1.576 × 10⁻⁷ | −2.693 × 10⁻⁷ |
| RGB—Sony | 7436.44 | | | 7.771 × 10⁻¹⁰ | −6.557 × 10⁻¹⁷ | 1.906 × 10⁻⁷ | 2.702 × 10⁻⁷ |
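The distortion coefficients in the table above feed the $dist_x$, $dist_y$ terms of the image vector. The sketch below assumes the widely used Brown-Conrady form with two radial (k1, k2) and two decentering (p1, p2) coefficients. The exact form, sign convention and p1/p2 pairing used in the paper are not stated in this section (the listed unit for p2 hints that it may differ), so the formula and the function name are assumptions. The principal-point values are missing from the table, so they default to zero here, and the image points are hypothetical.

```python
def brown_distortion(x, y, k1, k2, p1, p2, xp=0.0, yp=0.0):
    """Return (dist_x, dist_y) in pixels for an image point (x, y) in pixels.

    Assumed Brown-Conrady form: radial terms k1 r^2 + k2 r^4 plus
    decentering terms p1, p2, with coordinates reduced to the principal point.
    """
    xb, yb = x - xp, y - yp
    r2 = xb * xb + yb * yb
    radial = k1 * r2 + k2 * r2 * r2
    dist_x = xb * radial + p1 * (r2 + 2.0 * xb * xb) + 2.0 * p2 * xb * yb
    dist_y = yb * radial + p2 * (r2 + 2.0 * yb * yb) + 2.0 * p1 * xb * yb
    return dist_x, dist_y

# Thermal FLIR coefficients from the table; x_p, y_p taken as 0 (not available).
thermal = {"k1": 3.015e-7, "k2": 9.998e-14, "p1": -1.992e-6, "p2": 2.302e-6}
for point in [(0.0, 0.0), (160.0, 0.0), (320.0, 256.0)]:  # hypothetical points
    dx, dy = brown_distortion(*point, **thermal)
    print(f"{point}: dist_x = {dx:.2f} px, dist_y = {dy:.2f} px")
```

Running it shows how quickly the radial term grows toward the image corners, which is why these parameters are estimated rather than ignored.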

| Dataset | Estimated Time Delay Δt (ms) | Estimated Lever Arm ΔX (m) | Estimated Lever Arm ΔY (m) | Estimated Boresight Δω (°) | Estimated Boresight Δφ (°) | Estimated Boresight Δκ (°) | Square Root of A-Posteriori Variance Factor (pixel) |
|---|---|---|---|---|---|---|---|
| Ignoring Time Delay (bundle adjustment) | | | | | | | |
| July 25th | N/A | N/A | N/A | 179.12 | 1.26 | −90.63 | 0.67 |
| Sept 14th | N/A | N/A | N/A | 179.05 | 1.23 | −90.54 | 0.84 |
| Direct Approach (mini bundle adjustment) | | | | | | | |
| July 25th | −268 ± 2.6 | 0.114 ± 0.024 | −0.032 ± 0.024 | 179.03 ± 0.055 | −0.395 ± 0.052 | −90.82 ± 0.093 | 4.63 |
| Sept 14th | −261 ± 1.41 | 0.100 ± 0.015 | −0.038 ± 0.014 | 178.99 ± 0.030 | −0.508 ± 0.028 | −90.50 ± 0.060 | 2.19 |
| Indirect Approach (bundle adjustment) | | | | | | | |
| July 25th: Operation 1 | N/A | −1.46 | −0.015 (constant) | 179.11 | −0.56 | −90.62 | 0.48 |
| July 25th: Operation 2 | −279 * | 0.066 | −0.29 | 178.68 | −0.56 | −90.72 | 0.47 |
| Sept 14th: Operation 1 | N/A | −1.44 | −0.015 (constant) | 179.05 | −0.58 | −90.55 | 0.75 |
| Sept 14th: Operation 2 | −275 * | 0.142 | −0.27 | 178.68 | −0.50 | −90.91 | 0.72 |
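To put the estimated delays in perspective, a first-order sketch: if the platform moves at ground speed v and angular velocity is neglected, an unmodeled delay Δt displaces the exposure station by roughly v·Δt along track. The speeds and thermal GSDs below come from the dataset table and the delay from the direct-approach estimate for the thermal camera on July 25th; the function name is hypothetical, and this is a back-of-the-envelope check, not the paper's model.

```python
def along_track_shift(time_delay_ms, ground_speed_mps):
    """First-order along-track displacement (m): v * dt, angular velocity ignored."""
    return ground_speed_mps * time_delay_ms / 1000.0

dt_ms = -268.0  # thermal camera, July 25th, direct approach estimate
for height_m, speed_mps, gsd_m in [(20, 2.7, 0.018), (40, 5.4, 0.036)]:
    shift = along_track_shift(dt_ms, speed_mps)
    print(f"{height_m} m flight: shift = {shift:.2f} m = {shift / gsd_m:.1f} GSD")
```

Both flights give a shift of about 40 thermal GSD (−0.72 m at 20 m and −1.45 m at 40 m), because the ground speed was doubled together with the flying height. By the same arithmetic, the Sony delay of −1.25 ms at 2.7 m/s amounts to about 3.4 mm, roughly 1.2 GSD at 0.28 cm.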

| | ΔX | ΔY | ΔZ (not estimated) | Δω | Δφ | Δκ | Δt |
|---|---|---|---|---|---|---|---|
| ΔX | 1 | | | | | | |
| ΔY | −0.001 | 1 | | | | | |
| ΔZ (not estimated) | 0 | 0 | 1 | | | | |
| Δω | 0.015 | −0.945 | 0 | 1 | | | |
| Δφ | 0.885 | −0.013 | 0 | 0.009 | 1 | | |
| Δκ | 0.011 | −0.026 | 0 | 0.024 | −0.011 | 1 | |
| Δt | −0.023 | −0.067 | 0 | 0.022 | 0.326 | −0.025 | 1 |
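Correlation coefficients like those in the matrix above are normally derived from the a-posteriori covariance matrix of the estimated parameters as $\rho_{ij} = \sigma_{ij} / (\sigma_i \sigma_j)$. Large magnitudes such as the −0.945 and 0.885 entries, which pair lever-arm components with boresight angles, indicate parameters that the flight configuration separates only weakly. A minimal sketch follows; the function name and the 2 × 2 covariance values are hypothetical, not taken from the paper. A parameter held fixed (such as ΔZ here) has zero variance and would be left out before dividing; the table simply reports 0 off the diagonal and 1 on it.

```python
import numpy as np

def correlation_from_covariance(cov):
    """Convert a covariance matrix into a correlation matrix."""
    cov = np.asarray(cov, dtype=float)
    sigma = np.sqrt(np.diag(cov))        # standard deviation of each parameter
    return cov / np.outer(sigma, sigma)  # rho_ij = sigma_ij / (sigma_i * sigma_j)

# Hypothetical covariance of (lever arm dY [m], boresight d_omega [deg]).
cov = [[4.0e-4, -1.8e-5],
       [-1.8e-5, 1.0e-6]]
print(correlation_from_covariance(cov))
```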

| Without Considering Time Delay | Thermal—July | | | Thermal—Sept | | |
|---|---|---|---|---|---|---|
| N1 | 0.03 | 0.03 | −0.18 | 0.01 | −0.05 | −0.06 |
| N2 | 0.05 | 0.06 | −0.29 | −0.06 | −0.06 | −0.12 |
| N3 | 0.03 | 0.08 | −0.51 | −0.03 | −0.01 | −0.03 |
| N4 | −0.04 | 0.12 | −0.12 | −0.02 | −0.01 | 0.02 |
| N5 | 0.04 | 0.19 | 0.08 | 0.070 | 0.02 | 0.00 |
| Mean | 0.02 | 0.10 | −0.20 | −0.01 | −0.02 | −0.04 |
| Standard Deviation | 0.04 | 0.06 | 0.22 | 0.05 | 0.03 | 0.05 |
| RMSE | 0.04 | 0.11 | 0.28 | 0.05 | 0.04 | 0.06 |

| Direct Approach | Thermal—July | | | Thermal—Sept | | |
|---|---|---|---|---|---|---|
| N1 | −0.02 | 0.05 | 0.14 | −0.01 | −0.02 | 0.24 |
| N2 | 0.01 | 0.03 | 0.25 | 0.01 | 0.00 | 0.31 |
| N3 | 0.01 | 0.03 | 0.12 | 0.00 | −0.00 | 0.27 |
| N4 | −0.00 | 0.03 | 0.28 | 0.00 | 0.01 | 0.15 |
| N5 | −0.04 | 0.02 | 0.12 | −0.003 | 0.01 | 0.16 |
| Mean | −0.01 | 0.03 | 0.18 | 0.00 | 0.00 | 0.22 |
| Standard Deviation | 0.02 | 0.01 | 0.08 | 0.01 | 0.01 | 0.07 |
| RMSE | 0.02 | 0.03 | 0.19 | 0.01 | 0.01 | 0.23 |

| Indirect Approach | Thermal—July | | | Thermal—Sept | | |
|---|---|---|---|---|---|---|
| N1 | 0.01 | 0.05 | −0.11 | −0.061 | −0.02 | 0.14 |
| N2 | −0.01 | 0.05 | −0.06 | −0.078 | −0.03 | 0.12 |
| N3 | 0.01 | 0.05 | −0.03 | −0.03 | 0.01 | 0.21 |
| N4 | 0.00 | 0.05 | 0.04 | −0.0 | −0.00 | 0.16 |
| N5 | 0.04 | 0.07 | 0.10 | 0.07 | 0.01 | 0.20 |
| Mean | 0.01 | 0.05 | −0.01 | −0.02 | −0.01 | 0.17 |
| Standard Deviation | 0.02 | 0.01 | 0.08 | 0.06 | 0.02 | 0.04 |
| RMSE | 0.02 | 0.06 | 0.07 | 0.06 | 0.02 | 0.17 |
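The summary rows can be recomputed from the N1 to N5 rows. The sketch below (function name hypothetical) reproduces the printed statistics for the third data column of the July thermal block without time delay, assuming the standard deviation uses n − 1 and the RMSE is taken about zero; with the population form (n) the standard deviation would come out as 0.19 instead of the printed 0.22.

```python
import math

def discrepancy_stats(values):
    """Mean, sample standard deviation (n - 1) and RMSE about zero."""
    n = len(values)
    mean = sum(values) / n
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    rmse = math.sqrt(sum(v * v for v in values) / n)
    return mean, std, rmse

# Third data column, July thermal block without considering time delay (N1..N5).
column = [-0.18, -0.29, -0.51, -0.12, 0.08]
mean, std, rmse = discrepancy_stats(column)
print(f"mean = {mean:.2f}, std = {std:.2f}, RMSE = {rmse:.2f}")
```

Since RMSE² ≈ mean² + ((n − 1)/n)·std², a large RMSE with a large mean, as in this column, points to a systematic offset rather than random scatter.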

| Direct Approach | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| July 25th | 0.018 | 0.018 | 0.096 |
| Sept 14th | 0.011 | 0.011 | 0.072 |

| Dataset | Mean—X/Y (m) | Standard Deviation—X/Y (m) | RMSE—X/Y (m) |
|---|---|---|---|
| Ignoring Time Delay—Original Trajectory Data | | | |
| July 25th | −0.12/−0.07 | 0.24/0.11 | 0.25/0.12 |
| Sept 14th | −0.05/−0.12 | 0.21/0.24 | 0.23/0.27 |
| Ignoring Time Delay—Refined Trajectory Data | | | |
| July 25th | −0.02/−0.10 | 0.03/0.08 | 0.03/0.13 |
| Sept 14th | 0.02/0.02 | 0.04/0.03 | 0.05/0.03 |
| Direct Approach—Original Trajectory Data | | | |
| July 25th | −0.04/−0.07 | 0.09/0.03 | 0.10/0.07 |
| Sept 14th | −0.09/0.03 | 0.14/0.03 | 0.15/0.03 |
| Indirect Approach—Original Trajectory Data | | | |
| July 25th | −0.04/−0.07 | 0.08/0.03 | 0.08/0.08 |
| Sept 14th | −0.06/−0.06 | 0.14/0.08 | 0.14/0.09 |

| Dataset | Estimated Time Delay Δt (ms) | Estimated Lever Arm ΔX (m) | Estimated Lever Arm ΔY (m) | Estimated Boresight Δω (°) | Estimated Boresight Δφ (°) | Estimated Boresight Δκ (°) | Square Root of A-Posteriori Variance Factor (pixel) |
|---|---|---|---|---|---|---|---|
| Ignoring Time Delay (bundle adjustment) | | | | | | | |
| July 25th | N/A | N/A | N/A | 178.55 | 0.26 | −90.84 | 2.46 |
| Sept 14th | N/A | N/A | N/A | 178.61 | 0.50 | −90.67 | 2.54 |
| Direct Approach (mini bundle adjustment) | | | | | | | |
| July 25th | −205 ± 0.433 | 0.068 ± 0.005 | 0.005 ± 0.005 | 178.57 ± 0.011 | 0.072 ± 0.011 | −90.92 ± 0.014 | 4.78 |
| Sept 14th | −203 ± 0.457 | 0.073 ± 0.005 | 0.0083 ± 0.005 | 178.58 ± 0.012 | 0.119 ± 0.011 | −90.83 ± 0.015 | 4.76 |
| Indirect Approach (bundle adjustment) | | | | | | | |
| July 25th: Operation 1 | N/A | −0.97 | 0.025 (constant) | 178.56 | 0.23 | −90.87 | 2.46 |
| July 25th: Operation 2 | −188 | 0.06 | −0.02 | 178.55 | 0.23 | −90.86 | 2.45 |
| Sept 14th: Operation 1 | N/A | −1.03 | 0.025 (constant) | 178.59 | 0.26 | −90.68 | 2.53 |
| Sept 14th: Operation 2 | −199 | 0.11 | −0.03 | 178.53 | 0.22 | −90.83 | 2.51 |

| Without Considering Time Delay | RGB—July | | | RGB—September | | |
|---|---|---|---|---|---|---|
| N1 | −0.07 | −0.05 | 0.11 | 0.06 | −0.03 | 0.07 |
| N2 | −0.03 | 0.01 | 0.10 | 0.01 | −0.00 | 0.01 |
| N3 | 0.01 | 0.08 | 0.08 | −0.01 | −0.03 | −0.00 |
| N4 | 0.04 | 0.13 | 0.06 | −0.02 | 0.00 | 0.08 |
| N5 | 0.09 | 0.20 | 0.01 | 0.07 | 0.06 | 0.14 |
| Mean | 0.01 | 0.07 | 0.07 | 0.02 | 0.00 | 0.06 |
| Standard Deviation | 0.06 | 0.10 | 0.04 | 0.04 | 0.04 | 0.06 |
| RMSE | 0.06 | 0.11 | 0.08 | 0.04 | 0.03 | 0.08 |

| Direct Approach | RGB—July | | | RGB—September | | |
|---|---|---|---|---|---|---|
| N1 | 0.00 | 0.04 | 0.07 | −0.00 | −0.02 | 0.10 |
| N2 | −0.01 | 0.03 | 0.10 | −0.01 | −0.01 | 0.11 |
| N3 | 0.00 | 0.03 | 0.12 | −0.00 | −0.00 | 0.13 |
| N4 | −0.01 | 0.02 | 0.12 | −0.02 | −0.00 | 0.04 |
| N5 | −0.00 | 0.01 | 0.09 | −0.02 | 0.00 | 0.05 |
| Mean | 0.00 | 0.02 | 0.10 | −0.01 | −0.01 | 0.09 |
| Standard Deviation | 0.01 | 0.01 | 0.02 | 0.01 | 0.01 | 0.04 |
| RMSE | 0.01 | 0.03 | 0.10 | 0.01 | 0.01 | 0.09 |

| Indirect Approach | RGB—July | | | RGB—September | | |
|---|---|---|---|---|---|---|
| N1 | 0.06 | 0.00 | −0.05 | −0.05 | −0.03 | 0.05 |
| N2 | 0.04 | 0.04 | −0.07 | −0.05 | −0.00 | 0.01 |
| N3 | 0.03 | 0.09 | −0.10 | −0.03 | −0.03 | 0.08 |
| N4 | 0.00 | 0.13 | −0.11 | −0.04 | −0.01 | 0.14 |
| N5 | −0.01 | 0.17 | −0.15 | −0.01 | 0.03 | 0.18 |
| Mean | 0.02 | 0.04 | −0.04 | −0.04 | −0.01 | 0.09 |
| Standard Deviation | 0.04 | 0.05 | 0.03 | 0.02 | 0.02 | 0.07 |
| RMSE | 0.04 | 0.07 | 0.05 | 0.04 | 0.02 | 0.11 |

| Direct Approach | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| July 25th | 0.004 | 0.004 | 0.015 |
| Sept 14th | 0.004 | 0.004 | 0.016 |

| Dataset | Mean—X/Y (m) | Standard Deviation—X/Y (m) | RMSE—X/Y (m) |
|---|---|---|---|
| Ignoring Time Delay—Original Trajectory Data | | | |
| July 25th | −1.11/0.06 | 0.02/0.06 | 1.12/0.07 |
| Sept 14th | −0.38/−0.05 | 0.77/0.03 | 0.78/0.06 |
| Ignoring Time Delay—Refined Trajectory Data | | | |
| July 25th | −0.01/−0.10 | 0.07/0.09 | 0.08/0.13 |
| Sept 14th | −0.01/−0.01 | 0.05/0.04 | 0.05/0.04 |
| Direct Approach—Original Trajectory Data | | | |
| July 25th | −0.04/−0.07 | 0.06/0.02 | 0.06/0.07 |
| Sept 14th | 0.01/0.01 | 0.01/0.03 | 0.01/0.03 |
| Indirect Approach—Original Trajectory Data | | | |
| July 25th | −0.03/0.07 | 0.04/0.03 | 0.05/0.08 |
| Sept 14th | −0.01/0.01 | 0.03/0.03 | 0.03/0.03 |

| Dataset | Estimated Time Delay Δt (ms) | Estimated Lever Arm ΔX (m) | Estimated Lever Arm ΔY (m) | Estimated Boresight Δω (°) | Estimated Boresight Δφ (°) | Estimated Boresight Δκ (°) | Square Root of A-Posteriori Variance Factor (pixel) |
|---|---|---|---|---|---|---|---|
| Ignoring Time Delay (bundle adjustment) | | | | | | | |
| May 06th | N/A | N/A | N/A | 178.29 | −0.09 | −91.12 | 1.56 |
| Direct Approach (mini bundle adjustment) | | | | | | | |
| May 06th | −1.25 ± 0.48 | 0.267 ± 0.004 | 0.019 ± 0.004 | 179.32 ± 0.011 | −0.097 ± 0.010 | −91.08 ± 0.013 | 5.61 |
| Indirect Approach (bundle adjustment) | | | | | | | |
| May 06th: Operation 1 | N/A | 0.268 | 0.026 (constant) | 179.29 | −0.09 | −91.12 | 1.56 |
| May 06th: Operation 2 | −0.5 | 0.27 | 0.002 | 179.29 | −0.09 | −91.12 | 1.56 |

| | Ignoring Time Delay | | | Direct Approach | | | Indirect Approach | | |
|---|---|---|---|---|---|---|---|---|---|
| N1 | −0.04 | 0.02 | −0.00 | −0.01 | 0.02 | −0.03 | −0.04 | 0.02 | −0.00 |
| N2 | −0.03 | 0.00 | −0.05 | −0.01 | 0.01 | −0.03 | −0.03 | 0.01 | −0.05 |
| N3 | −0.03 | 0.02 | −0.06 | −0.02 | 0.02 | −0.02 | −0.03 | 0.02 | −0.06 |
| N4 | −0.01 | 0.02 | −0.07 | −0.01 | 0.01 | −0.03 | −0.01 | 0.02 | −0.07 |
| N5 | 0.02 | 0.01 | −0.01 | −0.01 | −0.00 | −0.00 | 0.02 | 0.01 | −0.01 |
| Mean | −0.02 | 0.02 | −0.04 | −0.01 | 0.01 | −0.02 | −0.02 | 0.02 | −0.04 |
| Standard Deviation | 0.02 | 0.01 | 0.03 | 0.00 | 0.01 | 0.01 | 0.02 | 0.01 | 0.03 |
| RMSE | 0.03 | 0.02 | 0.05 | 0.01 | 0.01 | 0.03 | 0.03 | 0.02 | 0.05 |

| Direct Approach | X (m) | Y (m) | Z (m) |
|---|---|---|---|
| May 06th | 0.003 | 0.003 | 0.013 |

| Dataset | Mean—X/Y (m) | Standard Deviation—X/Y (m) | RMSE—X/Y (m) |
|---|---|---|---|
| Ignoring Time Delay—Original Trajectory Data | | | |
| May 06th | 0.03/−0.02 | 0.02/0.02 | 0.04/0.03 |
| Ignoring Time Delay—Adjusted Trajectory Data | | | |
| May 06th | 0.02/−0.02 | 0.02/0.01 | 0.03/0.02 |
| Direct Approach—Original Trajectory Data | | | |
| May 06th | 0.03/−0.02 | 0.01/0.02 | 0.03/0.03 |
| Indirect Approach—Original Trajectory Data | | | |
| May 06th | 0.03/−0.02 | 0.02/0.02 | 0.03/0.03 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

LaForest, L.; Hasheminasab, S.M.; Zhou, T.; Flatt, J.E.; Habib, A. New Strategies for Time Delay Estimation during System Calibration for UAV-Based GNSS/INS-Assisted Imaging Systems. Remote Sens. 2019, 11, 1811. https://doi.org/10.3390/rs11151811
