1. Introduction

Land cover change detection (LCCD) with bi-temporal remote sensing images is a popular technique in remote sensing applications [1,2,3]. This technique focuses on identifying land cover changes using two or more remote sensing images of the same geographic area acquired on different dates [1,4,5,6]. LCCD plays an important role in large-scale land use analysis [7,8,9], environmental monitoring and evaluation [10,11], natural hazard assessment [12,13,14], and natural resource inventory [15]. However, issues such as “salt-and-pepper” noise in the detection results, especially for very high resolution (VHR) remote sensing images [16,17,18], pose a challenge to the practical application of LCCD.

LCCD with bi-temporal remote sensing images can be viewed as a pattern recognition problem in which pixels are labelled as one of two classes: changed or unchanged [19]. Existing LCCD methods can be classified into two types: binary change detection and “from–to” change detection. A binary change detection method measures the change magnitude by comparing the bi-temporal images, for example through image ratio [20], image difference [21], or change vector analysis [22,23,24]. In these methods, a threshold is applied to separate the pixels of the change magnitude image (CMI) into “changed” and “unchanged”. Binary change detection is straightforward and easy to operate; however, it provides only the size and distribution of the changed areas, without further details on the type of change [25,26]. In contrast, “from–to” change detection can directly recognize the type of change, that is, from one land cover class to another. However, most “from–to” methods depend on the performance of the corresponding land cover classification [22,27,28,29].
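A minimal sketch of binary change detection as described above, assuming co-registered images stored as NumPy arrays of shape (rows, cols, bands). The change magnitude is the change vector analysis norm of the per-pixel spectral difference; Otsu's method is swapped in here as one common way to choose the binary threshold, since the text does not prescribe a thresholding rule. All function names are illustrative and are not taken from the cited works.

```python
import numpy as np


def change_magnitude(img_t1: np.ndarray, img_t2: np.ndarray) -> np.ndarray:
    """CVA change magnitude image (CMI): Euclidean norm of the per-pixel
    spectral difference vector. Inputs have shape (rows, cols, bands)."""
    diff = img_t2.astype(np.float64) - img_t1.astype(np.float64)
    return np.sqrt(np.sum(diff ** 2, axis=-1))


def otsu_threshold(cmi: np.ndarray, bins: int = 256) -> float:
    """Otsu's method: pick the histogram split that maximises the
    between-class variance. Values >= the returned threshold form the upper class."""
    hist, edges = np.histogram(cmi.ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                        # pixel count in bins 0..k
    w1 = hist.sum() - w0                        # pixel count in bins k+1..end
    cum = np.cumsum(hist * centers)
    mu0 = cum / np.maximum(w0, 1)               # mean of the lower class
    mu1 = (cum[-1] - cum) / np.maximum(w1, 1)   # mean of the upper class
    between = w0 * w1 * (mu0 - mu1) ** 2
    k = int(np.argmax(between))
    return float(edges[k + 1])                  # upper class starts after bin k


def binary_change_map(img_t1: np.ndarray, img_t2: np.ndarray) -> np.ndarray:
    """Threshold the CMI into 1 = changed, 0 = unchanged."""
    cmi = change_magnitude(img_t1, img_t2)
    return (cmi >= otsu_threshold(cmi)).astype(np.uint8)


# Usage: a 4 x 4 block changes between two synthetic 3-band images.
t1 = np.zeros((10, 10, 3))
t2 = t1.copy()
t2[2:6, 2:6, :] = 50.0
bcdm = binary_change_map(t1, t2)
print(int(bcdm.sum()))  # 16
```

On real VHR data the CMI histogram is far less clean, which is exactly where the salt-and-pepper noise discussed in this section appears.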

In recent decades, a considerable number of studies have focused on LCCD based on VHR remote sensing images [30,31,32]. VHR remote sensing images depict ground targets in more detail than medium- and low-resolution images. However, VHR images usually have few spectral bands, and their higher spatial resolution typically brings larger intra-class variance [33,34,35]. Although satellite sensors such as WorldView-3 have collected VHR images with eight spectral bands (red, red edge, coastal, blue, green, yellow, near-IR1, and near-IR2) in recent years, such images still exhibit large intra-class variance [36,37]. Furthermore, when LCCD is applied to VHR bi-temporal images, the two images acquired on different dates are usually inconsistent in atmospheric conditions, sun elevation, or seasonal phenology; these differences introduce “pseudo-changes” into the detection map [38,39]. To address this problem, contextual spatial features are usually adopted to smooth the noise and improve detection accuracy. For example, Celik et al. proposed a method based on principal component analysis and k-means clustering (PCA_Kmeans), which divides the CMI into h × h overlapping blocks [40]. Fuzzy clustering was integrated into change vector analysis for LCCD (CVA_FCM) [41]. A semi-supervised fuzzy c-means clustering algorithm (Semi_FCM) was developed to separate the difference image into changed and unchanged pixels [42]. Zhang et al. presented an unsupervised change detection (CD) method for remote sensing images using level set evolution with local uncertainty constraints (LSELUC) [43]. The level set method was also developed for landslide inventory mapping with VHR remote sensing images [13]. The Markov random field is another effective way of employing contextual information to improve the performance of LCCD with VHR remote sensing images [44,45,46]. Although these methods can reduce the noise in the detection map, they are sensitive to the size of the contextual neighbourhood, and determining the contextual scale depends on the mathematical model used and on the experience of the practitioner.
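The contextual idea behind PCA_Kmeans can be sketched as follows. This is a simplified illustration, not Celik et al.'s exact implementation: it assumes a 2-D difference image, builds one feature vector from the h × h neighbourhood of every pixel, projects the vectors onto their leading principal components (computed here from all neighbourhoods rather than from separate blocks), and splits them with a plain two-cluster k-means seeded at the darkest and brightest pixels. The cluster with the larger mean difference value is labelled as changed; the function name and defaults are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def pca_kmeans_change(diff_img: np.ndarray, h: int = 3,
                      n_components: int = 3, iters: int = 20) -> np.ndarray:
    """Simplified PCA + 2-means change detection on a 2-D difference image."""
    values = diff_img.astype(np.float64).ravel()
    r = h // 2
    padded = np.pad(diff_img.astype(np.float64), r, mode="edge")
    # Feature vector of each pixel: its (overlapping) h x h neighbourhood.
    patches = sliding_window_view(padded, (h, h)).reshape(-1, h * h)
    centred = patches - patches.mean(axis=0)
    # Principal axes from the SVD of the centred neighbourhood vectors.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    feats = centred @ vt[:n_components].T
    # Two-cluster k-means, seeded with the darkest and brightest pixels.
    order = np.argsort(values)
    centres = feats[[order[0], order[-1]]].copy()
    for _ in range(iters):
        dist = ((feats[:, None, :] - centres[None, :, :]) ** 2).sum(axis=-1)
        assign = dist.argmin(axis=1)
        for k in range(2):
            if np.any(assign == k):
                centres[k] = feats[assign == k].mean(axis=0)
    # The cluster with the larger mean difference value is "changed".
    means = [values[assign == k].mean() if np.any(assign == k) else -np.inf
             for k in range(2)]
    changed = int(np.argmax(means))
    return (assign == changed).reshape(diff_img.shape).astype(np.uint8)


# Usage: a bright 6 x 6 block in an otherwise zero difference image.
d = np.zeros((12, 12))
d[3:9, 3:9] = 10.0
mask = pca_kmeans_change(d)
```

The neighbourhood size h plays the role of the contextual scale mentioned above: it must be chosen by hand, which is the sensitivity this paragraph points out.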

Apart from the aforementioned spatial context-based LCCD methods, which are referred to as pre-processing LCCD techniques in this study, a number of studies have reported that post-processing can further improve the performance and accuracy of LCCD [22,47]. Post-processing LCCD methods focus on refining the initial detection map. For example, post-processing with majority voting (MV) has played an important role in improving the raw classification of remote sensing images [48,49]. A general post-processing classification framework (GPCF) was proposed in [50] to smooth the noise in the initial classification map. Inspired by post-processing work in image classification, our previous study [51] developed an object-based expectation maximization (OBEM) post-processing approach to refine raw LCCD results, which confirmed that post-processing can effectively improve the performance of LCCD.
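Majority-voting post-processing with a regular sliding window can be sketched as below, assuming a binary change map (1 = changed, 0 = unchanged) and an odd square window. The name `majority_filter` and the edge-replicating border handling are illustrative choices, not details taken from MV [48,49].

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def majority_filter(bcdm: np.ndarray, window: int = 3) -> np.ndarray:
    """Relabel each pixel with the majority label of its window x window neighbourhood."""
    if window % 2 == 0:
        raise ValueError("window must be odd so that a strict majority always exists")
    r = window // 2
    # Replicate border pixels so that every window is full-sized.
    padded = np.pad(bcdm.astype(np.int32), r, mode="edge")
    # Number of "changed" pixels in the window centred on each original pixel.
    counts = sliding_window_view(padded, (window, window)).sum(axis=(-2, -1))
    return (2 * counts > window * window).astype(np.uint8)


# Usage: an isolated "changed" pixel is removed and a one-pixel hole is filled.
noisy = np.zeros((9, 9), dtype=np.uint8)
noisy[4, 4] = 1
holed = np.ones((9, 9), dtype=np.uint8)
holed[4, 4] = 0
print(majority_filter(noisy).sum(), majority_filter(holed).min())  # 0 1
```

Because the window is a fixed square, corners of genuine changed objects are eroded as well, which illustrates why a regular window cannot adapt to targets of different shapes and sizes.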

A review of LCCD techniques with remote sensing images over the past decades [1,2,20,21,52] shows that most LCCD methods concentrate on extracting and using a single feature to measure the change magnitude between bi-temporal images. Moreover, if these methods are regarded as pre-processing LCCD techniques, the corresponding post-processing techniques are still lacking. As LCCD with VHR images has become increasingly challenging in recent years, many initial detection results cannot satisfy the requirements of practical applications because of the large amount of salt-and-pepper noise in the raw change detection maps. Pre- and post-processing LCCD techniques should therefore complement each other to improve change detection performance and accuracy. This complementarity is the basic motivation of our work.

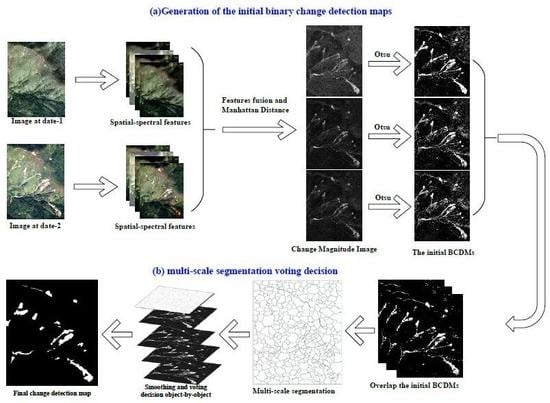

Inspired by the effectiveness of spatial–spectral feature fusion for image classification [34,53,54,55] and by the post-processing LCCD method [51], this study develops a novel LCCD approach to improve the performance of LCCD with VHR bi-temporal remote sensing images. The contribution of the proposed framework lies in constructing a new workflow from existing techniques, including spatial–spectral feature fusion and multi-scale segmentation majority voting. Compared with our previous works, MV [49] and OBEM [51], the proposed framework differs and improves in two respects:

- 1) When binary change detection is viewed as a pre-processing technique, MV [49] and GPCF [50] can be applied to smooth the noise and improve the initial detection performance. However, the regular sliding window used by these techniques cannot adapt to ground targets with different shapes and sizes. Hence, in the proposed framework, in addition to the spatial–spectral feature fusion strategy, the set of initial detection maps is fused and smoothed using multi-scale segments that conform to the size and shape of the ground targets.

- 2) In our previous post-processing method, OBEM [51], the best raw change detection result is first chosen from the set of initial detection results, and multi-scale segmentation of the post-event image is then used to smooth the noise in the selected map. In contrast, the proposed framework uses multi-scale segmentation directly to fuse the initial detection results and generates the final change detection map by majority voting.
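The object-constrained voting described in item 2) can be sketched for a single segmentation scale as follows. The sketch assumes the initial binary maps are NumPy arrays of equal shape and that `segments` is an integer label image (non-negative IDs) produced by some segmentation at a chosen scale; the segmentation algorithm itself, and how decisions from several scales are combined, are not shown and would follow the paper's method. Every pixel of a segment receives the label that wins the vote pooled over all maps and all pixels in that segment, with ties going to "unchanged". The function name is hypothetical.

```python
import numpy as np


def segment_majority_vote(bcdms, segments: np.ndarray) -> np.ndarray:
    """Fuse several binary change maps by majority voting inside each segment.

    bcdms: sequence of 2-D arrays (1 = changed, 0 = unchanged), all the same shape.
    segments: 2-D array of non-negative integer segment IDs with that same shape.
    """
    stack = np.stack([np.asarray(m, dtype=np.int64) for m in bcdms])
    n_maps = stack.shape[0]
    labels = segments.ravel()
    # "Changed" votes per segment, pooled over all maps and all pixels.
    changed_votes = np.bincount(labels, weights=stack.sum(axis=0).ravel())
    # Total votes per segment = pixels in the segment x number of maps.
    total_votes = np.bincount(labels) * n_maps
    segment_is_changed = 2 * changed_votes > total_votes  # ties -> unchanged
    # Broadcast each segment's decision back to its pixels.
    return segment_is_changed[segments].astype(np.uint8)


# Usage: two segments (left and right halves) and three noisy initial maps.
segs = np.zeros((4, 4), dtype=np.int64)
segs[:, 2:] = 1
m1 = (segs == 1).astype(np.uint8)
m1[0, 0] = 1  # false alarm in the left segment
m2 = (segs == 1).astype(np.uint8)
m2[3, 3] = 0  # missed pixel in the right segment
m3 = (segs == 1).astype(np.uint8)
fused = segment_majority_vote([m1, m2, m3], segs)
```

Pooling votes over both the maps and the segment's pixels is what lets segment boundaries, rather than a fixed window, decide which pixels share a label.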

Three pairs of VHR remote sensing images depicting real land cover change events were employed to assess the effectiveness of the proposed framework. Four state-of-the-art context-based LCCD methods and three post-processing LCCD methods were compared with the proposed framework. The experiments were conducted on bi-temporal remote sensing images covering real landslide and land use change events. The results indicate that the proposed LCCD framework, which integrates spatial–spectral features with a multi-scale segmentation voting decision, is better suited to change detection than the other state-of-the-art techniques compared.

The rest of this article is divided into four sections. Section 2 describes the proposed methodology. A description of the experimental dataset is given in Section 3. Section 4 presents the details of the experiments and a discussion of the results. Conclusions are drawn in Section 5.

4. Results and Analysis

Five indices were used for the quantitative assessment of the proposed framework: the ratio of false alarms (FA), the ratio of missed alarms (MA), the ratio of total errors (TE), overall accuracy (OA), and the Kappa coefficient (Ka). All five indices were used in the comparative analysis of the experiments.
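The five indices can be computed from the confusion matrix of a binary change map against a reference map. The sketch below assumes the common definitions FA = FP / (FP + TN), MA = FN / (TP + FN), and TE = (FP + FN) / N, where N is the total number of pixels; the exact denominators used in this paper are defined in its methodology and may differ. Values are returned as fractions (multiply by 100 for percentages), and the function name is illustrative.

```python
import numpy as np


def accuracy_indices(reference, prediction) -> dict:
    """FA, MA, TE, OA and Kappa for a binary change map (1 = changed)."""
    ref = np.asarray(reference).astype(bool).ravel()
    pred = np.asarray(prediction).astype(bool).ravel()
    tp = int(np.sum(ref & pred))    # changed and detected
    tn = int(np.sum(~ref & ~pred))  # unchanged and not flagged
    fp = int(np.sum(~ref & pred))   # false alarm
    fn = int(np.sum(ref & ~pred))   # missed change
    n = tp + tn + fp + fn
    oa = (tp + tn) / n
    # Chance agreement from the marginals of the 2 x 2 confusion matrix.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    return {
        "FA": fp / (fp + tn),
        "MA": fn / (tp + fn),
        "TE": (fp + fn) / n,
        "OA": oa,
        "Ka": (oa - pe) / (1 - pe),
    }


ref = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print({k: round(v, 4) for k, v in accuracy_indices(ref, pred).items()})
# {'FA': 0.1667, 'MA': 0.25, 'TE': 0.2, 'OA': 0.8, 'Ka': 0.5833}
```

Under these assumed definitions TE is simply 1 - OA; Kappa adds information by discounting the agreement expected by chance.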

To evaluate the effectiveness of the proposed method, the raw spectral feature alone, three spatial–spectral feature fusion approaches (layer stacking [35], mean weight [60], and adaptive weight [61]), and the proposed framework were applied to the Site A VHR bi-temporal images for comparison.

Table 5 shows that, compared with using the raw spectral feature alone, coupling spatial features with the spectral feature clearly improved the change detection accuracy. For instance, FA improved by about 5.98% when the layer stacking fusion approach [35] was combined with the extended morphological profiles (EMPs) spatial feature extraction approach [57]. Furthermore, the proposed approach achieved the best detection accuracy, outperforming both the spatial–spectral feature fusion-based approaches and the use of the raw spectral feature alone.
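A rough illustration of layer-stacking fusion, in which spatial features are concatenated with the raw bands before the change magnitude is computed. As a stand-in for EMPs, the sketch uses a simplified morphological profile made of plain greyscale openings and closings with odd square windows (not openings and closings by reconstruction, and without the principal-component step of EMPs). The window sizes and function names are assumptions for illustration only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _window_filter(band, size, reducer):
    """Apply np.min or np.max over a size x size window (edge-replicated borders)."""
    r = size // 2
    padded = np.pad(band, r, mode="edge")
    return reducer(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def opening(band, size):
    """Greyscale opening: erosion (min filter) followed by dilation (max filter)."""
    return _window_filter(_window_filter(band, size, np.min), size, np.max)


def closing(band, size):
    """Greyscale closing: dilation followed by erosion."""
    return _window_filter(_window_filter(band, size, np.max), size, np.min)


def layer_stack(img, sizes=(3, 5)):
    """Concatenate the raw bands with an opening/closing profile of every band."""
    img = img.astype(np.float64)
    profile = [op(img[..., b], s)
               for b in range(img.shape[-1])
               for s in sizes
               for op in (opening, closing)]
    return np.concatenate([img, np.stack(profile, axis=-1)], axis=-1)


def stacked_change_magnitude(img_t1, img_t2, sizes=(3, 5)):
    """CMI computed on the layer-stacked spatial-spectral features."""
    diff = layer_stack(img_t2, sizes) - layer_stack(img_t1, sizes)
    return np.sqrt(np.sum(diff ** 2, axis=-1))


t1 = np.zeros((12, 12, 2))
t2 = t1.copy()
t2[8:11, 8:11, :] = 5.0
cmi = stacked_change_magnitude(t1, t2)
print(layer_stack(t1).shape)  # (12, 12, 10)
```

Plain concatenation lets layers with larger value ranges dominate the Euclidean change magnitude; weighting schemes such as the mean-weight and adaptive-weight approaches cited above address that imbalance differently.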

Figure 4 shows that the proposed framework clearly reduced the salt-and-pepper noise present in the results obtained with the raw spectral feature alone and with each spatial–spectral feature fusion-based approach.

To further demonstrate the advantages of the proposed framework, it was compared with state-of-the-art LCCD methods, including PCA_Kmeans [40], CVA_FCM [41], Semi_FCM [42], and LSELUC [43]. Two pairs of VHR remote sensing images were used in this comparison.

Table 6 shows the results of comparing the proposed approach with state-of-the-art methods on the Site B landslide aerial remote sensing images. The advantages of the proposed approach are threefold: (1) Among the state-of-the-art methods, the relatively recent LSELUC approach achieved better accuracy because it considers reliable local spatial information through local uncertainties; however, the proposed framework achieved the best accuracy in terms of FA, MA, TE, OA, and Ka. (2) Different spatial–spectral feature fusion methods, which may affect the performance of the proposed framework differently, were adopted within the framework; the proposed framework achieved the best accuracy regardless of which spatial–spectral feature fusion method was used. (3) For the Site B aerial images, EMPs [57] coupled with the spectral feature achieved the best accuracy within the proposed approach.

The comparisons among the approaches are summarized in the bar charts of Figure 5, which clearly present the advantages of the proposed approach. The visual comparison in Figure 6 further supports these conclusions for the Site B landslide aerial images. Comparisons on the Ji’Nan QuickBird satellite images for detecting land cover and land use change led to similar conclusions; the details are given in Table 7, Figure 7, and Figure 8. The quantitative comparisons and visual results show that the proposed approach achieved the best accuracy in terms of FA, MA, and TE, regardless of which spatial feature extraction approach was employed.

In the third experiment, the proposed approach was compared with MV [49], GPCF [50], and OBEM [51] to further investigate its advantages. The comparative results for the Site B landslide aerial images are shown in Table 8. These comparisons indicate that the proposed approach achieved competitive detection accuracy relative to MV [49], GPCF [50], and OBEM [51]. In addition, the visual results in Figure 9 show that the proposed approach (the fourth column of Figure 9) produced less noise than the others. This finding was further verified by the quantitative comparison in Table 9. A similar conclusion can be reached from the comparisons on the Ji’Nan QB satellite remote sensing images shown in Table 9 and Figure 10.

The sensitivity of the detection accuracy to the parameters of the proposed approach is discussed here, with the aim of extending its potential application. Only the relationship between the segmentation scale and the detection accuracy was examined. The scale parameter controls the size of the segmented objects: a larger scale generates larger segments, which may smooth away the ground details of a target. In contrast, a smaller scale yields smaller segments and preserves more ground detail, but introduces more noise into the detection results. Therefore, the scale should be tuned to the given images.

Figure 11 shows that MA, TE, and FA decreased as the scale increased. MA and TE decreased gradually as the scale increased from 10 to 30; when the scale exceeded 30, MA and TE remained roughly constant. These results can be attributed to the segments being large enough to achieve optimal accuracy: with the other parameters (compactness and shape) fixed, further increases in scale have less effect on segment size. FA also decreased as the scale increased and then fluctuated between 2.99 and 3.91. This fluctuation may be caused by the uncertain distribution of spatial heterogeneity.

The relationship between the segmentation scale and the detection accuracy was also investigated for the Ji’Nan QB satellite images. Figure 12 shows that for the Ji’Nan dataset, FA and TE decreased as the scale increased, whereas MA first increased and then decreased. These findings can help in setting the parameters of the proposed approach.

5. Conclusions

In the present work, a novel framework for detecting land cover change using spatial–spectral feature fusion and multi-scale segmentation voting decision strategies was proposed. Instead of using a single feature to obtain the binary change detection map (BCDM) directly, spatial features were extracted and coupled with the raw spectral feature through different fusion strategies, and each spatial–spectral feature yielded a different initial BCDM. Finally, a multi-scale segmentation voting decision strategy was proposed to fuse the initial BCDMs into the final change detection map. The main contribution of the proposed approach is a comprehensive framework for more accurate land cover change detection using bi-temporal VHR remote sensing images. Multiple spatial features and different feature fusion strategies were introduced to generate the initial BCDMs, and multi-scale segmentation voting decision was proposed for the first time to fuse them into the final change detection map. The advantages of the multi-scale segmentation voting decision are twofold: (1) the initial BCDMs obtained from different spatial–spectral features, which perform differently, can be used together to avoid biased detection; and (2) majority voting constrained by multi-scale objects accounts for the variability of ground targets, such as their shape and size, which helps improve the voting accuracy.

Experiments were carried out on three pairs of bi-temporal images to confirm the effectiveness of the proposed approach. The results showed that the proposed approach performed better than using the raw spectral feature alone and than other state-of-the-art LCCD techniques. However, one limitation of the proposed framework is that it involves many parameters in practical application, and optimizing the parameter settings for a specific dataset is time-consuming. In future work, the proposed approach will be investigated on additional types of images and land cover change events, such as unmanned aerial vehicle images and forest disasters. Testing the method on images from various sources and on different land cover change events is expected to improve its robustness and broaden its applicability.