A Multi-Scale Filtering Building Index for Building Extraction in Very High-Resolution Satellite Imagery

Abstract

1. Introduction

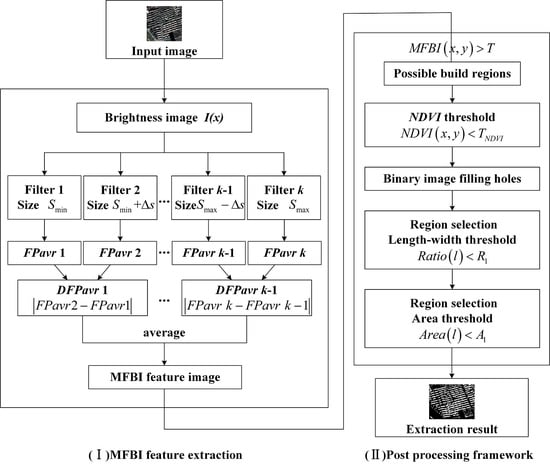

- A multi-scale filtering building index (MFBI) for building extraction in VHRRSI is presented. It overcomes some drawbacks of MBI, such as its heavy computation cost, and achieves better accuracy at a much higher computational speed.

- Two scenarios for generating a multi-channel MFBI (MMFBI) are presented, with the aim of exploiting more of the spectral information that characterizes urban regions in optical VHRRSI. Both scenarios reduce the false alarms of MFBI and achieve better performance.

- A new building extraction dataset for VHRRSI is introduced. It is especially suited to building extraction in Chinese regions, and it can serve both as a benchmark for current model-driven methods and as a complement to the available data-driven VHRRSI building extraction datasets.

2. Related Work

2.1. Morphological Profile

2.2. Morphological Building Index

2.3. Building Extraction Datasets

- Each HRRSI dataset usually consists of only a few small image pieces and therefore often cannot represent the performance of a proposed method across different situations.

- Until now, almost all VHRRSI datasets have come from aerial imagery covering regions in the US or Europe under good imaging conditions. No VHRRSI dataset designed for Chinese regions is available, although urban and suburban landscapes in China differ considerably from those in Western countries (examples are shown in Figure 3). Note that different training samples usually lead to quite different performance for data-driven methods, and that the imaging conditions of satellite images often differ from those of aerial images.

- No open VHRRSI building extraction dataset from satellite imagery is currently available, let alone one that suits both model-driven and data-driven methods; for model-driven methods, the near-infrared channel is important to both implementation and performance.

3. Methodology

3.1. Multi-Scale Building Index

3.2. Joint Use of MFBI and Spectral Information

4. Experiments and Analysis

4.1. Dataset

- The inter-class similarity and intra-class dissimilarity in VHRRSI. It is widely acknowledged that, as spatial resolution increases, both the similarity between different land cover types and the dissimilarity within the same land cover type increase considerably, causing a series of problems for the automatic interpretation of VHRRSI. Hence, to test the performance of a specific algorithm, the variety of building shapes, sizes and roofs must be considered when selecting samples for the dataset.

- Land cover types that are hard to distinguish in the building extraction task. In Reference [61], Ghanea et al. conclude that one of the major challenges of building extraction in VHRRSI is the presence of shadow, vegetation, water regions and man-made non-building features. These land cover types should also appear in the samples of the dataset to test the performance of an algorithm.

- Coverage of as many typical Chinese landscapes in different regions as possible. Different regions in China usually have different building structures owing to factors such as economy, climate and population. Urban, suburban and rural areas should also all be covered.

4.2. Parameter Settings

4.3. Experiments on Basic MFBIs

4.3.1. Experiment on Computation Time

4.3.2. Experiments on WHUBED

- In terms of performance on different sample types, all three methods, namely object-based segmentation, MBI and MFBI, tend to perform better in urban and suburban areas. A likely explanation is that many buildings in these regions have regular shapes, while roads, one of the main sources of false alarms, can be easily removed in post-processing using the shape index and the length-width ratio. However, although MFBI performs better than the other two methods in rural areas, none of the three methods is robust enough there, where farmland with regular geometric shapes and large amounts of bright bare soil can cause severe false alarms.

- The proposed MFBI tends to have relatively high recall, with precision lower than recall. The high recall and relatively high false alarm rate of MFBI might be explained by the use of rectangular filtering windows. Rectangular filters emphasize bright pixels belonging to building areas, but pixels around building areas can also receive a high gray value after MFBI is calculated. In other words, the rectangular filtering window might not fully exploit the abundant spatial information of building areas in VHRRSI.
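The recall, precision and F-score values discussed above follow the usual definitions for binary detection. As a minimal sketch, assuming pixel-level evaluation on binary building masks (the function names `f_score` and `precision_recall_f` are illustrative and not taken from the paper), they could be computed as follows. The toy masks mimic the behaviour described above: every building pixel is found, but a fringe of neighbouring pixels is also labelled as building, so recall is high and precision is lower.

```python
import numpy as np


def f_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


def precision_recall_f(pred_mask, truth_mask):
    """Pixel-level precision, recall and F-score, in percent."""
    pred = np.asarray(pred_mask, dtype=bool)
    truth = np.asarray(truth_mask, dtype=bool)
    tp = np.count_nonzero(pred & truth)    # building pixels correctly detected
    fp = np.count_nonzero(pred & ~truth)   # false alarms
    fn = np.count_nonzero(~pred & truth)   # missed building pixels
    precision = 100.0 * tp / (tp + fp) if (tp + fp) else 0.0
    recall = 100.0 * tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall, f_score(precision, recall)


# Hypothetical toy masks: a 2 x 2 building and a detection that spills one column over.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 1, 1, 0]])
p, r, f = precision_recall_f(pred, truth)
print(f"precision={p:.2f} recall={r:.2f} F={f:.2f}")
```

The same harmonic-mean formula is consistent with the per-sample results table: for the MFBI entry of Sample1, R = 94.87 and P = 68.58 give an F-score of about 79.61.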

4.3.3. Sensitivity Analysis

- As the MFBI threshold increases, the F-score tends to first increase and then decrease, while recall tends to decrease and precision tends to increase. This trend fits the general pattern of the recall and precision curves reported in References [34,50]. A small threshold usually selects a relatively large number of samples. Although these samples give a high recall, they include many false positives, leading to relatively low precision. Conversely, when the threshold is set high, the algorithm selects a relatively small number of samples with relatively high precision, while some true positives are missed.

- When the threshold is set between 0.4 and 0.6, the proposed method usually achieves its best performance, with a high recall and a modest precision, whether in urban, suburban or rural regions. A likely explanation is that, after MFBI feature extraction, pixels belonging to building areas often have an MFBI value of about 0.4 to 0.6. A similar pattern has also been reported in Reference [34].
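The trade-off described above can be reproduced on toy data. The sketch below assumes a feature map normalized to [0, 1] and a binary ground-truth mask; the function `sweep_thresholds` and the ten-pixel example are hypothetical and only illustrate the trend, not the paper's data or its evaluation code.

```python
import numpy as np


def sweep_thresholds(feature_map, truth_mask, thresholds):
    """Return (threshold, recall, precision, F-score) for each threshold."""
    fmap = np.asarray(feature_map, dtype=float)
    truth = np.asarray(truth_mask, dtype=bool)
    rows = []
    for t in thresholds:
        pred = fmap >= t                      # pixels kept as building candidates
        tp = np.count_nonzero(pred & truth)
        fp = np.count_nonzero(pred & ~truth)
        fn = np.count_nonzero(~pred & truth)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        total = precision + recall
        f = 2.0 * precision * recall / total if total else 0.0
        rows.append((float(t), recall, precision, f))
    return rows


# Hypothetical index values: building pixels mostly score higher than background.
toy_map = np.array([0.10, 0.20, 0.30, 0.45, 0.50, 0.55, 0.60, 0.70, 0.80, 0.90])
toy_truth = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1])

rows = sweep_thresholds(toy_map, toy_truth, [0.2, 0.5, 0.8])
for t, recall, precision, f in rows:
    print(f"threshold={t:.1f} recall={recall:.2f} precision={precision:.2f} F={f:.2f}")
```

On this toy input, recall falls and precision rises as the threshold grows, and the F-score peaks at the middle threshold, which is the shape of curve the sensitivity analysis describes.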

4.4. Experiments on Two Proposed Scenarios

- PCA can help extract building features. In the first scenario, PCA is applied to the original image from our dataset. Much of the information from building pixels is enhanced (see the second column of Figure 10), and these homogeneous regions are salient in the first component.

- In the second scenario for generating MMFBI, MFBI is calculated on each channel and PCA is then applied. The resulting MMFBI feature map enhances building areas better than the feature map of the first scenario, which simply applies PCA to the optical image. A likely explanation is that calculating MFBI on each channel selects pixels that could belong to building areas, and the subsequent PCA refines this selection. For example, some vegetation pixels are selected in the red channel but not in the green and blue channels, and PCA can exclude these pixels from the feature map.

- The feature extraction ability of the two proposed scenarios is better than that of the basic MFBI, especially in terms of precision and F-score. This result is reasonable, since information from the red, green and blue channels that contributes strongly to building areas and other man-made objects is all taken into account, and false-alarm signals arising in a single channel can be suppressed. This is clearly shown in Figure 8 and Figure 11. In the sixth and seventh rows of Figure 8 and the first column of Figure 11, considerable noise, mainly from wide roads, can be observed in the MFBI feature map, whereas the MMFBI feature maps in the second and third columns of Figure 11 contain much less noise.

- The first scenario makes the feature map more compact, since the first component of the three-channel optical image contains more information on building structures while suppressing much of the information from other land cover types. This is clearly shown in the second row of Figure 11.

- The second scenario improves accuracy mainly because MFBI is calculated on the three channels separately and PCA is applied afterwards. Calculating MFBI on each channel makes full use of the information that carries the signal of building areas, and the PCA transformation refines the result by eliminating some pixels belonging to other land cover types such as roads or bare soil. Figure 11c,f,i clearly shows that some roads mixed with building areas in the feature maps of MFBI and of the first MMFBI scenario are removed in the feature map of the second MMFBI scenario. Meanwhile, as mentioned in Section 3.2, after PCA the first component contains much of the signal from building areas, whereas the second component contains much of the information from other land covers such as roads and bare soil. However, simply using the second component also risks excluding some building areas whose material is similar to that of roads. It should be emphasized that, in pixel-level building detection, one of the major differences between HRRSI and VHRRSI is that roads are much wider in VHRRSI and are more difficult to eliminate.
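The difference between the two scenarios is mainly the order in which PCA and the building index are applied. The sketch below shows one possible reading of that ordering: scenario 1 is assumed to apply the index to the first principal component of the RGB image, and scenario 2 to apply the index to each channel and then keep the first principal component of the stacked results. All function names are hypothetical, and `rescale` is only a stand-in for the index (it is not MFBI); any single-band index function could be passed instead.

```python
import numpy as np


def first_principal_component(stack):
    """Project an H x W x C stack onto its first principal component (H x W)."""
    data = np.asarray(stack, dtype=float)
    h, w, c = data.shape
    flat = data.reshape(-1, c)
    centered = flat - flat.mean(axis=0)
    cov = np.cov(centered, rowvar=False)      # C x C covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigenvalues in ascending order
    axis = eigvecs[:, -1]                     # direction of largest variance
    if axis.sum() < 0:                        # resolve the sign ambiguity of PCA
        axis = -axis
    return (centered @ axis).reshape(h, w)


def rescale(band):
    """Stand-in index (NOT MFBI): min-max rescaling of one band to [0, 1]."""
    band = np.asarray(band, dtype=float)
    span = band.max() - band.min()
    return (band - band.min()) / span if span > 0 else np.zeros_like(band)


def mmfbi_scenario1(rgb, index_fn):
    """Assumed scenario 1: PCA on the image, then the index on the first component."""
    return index_fn(first_principal_component(rgb))


def mmfbi_scenario2(rgb, index_fn):
    """Assumed scenario 2: index on each channel, then PCA, keeping the first component."""
    rgb = np.asarray(rgb, dtype=float)
    per_channel = np.stack(
        [index_fn(rgb[..., k]) for k in range(rgb.shape[-1])], axis=-1
    )
    return first_principal_component(per_channel)


# Hypothetical random image, used only to show the call pattern.
rng = np.random.default_rng(0)
toy_rgb = rng.random((32, 32, 3))
map1 = mmfbi_scenario1(toy_rgb, rescale)
map2 = mmfbi_scenario2(toy_rgb, rescale)
print(map1.shape, map2.shape)
```

Passing the index as a function keeps the PCA plumbing separate from the index itself, so the same two wrappers could be reused with an actual MFBI implementation.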

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Huang, X.; Wen, D.W.; Li, J.Y.; Qin, R.J. Multi-level monitoring of subtle urban changes for the megacities of China using high-resolution multi-view satellite imagery. Remote Sens. Environ. 2017, 196, 56–75.

2. Joshi, N.; Baumann, M.; Ehammer, A.; Fensholt, R.; Grogan, K.; Hostert, P.; Jepsen, M.R.; Kuemmerle, T.; Meyfroidt, P.; Mitchard, E.T.A.; et al. A Review of the Application of Optical and Radar Remote Sensing Data Fusion to Land Use Mapping and Monitoring. Remote Sens. 2016, 8, 70.

3. Zhang, T.; Huang, X. Monitoring of Urban Impervious Surfaces Using Time Series of High-Resolution Remote Sensing Images in Rapidly Urbanized Areas: A Case Study of Shenzhen. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 2692–2708.

4. Herold, M.; Goldstein, N.C.; Clarke, K.C. The spatiotemporal form of urban growth: Measurement, analysis and modeling. Remote Sens. Environ. 2003, 86, 286–302.

5. Shen, J. Estimating Urbanization Levels in Chinese Provinces in 1982–2000. Int. Stat. Rev. 2006, 74, 89–107.

6. Yew, C.P. Pseudo-Urbanization? Competitive government behavior and urban sprawl in China. J. Contemp. China 2012, 21, 281–298.

7. Zhu, Y.G.; Ioannidis, J.P.; Li, H.; Jones, K.C.; Martin, F.L. Understanding and harnessing the health effects of rapid urbanization in China. Environ. Sci. Technol. 2011, 45, 5099–5104.

8. Ji, C.Y.; Liu, Q.H.; Sun, D.F.; Wang, S.; Lin, P.; Li, X.W. Monitoring urban expansion with remote sensing in China. Int. J. Remote Sens. 2001, 22, 1441–1455.

9. Zhang, Y. Optimisation of building detection in satellite images by combining multispectral classification and texture filtering. ISPRS J. Photogramm. Remote Sens. 1999, 54, 50–60.

10. Mayer, H. Automatic Object Extraction from Aerial Imagery—A Survey Focusing on Buildings. Comput. Vis. Image Underst. 1999, 74, 138–149.

11. Harris, R. Satellite remote sensing: Low spatial resolution. Prog. Phys. Geogr. 1985, 9, 600–606.

12. Haala, N.; Kada, M. An update on automatic 3D building reconstruction. ISPRS J. Photogramm. Remote Sens. 2010, 65, 570–580.

13. Thomas, M. Remote Sensing and Image Interpretation; John Wiley & Sons: Hoboken, NJ, USA, 1979.

14. Cheng, G.; Han, J.W. A Survey on Object Detection in Optical Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2016, 117, 11–28.

15. Han, X.; Zhong, Y.; Zhang, L. An Efficient and Robust Integrated Geospatial Object Detection Framework for High Spatial Resolution Remote Sensing Imagery. Remote Sens. 2017, 9, 666.

16. Qiu, S.H.; Wen, G.J.; Liu, J.; Deng, Z.P.; Fan, Y.X. Unified Partial Configuration Model Framework for Fast Partially Occluded Object Detection in High-Resolution Remote Sensing Images. Remote Sens. 2018, 10, 464.

17. Xu, Z.Z.; Xu, X.; Wang, L.; Yang, R.; Pu, F.L. Deformable ConvNet with Aspect Ratio Constrained NMS for Object Detection in Remote Sensing Imagery. Remote Sens. 2017, 9, 1312.

18. Awrangjeb, M. Effective Generation and Update of a Building Map Database through Automatic Building Change Detection from LiDAR Point Cloud Data. Remote Sens. 2015, 7, 14119–14150.

19. Barragán, W.; Campos, A.; Sanchez, G. Automatic Generation of Building Mapping Using Digital, Vertical and Aerial High Resolution Photographs and LIDAR Point Clouds. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI-B7, 171–176.

20. Tu, J.H.; Li, D.R.; Feng, W.Q.; Han, Q.H.; Sui, H.G. Detecting Damaged Building Regions Based on Semantic Scene Change from Multi-Temporal High-Resolution Remote Sensing Images. ISPRS Int. J. Geo-Inf. 2017, 6, 131.

21. Dong, L.G.; Shan, J. A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99.

22. Zhao, B.; Zhong, Y.F.; Zhang, L.P. A spectral-structural bag-of-features scene classifier for very high spatial resolution remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2016, 116, 73–85.

23. Zhong, Y.F.; Zhu, Q.Q.; Zhang, L.P. Scene Classification Based on the MultiFeature Fusion Probabilistic Topic Model for High Spatial Resolution Remote Sensing Imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6207–6222.

24. Csillik, O. Fast Segmentation and Classification of Very High Resolution Remote Sensing Data Using SLIC Superpixels. Remote Sens. 2017, 9, 243.

25. Demir, B.; Bruzzone, L. Histogram-Based Attribute Profiles for Classification of Very High Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2096–2107.

26. Pesaresi, M.; Benediktsson, J.A. A new approach for the morphological segmentation of high-resolution satellite imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320.

27. Benediktsson, J.A.; Pesaresi, M.; Arnason, K. Classification and feature extraction for remote sensing images from urban areas based on morphological transformations. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1940–1949.

28. Fauvel, M.; Benediktsson, J.A.; Chanussot, J.; Sveinsson, J.R. Spectral and Spatial Classification of Hyperspectral Data Using SVMs and Morphological Profiles. IEEE Trans. Geosci. Remote Sens. 2007, 46, 4834–4837.

29. Mura, M.D.; Benediktsson, J.A.; Waske, B.; Bruzzone, L. Morphological Attribute Profiles for the Analysis of Very High Resolution Images. IEEE Trans. Geosci. Remote Sens. 2010, 48, 3747–3762.

30. Hussain, E.; Shan, J. Urban building extraction through object-based image classification assisted by digital surface model and zoning map. Int. J. Image Data Fusion 2016, 7, 63–82.

31. Attarzadeh, R.; Momeni, M. Object-Based Rule Sets and Its Transferability for Building Extraction from High Resolution Satellite Imagery. J. Indian Soc. Remote 2017, 1–10.

32. Pesaresi, M.; Gerhardinger, A.; Kayitakire, F. A Robust Built-Up Area Presence Index by Anisotropic Rotation-Invariant Textural Measure. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2009, 1, 180–192.

33. Huang, X.; Zhang, L.P. A multiscale urban complexity index based on 3D wavelet transform for spectral-spatial feature extraction and classification: An evaluation on the 8-channel WorldView-2 imagery. Int. J. Remote Sens. 2012, 33, 2641–2656.

34. Huang, X.; Zhang, L.P. A Multidirectional and Multiscale Morphological Index for Automatic Building Extraction from Multispectral GeoEye-1 Imagery. Photogramm. Eng. Remote Sens. 2011, 77, 721–732.

35. Karantzalos, K.; Paragios, N. Recognition-driven two-dimensional competing priors toward automatic and accurate building detection. IEEE Trans. Geosci. Remote Sens. 2009, 47, 133–144.

36. Ahmadi, S.; Zoej, M.J.V.; Ebadi, H.; Moghaddam, H.A.; Mohammadzadeh, A. Automatic urban building boundary extraction from high resolution aerial images using an innovative model of active contours. Int. J. Appl. Earth Obs. 2010, 12, 150–157.

37. Croitoru, A.; Doytsher, Y. Monocular right-angle building hypothesis generation in regularized urban areas by pose clustering. Photogramm. Eng. Remote Sens. 2003, 69, 151–169.

38. Sirmacek, B.; Unsalan, C. Urban-area and building detection using SIFT keypoints and graph theory. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1156–1167.

39. Xia, G.S.; Hu, J.W.; Hu, F.; Shi, B.G.; Bai, X.; Zhong, Y.F.; Zhang, L.; Lu, X. AID: A Benchmark Data Set for Performance Evaluation of Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

40. Gilani, S.; Awrangjeb, M.; Lu, G.J. An Automatic Building Extraction and Regularisation Technique Using LiDAR Point Cloud Data and Orthoimage. Remote Sens. 2016, 8, 27.

41. Yan, Y.M.; Tan, Z.C.; Su, N.; Zhao, C.H. Building Extraction Based on an Optimized Stacked Sparse Autoencoder of Structure and Training Samples Using LIDAR DSM and Optical Images. Sensors 2017, 17, 1957.

42. Maltezos, E. Deep convolutional neural networks for building extraction from orthoimages and dense image matching point clouds. J. Appl. Remote Sens. 2017, 11, 042620-1–042620-22.

43. Yang, L.X.; Yuan, J.Y.; Lunga, D.; Laverdiere, M.; Rose, A.; Bhaduri, B. Building Extraction at Scale Using Convolutional Neural Network: Mapping of the United States. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 1–15.

44. Bittner, K.; Cui, S.Y.; Reinartz, P. Building Extraction from Remote Sensing Data Using Fully Convolutional Networks. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-1/W1, 481–486.

45. Ok, A.O.; Senaras, C.; Yuksel, B. Automated Detection of Arbitrarily Shaped Buildings in Complex Environments from Monocular VHR Optical Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1701–1717.

46. Ok, A.O. Automated Detection of Buildings from Single VHR Multispectral Images Using Shadow Information and Graph Cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.

47. Li, E.; Xu, S.B.; Meng, W.L.; Zhang, X.P. Building Extraction from Remotely Sensed Images by Integrating Saliency Cue. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 10, 906–919.

48. Chen, Y.X.; Lv, Z.Y.; Huang, B.; Jia, Y. Delineation of Built-Up Areas from Very High-Resolution Satellite Imagery Using Multi-Scale Textures and Spatial Dependence. Remote Sens. 2018, 10, 1596.

49. Li, S.D.; Tang, H.; Huang, X.; Mao, T.; Niu, X.N. Automated Detection of Buildings from Heterogeneous VHR Satellite Images for Rapid Response to Natural Disasters. Remote Sens. 2017, 9, 1177.

50. Huang, X.; Zhang, L.P. Morphological Building/Shadow Index for Building Extraction from High-Resolution Imagery Over Urban Areas. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2012, 5, 161–172.

51. Huang, X.; Zhang, L.P. An SVM Ensemble Approach Combining Spectral, Structural, and Semantic Features for the Classification of High-Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2013, 51, 257–272.

52. Bi, Q.; Qin, K.; Zhang, H.; Han, W.J.; Li, Z.L.; Xu, K. Building Change Detection Based on Multi-Scale Filtering and Grid Partition. In Proceedings of the Tenth IAPR Workshop on Pattern Recognition in Remote Sensing, Beijing, China, 1–7 August 2018.

53. Huang, X.; Han, X.P.; Zhang, L.P.; Gong, J.Y.; Liao, W.Z.; Benediktsson, J.A. Generalized Differential Morphological Profiles for Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2016, 9, 1736–1751.

54. Ghanea, M.; Moallem, P.; Momeni, M. Automatic building extraction in dense urban areas through GeoEye multispectral imagery. Int. J. Remote Sens. 2014, 35, 5094–5119.

55. Zhang, Q.; Huang, X.; Zhang, G.X. A Morphological Building Detection Framework for High-Resolution Optical Imagery Over Urban Areas. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1388–1392.

56. Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

57. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the IEEE International Symposium on Geoscience and Remote Sensing (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

58. Huang, X.; Yuan, W.L.; Li, J.Y.; Zhang, L.P. A New Building Extraction Postprocessing Framework for High-Spatial-Resolution Remote-Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 654–668.

59. Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemometr. Intell. Lab. 1987, 2, 37–52.

60. Eklundh, L.; Singh, A. A comparative analysis of standardised and unstandardised Principal Components Analysis in remote sensing. Int. J. Remote Sens. 1993, 14, 1359–1370.

61. Ghanea, M.; Moallem, P.; Momeni, M. Building Extraction from High-Resolution Satellite Images in Urban Areas: Recent Methods and Strategies Against Significant Challenges. Int. J. Remote Sens. 2016, 37, 5234–5248.

62. Awrangjeb, M.; Fraser, C.S. An Automatic and Threshold-Free Performance Evaluation System for Building Extraction Techniques from Airborne LIDAR Data. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2014, 7, 4184–4198.

| Name | Spatial Resolution | Sources | Sizes | Region | Channels |
|---|---|---|---|---|---|
| Hangzhou | 2.0 m | WorldView-2 | unknown | China | 5 * |
| Wuhan | 2.0 m | GeoEye-1 | 645 × 564 | China | 4 |
| Tehran | 2.0 m | GeoEye-1 | 750 × 750 | Iran | 4 |
| Shanghai | 2.0 m | WorldView-2 | 645 × 564 | China | 4 |

| Name | Spatial Resolution | Sources | Sizes | Tiles | Region | Channels |
|---|---|---|---|---|---|---|
| Massachusetts | 1.0 m | Aerial | 1500 × 1500 | 151 | America | 3 RGB |
| Inria | 0.3 m | Aerial | 5000 × 5000 | 180 | America, Europe | 3 RGB |

| Method | Smin | Step Size | Smax | Threshold |
|---|---|---|---|---|
| MBI | 2 | 5 | 42 | 0.45 |
| MFBI | 3 | 6 | 33 | 0.45 |
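The scale parameters in the table above (Smin, Step Size, Smax) can be expanded into an explicit list of window sizes. The sketch below does that and then shows a generic multi-scale mean-filter response built on those sizes. This section does not reproduce the MFBI formula, so `multiscale_filter_index` is an illustrative assumption (averaged absolute differences between mean-filtered images at successive scales, computed with an integral image) and should not be read as the authors' definition. All function names are hypothetical.

```python
import numpy as np


def window_sizes(s_min, s_max, step):
    """Window sizes from s_min to s_max (inclusive) in increments of step."""
    return list(range(s_min, s_max + 1, step))


def box_mean(image, size):
    """Mean filter with a size x size window, using edge padding and an integral image."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    before = size // 2
    after = size - 1 - before
    padded = np.pad(img, ((before, after), (before, after)), mode="edge")
    integral = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    integral[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    # Sum over each window from four corner lookups, independent of window size.
    total = (integral[size:size + h, size:size + w]
             - integral[:h, size:size + w]
             - integral[size:size + h, :w]
             + integral[:h, :w])
    return total / float(size * size)


def multiscale_filter_index(brightness, sizes):
    """Assumed illustration only: mean absolute difference between successive scales."""
    smoothed = [box_mean(brightness, s) for s in sizes]
    diffs = [np.abs(b - a) for a, b in zip(smoothed[:-1], smoothed[1:])]
    index = np.mean(diffs, axis=0)
    peak = index.max()
    return index / peak if peak > 0 else index


mbi_sizes = window_sizes(2, 42, 5)    # parameters from the MBI row
mfbi_sizes = window_sizes(3, 33, 6)   # parameters from the MFBI row
print(mbi_sizes)
print(mfbi_sizes)

# Hypothetical toy scene: one bright 10 x 10 block on a dark background.
toy = np.zeros((40, 40))
toy[15:25, 15:25] = 1.0
toy_index = multiscale_filter_index(toy, mfbi_sizes)
print(toy_index.shape)
```

With these settings the MBI row expands to nine scales and the MFBI row to six. The integral image makes the cost of each mean filter independent of the window size, which is one common reason box filtering is cheap at large scales; whether this matches the paper's implementation is not stated in this section.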

| Image | Image Size (pixels) | Spatial Resolution (m) | Sensor | MBI Computation Time (s) | MFBI Computation Time (s) |
|---|---|---|---|---|---|
| Image1 | 15392 × 16384 | 0.6 | QuickBird | 2177.76 | 26.91 |
| Image2 | 16384 × 16384 | 0.5 | WorldView-2 | 1565.07 | 29.03 |
| Image3 | 19464 × 18573 | 0.8 | Gaofen-2 | 2191.13 | 38.49 |
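The timings in the table above can be turned into speed-up factors and throughput figures with simple arithmetic. The snippet below is only a convenience calculation over the reported numbers; the dictionary layout and the names `timings` and `speedup` are illustrative.

```python
# Image sizes and computation times copied from the timing table above.
timings = {
    "Image1": {"pixels": 15392 * 16384, "mbi_s": 2177.76, "mfbi_s": 26.91},
    "Image2": {"pixels": 16384 * 16384, "mbi_s": 1565.07, "mfbi_s": 29.03},
    "Image3": {"pixels": 19464 * 18573, "mbi_s": 2191.13, "mfbi_s": 38.49},
}


def speedup(mbi_seconds, mfbi_seconds):
    """How many times faster MFBI ran than MBI on the same image."""
    return mbi_seconds / mfbi_seconds


ratios = {}
for name, row in timings.items():
    ratios[name] = speedup(row["mbi_s"], row["mfbi_s"])
    megapixels_per_s = row["pixels"] / row["mfbi_s"] / 1e6
    print(f"{name}: MFBI about {ratios[name]:.1f}x faster, "
          f"{megapixels_per_s:.1f} megapixels/s")
```

On the three reported images the speed-up ranges from roughly 54 to roughly 81 times.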

| Sample | Method | R | P | F | Sample | Method | R | P | F | Sample | Method | R | P | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sample1 | eC | 58.04 | 61.53 | 61.53 | Sample2 | eC | 60.74 | 66.74 | 63.60 | Sample3 | eC | 50.25 | 80.72 | 61.94 |
| | MBI | 65.79 | 73.81 | 69.57 | | MBI | 80.41 | 63.60 | 71.02 | | MBI | 61.03 | 76.55 | 67.92 |
| | MFBI | 94.87 | 68.58 | 79.61 | | MFBI | 96.99 | 60.56 | 74.56 | | MFBI | 90.93 | 68.17 | 77.92 |
| | S1 | 92.82 | 70.57 | 80.18 | | S1 | 92.92 | 67.02 | 77.87 | | S1 | 84.24 | 75.61 | 79.69 |
| | S2 | 93.56 | 70.91 | 80.68 | | S2 | 91.29 | 64.26 | 75.42 | | S2 | 88.06 | 72.02 | 79.23 |
| Sample4 | eC | 58.08 | 79.08 | 66.97 | Sample5 | eC | 57.23 | 62.27 | 59.64 | Sample6 | eC | 67.95 | 38.70 | 49.32 |
| | MBI | 83.22 | 74.21 | 78.46 | | MBI | 69.13 | 78.72 | 73.61 | | MBI | 88.85 | 70.85 | 78.84 |
| | MFBI | 95.86 | 71.99 | 82.23 | | MFBI | 82.49 | 72.10 | 76.95 | | MFBI | 98.57 | 67.62 | 80.21 |
| | S1 | 95.69 | 73.91 | 83.40 | | S1 | 95.52 | 74.82 | 83.91 | | S1 | 95.29 | 71.51 | 81.70 |
| | S2 | 94.28 | 68.29 | 79.21 | | S2 | 96.21 | 73.66 | 83.41 | | S2 | 96.17 | 69.58 | 80.74 |
| Sample7 | eC | 62.29 | 74.12 | 67.69 | Sample8 | eC | 64.04 | 38.74 | 48.27 | Sample9 | eC | 48.00 | 30.61 | 37.38 |
| | MBI | 83.87 | 73.09 | 78.11 | | MBI | 81.92 | 69.08 | 74.95 | | MBI | 65.72 | 74.95 | 70.03 |
| | MFBI | 97.65 | 68.10 | 80.24 | | MFBI | 91.10 | 67.45 | 77.51 | | MFBI | 89.68 | 66.98 | 76.69 |
| | S1 | 94.63 | 71.56 | 81.49 | | S1 | 91.45 | 68.28 | 78.19 | | S1 | 85.18 | 71.43 | 77.70 |
| | S2 | 92.67 | 73.04 | 81.69 | | S2 | 91.87 | 67.25 | 77.65 | | S2 | 85.27 | 70.49 | 77.18 |
| Sample10 | eC | 54.75 | 45.91 | 49.94 | Sample11 | eC | 47.61 | 36.29 | 41.19 | Sample12 | eC | 29.97 | 39.38 | 34.04 |
| | MBI | 67.23 | 74.18 | 70.53 | | MBI | 67.59 | 74.18 | 70.73 | | MBI | 67.01 | 71.98 | 69.40 |
| | MFBI | 88.37 | 69.77 | 77.97 | | MFBI | 81.25 | 63.93 | 71.56 | | MFBI | 87.57 | 66.64 | 75.69 |
| | S1 | 85.11 | 72.02 | 78.02 | | S1 | 79.61 | 71.77 | 75.48 | | S1 | 83.39 | 68.58 | 75.26 |
| | S2 | 85.97 | 74.45 | 79.80 | | S2 | 79.73 | 70.97 | 75.09 | | S2 | 82.04 | 69.15 | 75.05 |
| Sample13 | eC | 22.10 | 28.71 | 24.98 | Sample14 | eC | 54.33 | 45.71 | 49.65 | Sample15 | eC | 53.46 | 61.00 | 56.98 |
| | MBI | 51.03 | 65.94 | 57.53 | | MBI | 74.65 | 82.15 | 78.22 | | MBI | 78.44 | 72.54 | 75.37 |
| | MFBI | 62.21 | 59.94 | 61.05 | | MFBI | 84.25 | 76.98 | 80.45 | | MFBI | 89.15 | 68.90 | 77.73 |
| | S1 | 67.82 | 59.87 | 63.59 | | S1 | 86.74 | 78.04 | 82.16 | | S1 | 90.20 | 70.49 | 79.14 |
| | S2 | 68.75 | 57.91 | 62.86 | | S2 | 86.48 | 78.67 | 82.39 | | S2 | 89.78 | 70.39 | 78.91 |
| Sample16 | eC | 51.41 | 37.26 | 43.21 | Sample17 | eC | 56.72 | 48.63 | 52.36 | Sample18 | eC | 78.38 | 59.81 | 67.85 |
| | MBI | 71.42 | 77.76 | 74.45 | | MBI | 79.48 | 73.98 | 76.63 | | MBI | 67.88 | 78.00 | 72.59 |
| | MFBI | 89.30 | 70.40 | 78.73 | | MFBI | 88.00 | 68.60 | 77.10 | | MFBI | 87.65 | 71.19 | 78.56 |
| | S1 | 88.64 | 76.51 | 82.13 | | S1 | 89.07 | 67.22 | 76.62 | | S1 | 86.62 | 77.20 | 81.64 |
| | S2 | 89.85 | 79.51 | 84.36 | | S2 | 87.19 | 74.16 | 80.15 | | S2 | 86.65 | 76.47 | 81.24 |
| Sample19 | eC | 69.99 | 28.71 | 40.72 | Sample20 | eC | 56.30 | 47.88 | 51.75 | Sample21 | eC | 58.89 | 47.81 | 52.77 |
| | MBI | 68.50 | 71.00 | 69.73 | | MBI | 71.86 | 70.56 | 71.20 | | MBI | 73.49 | 74.35 | 73.91 |
| | MFBI | 91.21 | 65.84 | 76.48 | | MFBI | 87.78 | 67.15 | 76.09 | | MFBI | 89.57 | 67.09 | 76.72 |
| | S1 | 90.56 | 68.06 | 77.71 | | S1 | 87.66 | 69.93 | 77.79 | | S1 | 90.07 | 66.96 | 76.81 |
| | S2 | 90.15 | 69.40 | 78.43 | | S2 | 87.08 | 68.36 | 76.59 | | S2 | 87.05 | 72.14 | 78.90 |
| Sample22 | eC | 72.56 | 46.72 | 56.84 | Sample23 | eC | 76.57 | 63.26 | 69.28 | Sample24 | eC | 79.11 | 38.45 | 51.74 |
| | MBI | 82.17 | 66.67 | 73.61 | | MBI | 59.98 | 74.24 | 72.04 | | MBI | 73.56 | 75.01 | 74.28 |
| | MFBI | 91.58 | 66.97 | 77.37 | | MFBI | 89.80 | 71.21 | 79.43 | | MFBI | 99.38 | 65.64 | 79.06 |
| | S1 | 91.31 | 76.38 | 83.18 | | S1 | 89.36 | 74.57 | 81.30 | | S1 | 97.97 | 69.57 | 81.36 |
| | S2 | 91.75 | 75.46 | 82.82 | | S2 | 89.12 | 78.10 | 83.24 | | S2 | 98.10 | 70.72 | 82.19 |
| Sample25 | eC | 48.90 | 50.61 | 49.74 | Sample26 | eC | 57.06 | 48.44 | 52.39 | Sample27 | eC | 57.32 | 50.83 | 53.88 |
| | MBI | 69.50 | 78.89 | 73.90 | | MBI | 70.58 | 69.24 | 69.91 | | MBI | 83.81 | 78.33 | 80.98 |
| | MFBI | 80.40 | 74.52 | 77.35 | | MFBI | 82.51 | 65.83 | 73.23 | | MFBI | 89.04 | 73.21 | 80.35 |
| | S1 | 84.03 | 73.01 | 78.14 | | S1 | 82.02 | 72.20 | 76.80 | | S1 | 88.27 | 77.44 | 82.50 |
| | S2 | 83.99 | 73.33 | 78.30 | | S2 | 81.60 | 68.28 | 74.35 | | S2 | 89.72 | 76.40 | 82.53 |
| Sample28 | eC | 48.22 | 60.16 | 53.53 | Sample29 | eC | 58.40 | 53.60 | 55.90 | Sample30 | eC | 49.12 | 73.77 | 58.98 |
| | MBI | 67.27 | 75.79 | 71.28 | | MBI | 63.53 | 79.05 | 70.44 | | MBI | 72.88 | 78.89 | 75.77 |
| | MFBI | 80.37 | 71.41 | 75.63 | | MFBI | 89.66 | 69.53 | 78.32 | | MFBI | 88.14 | 75.59 | 81.38 |
| | S1 | 76.44 | 75.90 | 76.17 | | S1 | 84.32 | 79.76 | 81.98 | | S1 | 88.34 | 78.55 | 83.16 |
| | S2 | 75.96 | 75.85 | 75.91 | | S2 | 88.27 | 74.63 | 80.88 | | S2 | 89.06 | 76.86 | 82.51 |
| Sample31 | eC | 56.11 | 59.01 | 57.52 | Sample32 | eC | 61.19 | 65.94 | 63.48 | Sample33 | eC | 72.82 | 52.61 | 61.09 |
| | MBI | 76.51 | 73.36 | 74.90 | | MBI | 71.91 | 79.62 | 75.57 | | MBI | 70.44 | 83.30 | 76.33 |
| | MFBI | 88.16 | 66.14 | 75.58 | | MFBI | 86.84 | 71.49 | 78.42 | | MFBI | 89.44 | 76.37 | 82.39 |
| | S1 | 88.85 | 75.78 | 81.80 | | S1 | 87.06 | 83.72 | 85.35 | | S1 | 88.73 | 79.30 | 83.75 |
| | S2 | 87.55 | 68.25 | 76.70 | | S2 | 89.62 | 77.81 | 83.30 | | S2 | 88.07 | 78.53 | 83.02 |
| Sample34 | eC | 74.07 | 52.79 | 61.64 | Sample35 | eC | 80.10 | 43.14 | 56.08 | Sample36 | eC | 80.59 | 37.43 | 51.12 |
| | MBI | 76.83 | 73.54 | 75.15 | | MBI | 67.27 | 72.60 | 69.83 | | MBI | 72.95 | 76.43 | 74.65 |
| | MFBI | 86.08 | 68.21 | 76.11 | | MFBI | 99.51 | 66.15 | 79.47 | | MFBI | 86.77 | 70.91 | 78.05 |
| | S1 | 88.60 | 74.13 | 80.72 | | S1 | 95.27 | 69.37 | 80.28 | | S1 | 90.63 | 74.12 | 81.55 |
| | S2 | 87.67 | 72.51 | 79.37 | | S2 | 96.79 | 68.11 | 79.95 | | S2 | 91.48 | 75.73 | 82.86 |
| Sample37 | eC | 57.76 | 32.30 | 41.43 | Sample38 | eC | 55.72 | 14.68 | 23.24 | Sample39 | eC | 69.03 | 42.90 | 52.91 |
| | MBI | 67.96 | 61.47 | 64.55 | | MBI | 62.78 | 78.75 | 69.86 | | MBI | 73.81 | 75.64 | 74.71 |
| | MFBI | 84.26 | 59.77 | 69.93 | | MFBI | 91.79 | 72.90 | 81.27 | | MFBI | 89.36 | 67.93 | 77.17 |
| | S1 | 96.08 | 61.34 | 71.63 | | S1 | 90.79 | 75.81 | 82.63 | | S1 | 87.07 | 72.89 | 79.35 |
| | S2 | 93.71 | 65.27 | 73.35 | | S2 | 89.65 | 76.20 | 82.38 | | S2 | 89.58 | 71.54 | 79.55 |
| Sample40 | eC | 74.53 | 70.15 | 72.27 | Sample41 | eC | 30.80 | 50.63 | 38.30 | Sample42 | eC | 63.55 | 57.60 | 60.43 |
| | MBI | 70.27 | 75.49 | 72.79 | | MBI | 70.12 | 80.87 | 75.11 | | MBI | 72.90 | 78.38 | 75.54 |
| | MFBI | 87.93 | 76.63 | 81.89 | | MFBI | 83.54 | 75.48 | 79.31 | | MFBI | 87.49 | 74.73 | 80.61 |
| | S1 | 85.21 | 79.09 | 82.04 | | S1 | 89.09 | 79.42 | 84.43 | | S1 | 85.94 | 78.09 | 81.83 |
| | S2 | 86.25 | 78.28 | 82.07 | | S2 | 89.41 | 77.84 | 83.22 | | S2 | 84.74 | 78.67 | 81.59 |
| Sample43 | eC | 17.31 | 71.80 | 27.89 | Sample44 | eC | 16.82 | 29.49 | 21.42 | Sample45 | eC | 25.13 | 80.99 | 38.36 |
| | MBI | 53.10 | 84.99 | 65.36 | | MBI | 64.57 | 73.27 | 68.65 | | MBI | 51.31 | 76.92 | 61.56 |
| | MFBI | 83.84 | 63.69 | 72.39 | | MFBI | 86.20 | 62.23 | 72.28 | | MFBI | 84.64 | 57.90 | 68.76 |
| | S1 | 81.14 | 67.22 | 73.53 | | S1 | 84.92 | 67.14 | 74.99 | | S1 | 83.07 | 62.96 | 71.63 |
| | S2 | 83.87 | 66.04 | 73.90 | | S2 | 84.30 | 64.62 | 73.16 | | S2 | 81.59 | 62.77 | 70.95 |
| Sample46 | eC | 18.32 | 32.48 | 23.43 | Sample47 | eC | 25.65 | 82.17 | 39.10 | Sample48 | eC | 18.18 | 73.89 | 29.18 |
| | MBI | 57.13 | 75.22 | 64.94 | | MBI | 64.47 | 75.01 | 69.34 | | MBI | 54.29 | 89.63 | 67.63 |
| | MFBI | 76.81 | 60.92 | 67.95 | | MFBI | 84.66 | 65.31 | 73.73 | | MFBI | 82.67 | 74.86 | 78.57 |
| | S1 | 75.50 | 69.17 | 72.20 | | S1 | 86.06 | 72.31 | 78.59 | | S1 | 82.08 | 80.50 | 81.28 |
| | S2 | 73.27 | 91.74 | 72.50 | | S2 | 84.51 | 73.38 | 76.80 | | S2 | 83.24 | 81.95 | 82.59 |
| Sample49 | eC | 10.52 | 53.07 | 17.56 | Sample50 | eC | 12.53 | 31.65 | 17.95 | Sample51 | eC | 72.11 | 59.17 | 65.00 |
| | MBI | 57.77 | 78.54 | 66.57 | | MBI | 61.63 | 67.97 | 64.64 | | MBI | 57.31 | 67.44 | 61.96 |
| | MFBI | 83.77 | 73.91 | 78.53 | | MFBI | 86.69 | 61.76 | 72.13 | | MFBI | 91.41 | 60.81 | 73.04 |
| | S1 | 84.79 | 75.24 | 79.73 | | S1 | 85.98 | 63.98 | 73.37 | | S1 | 88.27 | 65.04 | 74.90 |
| | S2 | 84.53 | 76.68 | 80.41 | | S2 | 86.87 | 65.68 | 74.81 | | S2 | 86.56 | 87.04 | 95.56 |
| Sample52 | eC | 57.25 | 12.38 | 20.36 | Sample53 | eC | 48.18 | 31.88 | 38.37 | Sample54 | eC | 32.27 | 42.69 | 36.76 |
| | MBI | 59.12 | 70.96 | 64.50 | | MBI | 55.59 | 68.97 | 61.56 | | MBI | 63.10 | 70.80 | 66.73 |
| | MFBI | 86.45 | 69.12 | 76.82 | | MFBI | 86.12 | 61.35 | 71.66 | | MFBI | 68.92 | 73.02 | 70.91 |
| | S1 | 88.68 | 73.98 | 80.67 | | S1 | 85.11 | 64.12 | 73.13 | | S1 | 68.72 | 75.86 | 72.12 |
| | S2 | 85.55 | 75.55 | 80.24 | | S2 | 85.31 | 64.67 | 73.57 | | S2 | 67.04 | 78.99 | 72.53 |
| Sample55 | eC | 47.30 | 38.74 | 42.59 | Sample56 | eC | 68.94 | 57.28 | 62.57 | Sample57 | eC | 42.83 | 46.80 | 38.05 |
| | MBI | 62.09 | 72.35 | 66.83 | | MBI | 64.33 | 75.65 | 69.54 | | MBI | 53.71 | 65.98 | 59.22 |
| | MFBI | 83.47 | 65.33 | 73.29 | | MFBI | 75.12 | 64.81 | 69.58 | | MFBI | 78.26 | 60.83 | 68.46 |
| | S1 | 82.80 | 68.48 | 74.96 | | S1 | 81.99 | 66.55 | 73.47 | | S1 | 83.71 | 67.99 | 75.04 |
| | S2 | 82.25 | 68.10 | 74.51 | | S2 | 82.31 | 67.02 | 73.88 | | S2 | 85.17 | 66.04 | 74.40 |

| Method | Recall | Precision | F1-Score |
|---|---|---|---|
| eCognition | 53.40 ± 19.37 | 50.21 ± 16.43 | 48.40 ± 14.47 |
| MBI | 68.51 ± 8.84 | 74.40 ± 5.17 | 70.93 ± 5.15 |
| MFBI | 86.94 ± 6.67 | 68.15 ± 4.87 | 76.22 ± 4.22 |
| MMFBI Scenario1 | 86.71 ± 5.80 | 72.15 ± 5.21 | 78.60 ± 4.19 |
| MMFBI Scenario2 | 86.64 ± 5.93 | 71.87 ± 5.10 | 78.40 ± 4.16 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bi, Q.; Qin, K.; Zhang, H.; Zhang, Y.; Li, Z.; Xu, K. A Multi-Scale Filtering Building Index for Building Extraction in Very High-Resolution Satellite Imagery. Remote Sens. 2019, 11, 482. https://doi.org/10.3390/rs11050482
