Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism

Abstract

1. Introduction

2. Related Works

2.1. Heritage Information System

2.2. Virtual Reality for Tourism

2.3. Data Capture for Cultural Site

2.4. User-Centered Approaches

3. Context and Problematics

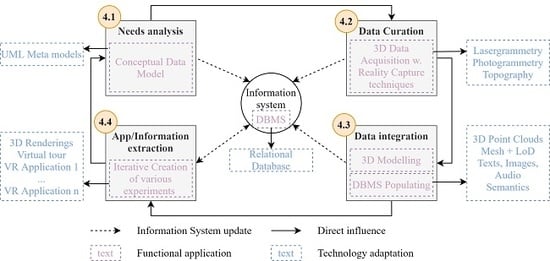

4. Methodology

4.1. Needs Analysis

- Contextualize the project: main facts, target, positioning.

- Characterize the objectives of the project.

- Carry out the work of expressing the needs.

- Conduct the work of collecting user requirements.

- Establish the list of content needs.

- Draw up the list of functional requirements.

- Define, clarify and explain the functionalities that meet each need.

- Prioritize functionalities in order of importance.

- Create a synoptic table of content functions and their impact on the product.

- Identify the resources to be activated for production.

- VR needs to have a use: the value that AR and VR promise to deliver must be clearly understood and relevant in the tourist context [24].

- Quality environment: more authentic VR and AR environments, in which tourists can be fully immersed, should be provided by offering high-quality resolution and sound [85].

- No distraction: users should not be distracted by bugs or irrelevant information [31].

- Consumer-centric: one should carefully consider how the object creates meaning for visitors, how it connects to their values, and how it enables them to create their own version of the experience [24].

4.2. Reality Capture Methodology for 3D Data Acquisition

4.3. Populating the Prototype

4.4. Virtual Reality Application Design

5. Experiments and Results

5.1. Expressed Needs

- The different parts of the castle must be distinguishable. One must be able to identify the different rooms and walls, as well as smaller elements such as windows, doors, and chimneys.

- Materials and their origins must be identifiable for all surfaces of the castle.

- The period must be identified for each part of the castle.

- Embedded information is required both for the construction of the building and for its individual building blocks.

- The information system must be extensible, so that it can be supplemented with other parts of the domain, such as the castle’s garden.

- One must be able to explore a 3D digital reconstruction.

- The solution should allow visitors to experience an immersive reality at a given point in time.

- It must be possible to display the different stages of renovation of the castle, so that one can observe the evolution of the site as well as the construction of specific elements.

- It must be possible to interact with several objects, such as doors.

- Visitors should be guided along an intuitive path adapted to their level of experience.

- The level of realism and the quality of textures are important for tourists.

- The solution should allow multiple visitors at once in a common scene.

5.2. The 3D Acquisition

- Diffuse lighting of the scene should be preferred, to avoid the coexistence of shadowed areas and areas under direct sunlight (the best results are obtained on a cloudy day, without shadows);

- Direct illumination can saturate the images if the scene strongly reflects light (shiny materials);

- The shots will be taken at the highest resolution the camera offers, to produce the most accurate 3D model possible (the resolution greatly impacts the achievable reconstruction precision).
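The dependence of precision on image resolution can be made concrete with the ground sampling distance (GSD), the size of the surface patch covered by one pixel. The minimal sketch below assumes a simple pinhole camera without lens distortion, looking perpendicularly at the surface: GSD equals the sensor pixel pitch multiplied by the camera-to-object distance and divided by the focal length. The function names and the camera values in the example are hypothetical and do not describe the equipment used in this study.

```python
def pixel_pitch_mm(sensor_width_mm: float, image_width_px: int) -> float:
    """Physical width of one sensor pixel, in millimetres."""
    return sensor_width_mm / image_width_px


def ground_sampling_distance_mm(sensor_width_mm: float, image_width_px: int,
                                focal_length_mm: float, distance_m: float) -> float:
    """Object-space footprint of one pixel (mm) on a surface facing the camera.

    Pinhole model without lens distortion: GSD = pixel_pitch * distance / focal_length.
    """
    if focal_length_mm <= 0 or distance_m <= 0:
        raise ValueError("focal length and distance must be positive")
    distance_mm = distance_m * 1000.0
    return pixel_pitch_mm(sensor_width_mm, image_width_px) * distance_mm / focal_length_mm


# Hypothetical camera: 36 mm wide sensor, 6000 px wide images, 35 mm lens, facade 10 m away.
gsd_full = ground_sampling_distance_mm(36.0, 6000, 35.0, 10.0)
# The same shot taken at half the image width.
gsd_half = ground_sampling_distance_mm(36.0, 3000, 35.0, 10.0)
print(f"{gsd_full:.2f} mm/px at full resolution, {gsd_half:.2f} mm/px at half resolution")
# 1.71 mm/px at full resolution, 3.43 mm/px at half resolution
```

Halving the image width doubles the GSD, so each pixel averages twice the surface width; under this model, this is the sense in which camera resolution bounds the detail the reconstruction can recover.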

5.3. Data Integration (Geometry Optimization and Visualization Optimization)

5.3.1. Hard Surface Normal-Map Baking
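Hard-surface normal-map baking transfers the fine relief of a dense (photogrammetric) mesh onto a low-polygon mesh by storing, for each texel, the high-resolution normal expressed in the tangent frame of the low-poly surface. The sketch below shows only that final storage step. It assumes an orthonormal per-texel tangent frame (T, B, N) and the common 8-bit encoding that maps each component from [-1, 1] to [0, 255]. The ray casting that matches each low-poly texel to the high-poly surface is omitted, and all function names are hypothetical.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def to_tangent_space(n_high: Vec3, t: Vec3, b: Vec3, n: Vec3) -> Vec3:
    """Express a high-poly normal in the orthonormal (T, B, N) frame of a low-poly texel."""
    return normalize((dot(n_high, t), dot(n_high, b), dot(n_high, n)))


def encode_rgb8(n_ts: Vec3) -> Tuple[int, int, int]:
    """Map each component from [-1, 1] to an 8-bit channel in [0, 255] (round half up)."""
    r, g, b = (min(255, max(0, math.floor((c * 0.5 + 0.5) * 255.0 + 0.5))) for c in n_ts)
    return (r, g, b)


def decode_rgb8(rgb: Iterable[int]) -> Vec3:
    """Inverse mapping, as a shader performs when it samples the normal map."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in rgb)
    return normalize((x, y, z))


# Low-poly face whose tangent frame coincides with the world axes (hypothetical values).
T, B, N = (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
flat = encode_rgb8(to_tangent_space((0.0, 0.0, 1.0), T, B, N))
tilted = encode_rgb8(to_tangent_space(normalize((1.0, 0.0, 1.0)), T, B, N))
print(flat, tilted)  # (128, 128, 255) (218, 128, 218)
```

An undisturbed surface encodes to the characteristic light blue (128, 128, 255). Any deviation from that value is relief that the low-poly geometry no longer has to carry.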

5.3.2. Draw Call Batching
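Draw call batching reduces CPU overhead by submitting many objects that share the same material (shader and textures) in a single draw call. Packing several textures into one texture atlas is a common way to let more objects share a material. The sketch below is a deliberately simplified counting model, not a game engine's actual batching implementation. It assumes one draw call per object without batching and, with batching, one draw call per group of same-material objects whose combined vertex count stays under a hypothetical `max_vertices_per_batch` budget. The scene contents are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SceneObject:
    name: str
    material: str      # objects sharing one material (shader + textures) may be merged
    vertex_count: int


def draw_calls_unbatched(objects: List[SceneObject]) -> int:
    """Without batching, every object is submitted in its own draw call."""
    return len(objects)


def build_batches(objects: List[SceneObject],
                  max_vertices_per_batch: int) -> Dict[str, List[List[SceneObject]]]:
    """Greedily merge same-material objects into batches that respect a vertex budget."""
    batches: Dict[str, List[List[SceneObject]]] = defaultdict(list)
    for obj in objects:
        groups = batches[obj.material]
        current = sum(o.vertex_count for o in groups[-1]) if groups else 0
        if not groups or current + obj.vertex_count > max_vertices_per_batch:
            groups.append([obj])       # first object or budget exceeded: new draw call
        else:
            groups[-1].append(obj)     # merged into the current draw call
    return dict(batches)


def draw_calls_batched(objects: List[SceneObject], max_vertices_per_batch: int) -> int:
    """One draw call per batch produced by build_batches."""
    return sum(len(groups) for groups in build_batches(objects, max_vertices_per_batch).values())


# Hypothetical facade: 30 windows sharing one material, 10 doors sharing another.
scene = ([SceneObject(f"window_{i}", "window_glass", 500) for i in range(30)]
         + [SceneObject(f"door_{i}", "oak", 800) for i in range(10)])
print(draw_calls_unbatched(scene), draw_calls_batched(scene, 10_000))  # 40 3
```

The vertex budget is why the 30 windows end up in two batches rather than one. The number of distinct materials sets the lower bound on draw calls, which is what atlasing attacks.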

5.3.3. Lightmap Baking
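Lightmap baking moves lighting computation offline. For every texel of the lightmap, the light reaching the corresponding surface point is computed once and stored in a texture, so at runtime the headset only samples it. The sketch below reduces this to the direct diffuse term for a single point light (Lambert's cosine law with inverse-square falloff). The function names, the point-light model and the absence of shadows and indirect bounces are all simplifying assumptions; a production baker evaluates far more of the light transport.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def bake_texel(position: Vec3, normal: Vec3, light_pos: Vec3,
               light_intensity: float, albedo: float) -> float:
    """Outgoing diffuse radiance of one lightmap texel lit by a single point light.

    `normal` must be unit length. Only direct light is computed: no shadow rays and
    no indirect bounces, both of which a production baker would add.
    """
    lx, ly, lz = (light_pos[i] - position[i] for i in range(3))
    dist2 = lx * lx + ly * ly + lz * lz
    cos_theta = max(0.0, (normal[0] * lx + normal[1] * ly + normal[2] * lz) / math.sqrt(dist2))
    irradiance = light_intensity * cos_theta / dist2   # cosine law + inverse-square falloff
    return albedo / math.pi * irradiance                # Lambertian BRDF is albedo / pi


def bake_lightmap(texels: List[Tuple[Vec3, Vec3]], light_pos: Vec3,
                  light_intensity: float, albedo: float) -> List[float]:
    """Bake every (position, normal) texel once; at runtime the values are only looked up."""
    return [bake_texel(p, n, light_pos, light_intensity, albedo) for p, n in texels]


# Hypothetical floor texels (normal +y) and a lamp 2 m above the origin.
floor = [((0.0, 0.0, 0.0), (0.0, 1.0, 0.0)), ((2.0, 0.0, 0.0), (0.0, 1.0, 0.0))]
lightmap = bake_lightmap(floor, light_pos=(0.0, 2.0, 0.0), light_intensity=4.0, albedo=0.5)
print(lightmap)
```

The cost of this loop scales with the number of texels and lights, but it is paid once at build time, which is the trade-off that makes baked lighting attractive on VR hardware.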

5.3.4. Occlusion Culling and Frustum Culling
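Frustum culling discards objects whose bounding volumes lie entirely outside the camera's view volume before they are sent to the GPU. Occlusion culling additionally discards objects hidden behind others and is usually precomputed, so it is not shown here. The sketch assumes the frustum is represented as six inward-facing planes (a point p is inside when n·p + d ≥ 0) and tests bounding spheres against them. The hypothetical `frustum_90deg` helper builds a symmetric 90° frustum for a camera at the origin looking down -z.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Plane = Tuple[Vec3, float]  # (unit normal pointing into the frustum, offset d)


def make_plane(nx: float, ny: float, nz: float, d: float) -> Plane:
    """Normalize so that n . p + d is a true signed distance (positive inside)."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return ((nx / length, ny / length, nz / length), d / length)


def frustum_90deg(near: float, far: float) -> List[Plane]:
    """Camera at the origin looking down -z with 90-degree horizontal and vertical FOV."""
    return [
        make_plane(0.0, 0.0, -1.0, -near),  # near plane:  -z >= near
        make_plane(0.0, 0.0, 1.0, far),     # far plane:   -z <= far
        make_plane(1.0, 0.0, -1.0, 0.0),    # left:    x >= z
        make_plane(-1.0, 0.0, -1.0, 0.0),   # right:  -x >= z
        make_plane(0.0, 1.0, -1.0, 0.0),    # bottom:  y >= z
        make_plane(0.0, -1.0, -1.0, 0.0),   # top:    -y >= z
    ]


def sphere_visible(center: Vec3, radius: float, planes: List[Plane]) -> bool:
    """Cull a bounding sphere only if it lies entirely behind at least one plane.

    The test is conservative: spheres near the frustum corners may be kept even though
    they are outside, which costs a little GPU work but never removes a visible object.
    """
    for (nx, ny, nz), d in planes:
        if nx * center[0] + ny * center[1] + nz * center[2] + d < -radius:
            return False
    return True


planes = frustum_90deg(near=0.1, far=100.0)
print(sphere_visible((0.0, 0.0, -10.0), 1.0, planes))   # True: straight ahead
print(sphere_visible((0.0, 0.0, 10.0), 1.0, planes))    # False: behind the camera
print(sphere_visible((50.0, 0.0, -10.0), 1.0, planes))  # False: far to the right
```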

5.3.5. Levels of Detail (LOD)
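Level-of-detail rendering swaps a mesh for progressively simplified versions as it covers fewer pixels on screen. The simplified versions can be produced, for example, by quadric-based simplification. The minimal sketch below assumes that selection is driven by the fraction of the viewport height covered by the object's bounding sphere under a perspective camera. The function names and the threshold values are hypothetical and are not the settings of the prototype.

```python
import math
from typing import Sequence


def screen_height_fraction(radius: float, distance: float, vertical_fov_deg: float) -> float:
    """Approximate fraction of the viewport height covered by a bounding sphere.

    Perspective camera: the visible height at `distance` is 2 * distance * tan(fov / 2),
    and the sphere spans roughly 2 * radius of it.
    """
    half_fov = math.radians(vertical_fov_deg) / 2.0
    return min(1.0, radius / (distance * math.tan(half_fov)))


def select_lod(fraction: float, thresholds: Sequence[float]) -> int:
    """Pick an LOD index: 0 is the full-detail mesh, len(thresholds) means 'culled'.

    thresholds must be strictly decreasing, e.g. (0.5, 0.2, 0.05): LOD0 while the object
    covers at least 50% of the screen height, LOD1 down to 20%, LOD2 down to 5%.
    """
    for level, threshold in enumerate(thresholds):
        if fraction >= threshold:
            return level
    return len(thresholds)


# Hypothetical 5 m-radius tower seen with a 90-degree vertical field of view.
levels = [select_lod(screen_height_fraction(5.0, d, 90.0), (0.5, 0.2, 0.05))
          for d in (8.0, 20.0, 60.0, 200.0)]
print(levels)  # [0, 1, 2, 3]
```

Selecting by screen coverage rather than raw distance keeps the switch points consistent when the field of view or the object size changes.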

5.4. VR Design

5.4.1. The Locomotion System

- Keeping the up-axis of the scene aligned with the up-axis of the physical world.

- Keeping the scale of the scene fixed.

- Keeping the rotation of the scene fixed.
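The three constraints above can be enforced by a teleportation-style update that only ever translates the user's rig. The minimal sketch below assumes a y-up coordinate system and a hypothetical `RigPose` made of a position, a yaw angle and a uniform scale. It also assumes the tracked head offset is already expressed in scene axes. None of these names correspond to a specific VR SDK.

```python
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]  # y is the up-axis, as in the physical world


@dataclass(frozen=True)
class RigPose:
    """Hypothetical pose of the VR rig (tracking-space origin) inside the scene."""
    position: Vec3
    yaw_deg: float = 0.0  # rotation about the up-axis only; pitch and roll are never represented
    scale: float = 1.0    # uniform tracking-space-to-scene scale


def teleport(rig: RigPose, head_offset: Vec3, target_floor: Vec3) -> RigPose:
    """Translate the rig so that the user's head ends up above `target_floor`.

    `head_offset` is the tracked head position relative to the rig origin, already
    expressed in scene axes. Only the position changes: yaw and scale are copied as-is,
    so the scene's up-axis, scale and rotation stay fixed.
    """
    new_position = (
        target_floor[0] - head_offset[0],  # horizontal: put the head over the target
        target_floor[1],                   # vertical: rig floor on the target surface
        target_floor[2] - head_offset[2],
    )
    return replace(rig, position=new_position)


rig = RigPose(position=(0.0, 0.0, 0.0), yaw_deg=30.0)
moved = teleport(rig, head_offset=(0.3, 1.7, -0.2), target_floor=(10.0, 2.0, 5.0))
print(moved)
```

Because the update never writes pitch, roll, yaw or scale, the only visual change is an instantaneous translation. This is consistent with keeping the virtual horizon locked to the physical one.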

5.4.2. Multi-User VR Environments

5.4.3. Panoramic 360° Pictures
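Panoramic 360° pictures are commonly stored in the equirectangular projection, where longitude maps linearly to the horizontal pixel axis and latitude to the vertical one. When such a picture is shown in VR, each view direction is looked up in the image. The sketch below assumes a y-up, -z-forward convention with the image centre straight ahead; axis conventions differ between tools and engines. The function name is hypothetical.

```python
import math
from typing import Tuple


def direction_to_equirect(direction: Tuple[float, float, float],
                          width: int, height: int) -> Tuple[float, float]:
    """Map a 3D view direction (y-up, -z forward) to continuous pixel coordinates (u, v).

    u grows to the right from the left edge (longitude -pi .. pi), v grows downward
    from the top edge (latitude +pi/2 .. -pi/2).
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    longitude = math.atan2(x, -z)   # 0 straight ahead, +pi/2 to the right
    latitude = math.asin(y)         # +pi/2 straight up
    u = (longitude / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - latitude / math.pi) * height
    return u, v


# Hypothetical 4096 x 2048 panorama.
print(direction_to_equirect((0.0, 0.0, -1.0), 4096, 2048))  # centre of the image
print(direction_to_equirect((1.0, 0.0, 0.0), 4096, 2048))   # three quarters across
```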

6. Discussions

7. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ortiz, P.; Sánchez, H.; Pires, H.; Pérez, J.A. Experiences about fusioning 3D digitalization techniques for cultural heritage documentation. In Proceedings of the ISPRS Commission V Symposium ‘Image Engineering and Vision Metrology’, Dresden, Germany, 25–27 September 2006; pp. 224–229.

- Mayer, H.; Mosch, M.; Peipe, J. Comparison of photogrammetric and computer vision techniques: 3D reconstruction and visualization of Wartburg Castle. In Proceedings of the XIXth International Symposium, CIPA 2003: New Perspectives to Save Cultural Heritage, Antalya, Turkey, 30 September–4 October 2003; pp. 103–108.

- Koch, M.; Kaehler, M. Combining 3D laser-Scanning and close-range Photogrammetry—An approach to Exploit the Strength of Both methods. In Proceedings of the Computer Applications to Archaeology, Williamsburg, VA, USA, 22–26 March 2009; pp. 22–26.

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A survey of augmented, virtual, and mixed reality for cultural heritage. J. Comput. Cult. Herit. 2018, 11, 1–36.

- Kersten, T.P.; Tschirschwitz, F.; Deggim, S.; Lindstaedt, M. Virtual reality for cultural heritage monuments—from 3D data recording to immersive visualisation. In Proceedings of the Euro-Mediterranean Conference, Nicosia, Cyprus, 29 October 2018; Springer: Cham, Switzerland, 2018; pp. 74–83.

- Santana, M. Heritage recording, documentation and information systems in preventive maintenance. Reflect. Prev. Conserv. Maint. Monit. Monum. Sites 2013, 6, 10.

- Chiabrando, F.; Donato, V.; Lo Turco, M.; Santagati, C. Cultural Heritage Documentation, Analysis and Management Using Building Information Modelling: State of the Art and Perspectives. In Mechatronics for Cultural Heritage and Civil Engineering; Ottaviano, E., Pelliccio, A., Gattulli, V., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 181–202. ISBN 978-3-319-68646-2.

- Liu, X.; Wang, X.; Wright, G.; Cheng, J.; Li, X.; Liu, R. A State-of-the-Art Review on the Integration of Building Information Modeling (BIM) and Geographic Information System (GIS). Isprs Int. J. Geo-Inf. 2017, 6, 53.

- Poux, F.; Billen, R. A Smart Point Cloud Infrastructure for intelligent environments. In Laser Scanning: An Emerging Technology in Structural Engineering; Lindenbergh, R., Belen, R., Eds.; ISPRS Book Series; Taylor & Francis Group/CRC Press: Boca Raton, FL, USA, 2019.

- Poux, F.; Hallot, P.; Neuville, R.; Billen, R. Smart point cloud: Definition and remaining challenges. Isprs Ann. Photogramm. Remote. Sens. Spat. Inf. Sci. 2016, IV-2/W1, 119–127.

- Palomar, I.J.; García Valldecabres, J.L.; Tzortzopoulos, P.; Pellicer, E. An online platform to unify and synchronise heritage architecture information. Autom. Constr. 2020, 110, 103008.

- Dutailly, B.; Feruglio, V.; Ferrier, C.; Chapoulie, R.; Bousquet, B.; Bassel, L.; Mora, P.; Lacanette, D. Accéder en 3D aux données de terrain pluridisciplinaires-un outil pour l’étude des grottes ornées. In Proceedings of the Le réel et le virtuel, Marseille, France, 9–11 May 2019.

- Potenziani, M.; Callieri, M.; Scopigno, R. Developing and Maintaining a Web 3D Viewer for the CH Community: An Evaluation of the 3DHOP Framework. In Proceedings of the GCH, Vienna, Austria, 12–15 November 2018; pp. 169–178.

- Cheong, R. The virtual threat to travel and tourism. Tour. Manag. 1995, 16, 417–422.

- Perry Hobson, J.S.; Williams, A.P. Virtual reality: A new horizon for the tourism industry. J. Vacat. Market. 1995, 1, 124–135.

- Musil, S.; Pigel, G. Can Tourism be Replaced by Virtual Reality Technology? In Information and Communications Technologies in Tourism; Springer: Vienna, Austria, 1994; pp. 87–94.

- Williams, P.; Hobson, J.P. Virtual reality and tourism: Fact or fantasy? Tour. Manag. 1995, 16, 423–427.

- Perry Hobson, J.S.; Williams, P. Virtual Reality: The Future of Leisure and Tourism? World Leis. Recreat. 1997, 39, 34–40.

- Google’s latest VR app lets you gaze at prehistoric paintings Engadget. Available online: www.engadget.com (accessed on 28 March 2020).

- Tsingos, N.; Gallo, E.; Drettakis, G. Perceptual audio rendering of complex virtual environments. Acm Trans. Graph. (Tog) 2004, 23, 249–258.

- Dinh, H.Q.; Walker, N.; Hodges, L.F.; Song, C.; Kobayashi, A. Evaluating the importance of multi-sensory input on memory and the sense of presence in virtual environments. In Proceedings of the IEEE Virtual Reality (Cat. No. 99CB36316), Houston, TX, USA, 13–17 March 1999; pp. 222–228.

- Tussyadiah, I.P.; Wang, D.; Jung, T.H.; tom Dieck, M.C. Virtual reality, presence, and attitude change: Empirical evidence from tourism. Tour. Manag. 2018, 66, 140–154.

- Top Trends in the Gartner Hype Cycle for Emerging Technologies, 2017. Smarter with Gartner. Available online: www.gartner.com (accessed on 28 March 2020).

- Han, D.-I.D.; Weber, J.; Bastiaansen, M.; Mitas, O.; Lub, X. Virtual and augmented reality technologies to enhance the visitor experience in cultural tourism. In Augmented Reality and Virtual Reality; Springer: Nature, Switzerland, 2019; pp. 113–128.

- Guttentag, D.A. Virtual reality: Applications and implications for tourism. Tour. Manag. 2010, 31, 637–651.

- Jacobson, J.; Ellis, M.; Ellis, S.; Seethaler, L. Immersive Displays for Education Using CaveUT. In Proceedings of the EdMedia + Innovate Learning, Montreal, QC, Canada, 27 June–2 July 2005; Kommers, P., Richards, G., Eds.; Association for the Advancement of Computing in Education (AACE): Montreal, QC, Canada, 2005; pp. 4525–4530.

- Mikropoulos, T.A. Presence: A unique characteristic in educational virtual environments. Virtual Real. 2006, 10, 197–206.

- Roussou, M. Learning by doing and learning through play: An exploration of interactivity in virtual environments for children. Comput. Entertain. (Cie) 2004, 2, 10.

- Gimblett, H.R.; Richards, M.T.; Itami, R.M. RBSim: Geographic Simulation of Wilderness Recreation Behavior. J. For. 2001, 99, 36–42.

- Marasco, A.; Buonincontri, P.; van Niekerk, M.; Orlowski, M.; Okumus, F. Exploring the role of next-generation virtual technologies in destination marketing. J. Destin. Market. Manag. 2018, 9, 138–148.

- Tussyadiah, I.P.; Wang, D.; Jia, C.H. Virtual reality and attitudes toward tourism destinations. In Information and Communication Technologies in Tourism 2017; Springer: Cham, Switzerland, 2017; pp. 229–239.

- Buhalis, D.; Law, R. Progress in information technology and tourism management: 20 years on and 10 years after the Internet—The state of eTourism research. Tour. Manag. 2008, 29, 609–623.

- Wei, W.; Qi, R.; Zhang, L. Effects of virtual reality on theme park visitors’ experience and behaviors: A presence perspective. Tour. Manag. 2019, 71, 282–293.

- Wilkinson, C. Childhood, mobile technologies and everyday experiences: Changing technologies = changing childhoods? Child. Geogr. 2016, 14, 372–373.

- Kharroubi, A.; Hajji, R.; Billen, R.; Poux, F. Classification and integration of massive 3d points clouds in a virtual reality (VR) environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 42, 165–171.

- Virtanen, J.P.; Daniel, S.; Turppa, T.; Zhu, L.; Julin, A.; Hyyppä, H.; Hyyppä, J. Interactive dense point clouds in a game engine. Isprs J. Photogramm. Remote Sen. 2020, 163, 375–389.

- Poux, F. The Smart Point Cloud: Structuring 3D Intelligent Point Data. Ph.D. Thesis, Université de Liège, Liège, Belgique, 2019.

- Berger, M.; Tagliasacchi, A.; Seversky, L.M.; Alliez, P.; Guennebaud, G.; Levine, J.A.; Sharf, A.; Silva, C.T. A Survey of Surface Reconstruction from Point Clouds. Comput. Graph. Forum 2017, 36, 301–329.

- Poux, F.; Neuville, R.; Hallot, P.; Billen, R. Model for Semantically Rich Point Cloud Data. Isprs Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-4/W5, 107–115.

- Schütz, M.; Krösl, K.; Wimmer, M. Real-Time Continuous Level of Detail Rendering of Point Clouds. In Proceedings of the IEEE VR 2019, the 26th IEEE Conference on Virtual Reality and 3D User Interfaces, Osaka, Japan, 23–27 March 2019; pp. 1–8.

- Remondino, F. From point cloud to surface: The modeling and visualization problem. Isprs—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2003, XXXIV, 24–28.

- Koenderink, J.J.; van Doorn, A.J. Affine structure from motion. J. Opt. Soc. Am. A 1991, 8, 377.

- Haala, N. The Landscape of Dense Image Matching Algorithms. J. Opt. Soc. Am. A 2013, 8, 377–385.

- Quintero, M.S.; Blake, B.; Eppich, R. Conservation of Architectural Heritage: The Role of Digital Documentation Tools: The Need for Appropriate Teaching Material. Int. J. Arch. Comput. 2007, 5, 239–253.

- Remondino, F. Heritage Recording and 3D Modeling with Photogrammetry and 3D Scanning. Remote Sens. 2011, 3, 1104–1138.

- Noya, N.C.; García, Á.L.; Ramírez, F.C. Combining photogrammetry and photographic enhancement techniques for the recording of megalithic art in north-west Iberia. Digit. Appl. Archaeol. Cult. Herit. 2015, 2, 89–101.

- Yastikli, N. Documentation of cultural heritage using digital photogrammetry and laser scanning. J. Cult. Herit. 2007, 8, 423–427.

- Poux, F.; Neuville, R.; Van Wersch, L.; Nys, G.-A.; Billen, R. 3D Point Clouds in Archaeology: Advances in Acquisition, Processing and Knowledge Integration Applied to Quasi-Planar Objects. Geosciences 2017, 7, 96.

- Tabkha, A.; Hajji, R.; Billen, R.; Poux, F. Semantic enrichment of point cloud by automatic extraction and enhancement of 360° panoramas. Isprs—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W17, 355–362.

- Napolitano, R.K.; Scherer, G.; Glisic, B. Virtual tours and informational modeling for conservation of cultural heritage sites. J. Cult. Herit. 2018, 29, 123–129.

- Rua, H.; Alvito, P. Living the past: 3D models, virtual reality and game engines as tools for supporting archaeology and the reconstruction of cultural heritage—the case-study of the Roman villa of Casal de Freiria. J. Archaeol. Sci. 2011, 38, 3296–3308.

- Poux, F.; Billen, R. Voxel-based 3D point cloud semantic segmentation: Unsupervised geometric and relationship featuring vs. deep learning methods. Isprs Int. J. Geo-Inf. 2019, 8, 213.

- Poux, F.; Neuville, R.; Billen, R. Point Cloud Classification Of Tesserae From Terrestrial Laser Data Combined With Dense Image Matching For Archaeological Information Extraction. Isprs Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W2, 203–211.

- Koutsoudis, A.; Vidmar, B.; Ioannakis, G.; Arnaoutoglou, F.; Pavlidis, G.; Chamzas, C. Multi-image 3D reconstruction data evaluation. J. Cult. Herit. 2014, 15, 73–79.

- Mozas-Calvache, A.T.; Pérez-García, J.L.; Cardenal-Escarcena, F.J.; Mata-Castro, E.; Delgado-García, J. Method for photogrammetric surveying of archaeological sites with light aerial platforms. J. Archaeol. Sci. 2012, 39, 521–530.

- Burens, A.; Grussenmeyer, P.; Guillemin, S.; Carozza, L.; Lévêque, F.; Mathé, V. Methodological Developments in 3D Scanning and Modelling of Archaeological French Heritage Site: The Bronze Age Painted Cave of “Les Fraux”, Dordogne (France). Isprs—Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, XL-5/W2, 131–135.

- Kai-Browne, A.; Kohlmeyer, K.; Gonnella, J.; Bremer, T.; Brandhorst, S.; Balda, F.; Plesch, S.; Lehmann, D. 3D Acquisition, Processing and Visualization of Archaeological Artifacts. In Proceedings of the EuroMed 2016: Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection, Limassol, Cyprus, 31 October–5 November 2016; Springer: Cham, Switzerland, 2016; pp. 397–408.

- Armesto-González, J.; Riveiro-Rodríguez, B.; González-Aguilera, D.; Rivas-Brea, M.T. Terrestrial laser scanning intensity data applied to damage detection for historical buildings. J. Archaeol. Sci. 2010, 37, 3037–3047.

- James, M.R.; Quinton, J.N. Ultra-rapid topographic surveying for complex environments: The hand-held mobile laser scanner (HMLS). Earth Surf. Process. Landf. 2014, 39, 138–142.

- Lauterbach, H.; Borrmann, D.; Heß, R.; Eck, D.; Schilling, K.; Nüchter, A. Evaluation of a Backpack-Mounted 3D Mobile Scanning System. Remote Sens. 2015, 7, 13753–13781.

- Slama, C.; Theurer, C.; Henriksen, S. Manual of Photogrammetry; Slama, C., Theurer, C., Henriksen, S.W., Eds.; Taylor & Francis Group/CRC Press: Boca Raton, FL, USA, 1980; ISBN 0-937294-01-2.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Weinmann, M. Reconstruction and Analysis of 3D Scenes; Springer International Publishing: Cham, Switzerland, 2016; ISBN 978-3-319-29244-1.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

- Pix4D Terrestrial 3D Mapping Using Fisheye and Perspective Sensors-Support. Available online: https://support.pix4d.com/hc/en-us/articles/204220385-Scientific-White-Paper-Terrestrial-3D-Mapping-Using-Fisheye-and-Perspective-Sensors#gsc.tab=0 (accessed on 18 April 2016).

- Bentley 3D City Geographic Information System—A Major Step Towards Sustainable Infrastructure. Available online: https://www.bentley.com/en/products/product-line/reality-modeling-software/contextcapture (accessed on 12 February 2020).

- Agisoft LLC Agisoft Photoscan. Available online: http://www.agisoft.com/pdf/photoscan_presentation.pdf (accessed on 12 February 2020).

- nFrames. Available online: http://nframes.com/ (accessed on 12 February 2020).

- Changchang Wu VisualSFM. Available online: http://ccwu.me/vsfm/ (accessed on 12 February 2020).

- Moulon, P. openMVG. Available online: http://imagine.enpc.fr/~moulonp/openMVG/ (accessed on 12 February 2020).

- Autodesk. Autodesk: 123D catch. Available online: http://www.123dapp.com/catch (accessed on 12 February 2020).

- PROFACTOR GmbH ReconstructMe. Available online: http://reconstructme.net/ (accessed on 12 February 2020).

- Eos Systems Inc Photomodeler. Available online: http://www.photomodeler.com/index.html (accessed on 12 February 2020).

- Capturing Reality s.r.o RealityCapture. Available online: https://www.capturingreality.com (accessed on 12 February 2020).

- Bassier, M.; Vergauwen, M.; Poux, F. Point Cloud vs. Mesh Features for Building Interior Classification. Remote Sens. 2020, 12, 2224.

- Garrett, J.J. The elements of user experience: User-centered design for the Web and beyond. Choice Rev. Online 2011, 49.

- Gasson, S. Human-Centered Vs. User-Centered Approaches to Information System Design. J. Inf. Technol. Theory Appl. 2003, 5, 29–46.

- Reunanen, M.; Díaz, L.; Horttana, T. A holistic user-centered approach to immersive digital cultural heritage installations: Case Vrouw Maria. J. Comput. Cult. Herit. 2015, 7, 1–16.

- Barbieri, L.; Bruno, F.; Muzzupappa, M. User-centered design of a virtual reality exhibit for archaeological museums. Int. J. Interact. Des. Manuf. 2018, 12, 561–571.

- Ibrahim, N.; Ali, N.M. A conceptual framework for designing virtual heritage environment for cultural learning. J. Comput. Cult. Herit. 2018, 11, 1–27.

- Cipolla Ficarra, F.V.; Cipolla Ficarra, M. Multimedia, user-centered design and tourism: Simplicity, originality and universality. Stud. Comput. Intell. 2008, 142, 461–470.

- Loizides, F.; El Kater, A.; Terlikas, C.; Lanitis, A.; Michael, D. Presenting Cypriot Cultural Heritage in Virtual Reality: A User Evaluation. In LNCS; Springer: Cham, Switzerland, 2014; Volume 8740, pp. 572–579.

- Sukaviriya, N.; Sinha, V.; Ramachandra, T.; Mani, S.; Stolze, M. User-Centered Design and Business Process Modeling: Cross Road in Rapid Prototyping Tools. In Human-Computer Interaction–INTERACT 2007. INTERACT 2007. Lecture Notes in Computer Science; Baranauskas, C., Palanque, P., Abascal, J., Barbosa, S.D.J., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4662 LNCS, pp. 165–178.

- Kinzie, M.B.; Cohn, W.F.; Julian, M.F.; Knaus, W.A. A user-centered model for Web site design: Needs assessment, user interface design, and rapid prototyping. J. Am. Med. Inform. Assoc. 2002, 9, 320–330.

- Jung, T.; tom Dieck, M.C.; Lee, H.; Chung, N. Effects of virtual reality and augmented reality on visitor experiences in museum. In Information and Communication Technologies in Tourism 2016; Springer: Cham, Switzerland, 2016; pp. 621–635.

- Treffer, M. Un Système d’Information Géographique 3D Pour le Château de Jehay; Définition des besoins et modélisation conceptuelle sur base de CityGML. Master’s Thesis, Université de Liège, Liège, Belgique, 2017.

- Besl, P.J.; McKay, N.D. A Method for Registration of 3-D Shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- AliceVision Meshroom-3D Reconstruction Software. Available online: https://alicevision.org/#meshroom (accessed on 30 July 2019).

- IGN France MicMac. Available online: https://micmac.ensg.eu/index.php/Accueil (accessed on 30 July 2019).

- Gonzalez-Aguilera, D.; López-Fernández, L.; Rodriguez-Gonzalvez, P.; Hernandez-Lopez, D.; Guerrero, D.; Remondino, F.; Menna, F.; Nocerino, E.; Toschi, I.; Ballabeni, A.; et al. GRAPHOS-open-source software for photogrammetric applications. Photogramm. Rec. 2018, 33, 11–29.

- Garland, M. Quadric-Based Polygonal Surface Simplification. Master’s Thesis, Carnegie Mellon University, Pittsburgh, PA, USA, 1999.

- Unity. Unity-Manual: Draw Call Batching; Unity Technologies: San Francisco, CA, USA, 2017.

- Webster, N.L. High poly to low poly workflows for real-time rendering. J. Vis. Commun. Med. 2017, 40, 40–47.

- Ryan, J. Photogrammetry for 3D Content Development in Serious Games and Simulations; MODSIM: San Francisco, CA, USA, 2019; pp. 1–9.

- Group, F. OpenGL Insights, 1st ed.; Cozzi, P., Riccio, C., Eds.; A K Peters/CRC Press: Boca Raton, FL, USA, 2012; ISBN 9781439893777.

- NVIDIA Corporation SDK White Paper Improve Batching Using Texture Atlases; SDK White Paper; NVIDIA: Santa Clara, CA, USA, 2004.

- Gregory, J. Game Engine Architecture; A K Peters/CRC Press: Boca Raton, FL, USA, 2014; Volume 47, ISBN 9781466560062.

- Kajiya, J.T. The Rendering Equation. Acm Siggraph Comput. Graph. 1986, 20, 143–150.

- Nirenstein, S.; Blake, E.; Gain, J. Exact From-Region Visibility Culling. In Proceedings of the Thirteenth Eurographics Workshop on Rendering, Pisa, Italy, 26–28 June 2002.

- LaViola, J.J., Jr. A discussion of cybersickness in virtual environments. ACM Sigchi Bull. 2000, 32, 47–56.

- Templeman, J.N.; Denbrook, P.S.; Sibert, L.E. Virtual locomotion: Walking in place through virtual environments. Presence: Teleoper. Virtual Environ. 1999, 8, 598–617.

- Tregillus, S.; Folmer, E. VR-STEP: Walking-in-Place using Inertial Sensing for Hands Free Navigation in Mobile VR Environments. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems—CHI ’16, San Jose, CA, USA, 7–12 May 2016; pp. 1250–1255.

- McCauley, M.E.; Sharkey, T.J. Cybersickness: Perception of Self-Motion in Virtual Environment. Presence Teleoper. Virtual Environ. 1992, 1, 311–318.

- Parger, M.; Mueller, J.H.; Schmalstieg, D.; Steinberger, M. Human upper-body inverse kinematics for increased embodiment in consumer-grade virtual reality. In Proceedings of the 24th ACM Symposium on Virtual Reality Software and Technology, Tokyo, Japan, 28 November 2018; pp. 1–10.

- Aristidou, A.; Lasenby, J. FABRIK: A fast, iterative solver for the Inverse Kinematics problem. Graph. Model. 2011, 73, 243–260.

- Maciel, A.; De, S. Extending FABRIK with model constraints. Graph. Model. Wil. Online Libr. 2015, 73, 243–260.

- Schyns, O. Locomotion and Interactions in Virtual Reality; Liège University: Liège, Belgique, 2018.

- Poux, F.; Neuville, R.; Nys, G.-A.; Billen, R. 3D Point Cloud Semantic Modelling: Integrated Framework for Indoor Spaces and Furniture. Remote Sens. 2018, 10, 1412.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Poux, F.; Valembois, Q.; Mattes, C.; Kobbelt, L.; Billen, R. Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism. Remote Sens. 2020, 12, 2583. https://doi.org/10.3390/rs12162583