Detection of Longhorned Borer Attack and Assessment in Eucalyptus Plantations Using UAV Imagery

Abstract

1. Introduction

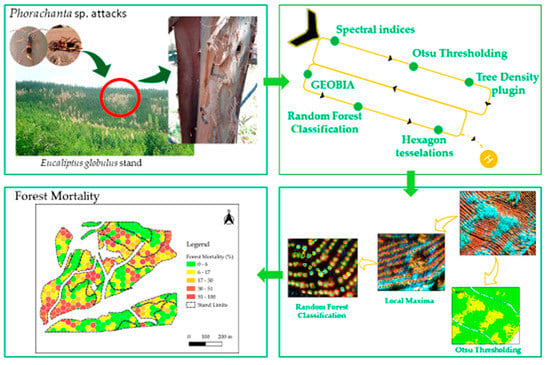

2. Materials and Methods

2.1. Study Area

2.2. Data Acquisition

2.3. Selected Vegetation Indices

2.4. Pixel-Based Analysis

2.4.1. Otsu Thresholding Method

2.4.2. Local Maxima of a Sliding Window

2.5. Object-Based Analysis and Classification

2.6. Accuracy Assessment

2.7. Density Forest Maps

3. Results

3.1. Spectra Details and Vegetation Indexes

3.2. Pixel-Based Analysis

Otsu Thresholding Analysis

3.3. Object-Based Analysis and Classification

3.4. Accuracy Assessment

3.4.1. Otsu Thresholding

3.4.2. Object-Based Analysis and Classification

3.5. Density Forest Maps

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Histograms of Otsu Thresholding Analysis

References

- Rassati, D.; Lieutier, F.; Faccoli, M. Alien Wood-Boring Beetles in Mediterranean Regions. In Insects and Diseases of Mediterranean Forest Systems; Paine, T.D., Lieutier, F., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 293–327.

- Estay, S. Invasive insects in the Mediterranean forests of Chile. In Insects and Diseases of Mediterranean Forest Systems; Paine, T.D., Lieutier, F., Eds.; Springer: Cham, Switzerland, 2016; pp. 379–396.

- Hanks, L.M.; Paine, T.D.; Millar, J.C.; Hom, J.L. Variation among Eucalyptus species in resistance to eucalyptus longhorned borer in Southern California. Entomol. Exp. Appl. 1995, 74, 185–194.

- Mendel, Z. Seasonal development of the eucalypt borer Phoracantha semipunctata, in Israel. Phytoparasitica 1985, 13, 85–93.

- Hanks, L.M.; Paine, T.D.; Millar, J.C. Mechanisms of resistance in Eucalyptus against larvae of the Eucalyptus Longhorned Borer (Coleoptera: Cerambycidae). Environ. Entomol. 1991, 20, 1583–1588.

- Paine, T.D.; Millar, J.C. Insect pests of eucalypts in California: Implications of managing invasive species. Bull. Entomol. Res. 2002, 92, 147–151.

- Caldeira, M.C.; Fernández, V.; Tomé, J.; Pereira, J.S. Positive effect of drought on longicorn borer larval survival and growth on eucalyptus trunks. Ann. For. Sci. 2002, 59, 99–106.

- Soria, F.; Borralho, N.M.G. The genetics of resistance to Phoracantha semipunctata attack in Eucalyptus globulus in Spain. Silvae Genet. 1997, 46, 365–369.

- Seaton, S.; Matusick, G.; Ruthrof, K.X.; Hardy, G.E.J. Outbreaks of Phoracantha semipunctata in response to severe drought in a Mediterranean Eucalyptus forest. Forests 2015, 6, 3868–3881.

- Tirado, L.G. Phoracantha semipunctata dans le Sud-Ouest espagnol: Lutte et dégâts. Bull. EPPO 1986, 16, 289–292.

- Wotherspoon, K.; Wardlaw, T.; Bashford, R.; Lawson, S. Relationships between annual rainfall, damage symptoms and insect borer populations in midrotation Eucalyptus nitens and Eucalyptus globulus plantations in Tasmania: Can static traps be used as an early warning device? Aust. For. 2014, 77, 15–24.

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14.

- Ortiz, S.M.; Breidenbach, J.; Kändler, G. Early detection of bark beetle green attack using Terrasar-X and RapidEye data. Remote Sens. 2013, 5, 1912–1931.

- Wulder, M.A.; Dymond, C.C.; White, J.C.; Leckie, D.G.; Carroll, A.L. Surveying mountain pine beetle damage of forests: A review of remote sensing opportunities. For. Ecol. Manag. 2006, 221, 27–41.

- Hall, R.; Castilla, G.; White, J.C.; Cooke, B.; Skakun, R. Remote sensing of forest pest damage: A review and lessons learned from a Canadian perspective. Can. Entomol. 2016, 148, 296–356.

- Senf, C.; Seidl, R.; Hostert, P. Remote sensing of forest insect disturbances: Current state and future directions. Int. J. Appl. Earth Obs. Geoinf. 2017, 60, 49–60.

- Gómez, C.; Alejandro, P.; Hermosilla, T.; Montes, F.; Pascual, C.; Ruiz, L.A.; Álvarez-Taboada, F.; Tanase, M.; Valbuena, R. Remote sensing for the Spanish forests in the 21st century: A review of advances, needs, and opportunities. For. Syst. 2019, 28, 1.

- Meddens, A.J.H.; Hicke, J.A.; Vierling, L.A.; Hudak, A.T. Evaluating methods to detect bark beetle-caused tree mortality using single-date and multi-date Landsat imagery. Remote Sens. Environ. 2013, 132, 49–58.

- Waser, L.T.; Küchler, M.; Jütte, K.; Stampfer, T. Evaluating the Potential of WorldView-2 Data to Classify Tree Species and Different Levels of Ash Mortality. Remote Sens. 2014, 6, 4515–4545.

- Oumar, Z.; Mutanga, O. Using WorldView-2 bands and indices to predict bronze bug (Thaumastocoris peregrinus) damage in plantation forests. Int. J. Remote Sens. 2013, 34, 2236–2249.

- Stone, C.; Coops, N.C. Assessment and monitoring of damage from insects in Australian eucalypt forests and commercial plantations. Aust. J. Entomol. 2004, 43, 283–292.

- Lehmann, J.R.K.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of Unmanned Aerial System–Based CIR Images in Forestry—A New Perspective to Monitor Pest Infestation Levels. Forests 2015, 6, 594–612.

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV–Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree–Level. Remote Sens. 2015, 7, 15467–15493.

- Smigaj, M.; Gaulton, R.; Barr, S.L.; Suárez, J.C. UAV–Borne Thermal Imaging for Forest Health Monitoring: Detection of Disease–Induced Canopy Temperature Increase. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 349–354.

- Otsu, K.; Pla, M.; Vayreda, J.; Brotons, L. Calibrating the Severity of Forest Defoliation by Pine Processionary Moth with Landsat and UAV Imagery. Sensors 2018, 18, 3278.

- Otsu, K.; Pla, M.; Duane, A.; Cardil, A.; Brotons, L. Estimating the threshold of detection on tree crown defoliation using vegetation indices from UAS multispectral imagery. Drones 2019, 3, 80.

- Pourazar, H.; Samadzadegan, F.; Javan, F. Aerial multispectral imagery for plant disease detection: Radiometric calibration necessity assessment. Eur. J. Remote Sens. 2019, 52 (Suppl. S3), 17–31.

- Iordache, M.D.; Mantas, V.; Baltazar, E.; Pauly, K.; Lewyckyj, N. A Machine Learning Approach to Detecting Pine Wilt Disease Using Airborne Spectral Imagery. Remote Sens. 2020, 12, 2280.

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391.

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797.

- FAO. FAO–UNESCO Soil Map of the World. Revised Legend. In World Soil Resources Report; FAO: Rome, Italy, 1998; Volume 60.

- SenseFly Parrot Group. Parrot Sequoia Multispectral Camera. Available online: https://www.sensefly.com/camera/parrot-sequoia/ (accessed on 31 August 2020).

- Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation monitoring using multispectral sensors—Best practices and lessons learned from high latitudes. J. Unmanned Veh. Syst. 2019, 7, 54–75.

- Pix4D. Pix4D—Drone Mapping Software. Version 4.2. Available online: https://pix4d.com/ (accessed on 31 August 2020).

- Nobuyuki, K.; Hiroshi, T.; Xiufeng, W.; Rei, S. Crop classification using spectral indices derived from Sentinel-2A imagery. J. Inf. Telecommun. 2020, 4, 67–90.

- Cogato, A.; Pagay, V.; Marinello, F.; Meggio, F.; Grace, P.; De Antoni Migliorati, M. Assessing the Feasibility of Using Sentinel-2 Imagery to Quantify the Impact of Heatwaves on Irrigated Vineyards. Remote Sens. 2019, 11, 2869.

- Hawryło, P.; Bednarz, B.; Wezyk, P.; Szostak, M. Estimating defoliation of scots pine stands using machine learning methods and vegetation indices of Sentinel-2. Eur. J. Remote Sens. 2018, 51, 194–204.

- Zarco-Tejada, P.J.; Miller, J.R.; Mohammed, G.H.; Noland, T.L.; Sampson, P.H. Vegetation stress detection through Chlorophyll a + b estimation and fluorescence effects on hyperspectral imagery. J. Environ. Qual. 2002, 31, 1433–1441.

- Barry, K.M.; Stone, C.; Mohammed, C. Crown-scale evaluation of spectral indices for defoliated and discoloured eucalypts. Int. J. Remote Sens. 2008, 29, 47–69.

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

- Verbesselt, J.; Robinson, A.; Stone, C.; Culvenor, D. Forecasting tree mortality using change metrics from MODIS satellite data. For. Ecol. Manag. 2009, 258, 1166–1173.

- Datt, B. Remote sensing of chlorophyll a, chlorophyll b, chlorophyll a + b, and total carotenoid content in eucalyptus leaves. Remote Sens. Environ. 1998, 66, 111–121.

- Datt, B. Visible/near infrared reflectance and chlorophyll content in Eucalyptus leaves. Int. J. Remote Sens. 1999, 20, 2741–2759.

- Deng, X.; Guo, S.; Sun, L.; Chen, J. Identification of Short-Rotation Eucalyptus Plantation at Large Scale Using Multi-Satellite Imageries and Cloud Computing Platform. Remote Sens. 2020, 12, 2153.

- Gitelson, A.A.; Merzlyak, M.N. Signature analysis of leaf reflectance spectra: Algorithm development for remote sensing of chlorophyll. J. Plant Physiol. 1996, 148, 494–500.

- Olsson, P.O.; Jönsson, A.M.; Eklundh, L. A new invasive insect in Sweden—Physokermes inopinatus: Tracing forest damage with satellite based remote sensing. For. Ecol. Manag. 2012, 285, 29–37.

- Huete, A.R. A soil adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- QGIS Community. QGIS Geographic Information System. Open Source Geospatial Foundation Project. 2020. Available online: https://qgis.org (accessed on 31 August 2020).

- Richardson, A.J.; Wiegand, C.L. Distinguishing vegetation from background information. Photogramm. Eng. Remote Sens. 1977, 43, 1541–1552.

- Barnes, E.M.; Clarke, T.R.; Richards, S.E.; Colaizzi, P.D.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of Crop Water Stress, Nitrogen Status and Canopy Density Using Ground-Based Multispectral Data [CD Rom]. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Otsu, N. A Threshold Selection Method from Gray–Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Goncalves, H.; Corte-Real, L.; Goncalves, J.A. Automatic image registration through image segmentation and SIFT. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2589–2600.

- Goh, T.Y.; Basah, S.N.; Yazid, H.; Safar, M.J.A.; Saad, F.S.A. Performance analysis of image thresholding: Otsu technique. Measurement 2018, 114, 298–307.

- Van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T.; Scikit-image Contributors. Scikit-Image: Image processing in Python. PeerJ 2014, 2, e453.

- Van Rossum, G.; De Boer, J. Interactively testing remote servers using the Python programming language. CWI Q. 1991, 4, 283–303.

- Wulder, M.; Niemann, K.; Goodenough, D.G. Local maximum filtering for the extraction of tree locations and basal area from high spatial resolution imagery. Remote Sens. Environ. 2000, 73, 103–114.

- Wulder, M.A.; Niemann, K.O.; Goodenough, D.G. Error reduction methods for local maximum filtering of high spatial resolution imagery for locating trees. Can. J. Remote Sens. 2002, 28, 621–628.

- Wulder, M.A.; White, J.C.; Niemann, K.O.; Nelson, T. Comparison of airborne and satellite high spatial resolution data for the identification of individual trees with local maxima filtering. Int. J. Remote Sens. 2004, 25, 2225–2232.

- Wang, L.; Gong, P.; Biging, G.S. Individual Tree-Crown Delineation and Treetop Detection in High-Spatial-Resolution Aerial Imagery. Photogramm. Eng. Remote Sens. 2004, 70, 351–357.

- Crabbé, A.H.; Cahy, T.; Somers, B.; Verbeke, L.P.; Van Coillie, F. Tree Density Calculator Software Version 1.5.3, QGIS. 2020. Available online: https://bitbucket.org/kul-reseco/localmaxfilter (accessed on 31 August 2020).

- Chen, G.; Weng, Q.; Hay, G.J.; He, Y. Geographic object-based image analysis (GEOBIA): Emerging trends and future opportunities. GIScience Remote Sens. 2018, 55, 159–182.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; Van der Meer, F.; Van der Werff, H.; Van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Holloway, J.; Mengersen, K. Statistical machine learning methods and remote sensing for sustainable development goals: A review. Remote Sens. 2018, 10, 21.

- Murfitt, J.; He, Y.; Yang, J.; Mui, A.; De Mille, K. Ash decline assessment in emerald ash borer infested natural forests using high spatial resolution images. Remote Sens. 2016, 8, 256.

- Myint, S.W.; Gober, P.; Brazel, A.; Grossman-Clarke, S.; Weng, Q. Per-pixel vs. object-based classification of urban land cover extraction using high spatial resolution imagery. Remote Sens. Environ. 2011, 115, 1145–1161.

- Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52.

- De Luca, G.; Silva, J.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-Based Land Cover Classification of Cork Oak Woodlands using UAV Imagery and Orfeo ToolBox. Remote Sens. 2019, 11, 1238.

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Michel, J.; Youssefi, D.; Grizonnet, M. Stable Mean-Shift Algorithm and Its Application to the Segmentation of Arbitrarily Large Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 952–964.

- OTB Development Team. OTB CookBook Documentation; CNES: Paris, France, 2018; p. 305.

- Grizonnet, M.; Michel, J.; Poughon, V.; Inglada, J.; Savinaud, M.; Cresson, R. Orfeo ToolBox: Open source processing of remote sensing images. Open Geospat. Data Softw. Stand. 2017, 2, 15.

- Li, M.; Ma, L.; Blaschke, T.; Cheng, L.; Tiede, D. A systematic comparison of different object-based classification techniques using high spatial resolution imagery in agricultural environments. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 87–98.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cutler, D.R.; Edwards, T.C.; Beard, K.H.; Cutler, A.; Hess, K.T.; Gibson, J.; Lawler, J.J. Random Forests for Classification in Ecology. Ecology 2007, 88, 2783–2792.

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094.

- Immitzer, M.; Vuolo, F.; Atzberger, C. First experience with Sentinel-2 data for crop and tree species classifications in central Europe. Remote Sens. 2016, 8, 166.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press; Taylor & Francis Group: Boca Raton, FL, USA, 2019; ISBN 1498776663.

- Coleman, T.W.; Graves, A.D.; Heath, Z.; Flowers, R.W.; Hanavan, R.P.; Cluck, D.R.; Ryerson, D. Accuracy of aerial detection surveys for mapping insect and disease disturbances in the United States. For. Ecol. Manag. 2018, 430, 321–336.

- Landis, J.; Koch, G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

- Birch, C.; Oom, S.P.; Beecham, J.A. Rectangular and hexagonal grids used for observation, experiment and simulation in ecology. Ecol. Model. 2007, 206, 347–359.

- Carr, D.B.; Littlefield, R.J.; Nicholson, W.L.; Littlefield, J.S. Scatterplot Matrix Techniques for Large N. J. Am. Stat. Assoc. 1987, 82, 398.

- Carr, D.B.; Olsen, A.R.; White, D. Hexagon Mosaic Maps for Display of Univariate and Bivariate Geographical Data. Cartogr. Geogr. Inf. Sci. 1992, 19, 228–236.

- Rullan-Silva, C.D.; Olthoff, A.E.; Delgado de la Mata, J.A.; Pajares-Alonso, J.A. Remote Monitoring of Forest Insect Defoliation—A Review. For. Syst. 2013, 22, 377.

- Cardil, A.; Vepakomma, U.; Brotons, L. Assessing Pine Processionary Moth Defoliation Using Unmanned Aerial Systems. Forests 2017, 8, 402.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- Miura, H.; Midorikawa, S. Detection of Slope Failure Areas due to the 2004 Niigata–Ken Chuetsu Earthquake Using High–Resolution Satellite Images and Digital Elevation Model. J. Jpn. Assoc. Earthq. Eng. 2013, 7, 1–14.

- Barreto, L.; Ribeiro, M.; Veldkamp, A.; Van Eupen, M.; Kok, K.; Pontes, E. Exploring effective conservation networks based on multi-scale planning unit analysis: A case study of the Balsas sub-basin, Maranhão State, Brazil. J. Ecol. Indic. 2010, 10, 1055–1063.

- Amaral, Y.T.; Santos, E.M.D.; Ribeiro, M.C.; Barreto, L. Landscape structural analysis of the Lençóis Maranhenses national park: Implications for conservation. J. Nat. Conserv. 2019, 51, 125725.

| Flight Parameters | Specifications |
|---|---|
| Flight altitude (m) | 190 |
| Area (ha) | 55 |
| Start time | 12:03 (p.m.) |
| End time | 13:56 (p.m.) |
| Local solar noon | 12:47 (p.m.) |
| Cloud cover (%) | 10 |
| Frontal overlap (%) | 80 |
| Side overlap (%) | 80 |

| Vegetation Index | Equation | Reference |
|---|---|---|
| Difference Vegetation Index | DVI = NIR − Red | [49] |
| Green Normalized Difference Vegetation Index | GNDVI = (NIR − Green)/(NIR + Green) | [45] |
| Normalized Difference Red-Edge | NDRE = (NIR − RedEdge)/(NIR + RedEdge) | [50] |
| Normalized Difference Vegetation Index | NDVI = (NIR − Red)/(NIR + Red) | [51] |
| Soil Adjusted Vegetation Index | SAVI = 1.5 × (NIR − Red)/(NIR + Red + 0.5) | [47] |

NIR: near-infrared reflectance.
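The five equations in the table can be written out for a single pixel as follows. This is a minimal sketch in plain Python: the function name, the `soil_factor` parameter (the 0.5 that appears in the SAVI row), and the reflectance values are illustrative assumptions, not data from the study.

```python
def vegetation_indices(nir, red, green, red_edge, soil_factor=0.5):
    """Compute the five indices from band reflectances of one pixel.

    Assumes every denominator is non-zero; a raster workflow would
    need to mask pixels where that does not hold.
    """
    return {
        "DVI": nir - red,
        "GNDVI": (nir - green) / (nir + green),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "NDVI": (nir - red) / (nir + red),
        # With soil_factor = 0.5 this is 1.5 * (NIR - Red) / (NIR + Red + 0.5).
        "SAVI": (1 + soil_factor) * (nir - red) / (nir + red + soil_factor),
    }


# Hypothetical reflectances for one canopy pixel (not measured values).
pixel = vegetation_indices(nir=0.50, red=0.05, green=0.08, red_edge=0.30)
for name, value in pixel.items():
    print(f"{name}: {value:.3f}")
```

Note that the parentheses matter: the normalized indices divide the whole difference by the whole sum, which keeps them within −1 to 1 for non-negative reflectances.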

| Tree Status | Precision (%) | Recall (%) | F-Score (%) | Kappa Value |
|---|---|---|---|---|
| Dead | 100.0 | 94.4 | 97.1 | 0.96 |
| Healthy | 98.3 | 100.0 | 99.1 | |
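The F-score column is consistent with the harmonic mean of precision and recall. A short check, assuming that standard definition (the function name is illustrative):

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall, in the units of the inputs."""
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) taken from the table above.
dead_f = f_score(100.0, 94.4)
healthy_f = f_score(98.3, 100.0)
print(round(dead_f, 1), round(healthy_f, 1))  # 97.1 99.1
```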

| Vegetation Indices (VI) | Imagery Interval Values | Histogram Threshold |
|---|---|---|
| DVI | 0.020–1.150 | 0.394 |
| NDVI | 0.150–0.930 | 0.712 |
| GNDVI | 0.248–0.890 | 0.703 |
| NDRE | −0.339–0.660 | 0.215 |
| SAVI | 0.057–0.983 | 0.526 |
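To illustrate how a histogram threshold of this kind can be derived, the sketch below implements Otsu's method in plain Python: it bins the index values and picks the bin boundary that maximizes the between-class variance. The function name, the bin count, and the sample values are assumptions for illustration only; they are not the study's data or code. On real rasters, `skimage.filters.threshold_otsu` from scikit-image (listed in the references) would be the more practical choice.

```python
def otsu_threshold(values, bins=256):
    """Return the value that best separates `values` into two classes."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo  # a constant image has no meaningful split
    width = (hi - lo) / bins

    # Histogram of the index values; the maximum falls in the last bin.
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1

    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(c * h for c, h in zip(centers, hist))

    best_var, best_idx = -1.0, 0
    count_low, sum_low = 0, 0.0
    for i in range(bins - 1):
        count_low += hist[i]
        sum_low += centers[i] * hist[i]
        count_high = total - count_low
        if count_low == 0 or count_high == 0:
            continue
        mean_low = sum_low / count_low
        mean_high = (sum_all - sum_low) / count_high
        # Between-class variance, up to a constant factor of 1 / total**2.
        var_between = count_low * count_high * (mean_low - mean_high) ** 2
        if var_between > best_var:
            best_var, best_idx = var_between, i

    # Upper edge of the last bin assigned to the lower class.
    return lo + (best_idx + 1) * width


# Hypothetical NDVI-like samples: a low cluster and a high cluster.
dead = [0.30 + 0.01 * i for i in range(10)]
healthy = [0.80 + 0.01 * i for i in range(10)]
threshold = otsu_threshold(dead + healthy)
print(f"threshold = {threshold:.3f}")
```

Assuming lower index values correspond to dead crowns, pixels below the returned threshold would be labelled dead and the rest healthy.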

| Spectral Index | Tree Status | User’s Accuracy (%) | Producer’s Accuracy (%) | Overall Accuracy (%) | Kappa Value |
|---|---|---|---|---|---|
| NDVI | Dead | 100.00 | 96.74 | 98.2 | 0.96 |
| | Healthy | 96.13 | 100.00 | | |
| NDRE | Dead | 95.51 | 79.07 | 86.4 | 0.73 |
| | Healthy | 78.67 | 95.40 | | |
| DVI | Dead | 99.51 | 95.35 | 97.2 | 0.94 |
| | Healthy | 94.54 | 99.43 | | |
| GNDVI | Dead | 100.00 | 95.35 | 97.4 | 0.95 |
| | Healthy | 94.57 | 100.00 | | |
| SAVI | Dead | 99.50 | 93.49 | 96.1 | 0.92 |
| | Healthy | 92.51 | 99.43 | | |

| Observed Class | Predicted Healthy | Predicted Dead | Total | Producer’s Accuracy (%) |
|---|---|---|---|---|
| Healthy | 430 | 7 | 437 | 98.4 |
| Dead | 1 | 79 | 80 | 98.8 |
| Total | 431 | 86 | 517 | - |
| User’s Accuracy (%) | 99.8 | 91.9 | - | 98.5 (overall accuracy) |
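The accuracy figures in this confusion matrix can be recomputed from its four cell counts. The sketch below assumes the usual definitions (producer's accuracy per observed row, user's accuracy per predicted column, Cohen's kappa from the marginal totals); the function and variable names are illustrative.

```python
def accuracy_metrics(matrix):
    """Accuracy measures for a square confusion matrix.

    Rows are observed classes, columns are predicted classes.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    producers = [diagonal[i] / row_totals[i] for i in range(n)]
    users = [diagonal[j] / col_totals[j] for j in range(n)]
    overall = sum(diagonal) / total

    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (overall - expected) / (1 - expected)
    return producers, users, overall, kappa


# Cell counts from the table: observed (healthy, dead) x predicted (healthy, dead).
matrix = [[430, 7], [1, 79]]
producers, users, overall, kappa = accuracy_metrics(matrix)
print([round(100 * p, 1) for p in producers])
print([round(100 * u, 1) for u in users])
print(round(100 * overall, 1), round(kappa, 2))
```

With these counts the sketch gives producer's accuracies of about 98.4% and 98.8%, user's accuracies of about 99.8% and 91.9%, and an overall accuracy of about 98.5%, matching the table. The kappa of roughly 0.94 is computed here and is not reported in the table.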

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Duarte, A.; Acevedo-Muñoz, L.; Gonçalves, C.I.; Mota, L.; Sarmento, A.; Silva, M.; Fabres, S.; Borralho, N.; Valente, C. Detection of Longhorned Borer Attack and Assessment in Eucalyptus Plantations Using UAV Imagery. Remote Sens. 2020, 12, 3153. https://doi.org/10.3390/rs12193153
