Fusion of Spectral and Structural Information from Aerial Images for Improved Biomass Estimation

Abstract

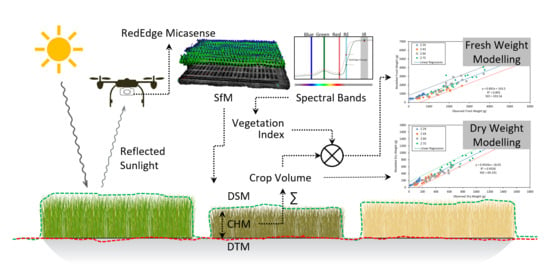

1. Introduction

2. Materials and Methods

2.1. Field Experiment

2.2. Aerial Data Acquisition System and Field Data Collection

2.3. Reflectance Orthomosaic, Digital Surface Model and Digital Terrain Model

2.4. Image Processing and Data Analysis

2.4.1. Vegetation Indices (VIs)

2.4.2. Crop Height Model (CHM)

2.4.3. Crop Coverage (CC)

2.4.4. Crop Volume (CV) and Dry Weight (DW) Modelling

2.4.5. Crop Volume Multiplication with Vegetation Indices (CV×VIs) and Fresh Weight (FW) Modelling

2.4.6. Plot Level Data Analytics

3. Results

3.1. Crop Height Model

3.2. Crop Coverage

3.3. Dry Weight Modelling

3.4. Fresh Weight Modelling

3.5. Genotypic Ranking Using Ground Measurements and UAV-Based Measurements

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Wang, J.; Zhao, C.; Huang, W. Fundamental and Application of Quantitative Remote Sensing in Agriculture; Science China Press: Beijing, China, 2008.

- Huang, J.X.; Sedano, F.; Huang, Y.B.; Ma, H.Y.; Li, X.L.; Liang, S.L.; Tian, L.Y.; Zhang, X.D.; Fan, J.L.; Wu, W.B. Assimilating a synthetic Kalman filter leaf area index series into the WOFOST model to improve regional winter wheat yield estimation. Agric. For. Meteorol. 2016, 216, 188–202.

- Tilly, N.; Aasen, H.; Bareth, G. Fusion of plant height and vegetation indices for the estimation of barley biomass. Remote Sens. 2015, 7, 11449–11480.

- Chen, P.F.; Nicolas, T.; Wang, J.H.; Philippe, V.; Huang, W.J.; Li, B.G. [New index for crop canopy fresh biomass estimation]. Guang Pu Xue Yu Guang Pu Fen Xi 2010, 30, 512–517.

- Jin, X.L.; Li, Z.H.; Yang, G.J.; Yang, H.; Feng, H.K.; Xu, X.G.; Wang, J.H.; Li, X.C.; Luo, J.H. Winter wheat yield estimation based on multi-source medium resolution optical and radar imaging data and the AquaCrop model using the particle swarm optimization algorithm. ISPRS J. Photogramm. Remote Sens. 2017, 126, 24–37.

- White, J.W.; Andrade-Sanchez, P.; Gore, M.A.; Bronson, K.F.; Coffelt, T.A.; Conley, M.M.; Feldmann, K.A.; French, A.N.; Heun, J.T.; Hunsaker, D.J.; et al. Field-based phenomics for plant genetics research. Field Crops Res. 2012, 133, 101–112.

- Banerjee, B.P.; Raval, S.; Cullen, P.J. UAV-hyperspectral imaging of spectrally complex environments. Int. J. Remote Sens. 2020, 41, 4136–4159.

- Féret, J.B.; Gitelson, A.A.; Noble, S.D.; Jacquemoud, S. PROSPECT-D: Towards modeling leaf optical properties through a complete lifecycle. Remote Sens. Environ. 2017, 193, 204–215.

- Jacquemoud, S.; Verhoef, W.; Baret, F.; Bacour, C.; Zarco-Tejada, P.J.; Asner, G.P.; Francois, C.; Ustin, S.L. PROSPECT plus SAIL models: A review of use for vegetation characterization. Remote Sens. Environ. 2009, 113, S56–S66.

- Rouse, J.; Haas, R.; Schell, J.; Deering, D.; Harlan, J. Monitoring the Vernal Advancement and Retrogradation of Natural Vegetation; Type III, Final Report; NASA/GSFC: Greenbelt, MD, USA, 1974; p. 371.

- Fern, R.R.; Foxley, E.A.; Bruno, A.; Morrison, M.L. Suitability of NDVI and OSAVI as estimators of green biomass and coverage in a semi-arid rangeland. Ecol. Indic. 2018, 94, 16–21.

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A Modified Soil Adjusted Vegetation Index. Remote Sens. Environ. 1994, 48, 119–126.

- Geipel, J.; Link, J.; Wirwahn, J.A.; Claupein, W. A Programmable Aerial Multispectral Camera System for In-Season Crop Biomass and Nitrogen Content Estimation. Agriculture 2016, 6, 4.

- Gnyp, M.L.; Miao, Y.X.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.K.; Huang, S.Y.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crops Res. 2014, 155, 42–55.

- Gnyp, M.L.; Yu, K.; Aasen, H.; Yao, Y.; Huang, S.; Miao, Y.; Bareth, G. Analysis of Crop Reflectance for Estimating Biomass in Rice Canopies at Different Phenological Stages, Reflexionsanalyse zur Abschätzung der Biomasse von Reis in unterschiedlichen phänologischen Stadien. Photogramm.-Fernerkund.-Geoinf. 2013, 2013, 351–365.

- Koppe, W.; Li, F.; Gnyp, M.L.; Miao, Y.X.; Jia, L.L.; Chen, X.P.; Zhang, F.S.; Bareth, G. Evaluating Multispectral and Hyperspectral Satellite Remote Sensing Data for Estimating Winter Wheat Growth Parameters at Regional Scale in the North China Plain. Photogramm. Fernerkund. Geoinf. 2010, 2012, 167–178.

- Mutanga, O.; Adam, E.; Cho, M.A. High density biomass estimation for wetland vegetation using WorldView-2 imagery and random forest regression algorithm. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 399–406.

- Roth, L.; Streit, B. Predicting cover crop biomass by lightweight UAS-based RGB and NIR photography: An applied photogrammetric approach. Precis. Agric. 2018, 19, 93–114.

- Atzberger, C.; Darvishzadeh, R.; Immitzer, M.; Schlerf, M.; Skidmore, A.; le Maire, G. Comparative analysis of different retrieval methods for mapping grassland leaf area index using airborne imaging spectroscopy. Int. J. Appl. Earth Obs. Geoinf. 2015, 43, 19–31.

- Yue, J.B.; Feng, H.K.; Yang, G.J.; Li, Z.H. A Comparison of Regression Techniques for Estimation of Above-Ground Winter Wheat Biomass Using Near-Surface Spectroscopy. Remote Sens. 2018, 10, 66.

- Atzberger, C.; Guerif, M.; Baret, F.; Werner, W. Comparative analysis of three chemometric techniques for the spectroradiometric assessment of canopy chlorophyll content in winter wheat. Comput. Electron. Agric. 2010, 73, 165–173.

- Berger, K.; Atzberger, C.; Danner, M.; D’Urso, G.; Mauser, W.; Vuolo, F.; Hank, T. Evaluation of the PROSAIL Model Capabilities for Future Hyperspectral Model Environments: A Review Study. Remote Sens. 2018, 10, 85.

- Lu, D.S. The potential and challenge of remote sensing-based biomass estimation. Int. J. Remote Sens. 2006, 27, 1297–1328.

- Marshall, M.; Thenkabail, P. Developing in situ Non-Destructive Estimates of Crop Biomass to Address Issues of Scale in Remote Sensing. Remote Sens. 2015, 7, 808–835.

- Ali, I.; Greifeneder, F.; Stamenkovic, J.; Neumann, M.; Notarnicola, C. Review of Machine Learning Approaches for Biomass and Soil Moisture Retrievals from Remote Sensing Data. Remote Sens. 2015, 7, 16398–16421.

- Sun, H.; Li, M.Z.; Zhao, Y.; Zhang, Y.E.; Wang, X.M.; Li, X.H. The Spectral Characteristics and Chlorophyll Content at Winter Wheat Growth Stages. Spectrosc. Spectr. Anal. 2010, 30, 192–196.

- Fu, Y.Y.; Yang, G.J.; Wang, J.H.; Song, X.Y.; Feng, H.K. Winter wheat biomass estimation based on spectral indices, band depth analysis and partial least squares regression using hyperspectral measurements. Comput. Electron. Agric. 2014, 100, 51–59.

- Nguy-Robertson, A.; Gitelson, A.; Peng, Y.; Vina, A.; Arkebauer, T.; Rundquist, D. Green Leaf Area Index Estimation in Maize and Soybean: Combining Vegetation Indices to Achieve Maximal Sensitivity. Agron. J. 2012, 104, 1336–1347.

- Gnyp, M.; Miao, Y.; Yuan, F.; Yu, K.; Yao, Y.; Huang, S.; Bareth, G. Derivative analysis to improve rice biomass estimation at early growth stages. In Proceedings of the Workshop on UAV-Based Remote Sensing Methods for Monitoring Vegetation, University of Cologne, Regional Computing Centre (RRZK), Germany, 14 April 2014; pp. 43–50.

- Gnyp, M.L.; Bareth, G.; Li, F.; Lenz-Wiedemann, V.I.S.; Koppe, W.G.; Miao, Y.X.; Hennig, S.D.; Jia, L.L.; Laudien, R.; Chen, X.P.; et al. Development and implementation of a multiscale biomass model using hyperspectral vegetation indices for winter wheat in the North China Plain. Int. J. Appl. Earth Obs. Geoinf. 2014, 33, 232–242.

- Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.

- Smith, K.L.; Steven, M.D.; Colls, J.J. Use of hyperspectral derivative ratios in the red-edge region to identify plant stress responses to gas leaks. Remote Sens. Environ. 2004, 92, 207–217.

- Tsai, F.; Philpot, W. Derivative analysis of hyperspectral data. Remote Sens. Environ. 1998, 66, 41–51.

- Bareth, G.; Bendig, J.; Tilly, N.; Hoffmeister, D.; Aasen, H.; Bolten, A. A Comparison of UAV- and TLS-derived Plant Height for Crop Monitoring: Using Polygon Grids for the Analysis of Crop Surface Models (CSMs). Photogramm. Fernerkund. Geoinf. 2016, 2016, 85–94.

- Madec, S.; Baret, F.; de Solan, B.; Thomas, S.; Dutartre, D.; Jezequel, S.; Hemmerle, M.; Colombeau, G.; Comar, A. High-Throughput Phenotyping of Plant Height: Comparing Unmanned Aerial Vehicles and Ground LiDAR Estimates. Front. Plant Sci. 2017, 8, 2002.

- Tilly, N.; Hoffmeister, D.; Cao, Q.; Huang, S.Y.; Lenz-Wiedemann, V.; Miao, Y.X.; Bareth, G. Multitemporal crop surface models: Accurate plant height measurement and biomass estimation with terrestrial laser scanning in paddy rice. J. Appl. Remote Sens. 2014, 8, 083671.

- Jin, X.L.; Yang, G.J.; Xu, X.G.; Yang, H.; Feng, H.K.; Li, Z.H.; Shen, J.X.; Zhao, C.J.; Lan, Y.B. Combined Multi-Temporal Optical and Radar Parameters for Estimating LAI and Biomass in Winter Wheat Using HJ and RADARSAR-2 Data. Remote Sens. 2015, 7, 13251–13272.

- Koppe, W.; Gnyp, M.L.; Hennig, S.D.; Li, F.; Miao, Y.X.; Chen, X.P.; Jia, L.L.; Bareth, G. Multi-Temporal Hyperspectral and Radar Remote Sensing for Estimating Winter Wheat Biomass in the North China Plain. Photogramm. Fernerkund. Geoinf. 2012, 2012, 281–298.

- Sadeghi, Y.; St-Onge, B.; Leblon, B.; Prieur, J.F.; Simard, M. Mapping boreal forest biomass from a SRTM and TanDEM-X based on canopy height model and Landsat spectral indices. Int. J. Appl. Earth Obs. Geoinf. 2018, 68, 202–213.

- Santi, E.; Paloscia, S.; Pettinato, S.; Fontanelli, G.; Mura, M.; Zolli, C.; Maselli, F.; Chiesi, M.; Bottai, L.; Chirici, G. The potential of multifrequency SAR images for estimating forest biomass in Mediterranean areas. Remote Sens. Environ. 2017, 200, 63–73.

- Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Li, W.; Niu, Z.; Chen, H.Y.; Li, D.; Wu, M.Q.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

- Yue, J.B.; Yang, G.J.; Tian, Q.J.; Feng, H.K.; Xu, K.J.; Zhou, C.Q. Estimate of winter-wheat above-ground biomass based on UAV ultrahigh-ground-resolution image textures and vegetation indices. ISPRS J. Photogramm. Remote Sens. 2019, 150, 226–244.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Gil-Docampo, M.L.; Arza-García, M.; Ortiz-Sanz, J.; Martínez-Rodríguez, S.; Marcos-Robles, J.L.; Sánchez-Sastre, L.F. Above-ground biomass estimation of arable crops using UAV-based SfM photogrammetry. Geocarto Int. 2019, 35, 687–699.

- Zhu, W.X.; Sun, Z.G.; Peng, J.B.; Huang, Y.H.; Li, J.; Zhang, J.Q.; Yang, B.; Liao, X.H. Estimating Maize Above-Ground Biomass Using 3D Point Clouds of Multi-Source Unmanned Aerial Vehicle Data at Multi-Spatial Scales. Remote Sens. 2019, 11, 2678.

- Haghighattalab, A.; Gonzalez Perez, L.; Mondal, S.; Singh, D.; Schinstock, D.; Rutkoski, J.; Ortiz-Monasterio, I.; Singh, R.P.; Goodin, D.; Poland, J. Application of unmanned aerial systems for high throughput phenotyping of large wheat breeding nurseries. Plant Methods 2016, 12, 35.

- Roth, L.; Aasen, H.; Walter, A.; Liebisch, F. Extracting leaf area index using viewing geometry effects-A new perspective on high-resolution unmanned aerial system photography. ISPRS J. Photogramm. Remote Sens. 2018, 141, 161–175.

- Karunaratne, S.; Thomson, A.; Morse-McNabb, E.; Wijesingha, J.; Stayches, D.; Copland, A.; Jacobs, J. The Fusion of Spectral and Structural Datasets Derived from an Airborne Multispectral Sensor for Estimation of Pasture Dry Matter Yield at Paddock Scale with Time. Remote Sens. 2020, 12, 2017.

- Poley, L.G.; Laskin, D.N.; McDermid, G.J. Quantifying Aboveground Biomass of Shrubs Using Spectral and Structural Metrics Derived from UAS Imagery. Remote Sens. 2020, 12, 2199.

- Wang, C.Y.; Myint, S.W. A Simplified Empirical Line Method of Radiometric Calibration for Small Unmanned Aircraft Systems-Based Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1876–1885.

- Duffy, J.P.; Cunliffe, A.M.; DeBell, L.; Sandbrook, C.; Wich, S.A.; Shutler, J.D.; Myers-Smith, I.H.; Varela, M.R.; Anderson, K. Location, location, location: Considerations when using lightweight drones in challenging environments. Remote Sens. Ecol. Conserv. 2018, 4, 7–19.

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.

- Turner, D.; Lucieer, A.; Malenovsky, Z.; King, D.H.; Robinson, S.A. Spatial Co-Registration of Ultra-High Resolution Visible, Multispectral and Thermal Images Acquired with a Micro-UAV over Antarctic Moss Beds. Remote Sens. 2014, 6, 4003–4024.

- Crusiol, L.G.T.; Nanni, M.R.; Silva, G.F.C.; Furlanetto, R.H.; Gualberto, A.A.D.; Gasparotto, A.D.; De Paula, M.N. Semi professional digital camera calibration techniques for Vis/NIR spectral data acquisition from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2717–2736.

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14.

- Hakala, T.; Honkavaara, E.; Saari, H.; Mäkynen, J.; Kaivosoja, J.; Pesonen, L.; Pölönen, I. Spectral imaging from UAVs under varying illumination conditions. In Proceedings of the UAV-g 2013, Rostock, Germany, 4–6 September 2013; pp. 189–194.

- Li, H.W.; Zhang, H.; Zhang, B.; Chen, Z.C.; Yang, M.H.; Zhang, Y.Q. A Method Suitable for Vicarious Calibration of a UAV Hyperspectral Remote Sensor. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3209–3223.

- Assmann, J.J.; Kerby, J.T.; Cunliffe, A.M.; Myers-Smith, I.H. Vegetation monitoring using multispectral sensors-Best practices and lessons learned from high latitudes. J. Unmanned Veh. Syst. 2019, 7, 54–75.

- Kelcey, J.; Lucieer, A. Sensor correction of a 6-band multispectral imaging sensor for UAV remote sensing. Remote Sens. 2012, 4, 1462–1493.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Harwin, S.; Lucieer, A. Assessing the Accuracy of Georeferenced Point Clouds Produced via Multi-View Stereopsis from Unmanned Aerial Vehicle (UAV) Imagery. Remote Sens. 2012, 4, 1573–1599.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Banerjee, B.P.; Raval, S.A.; Cullen, P.J. Alignment of UAV-hyperspectral bands using keypoint descriptors in a spectrally complex environment. Remote Sens. Lett. 2018, 9, 524–533.

- Bartier, P.M.; Keller, C.P. Multivariate interpolation to incorporate thematic surface data using inverse distance weighting (IDW). Comput. Geosci. 1996, 22, 795–799.

- Blackburn, G.A. Quantifying chlorophylls and caroteniods at leaf and canopy scales: An evaluation of some hyperspectral approaches. Remote Sens. Environ. 1998, 66, 273–285.

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral vegetation indices and their relationships with agricultural crop characteristics. Remote Sens. Environ. 2000, 71, 158–182.

- Caicedo, J.P.R.; Verrelst, J.; Munoz-Mari, J.; Moreno, J.; Camps-Valls, G. Toward a Semiautomatic Machine Learning Retrieval of Biophysical Parameters. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1249–1259.

- Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

- Gitelson, A.A.; Merzlyak, M.N. Remote sensing of chlorophyll concentration in higher plant leaves. Synerg. Use Multisens. Data Land Process. 1998, 22, 689–692.

- Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000.

- Roujean, J.L.; Breon, F.M. Estimating Par Absorbed by Vegetation from Bidirectional Reflectance Measurements. Remote Sens. Environ. 1995, 51, 375–384.

- Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 2014, 22, 229–242.

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

- Klouček, T.; Moravec, D.; Komárek, J.; Lagner, O.; Štych, P. Selecting appropriate variables for detecting grassland to cropland changes using high resolution satellite data. PeerJ 2018, 6, e5487.

- Kasser, M.; Egels, Y. Digital Photogrammetry; Taylor & Francis: London, UK; New York, NY, USA, 2002.

- Popescu, S.C.; Wynne, R.H. Seeing the trees in the forest: Using lidar and multispectral data fusion with local filtering and variable window size for estimating tree height. Photogramm. Eng. Remote Sens. 2004, 70, 589–604.

- Popescu, S.C.; Wynne, R.H.; Nelson, R.F. Estimating plot-level tree heights with lidar: Local filtering with a canopy-height based variable window size. Comput. Electron. Agric. 2002, 37, 71–95.

- Liu, J.; Chen, P.; Xu, X. Estimating wheat coverage using multispectral images collected by unmanned aerial vehicles and a new sensor. In Proceedings of the 2018 7th International Conference on Agro-geoinformatics, Hangzhou, China, 6–9 August 2018; pp. 1–5.

- Torres-Sánchez, J.; Peña, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

- Niu, Y.; Zhang, L.; Han, W.; Shao, G. Fractional Vegetation Cover Extraction Method of Winter Wheat Based on UAV Remote Sensing and Vegetation Index. Nongye Jixie Xuebao/Trans. Chin. Soc. Agric. Mach. 2018, 49, 212–221.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Congalton, R.G. A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data. Remote Sens. Environ. 1991, 37, 35–46.

- Sankaran, S.; Zhou, J.F.; Khot, L.R.; Trapp, J.J.; Mndolwa, E.; Miklas, P.N. High-throughput field phenotyping in dry bean using small unmanned aerial vehicle based multispectral imagery. Comput. Electron. Agric. 2018, 151, 84–92.

- Pradhan, S.; Bandyopadhyay, K.K.; Sahoo, R.N.; Sehgal, V.K.; Singh, R.; Gupta, V.K.; Joshi, D.K. Predicting Wheat Grain and Biomass Yield Using Canopy Reflectance of Booting Stage. J. Indian Soc. Remote Sens. 2014, 42, 711–718.

- Kross, A.; McNairn, H.; Lapen, D.; Sunohara, M.; Champagne, C. Assessment of RapidEye vegetation indices for estimation of leaf area index and biomass in corn and soybean crops. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 235–248.

- Furbank, R.T.; Tester, M. Phenomics--technologies to relieve the phenotyping bottleneck. Trends Plant Sci. 2011, 16, 635–644.

- Yendrek, C.R.; Tomaz, T.; Montes, C.M.; Cao, Y.; Morse, A.M.; Brown, P.J.; McIntyre, L.M.; Leakey, A.D.; Ainsworth, E.A. High-Throughput Phenotyping of Maize Leaf Physiological and Biochemical Traits Using Hyperspectral Reflectance. Plant Physiol. 2017, 173, 614–626.

- Ma, Y.; Fang, S.H.; Peng, Y.; Gong, Y.; Wang, D. Remote Estimation of Biomass in Winter Oilseed Rape (Brassica napus L.) Using Canopy Hyperspectral Data at Different Growth Stages. Appl. Sci. 2019, 9, 545.

- Pérez-Harguindeguy, N.; Díaz, S.; Garnier, E.; Lavorel, S.; Poorter, H.; Jaureguiberry, P.; Bret-Harte, M.S.; Cornwell, W.K.; Craine, J.M.; Gurvich, D.E.; et al. New handbook for standardised measurement of plant functional traits worldwide. Aust. J. Bot. 2013, 61, 167–234.

- Baltsavias, E.; Gruen, A.; Eisenbeiss, H.; Zhang, L.; Waser, L.T. High-quality image matching and automated generation of 3D tree models. Int. J. Remote Sens. 2008, 29, 1243–1259.

- White, J.C.; Wulder, M.A.; Vastaranta, M.; Coops, N.C.; Pitt, D.; Woods, M. The Utility of Image-Based Point Clouds for Forest Inventory: A Comparison with Airborne Laser Scanning. Forests 2013, 4, 518–536.

- Järnstedt, J.; Pekkarinen, A.; Tuominen, S.; Ginzler, C.; Holopainen, M.; Viitala, R. Forest variable estimation using a high-resolution digital surface model. ISPRS J. Photogramm. Remote Sens. 2012, 74, 78–84.

- Kipp, S.; Mistele, B.; Baresel, P.; Schmidhalter, U. High-throughput phenotyping early plant vigour of winter wheat. Eur. J. Agron. 2014, 52, 271–278.

- Reddersen, B.; Fricke, T.; Wachendorf, M. A multi-sensor approach for predicting biomass of extensively managed grassland. Comput. Electron. Agric. 2014, 109, 247–260.

- Ferrio, J.P.; Villegas, D.; Zarco, J.; Aparicio, N.; Araus, J.L.; Royo, C. Assessment of durum wheat yield using visible and near-infrared reflectance spectra of canopies. Field Crops Res. 2005, 94, 126–148.

- Gao, S.; Niu, Z.; Huang, N.; Hou, X.H. Estimating the Leaf Area Index, height and biomass of maize using HJ-1 and RADARSAT-2. Int. J. Appl. Earth Obs. Geoinf. 2013, 24, 1–8.

| Indices | Equation | Reference |
|---|---|---|
| Normalized difference vegetation index | | [10] |
| Enhanced vegetation index | | [71] |
| Green normalized difference vegetation index | | [72] |
| Normalized difference red-edge index | | [73] |
| Renormalized difference vegetation index | | [74] |
| Optimized soil adjusted vegetation index | | [12] |
| Modified simple ratio | | [75] |
| Modified chlorophyll absorption ratio index 1 | | [76] |
| Modified chlorophyll absorption ratio index 2 | | [76] |
| Modified triangular vegetation index 1 | | [76] |
| Modified triangular vegetation index 2 | | [76] |
| Pigment specific simple ratio for chlorophyll a | | [68] |
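
As a minimal sketch, the indices listed in the table above can be computed from per-band reflectance using their commonly published broadband formulations. The band centres and coefficients used in this study are not given in this text, so the forms below are assumptions rather than the authors' exact equations: the EVI coefficients follow the MODIS convention, OSAVI is written without the optional 1.16 scaling factor, and the narrowband wavelengths in the cited definitions are mapped onto broad blue, green, red, red-edge and near-infrared bands. The function name `vegetation_indices` and the sample reflectances are hypothetical.

```python
import math


def vegetation_indices(blue, green, red, red_edge, nir):
    """Return the twelve tabulated indices for one pixel or plot.

    Inputs are surface reflectances in the range 0-1; red and the band sums
    used as denominators must be non-zero.
    """
    # Denominator shared by MCARI2 and MTVI2 in the commonly cited form.
    soil_term = math.sqrt((2 * nir + 1) ** 2 - (6 * nir - 5 * math.sqrt(red)) - 0.5)
    simple_ratio = nir / red
    return {
        "NDVI": (nir - red) / (nir + red),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "GNDVI": (nir - green) / (nir + green),
        "NDRE": (nir - red_edge) / (nir + red_edge),
        "RDVI": (nir - red) / math.sqrt(nir + red),
        "OSAVI": (nir - red) / (nir + red + 0.16),
        "MSR": (simple_ratio - 1) / (math.sqrt(simple_ratio) + 1),
        "MCARI1": 1.2 * (2.5 * (nir - red) - 1.3 * (nir - green)),
        "MCARI2": 1.5 * (2.5 * (nir - red) - 1.3 * (nir - green)) / soil_term,
        "MTVI1": 1.2 * (1.2 * (nir - green) - 2.5 * (red - green)),
        "MTVI2": 1.5 * (1.2 * (nir - green) - 2.5 * (red - green)) / soil_term,
        "PSSRa": simple_ratio,
    }


# Hypothetical reflectances for a dense green canopy.
indices = vegetation_indices(blue=0.04, green=0.08, red=0.05, red_edge=0.25, nir=0.45)
for name, value in indices.items():
    print(f"{name}: {value:.4f}")
```

In these forms MCARI1 and MTVI1 expand to the same expression (1.2·NIR − 2.5·R + 1.3·G, scaled by 1.2), so they return identical values; the same holds for MCARI2 and MTVI2. For raster data the same arithmetic can be applied band-wise to arrays instead of scalars.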

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Banerjee, B.P.; Spangenberg, G.; Kant, S. Fusion of Spectral and Structural Information from Aerial Images for Improved Biomass Estimation. Remote Sens. 2020, 12, 3164. https://doi.org/10.3390/rs12193164
