1. Introduction

The construction process mainly includes construction preparation, project construction, and project acceptance. Lofting is part of both project construction and project acceptance: it is the process of interpreting construction plans and marking the locations of points or axes on site. Lofting ensures that a project is built according to the engineering design plans [1,2], and it is an essential, fundamental link in building construction that runs through the whole construction project.

Lofting quality is closely tied to a project's efficiency and cost. In the early stages, limited by the available lofting tools and methods, several operators had to invest considerable time and effort in lofting and calibration at the construction site. In the past decade, with the rapid development of the surveying and mapping industry, the appearance of the total station has greatly improved lofting efficiency. As an instrument that integrates the measurement of horizontal angle, vertical angle, distance, and elevation difference, it can be applied in almost all surveying fields and is easy to operate [3,4]. However, the harsh environments of most construction sites, such as tunnels, bridges, and high-rise buildings, together with severe weather, have a non-negligible influence on the operators and on the construction period. In recent years, robot technology has been continuously developed and widely used in agriculture and forestry monitoring [5,6,7], industrial measurement and control [8,9], indoor monitoring [10,11], and other fields. Many researchers apply unmanned aerial vehicles, unmanned ground robots, underwater robots, and similar platforms to measurement and control.

However, ground mobile robots are still rarely used in lofting, and “unmanned lofting” is not easy to realize. First, lofting requires high precision, whereas many rubber-wheel robots have low flexibility, which limits localization accuracy. Second, although the Global Positioning System-Real Time Kinematic (GPS-RTK) lofting method is convenient [12], GPS signals are strongly attenuated indoors, so this method cannot be used in indoor environments. Third, most indoor lofting environments have few or repetitive features, which makes feature-matching-based methods unsuitable for the special environment of lofting [13,14].

In the field of surveying and mapping, lofting instruments and methods are continuously being updated. At present, the most convenient instruments are the GPS-RTK lofting instrument and the Building Information Modeling (BIM) laser lofting instrument; the former is not suitable for indoor environments. The BIM laser lofting instrument combines a BIM model with a laser localization system. Unlike the total station, it can be operated by a single person, which greatly reduces manpower. It locks onto the prism with its laser localization system to obtain the three-dimensional coordinates of the prism on the construction site, while BIM provides the instrument with an independent coordinate system and the geometric information needed for lofting [15]. Workers can record lofting errors against the model, and they only need to establish a coordinate system that matches the actual project during the modeling stage, without complicated coordinate conversion. During lofting, only one worker is needed to hold a prism and walk around the construction site, finding the points to be lofted by following the instructions on the Personal Digital Assistant (PDA). Many companies, such as Topcon (Japan), Leica (Switzerland), and Trimble (USA), already mass-produce this kind of instrument. In this paper, an intelligent lofting system is designed around this kind of instrument, and an autonomous mobile ground robot is built to replace the human operator. The instrument used is the Topcon LN-100 BIM laser lofting instrument.

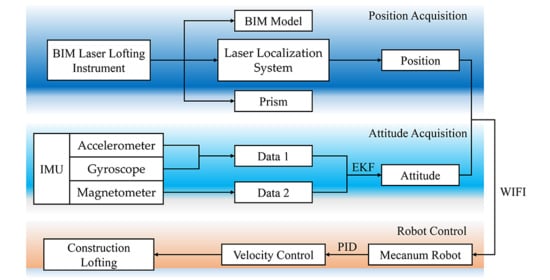

Therefore, this paper builds a robot platform using Mecanum wheels instead of traditional rubber wheels. We also combine a Micro Electro Mechanical Systems-Inertial Measurement Unit (MEMS-IMU, including an accelerometer and a gyroscope), a magnetometer, wheel encoders, the BIM laser lofting instrument, and other sensors to estimate the state of the ground robot. The wheel encoders are used to regulate the velocity of the robot. The BIM laser lofting instrument localizes the robot and sends the position to the embedded STM32F103 series processor mounted on the robot through WIFI and serial communication. The MEMS-IMU and the magnetometer both provide the attitude of the robot, and the Extended Kalman Filter (EKF) algorithm fuses these data to estimate a high-precision attitude. The STM32F103 series processor processes the data in real time and controls the robot to move autonomously to the points to be lofted.

We mainly study planar intelligent lofting in an indoor barrier-free environment. The focus of this paper is how to obtain the state of the ground robot and how to reach the points to be lofted in a time- and labor-saving manner. Here, the ‘state’ refers to the two-dimensional coordinates x, y of the ground robot in the lofting environment and its Yaw angle ψ. The main innovations and contributions of this paper are:

- (1) Mecanum wheels are deployed in the system, which improves localization accuracy at the hardware level;
- (2) In data acquisition, localization and attitude are handled separately, which effectively reduces the amount of estimation and computation. Figure 1 shows the integration framework;
- (3) We adopt a simple and efficient ground robot motion control method that is suited to Mecanum-wheel robots and indoor barrier-free environments;
- (4) We propose a system that combines multiple sensors with a BIM laser lofting instrument. It expands the relevant market and provides a reference for future three-dimensional intelligent lofting in multiple scenarios.

The paper is organized as follows. Section 2 summarizes related work on robot localization and on adaptability to lofting environments. Section 3 presents the design of the robot platform. Section 4 briefly introduces the BIM laser lofting instrument and the acquisition of location information. Section 5 presents multi-sensor data fusion for attitude estimation. Section 6 describes the robot motion control method. Section 7 shows the experimental results, and Section 8 discusses them. Finally, Section 9 gives the conclusions.

2. Related Work

Construction lofting involves many operating steps, such as setting up the instrument, aligning, leveling, locating, and marking, among which accurate locating is critical. Therefore, for the robot to reach the control points accurately in an unfamiliar environment, it is essential to obtain the robot’s state.

Many scholars have studied robot localization in unfamiliar environments, addressing precision requirements, application environments, sensor selection and combination, and other aspects. Multiple sensors are usually used in this research to estimate the state of ground or flying robots. The GPS-RTK positioning method is mature and highly accurate. Jiang [16] successfully used GPS technology to lay out the horizontal control network for bridge construction and to loft the bridge axis, achieving sufficient lofting accuracy. However, this method is mainly used for outdoor navigation or positioning and is not suitable for indoor environments. Simultaneous localization and mapping (SLAM) is currently the main method for robot localization in indoor environments. It maps the environment in real time so that robots can operate in it for long periods, and many indoor robots have adopted it [17,18]. However, SLAM usually needs to match observations against a dynamic map to reduce errors, and because every construction site is different, SLAM cannot be applied well to lofting. Ultra-wideband (UWB) is another common indoor localization method, but its accuracy is insufficient [19,20]. The IMU is one of the most commonly used sensors for robot state estimation and appears in almost all current robot localization research. However, because of the large accumulated errors caused by wheel slip, sensor drift, and other factors [21] (pp. 483–484), it can hardly be used alone for high-precision state estimation, and additional sensors such as Lidar, cameras, or magnetometers must be used for position or attitude correction. Lidar/IMU [22,23,24], camera/IMU [25,26,27], Lidar/camera/IMU [28,29,30], and other multi-sensor combinations can estimate the robot’s state through feature matching algorithms. However, these methods still suffer from insufficient real-time performance and limited environmental adaptability, and the featureless or repetitive nature of lofting environments also makes them inapplicable. Although these methods cannot be fully applied to lofting, the IMU remains very effective for estimating robot state.

In [31], a method was proposed that fuses two GPS-RTK units with IMU data to estimate a vehicle’s three-dimensional attitude, position, and velocity. However, this method cannot solve robot localization indoors or where signals are occluded. Liu [32] describes a robot localization method based on laser SLAM, but high-precision laser radar is often costly. To address this, visual SLAM was proposed; it can match depth data using a feature matching algorithm [33], but its accuracy must be corrected by loop closure detection, which is time-consuming, and dim environments also degrade localization. In [34], the EKF algorithm was used to fuse data from multiple sensors, including laser SLAM, visual SLAM, an IMU, and a magnetometer, to locate the robot, and many experiments verified that the method works both indoors and outdoors. These multi-sensor fusion methods perform well when starting from an unknown location in an unknown environment. In lofting, however, the robot usually starts from a known point, and the first party provides at least two known points (or a known direction and a known point) as well as the points to be lofted. The points to be lofted can therefore be reached simply by solving the relative positions between points, and the methods above would add unnecessary computation and cost. In [35], state estimation was achieved by analyzing the kinematic model of the robot, and wheel-slippage error was corrected to a certain extent. However, this approach, which uses an IMU and wheel encoders, requires very accurate motion estimation and cannot fundamentally solve the problem of accumulated error; without external sensors for position correction, large accumulated errors build up over long operating times or distances. Ferreira [36] described a method that integrates in-building sensors with BIM to determine the location of people in buildings. Although it localizes people rather than robots, it shows that BIM can guide indoor localization to some degree. Considering existing technologies, application scenarios, and the goal of improving lofting efficiency, this paper focuses on the localization and navigation of a ground robot in an indoor barrier-free planar environment, combining a BIM laser lofting instrument, a BIM model, wheel encoders, a MEMS-IMU, and a magnetometer. The final experiments show that the results meet the lofting accuracy requirements of most construction projects.

3. General Design

The intelligent lofting system mainly consists of three parts: a ground robot, a BIM laser lofting instrument, and a user terminal. The ground robot moves autonomously on the construction site, communicates with the BIM laser lofting instrument through a WIFI module, and processes the data sent by the lofting instrument and the onboard sensors. The BIM laser lofting instrument obtains the robot’s planar position in real time and sends it to the robot and the user terminal through the WIFI module. The user terminal performs monitoring, manual analysis, and data recording on the construction site based on this feedback.

Figure 2 shows the general structure of the intelligent lofting system. This section mainly introduces the hardware design of the robot.

3.1. Robot Platform Design

The flexibility and robustness of the ground robot are the basic guarantees of high-precision localization, so the robot should not be too large. In this paper, the Mecanum wheel chassis from the Home of Balancing Trolley was selected as the chassis of the ground robot, and secondary development was carried out on it. The chassis was made of aluminum alloy, weighed about 3 kg in total, and measured 200 × 250 × 70 mm. We deployed 7 W DC motors for four-wheel drive and used a 12 V, 3500 mAh lithium battery as the power supply. An STM32F103 series processor served as the control system of the robot and was connected to the motors and motor drivers through the CAN bus. The robot’s maximum speed was 1.2 m/s, its maximum payload was 6 kg, and its maximum runtime reached 5 h. In addition, each wheel was driven by an MG513 geared motor with an encoder that can resolve a rotation of 0.23°.

The BIM laser lofting instrument obtains the position of the prism on the construction site by tracking it with a laser beam, so the coordinates of the robot on the construction site can be obtained by mounting the prism on the robot. To combine the prism effectively with the robot, the ground robot adopted a double-layer structure. The STM32F103 series processor, MEMS-IMU, and magnetometer were installed on the first layer, and a 360° prism and a laser orientation device were fixed on the second layer by a customized chassis. This design ensures that the prism can receive laser beams from all angles without beam occlusion.

Figure 3 shows the overall design of the robot.

3.2. Mecanum Wheel

Traditional ground robots usually use rubber wheels as driving wheels, which are low-cost, have a high load capacity, and are durable. However, such robots cannot easily rotate in place while moving, which hinders reaching points with high precision. To solve this problem, Mecanum wheels, which allow the robot 360° self-rotation, were selected. The Mecanum wheel was invented by the Swedish engineer Bengt Ilon [37]; through the interaction of several wheels, a robot can translate and rotate in any direction in the plane. This section introduces the mechanical analysis of a single Mecanum wheel and the feasibility of self-rotation.

Figure 4a is the structural diagram of the Mecanum wheel, which mainly comprises a hub A and rollers B. The hub A is the main support of the whole wheel, and the rollers B are mounted around the hub. The angle between the hub axis and the roller axes is usually ±45°.

Figure 4b shows the force model of the bottom wheel. When the motor drives the wheel to rotate clockwise, a friction force $F$ perpendicular to the centerline of the roller is generated. $F$ can be decomposed into a lateral component $F_x$ and a longitudinal component $F_y$, as shown by the dotted lines. Unlike a conventional rubber wheel, the Mecanum wheel therefore generates an extra component $F_x$ perpendicular to the forward direction.

Owing to these characteristics, the robot can self-rotate 360° through the cooperation of multiple wheels. Our system has four Mecanum wheels, each driven by a separate motor. To verify the feasibility of self-rotation, take the clockwise rotation of the robot shown in Figure 5a as an example. When wheels 1, 2, 3, and 4 rotate clockwise at the same velocity, they generate friction forces $F_1$, $F_2$, $F_3$, and $F_4$ perpendicular to the centerlines of the wheel rollers, and each force is decomposed as

$$\vec{F}_i = \vec{F}_{xi} + \vec{F}_{yi}, \quad i = 1, 2, 3, 4,$$

where $F_{xi}$ is the lateral component of force $F_i$ and $F_{yi}$ is its longitudinal component.

Because the forces interact, the force model can be simplified to Figure 5b. Since the angle between the hub axis and the roller axis is 45°, $F_{xi} = F_{yi}$ ($i = 1, 2, 3, 4$). Taking $F_{x1} + F_{x2}$ and $F_{x3} + F_{x4}$ as an example, the two parallel resultant forces are equal in magnitude and opposite in direction, so they form a force couple that produces a pure rotation of the robot. The cooperation of the four Mecanum wheels provides the robot with the three degrees of freedom needed for omnidirectional motion in the horizontal plane.

3.3. Inverse Kinematic Analysis

Velocity control ensures that the robot operates stably and efficiently. Figure 6 shows the inverse kinematic analysis of the Mecanum wheel robot. The four wheels are mounted symmetrically, with the angle α = 45° in our system. When the robot is placed horizontally on the ground, the position and heading of its center point $o_r$ are taken as the state variables, and the vehicle coordinate system $\Sigma o_r$ is established at $o_r$, which is also where the prism is placed. The velocity state of the robot in $\Sigma o_r$ is $V^{(r)} = [v_x, v_y, \omega]^T$, and the kinematic formula of each wheel is given by Equation (2), where $v_x$ is the lateral velocity component in the vehicle coordinate system (right positive), $v_y$ is the longitudinal velocity component (upward positive), $\omega$ is the angular velocity about the center of the robot body (counterclockwise positive), $V_i$ ($i = 1, 2, 3, 4$) is the linear velocity of wheel $i$, $a$ is half the length of the robot, and $b$ is half its width.
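Because Equation (2) itself is not reproduced here, the following minimal Python sketch shows the standard form of Mecanum inverse kinematics under stated assumptions: an X-type roller layout with wheels ordered front-left, front-right, rear-left, rear-right (this ordering and all function names are hypothetical and may not match the numbering in Figure 6), $v_x$ to the right, $v_y$ forward, $\omega$ counterclockwise, and $a$, $b$ as defined above. The forward mapping recovers $(v_x, v_y, \omega)$ from four wheel speeds in the least-squares sense, and `nav_to_body` assumes ψ is the counterclockwise angle from the navigation $x$-axis to the body $x$-axis.

```python
import math

def inverse_kinematics(vx, vy, omega, a, b):
    """Body-frame velocity -> linear speed of each wheel [FL, FR, RL, RR].

    Assumed (hypothetical) conventions: vx lateral, right positive; vy forward;
    omega counterclockwise; a = half length, b = half width; rollers at 45 deg
    in an X layout seen from above.
    """
    k = a + b
    return [
        vy + vx - k * omega,  # front-left
        vy - vx + k * omega,  # front-right
        vy - vx - k * omega,  # rear-left
        vy + vx + k * omega,  # rear-right
    ]

def forward_kinematics(wheels, a, b):
    """Wheel speeds [FL, FR, RL, RR] -> (vx, vy, omega).

    Exact inverse of inverse_kinematics for consistent wheel speeds and the
    least-squares estimate otherwise (the three columns are orthogonal).
    """
    fl, fr, rl, rr = wheels
    vx = (fl - fr - rl + rr) / 4.0
    vy = (fl + fr + rl + rr) / 4.0
    omega = (-fl + fr - rl + rr) / (4.0 * (a + b))
    return vx, vy, omega

def nav_to_body(vx_n, vy_n, psi):
    """Rotate a navigation-frame planar velocity into the body frame."""
    c, s = math.cos(psi), math.sin(psi)
    return c * vx_n + s * vy_n, -s * vx_n + c * vy_n
```

A quick hardware check of the assumed layout is the pure-rotation case: for a counterclockwise ω, the left wheels should turn backward and the right wheels forward.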

The velocity of the robot in the navigation coordinate system $\Sigma o_n$ is

$$V^{(n)} = R_r^n V^{(r)},$$

where $V^{(n)}$ consists of the horizontal velocity component, vertical velocity component, and angular velocity of the robot in $\Sigma o_n$, and $R_r^n$ is the transformation matrix from $\Sigma o_r$ to $\Sigma o_n$. The velocity of each wheel in terms of the navigation-frame velocity, Equation (4), follows from Equations (2) and (3).

Although the wheel velocities of the ideal motion model can be calculated in this way, the actual wheel velocities lag behind because of friction and other factors, and commanding the computed velocities directly makes it difficult to keep the robot stable. Therefore, this paper uses wheel encoders and PID control to regulate the velocities, as given in Equation (5), where $k_p$ is the proportional coefficient, $k_i$ is the integral coefficient, $k_d$ is the derivative coefficient, $e_i$ is the error between the target velocity of wheel $i$ and its real velocity, and the real velocity of each wheel is obtained from the wheel encoder feedback.
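A minimal Python sketch of this velocity loop follows, assuming a discrete positional PID and a hypothetical wheel radius (the radius is not given in the text). The 0.23° encoder resolution from Section 3.1 converts counts into wheel speed; the optional output clamp and the first-order wheel model used to exercise the loop are illustrative assumptions, not the paper’s implementation of Equation (5).

```python
import math

COUNT_ANGLE_DEG = 0.23   # encoder resolution per count, from Section 3.1
WHEEL_RADIUS_M = 0.03    # hypothetical wheel radius; not stated in the text

def encoder_speed(delta_counts, dt):
    """Wheel linear speed (m/s) from encoder counts accumulated over dt seconds."""
    wheel_angle = math.radians(delta_counts * COUNT_ANGLE_DEG)
    return wheel_angle * WHEEL_RADIUS_M / dt

class PID:
    """Discrete positional PID: u = kp*e + ki*sum(e*dt) + kd*(e - e_prev)/dt."""

    def __init__(self, kp, ki, kd, dt, u_limit=None):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.u_limit = u_limit     # optional symmetric clamp on the output
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured):
        error = target - measured
        self.integral += error * self.dt
        if self.prev_error is None:
            derivative = 0.0       # no derivative kick on the first call
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        u = self.kp * error + self.ki * self.integral + self.kd * derivative
        if self.u_limit is not None:
            u = max(-self.u_limit, min(self.u_limit, u))
        return u
```

In such a design each of the four wheels would have its own controller, with the target taken from the inverse kinematics and the measurement from `encoder_speed`.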

To verify the stability of the velocity control, a set of experiments was carried out: the robot was commanded to travel in a straight line from the origin (0, 0) to the designated position (10, 0), a total length of 10 m, and the experiment was repeated 10 times. The experimental results are shown in Table 1. As Table 1 shows, the maximum error is 10 mm and the Root Mean Squared Error (RMSE) is 2.1932 mm.

4. BIM Laser Lofting Instrument

In this paper, we use a BIM laser lofting instrument and a 360° prism to obtain the high-precision position of the robot. The BIM laser lofting instrument is a new generation of lofting instrument that combines BIM with a laser dynamic beam orientation system. It is easy to operate and can be leveled automatically, or manually with one key, after startup. Its highly visible guide light makes lofting on the construction site “what you see is what you get”, and each point is lofted independently without cumulative errors. Users can monitor the position of the prism in real time on the Personal Digital Assistant (PDA) and move it to the points to be lofted according to the instructions; the maximum lofting distance is 100 m and the measuring accuracy is ±3 mm (distance)/5″ (angle). We selected the Topcon LN-100 BIM laser lofting instrument for our experiments.

Figure 7a shows the instrument, and Figure 7b shows the 360° prism used with it. Table 2 lists the main parameters of the Topcon LN-100.

Here, BIM refers to a digital model containing the geometric information, state information, and professional attributes of a building construction project. During lofting, the model mainly provides an independent coordinate system, the known points, and the positions of the points to be lofted, so users can intuitively observe the positional relationship between the prism and the points to be lofted. Figure 8 shows a BIM of a teaching building at Tongji University built with Autodesk Revit: Figure 8a is the overall appearance of the building model, and Figure 8b is the first floor of the building.

This instrument provides four station setup methods, which cover almost all lofting construction environments: the Resection method, the Reference Axis (Base Point and Reference Axis) Measurement method, the Backsight Point (Known Point) Measurement method, and the Backsight Point (Reference Axis on the Base Point) Measurement method. Figure 9 is a schematic diagram of the four methods, in which red points represent known positions and blue triangles represent arbitrary positions:

Resection: the instrument is set up at an arbitrary position and measures two or more known points to establish a coordinate system;

Reference Axis (Base Point and Reference Axis) Measurement: the instrument is set up at an arbitrary position and measures the base point (0,0) and a point on the reference axis (x axis or y axis) to establish a coordinate system;

Backsight Point (Known Point) Measurement: the instrument is positioned on a known point and measures another known point to establish a coordinate system;

Backsight Point (Reference Axis on the Base Point) Measurement: the instrument is positioned on the base point (0,0) and measures a point on the reference axis (x axis or y axis) to establish a coordinate system.
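The two-point station setups above amount to finding a planar rotation and translation between the instrument’s own frame and the project (BIM) frame. The following Python sketch shows that standard two-point computation, assuming a rigid transform with no scale factor; the function names are hypothetical and this is not the LN-100’s internal algorithm. For the Reference Axis method, `proj_a` would be the base point (0, 0) and `proj_b` a point on the +x axis, for example at the measured baseline length.

```python
import math

def two_point_transform(inst_a, inst_b, proj_a, proj_b):
    """Return (theta, tx, ty, residual) mapping instrument coords to project coords.

    inst_a, inst_b: two points as measured in the instrument's own frame.
    proj_a, proj_b: the same points' known project (BIM) coordinates.
    residual: difference of the two baseline lengths, a quick sanity check.
    theta is not wrapped; only its sine and cosine are used.
    """
    dxi, dyi = inst_b[0] - inst_a[0], inst_b[1] - inst_a[1]
    dxp, dyp = proj_b[0] - proj_a[0], proj_b[1] - proj_a[1]
    theta = math.atan2(dyp, dxp) - math.atan2(dyi, dxi)
    c, s = math.cos(theta), math.sin(theta)
    # translation chosen so that inst_a maps exactly onto proj_a
    tx = proj_a[0] - (c * inst_a[0] - s * inst_a[1])
    ty = proj_a[1] - (s * inst_a[0] + c * inst_a[1])
    residual = math.hypot(dxp, dyp) - math.hypot(dxi, dyi)
    return theta, tx, ty, residual

def to_project(point, theta, tx, ty):
    """Map one instrument-frame point (e.g. the prism) into project coordinates."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = point
    return c * x - s * y + tx, s * x + c * y + ty
```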

In our experiment, the 360° prism was fixed at the geometric center of the robot. The robot and the Topcon LN-100 were connected through the WIFI communication module using the Transmission Control Protocol (TCP). The prism position (x, y) received by the WIFI module was sent to the processor in real time at a data transmission frequency of 20 Hz, with a maximum communication distance of 100 m.

5. Multi-Sensors Fusion Algorithm

The attitude of the ground robot is usually estimated from multiple sensors. We use the MEMS-IMU and the magnetometer to obtain the attitude of the ground robot: the magnetometer and the accelerometer in the MEMS-IMU output one set of attitude estimates, and the gyroscope in the MEMS-IMU outputs another. However, owing to the defects and accumulated errors of each individual sensor, neither set can be used alone as the robot’s attitude for long. Therefore, the EKF algorithm is adopted to fuse the two sets of data into an optimal estimate of the robot’s attitude. This section introduces the EKF algorithm used to fuse the multi-sensor data.

5.1. Sensors Angle Output

Common attitude sensors cannot measure angles directly. For example, the gyroscope usually outputs the angular velocity of the robot, which must be integrated to obtain an angle. This part briefly analyzes the conversion process for the accelerometer, magnetometer, and gyroscope:

5.1.1. Accelerometer

The accelerometer measures the components of the gravitational acceleration on each axis of the object, from which the attitude of the object can be derived. The navigation coordinate system in this paper is the east (x), north (y), sky (z) coordinate system. As shown in Figure 10, a calibrated accelerometer $T_a$ is placed obliquely in space after a sequence of rotations through its attitude angles, all assumed to be in the positive direction. It outputs the projections $\mathbf{g}^c = [g_x^c, g_y^c, g_z^c]^T$ of the gravitational acceleration $g$ on the three axes $x_c$, $y_c$, $z_c$ of the carrier coordinate system $\Sigma o_c$. These projections are related to the rotation matrix by Equation (6), where $g$ is the gravitational acceleration, taken as $g = 9.8\ \mathrm{m/s^2}$, $C$ is the rotation matrix, $\mathbf{g}^c$ contains the components of the gravitational acceleration on the three axes of $\Sigma o_c$, and φ, θ, and ψ are the Roll, Pitch, and Yaw angles of accelerometer $T_a$ in $\Sigma o_c$. From Equation (6), the attitude angles converted from the accelerometer data are given by Equation (7).

5.1.2. Magnetometer

The magnetometer measures the components of the magnetic field intensity on each axis of the object, from which the Yaw angle ψ can be calculated. Its conversion principle is similar to that of the accelerometer, so no separate figure is given. First, ignoring magnetic declination, assume that a calibrated magnetometer $T_m$ is placed on level ground at an arbitrary heading. Then $T_m$ outputs the components $\mathbf{H}^c = [H_x^c, H_y^c, H_z^c]^T$ of the earth’s magnetic field $H$ on the three axes $x_c$, $y_c$, $z_c$ of the carrier coordinate system $\Sigma o_c$, and the Yaw angle ψ is calculated by Equation (8).

However, during actual operation the robot cannot always move in a perfectly level plane. Uneven road surfaces and gaps in the floor cause instantaneous Pitch or Roll angles, and under their influence Equation (8) is no longer valid. Therefore, again ignoring magnetic declination, assume that magnetometer $T_m$ is placed obliquely in space with rotation matrix $C$, all rotations being in the positive direction of the attitude angles. The measured components then satisfy Equation (9), where the Pitch angle θ and Roll angle φ are known from Equation (7); $\mathbf{H}^n = [H_x, H_y, H_z]^T$ is the magnetic field intensity at point $o_c$ in the earth’s magnetic field coordinate system, with $H_x = 0$ because the geomagnetic field points from the geomagnetic south pole to the geomagnetic north pole; and $\mathbf{H}^c$ contains the components of the earth’s magnetic field on the three axes of $\Sigma o_c$. Combining Equations (7) and (9) gives the Yaw angle ψ in Equation (10).

The Yaw angle ψ obtained from the magnetometer and the Pitch angle θ and Roll angle φ obtained from the accelerometer form one set of attitude angles, which is fused with the gyroscope data.
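Equation (7) and the tilt-compensated Yaw formula are not reproduced in this text, so the Python sketch below uses the common textbook forms under stated assumptions: the Z-Y-X (yaw-pitch-roll) rotation order with roll φ about the body $x$ axis and pitch θ about the body $y$ axis, an east-north-up navigation frame, a stationary level accelerometer reading (0, 0, +g), no magnetic declination, and Yaw measured counterclockwise from east. The paper’s own axis assignments may differ, and the function names are hypothetical.

```python
import math

def accel_roll_pitch(ax, ay, az):
    """Roll (phi) and pitch (theta) in rad from a static accelerometer reading.

    Assumes Z-Y-X rotation order and a level, stationary reading of (0, 0, +g).
    Valid for |theta| < 90 deg.
    """
    phi = math.atan2(ay, az)
    theta = math.atan2(-ax, math.hypot(ay, az))
    return phi, theta

def mag_yaw(mx, my, mz, phi, theta):
    """Tilt-compensated yaw psi (rad), counterclockwise from east, no declination.

    The reading is rotated back to the horizontal plane with phi and theta;
    because the field points north (Hx = 0), psi = atan2(hx, hy).
    """
    hx = (math.cos(theta) * mx
          + math.sin(theta) * (math.sin(phi) * my + math.cos(phi) * mz))
    hy = math.cos(phi) * my - math.sin(phi) * mz
    return math.atan2(hx, hy)
```

When φ = θ = 0 the yaw reduces to atan2(mx, my), which corresponds to the level case before tilt compensation.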

5.1.3. Gyroscope

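The gyroscope measures the angular velocity about each axis as the object rotates, and the attitude is obtained by integrating it over time.

As shown in Figure 11, take the Pitch angle as an example. Assume that the gyroscope’s Pitch angle is $\theta_{t_0}$ at time $t_0$ and $\theta_{t_1}$ at time $t_1$, and that its angular velocity during this period is the function $\omega_\theta(t)$. Then

$$\theta_{t_1} = \theta_{t_0} + \int_{t_0}^{t_1} \omega_\theta(t)\,\mathrm{d}t, \tag{11}$$

where $\omega_\theta(t)$ is the relationship between the angular velocity about the pitch axis and time $t$. The Roll angle φ and the Yaw angle ψ are calculated in the same way:

$$\varphi_{t_1} = \varphi_{t_0} + \int_{t_0}^{t_1} \omega_\varphi(t)\,\mathrm{d}t, \qquad \psi_{t_1} = \psi_{t_0} + \int_{t_0}^{t_1} \omega_\psi(t)\,\mathrm{d}t. \tag{12}$$

The Pitch angle θ, Roll angle φ, and Yaw angle ψ obtained from the gyroscope form another set of attitude angles, which is fused with the accelerometer and magnetometer data.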
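In discrete form, the gyroscope integration above becomes a running sum over gyroscope samples. The Python sketch below uses the trapezoidal rule (our choice; the text does not specify the integration scheme) and, like the text, treats each body rate as the rate of the corresponding attitude angle, which is only accurate for small tilt angles. It also makes the drift problem concrete: a constant bias of $b$ rad/s adds an error of $b\,t$ after $t$ seconds.

```python
def integrate_rate(angle0, rates, dt):
    """Integrate sampled angular rate (rad/s) into an angle with the trapezoidal rule.

    rates: samples omega(t0), omega(t0 + dt), ... taken at a fixed interval dt.
    Returns the angle at every sample time, starting with angle0.
    """
    angles = [angle0]
    for w_prev, w_next in zip(rates, rates[1:]):
        angles.append(angles[-1] + 0.5 * (w_prev + w_next) * dt)
    return angles

# A stationary gyroscope with a 0.01 rad/s bias, sampled at 100 Hz for 60 s,
# accumulates about 0.6 rad of spurious rotation.
drift = integrate_rate(0.0, [0.01] * 6001, 0.01)
```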

5.2. Data Fusion Algorithm

The Kalman filter is currently one of the most efficient algorithms for fusing multi-sensor data when estimating the state of a robot. It continuously refines the system state using incoming observations and outputs the optimal estimate of the state. However, it is generally applicable only to linear systems and is therefore not suitable for the robot system in this paper. Considering the nonlinear characteristics of the system and the efficiency of the fusion algorithm, we adopted the EKF algorithm for multi-sensor data fusion.

Extended Kalman Filter

In this study, the sensors were fixed directly to the robot and pre-calibrated. Ignoring the input variables of the system, the state equation is

$$\mathbf{x}_k = f(\mathbf{x}_{k-1}, T) + \mathbf{w}_{k-1}, \tag{13}$$

where $\mathbf{x}_k$ is the state variable, $T$ is the update time, $f(\cdot)$ is the quaternion differential equation expression, and $\mathbf{w}_{k-1}$ is process noise following a zero-mean Gaussian distribution, namely $\mathbf{w}_{k-1} \sim N(0, Q)$.

The state variable is selected as

$$\mathbf{x}_k = [q_0\ q_1\ q_2\ q_3\ b_{x,k}\ b_{y,k}\ b_{z,k}]^T, \tag{14}$$

where $\mathbf{q} = [q_0\ q_1\ q_2\ q_3]^T$ is a quaternion in the world coordinate system representing the robot’s attitude and $b_{i,k}$ ($i = x, y, z$) is the gyro drift bias on axis $i$ at time $k$. The function $f(\cdot)$ is given by Equation (15), in which $\omega_{i,k}$ is the angular velocity on axis $i$ at time $k$, obtained from the gyroscope.
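The text does not reproduce the quaternion differential equation $f(\cdot)$. One common first-order discretization, assumed here and not necessarily the paper’s exact form, propagates a scalar-first Hamilton quaternion with bias-corrected body rates, $\dot{\mathbf{q}} = \tfrac{1}{2}\,\mathbf{q} \otimes (0, \boldsymbol{\omega} - \mathbf{b})$, keeps the gyro bias constant, and renormalizes. The Yaw helper assumes the Z-Y-X convention; both function names are hypothetical.

```python
import math

def propagate(q, b, omega, T):
    """One first-order step of the quaternion process model with gyro bias.

    q: scalar-first unit quaternion [q0, q1, q2, q3]; b: gyro bias [bx, by, bz];
    omega: gyro reading [wx, wy, wz] in rad/s; T: update interval in s.
    The bias is carried over unchanged (random-walk model without the noise term).
    """
    wx, wy, wz = (omega[i] - b[i] for i in range(3))
    q0, q1, q2, q3 = q
    h = 0.5 * T
    # q_dot = 0.5 * q (x) (0, w) for body-frame rates, Euler step
    new = [
        q0 + h * (-wx * q1 - wy * q2 - wz * q3),
        q1 + h * (wx * q0 + wz * q2 - wy * q3),
        q2 + h * (wy * q0 - wz * q1 + wx * q3),
        q3 + h * (wz * q0 + wy * q1 - wx * q2),
    ]
    n = math.sqrt(sum(c * c for c in new))
    return [c / n for c in new], list(b)

def quat_to_yaw(q):
    """Yaw (rad) of a scalar-first quaternion, Z-Y-X convention."""
    q0, q1, q2, q3 = q
    return math.atan2(2.0 * (q0 * q3 + q1 * q2), 1.0 - 2.0 * (q2 * q2 + q3 * q3))
```

Subtracting the estimated bias inside the propagation is what couples the bias states to the attitude and lets the filter observe them through the accelerometer and magnetometer.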

The observation equation of the system is

$$\mathbf{z}_k = h(\mathbf{x}_k) + \mathbf{v}_k = \begin{bmatrix} h_a(\mathbf{x}_k) \\ h_m(\mathbf{x}_k) \end{bmatrix} + \mathbf{v}_k,$$

where $\mathbf{z}_k$ is the observation variable formed from the accelerometer and magnetometer data; $h_a$, the observation equation of the accelerometer, and $h_m$, the observation equation of the magnetometer, are built from the rotation matrix $C(\mathbf{q})$ represented by quaternions, the gravitational acceleration $g$, and the north and vertical geomagnetic components $H_N$ and $H_Z$ of the navigation coordinate system $\Sigma o_n$; and $\mathbf{v}_k$ is observation noise following a zero-mean Gaussian distribution, namely $\mathbf{v}_k \sim N(0, R)$.

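From Equations (13) and (16), the functions $f(\cdot)$ and $h(\cdot)$ are nonlinear and must be linearized. The EKF obtains the state transition matrix $\Phi_k$ and the observation matrix $H_k$ as the Jacobian matrices of these nonlinear functions:

$$\Phi_k = \left.\frac{\partial f}{\partial \mathbf{x}}\right|_{\hat{\mathbf{x}}_{k-1}}, \qquad H_k = \left.\frac{\partial h}{\partial \mathbf{x}}\right|_{\hat{\mathbf{x}}_k^-},$$

where $\hat{\mathbf{x}}$ is the estimate of $\mathbf{x}$. Their blocks are the partial derivatives of $f$ with respect to the quaternion $\mathbf{q}$ and to the gyro bias $\mathbf{b}$, and of $h$ with respect to $\mathbf{q}$ ($h$ does not depend on $\mathbf{b}$).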

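Finally, the state update equation is

$$\hat{\mathbf{x}}_k = \hat{\mathbf{x}}_k^- + K_k\left(\mathbf{z}_k - h(\hat{\mathbf{x}}_k^-)\right), \tag{22}$$

where $\hat{\mathbf{x}}_k^-$ is the prior estimate of $\mathbf{x}_k$ and $K_k$ is the Kalman gain, given by

$$\hat{\mathbf{x}}_k^- = f(\hat{\mathbf{x}}_{k-1}, T), \tag{23}$$

$$K_k = P_k^- H_k^T\left(H_k P_k^- H_k^T + R\right)^{-1}. \tag{24}$$

In Equation (24), $P_k^-$ is the error covariance matrix of the prior estimate at time $k$:

$$P_k^- = \Phi_k P_{k-1} \Phi_k^T + Q, \tag{25}$$

where $P_{k-1}$ is the error covariance matrix of the posterior estimate at time $k-1$, which is updated as

$$P_k = (I - K_k H_k) P_k^-. \tag{26}$$

The state variables are updated through Equation (22), and the final attitude is output through the conversion between quaternions and Euler angles. The Yaw angle ψ is taken as the main attitude reference in lofting. The attitude solution diagram is shown in Figure 12.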
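To illustrate the EKF predict/update cycle without the full quaternion algebra, here is a deliberately reduced Python sketch: the state is only the Yaw angle and a gyro $z$-axis bias, the gyroscope drives the prediction, and a tilt-compensated magnetometer Yaw is the observation. With this linear model the EKF reduces to an ordinary Kalman filter, so it shows the structure of the prior estimate, gain, state correction, and covariance steps rather than the paper’s seven-state filter. The class name and all noise values are hypothetical.

```python
import math

def wrap(angle):
    """Wrap an angle (rad) to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))

class YawBiasFilter:
    """Two-state Kalman filter, x = [yaw, gyro z-bias].

    A reduced illustration of the predict/update cycle only; the paper's filter
    uses a quaternion plus three gyro biases. Noise values are hypothetical.
    """

    def __init__(self, q_yaw=1e-5, q_bias=1e-7, r_mag=0.05 ** 2):
        self.x = [0.0, 0.0]                    # [yaw (rad), bias (rad/s)]
        self.P = [[1.0, 0.0], [0.0, 0.01]]     # initial error covariance
        self.q_yaw, self.q_bias, self.r = q_yaw, q_bias, r_mag

    def predict(self, wz, T):
        """Propagate with the gyro z-rate wz over T seconds (Phi = [[1, -T], [0, 1]])."""
        yaw, bias = self.x
        self.x = [wrap(yaw + (wz - bias) * T), bias]            # prior estimate
        P = self.P
        p00 = P[0][0] - T * (P[0][1] + P[1][0]) + T * T * P[1][1] + self.q_yaw
        p01 = P[0][1] - T * P[1][1]
        p10 = P[1][0] - T * P[1][1]
        self.P = [[p00, p01], [p10, P[1][1] + self.q_bias]]     # Phi P Phi^T + Q

    def update(self, yaw_mag):
        """Correct with a magnetometer yaw observation (H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r                                     # H P H^T + R
        k0, k1 = P[0][0] / s, P[1][0] / s                        # Kalman gain
        innovation = wrap(yaw_mag - self.x[0])                   # z - h(x_prior)
        self.x = [wrap(self.x[0] + k0 * innovation), self.x[1] + k1 * innovation]
        self.P = [[(1.0 - k0) * P[0][0], (1.0 - k0) * P[0][1]],  # (I - K H) P
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
```

Wrapping the innovation matters for Yaw: without it, a measurement of 179° against a prediction of −179° would produce a 358° correction instead of 2°.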

6. Motion Control

Through the BIM laser lofting instrument and the fusion algorithm, the robot obtains its actual position and attitude in an independent coordinate system. How to reach the points to be lofted efficiently, however, remains a key problem. To save lofting time, we adopt a simple motion control method based on the omnidirectional self-rotation of the Mecanum wheels.

As shown in Figure 13, the robot is at point 1 and needs to reach point 2, which is to be lofted. The position and attitude of the robot at point 1 are $(x_1, y_1)$ and $\psi_1$, and the position of point 2, $(x_2, y_2)$, is provided by the first party. To loft point 2, the robot needs to rotate by the angle $\Delta\psi$ about its geometric center and then move forward by the distance $d$; both can be solved from the known data by Equation (27), where $(x_1, y_1)$ is the position of the robot at point 1, $\psi_1$ is its attitude at point 1, $(x_2, y_2)$ is the position of point 2, $\beta$ is the angle between the line from point 1 to point 2 and the positive direction of the $x$-axis, $\Delta\psi$ is the required rotation angle of the robot, and $d$ is the distance between point 1 and point 2.

However, it is almost impossible for the robot to accurately rotate the angle

or move forward

. When the robot deviates from the expected trajectory, it will correct in real-time according to its position and attitude

at this time. The methods of solving

and

are similar to (27), namely:

where the inputs are the real-time position and attitude of the robot, and the outputs are the angle between the line from the robot's real-time position to point 2 and the positive direction of the x-axis, the required real-time rotation angle of the robot, and the distance between the robot's real-time position and point 2.
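The real-time correction can be illustrated by a minimal simulation in which the rotation and distance are re-solved from the current pose after every motion cycle. The actuator model (the robot executes only a fraction of each commanded rotation and translation), the 0.5 m step limit, the gain fractions and the 5 mm arrival tolerance are all hypothetical assumptions chosen to show convergence; they are not parameters from the paper.

```python
import math


def loft_to_point(x, y, yaw, target, rot_gain=0.9, move_gain=0.95,
                  max_step=0.5, tol=0.005, max_iter=100):
    """Drive a simulated robot to `target`, re-solving rotation and distance each cycle.

    rot_gain and move_gain model an imperfect robot that executes only part of
    each command (hypothetical values). Lengths in metres; angles in radians,
    counterclockwise from +x. Returns (x, y, yaw, cycles_used).
    """
    tx, ty = target
    for i in range(max_iter):
        # Re-solve heading, rotation and distance from the current measured pose.
        heading = math.atan2(ty - y, tx - x)
        rotation = (heading - yaw + math.pi) % (2.0 * math.pi) - math.pi
        distance = math.hypot(tx - x, ty - y)
        if distance < tol:
            return x, y, yaw, i
        # Imperfect execution: rotate in place, then move forward a limited step.
        yaw += rot_gain * rotation
        step = move_gain * min(distance, max_step)
        x += step * math.cos(yaw)
        y += step * math.sin(yaw)
    return x, y, yaw, max_iter
```

Even though each command is executed with a 10% rotation error and a 5% distance error, re-solving from the measured pose on every cycle removes the accumulated deviation, which is the point of the real-time correction.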

7. Experiment and Results

This section introduces the power distribution of the robot, verifies the feasibility of the system, and evaluates the localization accuracy of the robot through practical experiments.

7.1. Introduction of Power Distribution

The robot platform introduced in this paper is mainly equipped with a wheel encoder, a MEMS-IMU, and a magnetometer. It is used together with a BIM laser lofting instrument, an external sensor, to determine point positions during lofting. The wheel encoder is used to control the velocity of the robot. The BIM laser lofting instrument measures a prism mounted on top of the robot and outputs the robot's position at 20 Hz. The position data are then sent over Wi-Fi to the robot's embedded STM32F103-series processor for processing. The MEMS-IMU and the magnetometer estimate the robot's attitude through an EKF; their data are sent to the processor via serial communication, and the processor executes the fusion algorithm. The processor has a 72 MHz CPU with 1.25 DMIPS/MHz and 256 KB of flash memory, and it outputs the attitude estimate at 200 Hz.
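The two data streams arrive at different rates: 20 Hz positions from the BIM laser lofting instrument and 200 Hz attitude estimates. One simple way to pair them, sketched below as an assumption rather than the authors' firmware, is a zero-order hold: each attitude sample is paired with the most recent position fix, so every position is reused for about ten attitude updates. The function name and the timestamps in the example are hypothetical.

```python
from bisect import bisect_right


def pair_latest_position(pos_times, positions, att_times, attitudes):
    """Pair each attitude sample with the latest position sample not newer than it.

    pos_times and att_times must be sorted ascending and use the same unit
    (e.g. milliseconds). Attitude samples earlier than the first position fix
    are skipped because no position is available yet.
    """
    pairs = []
    for t, att in zip(att_times, attitudes):
        # Index of the last position timestamp <= t (zero-order hold).
        idx = bisect_right(pos_times, t) - 1
        if idx >= 0:
            pairs.append((t, positions[idx], att))
    return pairs
```

With positions at 0, 50 and 100 ms and attitudes every 5 ms from 0 to 145 ms, each position is paired with exactly ten attitude samples. Integer millisecond timestamps avoid floating-point ties at the 50 ms boundaries.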

7.2. Experimental Results

To verify the localization accuracy, the experiment was carried out in an indoor barrier-free environment. The sensors were calibrated beforehand, and minor errors in initial orientation and alignment were ignored. We adopted a custom coordinate system and set up the instrument at an arbitrary position.

Figure 14 shows the point layout and results of this experiment. Twelve points were used: the two red points are the initial points ((0, 0) is the installation position of the BIM laser lofting instrument, and (1, 0) is the initial position of the robot), the remaining ten black points are the points to be lofted, and the ‘+’ markers are the lofting results for the ten black points. The experimental distances ranged from 0.72 m to 29 m. The experimental results are shown in Table 3.
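Table 3 reports the localization error of each lofted point. A minimal sketch of how per-point planar errors and summary statistics (mean, RMSE, standard deviation, maximum) can be computed from planned and measured coordinates follows. The function name is hypothetical, and the values in the example are placeholders, not the paper's data.

```python
import math
from statistics import mean, pstdev


def localization_stats(planned, measured):
    """Per-point planar errors and summary statistics.

    planned, measured: equal-length lists of (x, y) coordinates in the same
    unit (e.g. millimetres). Returns (errors, mean, rmse, std, max).
    """
    if len(planned) != len(measured) or not planned:
        raise ValueError("need two non-empty lists of equal length")
    # Euclidean distance between each target point and where the robot stopped.
    errors = [math.hypot(mx - px, my - py)
              for (px, py), (mx, my) in zip(planned, measured)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    # Population standard deviation: spread of the errors around their mean.
    return errors, mean(errors), rmse, pstdev(errors), max(errors)
```

For placeholder inputs `[(0, 0), (1000, 0)]` and `[(3, 4), (1000, 3)]`, the errors are 5.0 and 3.0 mm, giving a mean of 4.0 mm, an RMSE of √17 ≈ 4.12 mm, a standard deviation of 1.0 mm, and a maximum of 5.0 mm. Computed this way, the RMSE is always at least as large as the mean error, whereas the standard deviation measures only the spread around the mean.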

The localization accuracy of the robot is crucial for lofting. Table 3 lists the positions of the ten points to be lofted and the achieved localization accuracy: the average localization error in the experiment is 7.84 mm, the RMSE is 1.427 mm, and the maximum error is only 10.6 mm, which meets most lofting requirements. To compare the lofting time of our method with that of the traditional method, we used a total station to stake out the same ten points. For the ten points, the robot took 7.43 min and the total station took 19.6 min. The robot lofting method proposed in this paper thus improves efficiency by about 163.8% compared with the traditional lofting method while reducing manpower.
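The efficiency figure follows from the two measured times if "improves efficiency" is read as the increase in lofting speed relative to the robot's time, which is the reading that reproduces the reported value:

$$
\eta = \frac{t_{\mathrm{TS}} - t_{\mathrm{R}}}{t_{\mathrm{R}}} \times 100\% = \frac{19.6 - 7.43}{7.43} \times 100\% \approx 163.8\%
$$

where $t_{\mathrm{TS}} = 19.6$ min is the total station time and $t_{\mathrm{R}} = 7.43$ min is the robot time for the same ten points. Equivalently, the robot needs $7.43/19.6 \approx 37.9\%$ of the total station's time, a time saving of about 62.1%, or roughly 44.6 s per point versus 117.6 s per point.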

8. Discussion

The intelligent lofting system proposed in this article has practical value for many projects, such as indoor decoration and indoor lofting, and can improve lofting efficiency while maintaining sufficient accuracy.

Table 4 lists the accuracy control standards for indoor lofting in the Chinese national standards GB 50210 and GB 50242.

According to Table 3 and Table 4, our method meets the standards of most indoor lofting projects. We attribute the maximum localization error mainly to the error of the BIM laser lofting instrument itself and to robot manufacturing errors. The resolution of the sensors and the conditions of the experiment site also affect accuracy; among these, the lofting environment is one of the main factors. Rugged ground seriously affects the balance of the Mecanum wheels, which will also affect our subsequent outdoor lofting research. Improving the localization algorithm and the robot motion control theory to further increase lofting accuracy is one of the future research directions of this work.

Regarding lofting time, we recorded the ground marking time as 10 s per point for both the proposed method and the traditional method. This value is realistic, although the robot's marking time may become shorter in the future. The leveling time of the instrument cannot be ignored either, because fast leveling is also one of the advantages of the BIM laser lofting instrument.

9. Conclusions

We propose an intelligent lofting system, combined with a BIM laser lofting instrument, for indoor barrier-free planar environments. The method has application value for indoor decoration, indoor point layout, indoor equipment pre-placement, etc. This paper describes the design of the robot platform, velocity control, robot localization, and motion control theory. The experimental results show that the method achieves the expected goal. For localization, the most important problem, we propose a method that combines data from internal and external sensors to estimate the state of the robot. Compared with the traditional method, the proposed method meets the accuracy requirements of most indoor lofting tasks with higher efficiency, and the robot platform runs well.

This method is significant for large-area, tedious, and repetitive indoor lofting work, such as tile position lofting in large office buildings, indoor decoration, and factory equipment pre-placement. Compared with the traditional total station lofting method, our method uses the robot to further reduce manpower and increase lofting speed while ensuring lofting accuracy, and it broadens the market for the BIM laser lofting instrument. However, its main limitation at present is that it is suitable only for two-dimensional, indoor barrier-free lofting environments, so it cannot yet serve most outdoor lofting projects or indoor environments with obstacles. Maintaining the robot also incurs costs.

Future work will add more sensors to the system, focus on intelligent lofting in indoor and outdoor environments with obstacles, and improve the localization algorithm and motion control theory to increase localization accuracy. We believe that this method can effectively address existing problems in lofting and greatly improve lofting efficiency.