Meta-analysis of Unmanned Aerial Vehicle (UAV) Imagery for Agro-environmental Monitoring Using Machine Learning and Statistical Models

Abstract

1. Introduction

2. Data Processing Workflow

2.1. UAV Data Collection

2.2. UAV Data Processing

2.3. Machine Learning and Statistical Models

2.4. Accuracy Assessment

3. Method

4. Results and Discussion

4.1. General Characteristics of Studies

4.2. UAV and Agro-environmental Applications

4.3. UAV and Sensor Types

4.4. UAV and Image Overlapping

4.5. UAV Image Processing Software

4.6. UAV and Flight Height

4.7. UAV and Ancillary Data

4.8. Classification Performance

4.9. Regression Performance

5. Conclusions

- China and the USA account for the largest shares of the reviewed UAV research, at 27% and 16%, respectively. However, new opportunities for the processing of UAV data are emerging across the world, particularly in northern European countries.

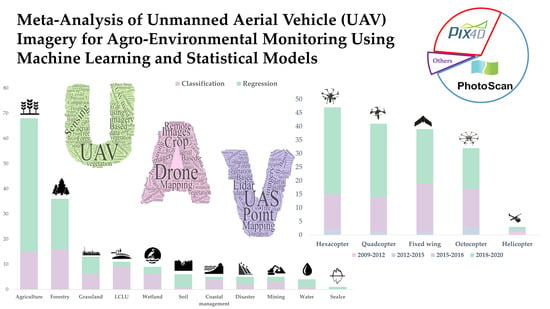

- The use of machine learning and statistical models in UAV remote sensing applications has increased since 2014, and 59% of the reviewed studies were published in 2018–2019. In terms of platform type, hexacopters were the most popular with a 30% share, followed by quadcopters, fixed-wings, and octocopters with shares of about 25%, 24%, and 19%, respectively.

- UAV image processing has been combined with machine learning and statistical models across a range of remote sensing applications, owing to the advantages of these algorithms. The top three UAV applications were agriculture (42%), forestry (22%), and grassland mapping (8%).

- In terms of sensor type, visible sensors were the most commonly used (53%) and had the highest overall accuracy (92.9%) among classification articles. Canon was the most popular brand among the reviewed studies.

- In terms of image overlap, agriculture and grassland applications had the same median forward and side overlap, at about 80% and 70%, respectively. Forestry applications showed a higher median forward and side overlap (85% and 73%), and wetland applications showed a lower overlap (75% and 70%).

- Of the 140 studies that reported their processing software, 62% used Agisoft PhotoScan to process UAV remote sensing data (80 studies), followed by Pix4D at about 30% (39 studies).

- Among all studies in this review, 103 studies (62%) utilized ancillary data in their processing.

- In-situ measurements were more common in regression studies (64 studies, a 62% share) than in classification studies (only 24 studies). Satellite images, by contrast, were used only in classification studies and accounted for a 4% share.

- Deep learning classification achieved the highest overall accuracy (94.8%), followed by maximum likelihood classification (MLC), support vector machine (SVM), and random forest (RF) with overall accuracies of 91.28%, 90.08%, and 89%, respectively.

- Visible sensors achieved the highest median overall accuracy (about 92.9%), followed by multispectral (89%), hyperspectral (85%), and LiDAR (70%) sensors.

- Pixel-based classification methods were used in the majority of studies but had a lower median overall accuracy (88.82%) than object-based approaches (94.45%). In terms of temporal scope, multi-temporal studies achieved a higher median overall accuracy (about 94.9%) than single-date studies (about 89.9%).

- Regression was the primary method among the reviewed studies, with a 62% share. The most common regression model was linear regression (68%), followed by RF (11%).
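The shares and medians reported in these conclusions are simple aggregations over a table of coded studies. The following is a minimal sketch of that kind of aggregation, assuming each study is stored as a record with a category field and an overall accuracy value. The records, the field names (`application`, `unit`, `oa`), and the helper functions are hypothetical and for illustration only; they are not the data or the code used in the review.

```python
from collections import Counter
from statistics import median

# Hypothetical records for illustration only; these are NOT the reviewed studies.
studies = [
    {"application": "Agriculture", "unit": "pixel", "oa": 88.0},
    {"application": "Agriculture", "unit": "object", "oa": 95.0},
    {"application": "Forestry", "unit": "pixel", "oa": 90.0},
    {"application": "Grassland", "unit": "object", "oa": 93.0},
    {"application": "Agriculture", "unit": "pixel", "oa": 86.0},
]


def category_shares(records, key):
    """Return the percentage share of each category value found under `key`."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {name: 100.0 * n / total for name, n in counts.items()}


def median_by_group(records, group_key, value_key):
    """Return the median of `value_key` within each `group_key` category."""
    groups = {}
    for r in records:
        groups.setdefault(r[group_key], []).append(r[value_key])
    return {name: median(values) for name, values in groups.items()}


shares = category_shares(studies, "application")
medians = median_by_group(studies, "unit", "oa")
print(shares)
print(medians)
```

The median is used here rather than the mean because the conclusions report median overall accuracies, which are less sensitive to a few studies with unusually low or high accuracy.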

Author Contributions

Funding

Conflicts of Interest

Appendix A

| No. | Attribute | Description | Categories |
|---|---|---|---|
| 1 | Title | Title of the article | |
| 2 | First author | | |
| 3 | Affiliation | | |
| 4 | Journal | Refereed journal | |
| 5 | Year of publication | | |
| 6 | Citation | | |
| 7 | Application | Disciplinary topic | Agriculture; Forestry; Grassland; Soil; Sea ice; Wetland; Water; Marine; Mining; Land cover/Land use; Coastal management; Disaster management |
| 8 | Method | | Classification; Regression |
| 9 | Study area | Geographical location of study area | |
| 10 | Ancillary data | Including field measurement or additional data | |
| 11 | Extracted feature | Features used for classification such as spectral or texture indices | |
| 12 | # extracted features | Number of features | |
| 13 | Processing unit | Classification processing unit | Pixel; Object |
| 14 | Assessment indices | Classification or regression accuracy assessment | Overall Accuracy (OA); User's Accuracy (UA); Producer's Accuracy (PA); Kappa coefficient; RMSE; R² |
| 15 | Processing environment | Software used for photogrammetry processing | Pix4DMapper; Agisoft PhotoScan |
| 16 | ML environment | Software used for machine learning and statistical analysis | Matlab; R; ENVI; eCognition; Python; SAS; SPSS; ArcGIS |
| 17 | Control system | Flight planning apps and ground control systems | |
| 18 | Platform name | Manufacturer, make, and model | |
| 19 | Platform type | | Fixed-wing; Helicopter; Quadcopter; Hexacopter; Octocopter |
| 20 | Platform weight | Measured in kg | |
| 21 | Flight height | Measured in m | |
| 22 | Temporal scope | | Single date; Multi-temporal |
| 23 | Sensor name | Manufacturer, make, and model | |
| 24 | Sensor type | | Visible; LiDAR; Multispectral; Hyperspectral; Thermal |
| 25 | Focal length (mm) | Distance between the lens and the image sensor | |
| 26 | Image resolution (pixels) | Number of pixels in an image | |
| 27 | Pixel size (µm) | Size of each pixel, measured in microns | |
| 28 | Frame rate (fps) | Frame frequency | |
| 29 | Field of view (degrees) | The angular extent of a given scene that is imaged by a camera | |
| 30 | Forward/Side overlap (%) | Image overlap percentage | |
| 31 | GSD (cm) | Distance between two consecutive pixel centers measured on the ground | |
| 32 | # GCPs | Number of collected ground control points | |
| 33 | GPS | Global positioning system | DGPS; GPS RTK; GPS PPK |
| 34 | Calibration method | Procedures for accurate location of images | |
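Several attributes in this table are linked by standard formulas. As a hedged illustration, the sketch below computes the ground sampling distance (attribute 31) from pixel size, focal length, and flight height (attributes 27, 25, and 21), and derives the classification indices of attribute 14 (OA, UA, PA, and the Kappa coefficient) from a confusion matrix. It assumes a nadir-looking frame camera over flat terrain for the GSD, and a confusion matrix whose rows are classified labels and whose columns are reference labels. The function names and the example values are hypothetical and are not taken from the reviewed studies.

```python
def ground_sampling_distance_cm(pixel_size_um, focal_length_mm, flight_height_m):
    """GSD in cm, assuming a nadir-looking frame camera over flat terrain."""
    pixel_size_m = pixel_size_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    # Similar triangles: ground footprint of one pixel = pixel size * height / focal length.
    gsd_m = pixel_size_m * flight_height_m / focal_length_m
    return gsd_m * 100.0


def classification_metrics(matrix):
    """Compute OA, per-class UA and PA, and kappa from a square confusion matrix.

    Assumed layout: rows are classified (map) labels, columns are reference labels.
    Classes with an empty row or column would raise ZeroDivisionError; this sketch
    does not handle that case.
    """
    n_classes = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n_classes)) for j in range(n_classes)]
    correct = sum(matrix[i][i] for i in range(n_classes))

    overall_accuracy = correct / total
    users_accuracy = [matrix[i][i] / row_totals[i] for i in range(n_classes)]
    producers_accuracy = [matrix[i][i] / col_totals[i] for i in range(n_classes)]
    # Chance agreement expected from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (overall_accuracy - expected) / (1.0 - expected)
    return overall_accuracy, users_accuracy, producers_accuracy, kappa


# Hypothetical inputs: 4 µm pixels, 20 mm lens, 100 m flight height.
gsd = ground_sampling_distance_cm(4.0, 20.0, 100.0)

# Hypothetical two-class confusion matrix (rows: classified, columns: reference).
oa, ua, pa, kappa = classification_metrics([[45, 5], [10, 40]])
print(gsd, oa, ua, pa, kappa)
```

With these hypothetical inputs the GSD is about 2 cm, the overall accuracy is 0.85, and the Kappa coefficient is about 0.70. Swapping the row and column convention of the matrix swaps UA and PA, so the convention should be checked before comparing values across studies.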

References

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben-Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641. [Google Scholar] [CrossRef] [Green Version]

- Dabrowska-Zielinska, K.; Budzynska, M.; Malek, I.; Bojanowski, J.; Bochenek, Z.; Lewinski, S. Assessment of crop growth conditions for agri–environment ecosystem for modern landscape management. In Remote Sensing for a Changing Europe, Proceedings of the 28th Symposium of the European Association of Remote Sensing Laboratories, Istanbul, Turkey, 2–5 June 2008; IOS Press: Amsterdam, The Netherlands, 2009. [Google Scholar]

- Mulla, D.J. Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosyst. Eng. 2013, 114, 358–371. [Google Scholar] [CrossRef]

- Lillesand, T.; Kiefer, R.W.; Chipman, J. Remote Sensing and Image Interpretation; John Wiley and Sons: Hoboken, NJ, USA, 2015. [Google Scholar]

- Müllerová, J.; Brůna, J.; Bartaloš, T.; Dvořák, P.; Vítková, M.; Pyšek, P. Timing Is Important: Unmanned Aircraft vs. Satellite Imagery in Plant Invasion Monitoring. Front. Plant Sci. 2017, 8, 887. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990. [Google Scholar] [CrossRef] [Green Version]

- Tao, C.V. Mobile mapping technology for road network data acquisition. J. Geospat. Eng. 2000, 2, 1–14. [Google Scholar]

- Hunt, J.E.R.; Hively, W.D.; Fujikawa, S.J.; Linden, D.S.; Daughtry, C.S.T.; Mccarty, G.W. Acquisition of NIR-Green-Blue Digital Photographs from Unmanned Aircraft for Crop Monitoring. Remote Sens. 2010, 2, 290–305. [Google Scholar] [CrossRef] [Green Version]

- Pádua, L.; Vanko, J.; Hruška, J.; Adão, T.; Sousa, J.J.; Peres, E.; Morais, R. UAS, sensors, and data processing in agroforestry: A review towards practical applications. Int. J. Remote Sens. 2017, 38, 2349–2391. [Google Scholar] [CrossRef]

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A Comparative Assessment of Different Modeling Algorithms for Estimating Leaf Nitrogen Content in Winter Wheat Using Multispectral Images from an Unmanned Aerial Vehicle. Remote Sens. 2018, 10, 2026. [Google Scholar] [CrossRef] [Green Version]

- Song, Y.; Wang, J. Winter Wheat Canopy Height Extraction from UAV-Based Point Cloud Data with a Moving Cuboid Filter. Remote Sens. 2019, 11, 1239. [Google Scholar] [CrossRef] [Green Version]

- Zhang, S.; Zhao, G.; Lang, K.; Su, B.; Chen, X.; Xi, X.; Zhang, H. Integrated Satellite, Unmanned Aerial Vehicle (UAV) and Ground Inversion of the SPAD of Winter Wheat in the Reviving Stage. Sensors 2019, 19, 1485. [Google Scholar] [CrossRef] [Green Version]

- Buchaillot, M.L.; Gracia-Romero, A.; Vergara-Diaz, O.; Zaman-Allah, M.A.; Tarekegne, A.; Cairns, J.E.; Prasanna, B.M.; Araus, J.L.; Kefauver, S.C. Evaluating Maize Genotype Performance under Low Nitrogen Conditions Using RGB UAV Phenotyping Techniques. Sensors 2019, 19, 1815. [Google Scholar] [CrossRef] [Green Version]

- Thorp, K.; Thompson, A.L.; Harders, S.J.; French, A.; Ward, R.W. High-Throughput Phenotyping of Crop Water Use Efficiency via Multispectral Drone Imagery and a Daily Soil Water Balance Model. Remote Sens. 2018, 10, 1682. [Google Scholar] [CrossRef] [Green Version]

- Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.-H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706. [Google Scholar] [CrossRef] [Green Version]

- Ampatzidis, Y.; Partel, V. UAV-Based High Throughput Phenotyping in Citrus Utilizing Multispectral Imaging and Artificial Intelligence. Remote Sens. 2019, 11, 410. [Google Scholar] [CrossRef] [Green Version]

- Zhou, X.; Zheng, H.; Xu, X.; He, J.; Ge, X.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255. [Google Scholar] [CrossRef]

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef]

- Enciso, J.; Avila, C.A.; Jung, J.; Elsayed-Farag, S.; Chang, A.; Yeom, J.; Landivar, J.; Maeda, M.; Chavez, J.C. Validation of agronomic UAV and field measurements for tomato varieties. Comput. Electron. Agric. 2019, 158, 278–283. [Google Scholar] [CrossRef]

- Thenkabail, P.S.; Smith, R.B.; De Pauw, E. Hyperspectral Vegetation Indices and Their Relationships with Agricultural Crop Characteristics. Remote Sens. Environ. 2000, 71, 158–182. [Google Scholar] [CrossRef]

- Mohammadimanesh, F.; Salehi, B.; Mahdianpari, M.; Brisco, B.; Motagh, M. Wetland Water Level Monitoring Using Interferometric Synthetic Aperture Radar (InSAR): A Review. Can. J. Remote Sens. 2018, 44, 247–262. [Google Scholar] [CrossRef]

- Su, T.-C. A study of a matching pixel by pixel (MPP) algorithm to establish an empirical model of water quality mapping, as based on unmanned aerial vehicle (UAV) images. Int. J. Appl. Earth Obs. Geoinf. 2017, 58, 213–224. [Google Scholar] [CrossRef]

- Jonassen, M.O.; Tisler, P.; Altstädter, B.; Scholtz, A.; Vihma, T.; Lampert, A.; König-Langlo, G.; Lüpkes, C. Application of remotely piloted aircraft systems in observing the atmospheric boundary layer over Antarctic sea ice in winter. Polar Res. 2015, 34, 25651. [Google Scholar] [CrossRef] [Green Version]

- Gonçalves, J.; Henriques, R. UAV photogrammetry for topographic monitoring of coastal areas. ISPRS J. Photogramm. Remote Sens. 2015, 104, 101–111. [Google Scholar] [CrossRef]

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Larsen, G.; Peddle, D.R. Mapping land-based oil spills using high spatial resolution unmanned aerial vehicle imagery and electromagnetic induction survey data. J. Appl. Remote Sens. 2018, 12, 1. [Google Scholar] [CrossRef]

- Honkavaara, E.; Eskelinen, M.A.; Pölönen, I.; Saari, H.; Ojanen, H.; Mannila, R.; Holmlund, C.; Hakala, T.; Litkey, P.; Rosnell, T.; et al. Remote Sensing of 3-D Geometry and Surface Moisture of a Peat Production Area Using Hyperspectral Frame Cameras in Visible to Short-Wave Infrared Spectral Ranges Onboard a Small Unmanned Airborne Vehicle (UAV). IEEE Trans. Geosci. Remote Sens. 2016, 54, 5440–5454. [Google Scholar] [CrossRef] [Green Version]

- Ge, X.; Wang, J.; Ding, J.; Cao, X.; Zhang, Z.; Liu, J.; Li, X. Combining UAV-based hyperspectral imagery and machine learning algorithms for soil moisture content monitoring. PeerJ 2019, 7, e6926. [Google Scholar] [CrossRef]

- Peng, J.; Biswas, A.; Jiang, Q.; Zhao, R.; Hu, J.; Hu, B.; Shi, Z. Estimating soil salinity from remote sensing and terrain data in southern Xinjiang Province, China. Geoderma 2019, 337, 1309–1319. [Google Scholar] [CrossRef]

- Wang, S.; Garcia, M.; Ibrom, A.; Jakobsen, J.; Köppl, C.J.; Mallick, K.; Looms, M.C.; Bauer-Gottwein, P. Mapping Root-Zone Soil Moisture Using a Temperature–Vegetation Triangle Approach with an Unmanned Aerial System: Incorporating Surface Roughness from Structure from Motion. Remote Sens. 2018, 10, 1978. [Google Scholar] [CrossRef] [Green Version]

- Al-Rawabdeh, A.; He, F.; Moussa, A.; El-Sheimy, N.; Habib, A. Using an Unmanned Aerial Vehicle-Based Digital Imaging System to Derive a 3D Point Cloud for Landslide Scarp Recognition. Remote Sens. 2016, 8, 95. [Google Scholar] [CrossRef] [Green Version]

- Jayathunga, S.; Owari, T.; Tsuyuki, S. The use of fixed–wing UAV photogrammetry with LiDAR DTM to estimate merchantable volume and carbon stock in living biomass over a mixed conifer–broadleaf forest. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 767–777. [Google Scholar] [CrossRef]

- Yuan, C.; Zhang, Y.; Liu, Z. A survey on technologies for automatic forest fire monitoring, detection, and fighting using unmanned aerial vehicles and remote sensing techniques. Can. J. Res. 2015, 45, 783–792. [Google Scholar] [CrossRef]

- Michez, A.; Piégay, H.; Lisein, J.; Claessens, H.; Lejeune, P. Classification of riparian forest species and health condition using multi-temporal and hyperspatial imagery from unmanned aerial system. Environ. Monit. Assess. 2016, 188, 1–19. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Garza, B.N.; Ancona, V.; Enciso, J.; Perotto-Baldiviezo, H.; Kunta, M.; Simpson, C. Quantifying Citrus Tree Health Using True Color UAV Images. Remote Sens. 2020, 12, 170. [Google Scholar] [CrossRef] [Green Version]

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115. [Google Scholar] [CrossRef]

- Holloway, J.; Mengersen, K. Statistical Machine Learning Methods and Remote Sensing for Sustainable Development Goals: A Review. Remote Sens. 2018, 10, 1365. [Google Scholar] [CrossRef] [Green Version]

- Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69. [Google Scholar] [CrossRef]

- Lu, B.; He, Y. Species classification using Unmanned Aerial Vehicle (UAV)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS J. Photogramm. Remote Sens. 2017, 128, 73–85. [Google Scholar] [CrossRef]

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random Forests for land cover classification. Patt. Recognit. Lett. 2006, 27, 294–300. [Google Scholar] [CrossRef]

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Motagh, M. Random forest wetland classification using ALOS-2 L-band, RADARSAT-2 C-band, and TerraSAR-X imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 13–31. [Google Scholar] [CrossRef]

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual Tree Detection and Classification with UAV-Based Photogrammetric Point Clouds and Hyperspectral Imaging. Remote Sens. 2017, 9, 185. [Google Scholar] [CrossRef] [Green Version]

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87. [Google Scholar] [CrossRef]

- Lovitt, J.; Rahman, M.M.; McDermid, G.J. Assessing the Value of UAV Photogrammetry for Characterizing Terrain in Complex Peatlands. Remote Sens. 2017, 9, 715. [Google Scholar] [CrossRef] [Green Version]

- Bian, J.; Zhang, Z.; Chen, J.; Chen, H.; Cui, C.; Li, X.; Chen, S.; Fu, Q. Simplified Evaluation of Cotton Water Stress Using High Resolution Unmanned Aerial Vehicle Thermal Imagery. Remote Sens. 2019, 11, 267. [Google Scholar] [CrossRef] [Green Version]

- Vanegas, F.; Bratanov, D.; Powell, K.; Weiss, J.; Gonzalez, F. A Novel Methodology for Improving Plant Pest Surveillance in Vineyards and Crops Using UAV-Based Hyperspectral and Spatial Data. Sensors 2018, 18, 260. [Google Scholar] [CrossRef] [Green Version]

- Hu, J.; Peng, J.; Zhou, Y.; Xu, D.; Zhao, R.; Jiang, Q.; Fu, T.; Wang, F.; Shi, Z. Quantitative Estimation of Soil Salinity Using UAV-Borne Hyperspectral and Satellite Multispectral Images. Remote Sens. 2019, 11, 736. [Google Scholar] [CrossRef] [Green Version]

- Salamí, E.; Barrado, C.; Pastor, E. UAV Flight Experiments Applied to the Remote Sensing of Vegetated Areas. Remote Sens. 2014, 6, 11051–11081. [Google Scholar] [CrossRef] [Green Version]

- Poley, L.G.; McDermid, G.J. A Systematic Review of the Factors Influencing the Estimation of Vegetation Aboveground Biomass Using Unmanned Aerial Systems. Remote Sens. 2020, 12, 1052. [Google Scholar] [CrossRef] [Green Version]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2016, 38, 2427–2447. [Google Scholar] [CrossRef]

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry. Remote Sens. 2017, 9, 1110. [Google Scholar] [CrossRef] [Green Version]

- Yao, H.; Wen, B.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443. [Google Scholar] [CrossRef] [Green Version]

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712. [Google Scholar] [CrossRef]

- Singh, K.K.; Frazier, A.E. A meta-analysis and review of unmanned aircraft system (UAS) imagery for terrestrial applications. Int. J. Remote Sens. 2018, 39, 5078–5098. [Google Scholar] [CrossRef]

- Tmušić, G.; Manfreda, S.; Aasen, H.; James, M.R.; Gonçalves, G.; Ben Dor, E.; Brook, A.; Polinova, M.; Arranz, J.J.; Mészáros, J.; et al. Current Practices in UAS-based Environmental Monitoring. Remote Sens. 2020, 12, 1001. [Google Scholar] [CrossRef] [Green Version]

- Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use. Remote Sens. 2012, 4, 1671–1692. [Google Scholar] [CrossRef] [Green Version]

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef] [Green Version]

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36. [Google Scholar] [CrossRef]

- Bhardwaj, A.; Sam, L.; Akanksha; Martín-Torres, F.J.; Kumar, R. UAVs as remote sensing platform in glaciology: Present applications and future prospects. Remote Sens. Environ. 2016, 175, 196–204. [Google Scholar] [CrossRef]

- Gaffey, C.; Bhardwaj, A. Applications of Unmanned Aerial Vehicles in Cryosphere: Latest Advances and Prospects. Remote Sens. 2020, 12, 948. [Google Scholar] [CrossRef] [Green Version]

- Mesas-Carrascosa, F.-J.; Torres-Sánchez, J.; Clavero-Rumbao, I.; García-Ferrer, A.; Peña, J.-M.; Borra-Serrano, I.; López-Granados, F. Assessing Optimal Flight Parameters for Generating Accurate Multispectral Orthomosaicks by UAV to Support Site-Specific Crop Management. Remote Sens. 2015, 7, 12793–12814. [Google Scholar] [CrossRef] [Green Version]

- Tu, Y.-H.; Phinn, S.; Johansen, K.; Robson, A. Assessing Radiometric Correction Approaches for Multi-Spectral UAS Imagery for Horticultural Applications. Remote Sens. 2018, 10, 1684. [Google Scholar] [CrossRef] [Green Version]

- Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P.J. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091. [Google Scholar] [CrossRef] [Green Version]

- Mlambo, R.; Woodhouse, I.H.; Gerard, F.; Anderson, K. Structure from Motion (SfM) Photogrammetry with Drone Data: A Low Cost Method for Monitoring Greenhouse Gas Emissions from Forests in Developing Countries. Forests 2017, 8, 68. [Google Scholar] [CrossRef] [Green Version]

- Díaz-Varela, R.A.; De La Rosa, R.; León, L.; Zarco-Tejada, P.J. High-Resolution Airborne UAV Imagery to Assess Olive Tree Crown Parameters Using 3D Photo Reconstruction: Application in Breeding Trials. Remote Sens. 2015, 7, 4213–4232. [Google Scholar] [CrossRef] [Green Version]

- De Castro, A.I.; Peña, J.-M.; Torres-Sánchez, J.; Jiménez-Brenes, F.M.; Valencia-Gredilla, F.; Recasens, J.; López-Granados, F.; Recasens, J. Mapping Cynodon Dactylon Infesting Cover Crops with an Automatic Decision Tree-OBIA Procedure and UAV Imagery for Precision Viticulture. Remote Sens. 2019, 12, 56. [Google Scholar] [CrossRef] [Green Version]

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31. [Google Scholar] [CrossRef]

- Hassan-Esfahani, L.; Torres-Rua, A.; Jensen, A.; McKee, M. Assessment of Surface Soil Moisture Using High-Resolution Multi-Spectral Imagery and Artificial Neural Networks. Remote Sens. 2015, 7, 2627–2646. [Google Scholar] [CrossRef] [Green Version]

- Tavus, M.R.; Eker, M.E.; Şenyer, N.; Karabulut, B. Plant counting by using k-NN classification on UAVs images. In Proceedings of the 23nd Signal Processing and Communications Applications Conference (SIU), Malatya, Turkey, 16–19 May 2015; pp. 1058–1061. [Google Scholar]

- Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving Soybean Leaf Area Index from Unmanned Aerial Vehicle Hyperspectral Remote Sensing: Analysis of RF, ANN, and SVM Regression Models. Remote Sens. 2017, 9, 309. [Google Scholar] [CrossRef] [Green Version]

- Jaakkola, A.; Hyyppä, J.; Yu, X.; Kukko, A.; Kaartinen, H.; Liang, X.; Hyyppä, H.; Wang, Y. Autonomous Collection of Forest Field Reference—The Outlook and a First Step with UAV Laser Scanning. Remote Sens. 2017, 9, 785. [Google Scholar] [CrossRef] [Green Version]

- Chen, J.; Yi, S.; Qin, Y.; Wang, X. Improving estimates of fractional vegetation cover based on UAV in alpine grassland on the Qinghai–Tibetan Plateau. Int. J. Remote Sens. 2016, 37, 1922–1936. [Google Scholar] [CrossRef]

- Jing, R.; Gong, Z.; Zhao, W.; Pu, R.; Deng, L. Above-bottom biomass retrieval of aquatic plants with regression models and SfM data acquired by a UAV platform—A case study in Wild Duck Lake Wetland, Beijing, China. ISPRS J. Photogramm. Remote Sens. 2017, 134, 122–134. [Google Scholar] [CrossRef]

- Yuan, W.; Li, J.; Bhatta, M.; Shi, Y.; Baenziger, P.S.; Ge, Y. Wheat Height Estimation Using LiDAR in Comparison to Ultrasonic Sensor and UAS. Sensors 2018, 18, 3731. [Google Scholar] [CrossRef] [Green Version]

- Congalton, R.G. Remote sensing and geographic information system data integration: Error sources and Research Issues. Photogramm. Eng. Remote Sens. 1991, 57, 677–687. [Google Scholar]

- Ramezan, C.A.; Warner, T.A.; Maxwell, A.E. Evaluation of Sampling and Cross-Validation Tuning Strategies for Regional-Scale Machine Learning Classification. Remote Sens. 2019, 11, 185. [Google Scholar] [CrossRef] [Green Version]

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Int. Med. 2009, 151, 264–269. [Google Scholar] [CrossRef] [Green Version]

- Kraaijenbrink, P.D. High-resolution insights into the dynamics of Himalayan debris-covered glaciers. In Proceedings of the AGU Fall Meeting Abstracts, Washington, DC, USA, 10–14 December 2018. [Google Scholar]

- Barnas, A.F.; Felege, C.J.; Rockwell, R.F.; Ellis-Felege, S.N. A pilot(less) study on the use of an unmanned aircraft system for studying polar bears (Ursus maritimus). Polar Biol. 2018, 41, 1055–1062. [Google Scholar] [CrossRef]

- Rossini, M.; Di Mauro, B.; Garzonio, R.; Baccolo, G.; Cavallini, G.; Mattavelli, M.; De Amicis, M.; Colombo, R. Rapid melting dynamics of an alpine glacier with repeated UAV photogrammetry. Geomorphology 2018, 304, 159–172. [Google Scholar] [CrossRef]

- Romero, M.; Luo, Y.; Su, B.; Fuentes, S. Vineyard water status estimation using multispectral imagery from an UAV platform and machine learning algorithms for irrigation scheduling management. Comput. Electron. Agric. 2018, 147, 109–117. [Google Scholar] [CrossRef]

- Aboutalebi, M.; Allen, L.N.; Torres-Rua, A.F.; McKee, M.; Coopmans, C. Estimation of soil moisture at different soil levels using machine learning techniques and unmanned aerial vehicle (UAV) multispectral imagery. In Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping IV; International Society for Optics and Photonics: Baltimore, MD, USA, 2019; Volume 11008, p. 110080S. [Google Scholar]

- Brieger, F.; Herzschuh, U.; Pestryakova, L.A.; Bookhagen, B.; Zakharov, E.S.; Kruse, S. Advances in the Derivation of Northeast Siberian Forest Metrics Using High-Resolution UAV-Based Photogrammetric Point Clouds. Remote Sens. 2019, 11, 1447. [Google Scholar] [CrossRef] [Green Version]

- Stuart, M.B.; Mcgonigle, A.J.S.; Willmott, J.R. Hyperspectral Imaging in Environmental Monitoring: A Review of Recent Developments and Technological Advances in Compact Field Deployable Systems. Sensors 2019, 19, 3071. [Google Scholar] [CrossRef] [Green Version]

- Elsner, P.; Dornbusch, U.; Thomas, I.; Amos, D.; Bovington, J.; Horn, D. Coincident beach surveys using UAS, vehicle mounted and airborne laser scanner: Point cloud inter-comparison and effects of surface type heterogeneity on elevation accuracies. Remote Sens. Environ. 2018, 208, 15–26. [Google Scholar] [CrossRef]

- DJI—Der Marktführer im Bereich ziviler Drohnen- und Luftbildtechnologie. Available online: https://www.dji.com/de (accessed on 8 June 2020).

- Mesas-Carrascosa, F.; Rumbao, I.C.; Torres-Sánchez, J.; García-Ferrer, A.; Peña, J.M.; Granados, F.L. Accurate ortho-mosaicked six-band multispectral UAV images as affected by mission planning for precision agriculture proposes. Int. J. Remote Sens. 2016, 38, 2161–2176. [Google Scholar] [CrossRef]

- Torres-Sánchez, J.; López-Granados, F.; Borra-Serrano, I.; Peña, J.M. Assessing UAV-collected image overlap influence on computation time and digital surface model accuracy in olive orchards. Precis. Agric. 2017, 19, 115–133. [Google Scholar] [CrossRef]

- Seifert, E.; Seifert, S.; Vogt, H.; Drew, D.M.; Van Aardt, J.; Kunneke, A.; Seifert, T. Influence of Drone Altitude, Image Overlap, and Optical Sensor Resolution on Multi-View Reconstruction of Forest Images. Remote. Sens. 2019, 11, 1252. [Google Scholar] [CrossRef] [Green Version]

- Isacsson, M. Snow layer mapping by remote sensing from Unmanned Aerial Vehicles: A mixed method study of sensor applications for research in Arctic and Alpine environments. Master′s Thesis, Royal Institute of Technology, Stockholm, Sweden, 2018. [Google Scholar]

- Agrisoft LLC. Agisoft Metashape User Manual: Professional Edition, Version 1.5. St. Petersburg; Agrisoft LLC: Yogyakarta, Indonesia, 2019. [Google Scholar]

- James, M.; Robson, S.; D’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66. [Google Scholar] [CrossRef] [Green Version]

- Wang, C.; Myint, S.W. A Simplified Empirical Line Method of Radiometric Calibration for Small Unmanned Aircraft Systems-Based Remote Sensing. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1876–1885. [Google Scholar] [CrossRef]

- Stöcker, C.; Nex, F.; Koeva, M.; Gerke, M. Quality Assessment of Combined Imu/Gnss Data for Direct Georeferencing in the Context Of Uav-Based Mapping. ISPRS Int. Arch. Photogramm. Remote Sens. 2017, 42, 355–361. [Google Scholar] [CrossRef] [Green Version]

- Padró, J.-C.; Muñoz, F.-J.; Planas, J.; Pons, X. Comparison of four UAV georeferencing methods for environmental monitoring purposes focusing on the combined use with airborne and satellite remote sensing platforms. Int. J. Appl. Earth Obs. Geoinf. 2019, 75, 130–140. [Google Scholar] [CrossRef]

- Zhu, X.; Meng, L.; Zhang, Y.; Weng, Q.; Morris, J. Tidal and Meteorological Influences on the Growth of Invasive Spartina alterniflora: Evidence from UAV Remote Sensing. Remote Sens. 2019, 11, 1208. [Google Scholar] [CrossRef] [Green Version]

- Collin, A.; Ramambason, C.; Pastol, Y.; Casella, E.; Rovere, A.; Thiault, L.; Espiau, B.; Siu, G.; Lerouvreur, F.; Nakamura, N.; et al. Very high resolution mapping of coral reef state using airborne bathymetric LiDAR surface-intensity and drone imagery. Int. J. Remote Sens. 2018, 39, 5676–5688. [Google Scholar] [CrossRef] [Green Version]

- Tian, J.; Wang, L.; Li, X.; Gong, H.; Shi, C.; Zhong, R.; Liu, X. Comparison of UAV and WorldView-2 imagery for mapping leaf area index of mangrove forest. Int. J. Appl. Earth Obs. Geoinf. 2017, 61, 22–31. [Google Scholar] [CrossRef]

- Gruszczyński, W.; Matwij, W.; Ćwiąkała, P. Comparison of low-altitude UAV photogrammetry with terrestrial laser scanning as data-source methods for terrain covered in low vegetation. ISPRS J. Photogramm. Remote Sens. 2017, 126, 168–179. [Google Scholar] [CrossRef]

- Seier, G.; Stangl, J.; Schöttl, S.; Sulzer, W.; Sass, O. UAV and TLS for monitoring a creek in an alpine environment, Styria, Austria. Int. J. Remote Sens. 2017, 38, 2903–2920. [Google Scholar] [CrossRef]

- Komárek, J.; Klouček, T.; Prosek, J. The potential of Unmanned Aerial Systems: A tool towards precision classification of hard-to-distinguish vegetation types? Int. J. Appl. Earth Obs. Geoinf. 2018, 71, 9–19. [Google Scholar] [CrossRef]

- Cruzan, M.B.; Weinstein, B.G.; Grasty, M.R.; Kohrn, B.F.; Hendrickson, E.C.; Arredondo, T.M.; Thompson, P.G. Small Unmanned Aerial Vehicles (Micro-Uavs, Drones) in Plant Ecology. Appl. Plant Sci. 2016, 4, 1600041. [Google Scholar] [CrossRef]

- Mahdianpari, M.; Salehi, B.; Rezaee, M.; Mohammadimanesh, F.; Zhang, Y. Very Deep Convolutional Neural Networks for Complex Land Cover Mapping Using Multispectral Remote Sensing Imagery. Remote Sens. 2018, 10, 1119.

- Rezaee, M.; Mahdianpari, M.; Zhang, Y.; Salehi, B. Deep Convolutional Neural Network for Complex Wetland Classification Using Optical Remote Sensing Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3030–3039.

- Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Li, M.; Ma, L.; Blaschke, T.; Cheng, L.; Tiede, D. A systematic comparison of different object-based classification techniques using high spatial resolution imagery in agricultural environments. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 87–98.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Guo, Q.; Kelly, M.; Gong, P.; Liu, D. An Object-Based Classification Approach in Mapping Tree Mortality Using High Spatial Resolution Imagery. GISci. Remote Sens. 2007, 44, 24–47.

- Pérez-Ortiz, M.; Gutiérrez, P.A.; Peña, J.M.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. An Experimental Comparison for the Identification of Weeds in Sunflower Crops via Unmanned Aerial Vehicles and Object-Based Analysis. In Lecture Notes in Computer Science; Springer Science and Business Media LLC: Berlin, Germany, 2015; Volume 9094, pp. 252–262.

- Forkuor, G.; Hounkpatin, O.K.L.; Welp, G.; Thiel, M. High Resolution Mapping of Soil Properties Using Remote Sensing Variables in South-Western Burkina Faso: A Comparison of Machine Learning and Multiple Linear Regression Models. PLoS ONE 2017, 12, e0170478.

| No. | Title | Ref. | Year | Journal | Content |
|---|---|---|---|---|---|
| 1 | UAS, sensors, and data processing in agroforestry: a review towards practical applications | [9] | 2017 | IJRS | A review of technological advancements in UAVs and imaging sensors in agroforestry applications |
| 2 | Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use | [55] | 2012 | Remote Sens. | A review of UAV platform characteristics, applications, and regulations |
| 3 | Unmanned aerial systems for photogrammetry and remote sensing: A review | [56] | 2014 | ISPRS JPRS | A review of recent developments in unmanned aircraft, sensing, navigation, orientation, and general data processing for UAS photogrammetry and remote sensing |
| 4 | UAV Flight Experiments Applied to the Remote Sensing of Vegetated Areas | [47] | 2014 | Remote Sens. | A review of UAV remote sensing applications in monitoring vegetated areas |
| 5 | Remote sensing platforms and sensors: A survey | [57] | 2015 | ISPRS JPRS | A review of remote sensing technologies, platforms, and sensors |
| 6 | UAVs as remote sensing platform in glaciology: Present applications and future prospects | [58] | 2016 | Remote Sens. Environ. | A review of polar and alpine applications of UAVs |
| 7 | A meta-analysis and review of unmanned aircraft system (UAS) imagery for terrestrial applications | [53] | 2017 | IJRS | A meta-analysis and review of techniques and procedures used in terrestrial remote sensing applications |
| 8 | Hyperspectral Imaging: A Review on UAV-Based Sensors, Data Processing and Applications for Agriculture and Forestry | [50] | 2017 | Remote Sens. | A review of UAV-based hyperspectral remote sensing for agriculture and forestry |
| 9 | Forestry applications of UAVs in Europe: a review | [49] | 2017 | ISPRS JPRS | A review of UAV technology, scientific applications in the forest sector, and the regulatory framework for UAV operation in the European Union |
| 10 | On the Use of Unmanned Aerial Systems for Environmental Monitoring | [1] | 2018 | Remote Sens. | An overview of UAS applications in natural and agricultural ecosystem monitoring |
| 11 | Unmanned Aerial Vehicle for Remote Sensing Applications—A Review | [51] | 2019 | Remote Sens. | A review of UAV remote sensing data processing and applications |
| 12 | A Systematic Review of the Factors Influencing the Estimation of Vegetation Aboveground Biomass Using Unmanned Aerial Systems | [48] | 2020 | Remote Sens. | A systematic review of UAS-borne passive sensors for vegetation AGB estimation |
| 13 | Current Practices in UAS-based Environmental Monitoring | [54] | 2020 | Remote Sens. | A review of studies on UAV-based environmental mapping using passive sensors |
| 14 | Applications of Unmanned Aerial Vehicles in cryosphere: Latest Advances and Prospects | [59] | 2020 | Remote Sens. | A review of UAV applications in glaciology, snow, permafrost, and polar research |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Eskandari, R.; Mahdianpari, M.; Mohammadimanesh, F.; Salehi, B.; Brisco, B.; Homayouni, S. Meta-analysis of Unmanned Aerial Vehicle (UAV) Imagery for Agro-environmental Monitoring Using Machine Learning and Statistical Models. Remote Sens. 2020, 12, 3511. https://doi.org/10.3390/rs12213511
