Detection of Undocumented Building Constructions from Official Geodata Using a Convolutional Neural Network

Abstract

1. Introduction

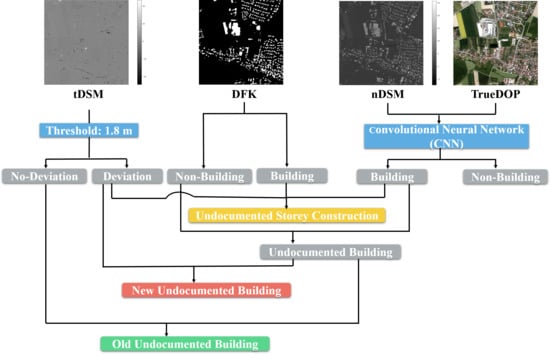

- (1) A new framework for the automatic detection of undocumented building constructions is proposed, which integrates state-of-the-art CNNs and fully harnesses official geodata. The framework identifies old undocumented buildings, new undocumented buildings, and undocumented story constructions according to their year and type of construction. Specifically, a CNN model is first applied to the semantic segmentation of stacked nDSM and orthophoto with RGB bands (TrueDOP) data. The resulting binary map of “building” and “non-building” pixels is then compared automatically with the DFK and the tDSM to identify the different types of undocumented building constructions.

- (2) Our building detection results are compared with those obtained from two conventional solutions used in the state of Bavaria, Germany. Because a large collection of reference data is available, this comparison is statistically meaningful. Our method significantly reduces the false alarm rate (a precision of 84.6%, compared with 59.7% and 24.1% for the two conventional solutions), demonstrating that CNNs can detect buildings robustly at a large scale.

- (3) To offer insights for similar large-scale building detection tasks, we further investigate transferability and sampling strategies for CNNs, using reference data from selected districts in the state of Bavaria, Germany. Because this work applies high-quality, high-resolution official geodata at a large scale, it is well placed to study practical strategies for large-scale building detection.
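The comparison step in contribution (1) can be pictured as pixel-wise mask logic. The sketch below is hypothetical: the function `classify_undocumented`, the class codes, the 2.5 m threshold, and the decision rules are illustrative assumptions, not the authors' implementation. It also assumes that the DFK has been rasterised to a binary footprint mask on the same 0.4 m grid and that the tDSM can be read as a per-pixel surface-height gain between 2014 and 2017.

```python
import numpy as np

# Hypothetical class codes for the output map (not taken from the paper).
NONE, OLD_UNDOCUMENTED, NEW_UNDOCUMENTED, STORY_CONSTRUCTION = 0, 1, 2, 3

# Illustrative height-gain threshold in metres (an assumption, not the paper's value).
HEIGHT_GAIN_M = 2.5


def classify_undocumented(building_pred, dfk_footprint, tdsm_gain,
                          height_gain=HEIGHT_GAIN_M):
    """Pixel-wise sketch of comparing the CNN building map with DFK and tDSM.

    building_pred : bool array, True where the CNN predicts "building"
    dfk_footprint : bool array, True inside rasterised DFK footprints (assumed)
    tdsm_gain     : float array, assumed surface-height gain (m) from 2014 to 2017
    """
    building = np.asarray(building_pred, dtype=bool)
    documented = np.asarray(dfk_footprint, dtype=bool)
    grew = np.asarray(tdsm_gain, dtype=float) > height_gain

    labels = np.full(building.shape, NONE, dtype=np.uint8)
    undocumented = building & ~documented              # detected, but missing from the DFK
    labels[undocumented & grew] = NEW_UNDOCUMENTED     # height appeared in the tDSM period
    labels[undocumented & ~grew] = OLD_UNDOCUMENTED    # assumed to predate the tDSM period
    labels[building & documented & grew] = STORY_CONSTRUCTION  # documented, but taller now
    return labels


pred = np.array([[1, 1, 0], [1, 0, 0]], dtype=bool)
dfk = np.array([[0, 1, 0], [0, 0, 0]], dtype=bool)
gain = np.array([[5.0, 3.0, 0.0], [0.2, 0.0, 0.0]])
print(classify_undocumented(pred, dfk, gain))
```

Working per pixel keeps the sketch short; an operational workflow would more likely aggregate such labels per building object before reporting them.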

2. Related Work

2.1. Two Conventional Strategies for the Detection of Undocumented Buildings

2.2. Shallow Learning Methods for Building Detection

2.3. Deep Learning Methods for Building Detection

3. Study Area and Official GeoData

4. Methodology

4.1. The Proposed Framework for the Detection of Undocumented Building Constructions

4.2. A CNN Model for Building Detection

Network Architecture

5. Experiment

5.1. Data Preprocessing

5.2. Experiment Setup

5.3. Training Details

- (1) FC-DenseNet is composed of four DenseNet blocks in both the encoder and the decoder, connected by a bottleneck block that is also a DenseNet block. Each DenseNet block contains five convolutional layers.

- (2) FCN-8s adopts the VGG16 architecture [44] as its backbone.

- (3) U-Net is composed of five blocks in both the encoder and the decoder. Each encoder block has two convolutional layers, and each decoder block has one transposed convolutional layer.
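The feature-map widths implied by the FC-DenseNet configuration can be traced with simple arithmetic. The sketch assumes the layout of Jégou et al.: an initial 3 × 3 convolution with 48 filters, a growth rate of 16, transition-down layers that keep the channel count, and a decoder in which only the feature maps newly produced by a dense block are upsampled. The first-convolution width and the growth rate are not stated in this section and are assumptions; only the four blocks and the five layers per block come from the text. `fc_densenet_widths` is a hypothetical helper.

```python
def fc_densenet_widths(n_first=48, growth=16, layers=5, n_blocks=4):
    """Trace feature-map counts through an FC-DenseNet encoder/decoder.

    n_first and growth are assumed values (FC-DenseNet67 defaults in Jegou et al.);
    layers=5 and n_blocks=4 follow the configuration described above.
    """
    new = layers * growth          # maps produced by one dense block: 5 x 16 = 80
    width = n_first                # after the initial 3x3 convolution
    skips = []
    for _ in range(n_blocks):      # encoder: block output = block input + new maps
        width += new
        skips.append(width)        # kept for the skip connection
    # Decoder: each transition up upsamples only the `new` maps of the block below
    # (the bottleneck included) and concatenates them with the matching skip.
    decoder_in = [new + skip for skip in reversed(skips)]
    final = decoder_in[-1] + new   # the last dense block keeps its full concatenation
    return skips, decoder_in, final


print(fc_densenet_widths())
```

With these defaults it returns `([128, 208, 288, 368], [448, 368, 288, 208], 288)`: the encoder skip widths, the widths entering the four decoder blocks, and the 288 maps passed to the final 1 × 1 classification convolution. Upsampling only the new maps keeps the decoder much narrower than it would be if every concatenation were carried upward.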

5.4. Evaluation Metrics

6. Results

6.1. Results of Undocumented Building Constructions from Proposed Framework

6.2. Results of Building Detections from Proposed Framework

6.2.1. Comparison with Two Conventional Solutions

6.2.2. Comparison with Other CNNs

7. Discussion

7.1. Transferability Investigation

7.2. Sampling Strategy Investigation

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Aringer, K.; Roschlaub, R. Calculation and update of a 3D building model of Bavaria using LiDAR, image matching and cadastre information. In Proceedings of the 8th International 3D GeoInfo Conference, Istanbul, Turkey, 27–29 November 2013; pp. 28–29.

- Aringer, K.; Roschlaub, R. Bavarian 3D building model and update concept based on LiDAR, image matching and cadastre information. In Innovations in 3D Geo-Information Sciences; Springer: Berlin/Heidelberg, Germany, 2014; pp. 143–157.

- Geßler, S.; Krey, T.; Möst, K.; Roschlaub, R. Mit Datenfusionierung Mehrwerte schaffen—Ein Expertensystem zur Baufallerkundung. DVW Mitt. 2019, 2, 159–187.

- Roschlaub, R.; Möst, K.; Krey, T. Automated Classification of Building Roofs for the Updating of 3D Building Models Using Heuristic Methods. PFG J. Photogramm. Remote Sens. Geoinf. Sci. 2020, 88, 85–97.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Li, J.; Huang, X.; Gong, J. Deep neural network for remote-sensing image interpretation: Status and perspectives. Natl. Sci. Rev. 2019, 6, 1082–1086.

- Mou, L.; Zhu, X.X. Learning to Pay Attention on Spectral Domain: A Spectral Attention Module-Based Convolutional Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 58, 110–122.

- Qiu, C.; Mou, L.; Schmitt, M.; Zhu, X.X. Local climate zone-based urban land cover classification from multi-seasonal Sentinel-2 images with a recurrent residual network. ISPRS J. Photogramm. Remote Sens. 2019, 154, 151–162.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning spectral-spatial-temporal features via a recurrent convolutional neural network for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2018, 57, 924–935.

- Caye Daudt, R.; Le Saux, B.; Boulch, A.; Gousseau, Y. Guided anisotropic diffusion and iterative learning for weakly supervised change detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Hua, Y.; Mou, L.; Zhu, X.X. Relation Network for Multilabel Aerial Image Classification. IEEE Trans. Geosci. Remote Sens. 2020.

- Hua, Y.; Mou, L.; Zhu, X.X. Recurrently exploring class-wise attention in a hybrid convolutional and bidirectional LSTM network for multi-label aerial image classification. ISPRS J. Photogramm. Remote Sens. 2019, 149, 188–199.

- Qiu, C.; Schmitt, M.; Geiß, C.; Chen, T.H.K.; Zhu, X.X. A framework for large-scale mapping of human settlement extent from Sentinel-2 images via fully convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2020, 163, 152–170.

- He, C.; Liu, Z.; Gou, S.; Zhang, Q.; Zhang, J.; Xu, L. Detecting global urban expansion over the last three decades using a fully convolutional network. Environ. Res. Lett. 2019, 14, 034008.

- Shi, Y.; Li, Q.; Zhu, X.X. Building Footprint Generation Using Improved Generative Adversarial Networks. IEEE Geosci. Remote Sens. Lett. 2018, 16, 603–607.

- Shi, Y.; Li, Q.; Zhu, X.X. Building segmentation through a gated graph convolutional neural network with deep structured feature embedding. ISPRS J. Photogramm. Remote Sens. 2020, 159, 184–197.

- Li, Q.; Shi, Y.; Huang, X.; Zhu, X.X. Building Footprint Generation by Integrating Convolution Neural Network With Feature Pairwise Conditional Random Field (FPCRF). IEEE Trans. Geosci. Remote Sens. 2020.

- Wurm, M.; Stark, T.; Zhu, X.X.; Weigand, M.; Taubenböck, H. Semantic segmentation of slums in satellite images using transfer learning on fully convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2019, 150, 59–69.

- Demuzere, M.; Bechtel, B.; Mills, G. Global transferability of local climate zone models. Urban Clim. 2019, 27, 46–63.

- Li, Q.; Qiu, C.; Ma, L.; Schmitt, M. Mapping the Land Cover of Africa at 10 m Resolution from Multi-Source Remote Sensing Data with Google Earth Engine. Remote Sens. 2020, 12, 602.

- San, D.K.; Turker, M. Building extraction from high resolution satellite images using Hough transform. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 1063–1068.

- Ok, A.O. Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.

- Huang, X.; Zhang, L. A multidirectional and multiscale morphological index for automatic building extraction from multispectral GeoEye-1 imagery. Photogramm. Eng. Remote Sens. 2011, 77, 721–732.

- Inglada, J. Automatic recognition of man-made objects in high resolution optical remote sensing images by SVM classification of geometric image features. ISPRS J. Photogramm. Remote Sens. 2007, 62, 236–248.

- Yang, H.L.; Yuan, J.; Lunga, D.; Laverdiere, M.; Rose, A.; Bhaduri, B. Building extraction at scale using convolutional neural network: Mapping of the united states. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2600–2614.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Bischke, B.; Helber, P.; Folz, J.; Borth, D.; Dengel, A. Multi-task learning for segmentation of building footprints with deep neural networks. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1480–1484.

- Bittner, K.; Adam, F.; Cui, S.; Körner, M.; Reinartz, P. Building footprint extraction from VHR remote sensing images combined with normalized DSMs using fused fully convolutional networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2615–2629.

- Ji, S.; Wei, S.; Lu, M. Fully convolutional networks for multisource building extraction from an open aerial and satellite imagery data set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586.

- Jégou, S.; Drozdzal, M.; Vazquez, D.; Romero, A.; Bengio, Y. The one hundred layers tiramisu: Fully convolutional densenets for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 11–19.

- Li, X.; Yao, X.; Fang, Y. Building-A-Nets: Robust Building Extraction from High-Resolution Remote Sensing Images with Adversarial Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3680–3687.

- Ressl, C.; Brockmann, H.; Mandlburger, G.; Pfeifer, N. Dense Image Matching vs. Airborne Laser Scanning—Comparison of two methods for deriving terrain models. Photogramm. Fernerkund. Geoinf. 2016, 2016, 57–73.

- Griffiths, D.; Boehm, J. Improving public data for building segmentation from Convolutional Neural Networks (CNNs) for fused airborne lidar and image data using active contours. ISPRS J. Photogramm. Remote Sens. 2019, 154, 70–83.

- Vargas-Muñoz, J.E.; Lobry, S.; Falcão, A.X.; Tuia, D. Correcting rural building annotations in OpenStreetMap using convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2019, 147, 283–293.

- Sirmacek, B.; Unsalan, C. Building detection from aerial images using invariant color features and shadow information. In Proceedings of the 2008 23rd International Symposium on Computer and Information Sciences, Istanbul, Turkey, 27–29 October 2008; pp. 1–5.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional neural networks for large-scale remote-sensing image classification. IEEE Trans. Geosci. Remote Sens. 2016, 55, 645–657.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Drozdzal, M.; Vorontsov, E.; Chartrand, G.; Kadoury, S.; Pal, C. The importance of skip connections in biomedical image segmentation. In Deep Learning and Data Labeling for Medical Applications; Springer: Berlin/Heidelberg, Germany, 2016; pp. 179–187.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Kaiser, P.; Wegner, J.D.; Lucchi, A.; Jaggi, M.; Hofmann, T.; Schindler, K. Learning aerial image segmentation from online maps. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6054–6068.

| Data Set | Temporal Information | Spatial Resolution | Size (pixels) | Channels |
|---|---|---|---|---|
| Normalized Digital Surface Model (nDSM) | year 2017 | 0.4 m | 2500 × 2500 | 1 |
| Temporal Digital Surface Model (tDSM) | from year 2014 to year 2017 | 0.4 m | 2500 × 2500 | 1 |
| Orthophoto with RGB bands (TrueDOP) | year 2017 | 0.4 m | 2500 × 2500 | 3 |
| Digital Cadastral Map (DFK) | year 2017 | 0.4 m | 2500 × 2500 | 1 |
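The table implies that each 2500 × 2500 pixel tile at 0.4 m covers 1 km × 1 km on the ground, and the framework segments stacked nDSM and TrueDOP data. A minimal NumPy sketch of that stacking follows. The channel order (nDSM first) and the helper names `tile_extent_m` and `stack_inputs` are assumptions, and any normalisation of heights and colours is omitted because it is not described here.

```python
import numpy as np

PIXEL_SIZE_M = 0.4   # spatial resolution from the table
TILE_PX = 2500       # tile edge length in pixels from the table


def tile_extent_m(tile_px=TILE_PX, pixel_size=PIXEL_SIZE_M):
    """Ground length of one tile edge in metres."""
    return tile_px * pixel_size


def stack_inputs(ndsm, truedop):
    """Stack a 1-band nDSM (H, W) and a 3-band TrueDOP (H, W, 3) into (H, W, 4).

    Channel order (nDSM, R, G, B) is an assumption made for this sketch.
    """
    ndsm = np.asarray(ndsm, dtype=np.float32)
    truedop = np.asarray(truedop, dtype=np.float32)
    if truedop.ndim != 3 or truedop.shape[2] != 3 or ndsm.shape != truedop.shape[:2]:
        raise ValueError("expected nDSM (H, W) and TrueDOP (H, W, 3) on the same grid")
    return np.concatenate([ndsm[..., np.newaxis], truedop], axis=-1)
```

For a full tile, `stack_inputs` returns an array of shape `(2500, 2500, 4)`: one height band followed by three colour bands.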

| District | Number of Training Patches | Number of Validation Patches |
|---|---|---|
| Ansbach | 67,965 | 18,077 |
| Wolfratshausen | 14,982 | 3671 |
| Kulmbach | 24,998 | 5679 |
| Kronach | 19,987 | 5112 |
| Landau | 34,964 | 8733 |
| Deggendorf | 38,454 | 9763 |
| Landshut | 60,957 | 15,090 |
| Muenchen | 88,364 | 22,213 |
| Regensburg | 47,947 | 11,941 |
| Hemau | 9481 | 2243 |
| Rosenheim | 59,141 | 14,789 |
| Wasserburg | 14,150 | 3567 |
| Schweinfurt | 54,951 | 13,759 |
| Weilheim | 76,959 | 19,202 |
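Summing the district table gives the overall size of the training data. The dictionary below simply copies the table; `split_summary` is a hypothetical helper introduced for this check.

```python
# Training and validation patch counts per district, copied from the table above.
PATCHES = {
    "Ansbach": (67_965, 18_077),
    "Wolfratshausen": (14_982, 3_671),
    "Kulmbach": (24_998, 5_679),
    "Kronach": (19_987, 5_112),
    "Landau": (34_964, 8_733),
    "Deggendorf": (38_454, 9_763),
    "Landshut": (60_957, 15_090),
    "Muenchen": (88_364, 22_213),
    "Regensburg": (47_947, 11_941),
    "Hemau": (9_481, 2_243),
    "Rosenheim": (59_141, 14_789),
    "Wasserburg": (14_150, 3_567),
    "Schweinfurt": (54_951, 13_759),
    "Weilheim": (76_959, 19_202),
}


def split_summary(patches):
    """Return total training patches, total validation patches and validation share."""
    train = sum(t for t, _ in patches.values())
    val = sum(v for _, v in patches.values())
    return train, val, val / (train + val)


train, val, share = split_summary(PATCHES)
print(f"{len(PATCHES)} districts: {train:,} train, {val:,} val, {share:.1%} val share")
```

This prints `14 districts: 613,300 train, 153,839 val, 20.1% val share`, i.e. the 14 districts together are split roughly 80/20 between training and validation patches.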

| Method | Overall Accuracy | Precision | Recall | F1 Score | IoU |
|---|---|---|---|---|---|
| Filter-based method | 97.6% | 59.7% | 82.3% | 69.3% | 53.0% |
| Comparison-based method | 90.4% | 24.1% | 89.0% | 37.9% | 23.4% |
| CNN method | 99.0% | 84.6% | 85.5% | 85.1% | 74.0% |
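The scores in this table are standard pixel-wise metrics. Assuming they are computed for the "building" class from confusion counts, they can be sketched as below; the helper names are hypothetical. Overall accuracy counts true negatives, so it is dominated by the large non-building class, which is why even the comparison-based method exceeds 90% despite a precision of 24.1%. Because F1 = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN), the two are linked by IoU = F1/(2 − F1), which gives a quick consistency check on the table.

```python
def pixel_metrics(tp, fp, fn, tn):
    """Standard pixel-wise scores for the positive ("building") class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "overall_accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "iou": tp / (tp + fp + fn),
    }


def iou_from_f1(f1):
    """IoU and F1 of the same class are linked by IoU = F1 / (2 - F1)."""
    return f1 / (2 - f1)


# Cross-check the IoU column of the table from its F1 column.
for name, f1 in [("Filter-based", 0.693), ("Comparison-based", 0.379), ("CNN", 0.851)]:
    print(f"{name}: IoU ~ {iou_from_f1(f1):.1%}")
```

The derived values (53.0%, 23.4%, 74.1%) agree with the reported IoU column to within the rounding of the F1 scores.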

| Method | Overall Accuracy | Precision | Recall | F1 Score | IoU |
|---|---|---|---|---|---|
| FCN-8s | 98.8% | 82.5% | 80.1% | 81.2% | 68.4% |
| U-Net | 98.8% | 81.5% | 82.3% | 81.9% | 69.4% |
| FC-DenseNet | 99.0% | 84.6% | 85.5% | 85.1% | 74.0% |

| Trained Model | Training and Validation Districts | Test District | Overall Accuracy | Precision | Recall | F1 Score | IoU |
|---|---|---|---|---|---|---|---|
| 1 | Ansbach | Bad Toelz | 98.2% | 75.3% | 69.4% | 72.3% | 56.6% |
| 2 | 14 districts | Bad Toelz | 99.0% | 84.6% | 85.5% | 85.1% | 74.0% |
| 1 | Ansbach | Nuernberg | 92.4% | 86.9% | 78.0% | 82.2% | 69.8% |
| 2 | 14 districts | Nuernberg | 94.6% | 87.6% | 84.7% | 86.1% | 75.6% |

| Trained Model | Training and Validation Districts | Test District | Overall Accuracy | Precision | Recall | F1 Score | IoU |
|---|---|---|---|---|---|---|---|
| 1 | Ansbach | Ansbach | 98.9% | 90.9% | 90.3% | 90.5% | 82.7% |
| 2 | 14 districts | Ansbach | 98.8% | 91.3% | 89.3% | 90.3% | 82.3% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, Q.; Shi, Y.; Auer, S.; Roschlaub, R.; Möst, K.; Schmitt, M.; Glock, C.; Zhu, X. Detection of Undocumented Building Constructions from Official Geodata Using a Convolutional Neural Network. Remote Sens. 2020, 12, 3537. https://doi.org/10.3390/rs12213537

Li Q, Shi Y, Auer S, Roschlaub R, Möst K, Schmitt M, Glock C, Zhu X. Detection of Undocumented Building Constructions from Official Geodata Using a Convolutional Neural Network. Remote Sensing. 2020; 12(21):3537. https://doi.org/10.3390/rs12213537

Chicago/Turabian Style: Li, Qingyu, Yilei Shi, Stefan Auer, Robert Roschlaub, Karin Möst, Michael Schmitt, Clemens Glock, and Xiaoxiang Zhu. 2020. "Detection of Undocumented Building Constructions from Official Geodata Using a Convolutional Neural Network" Remote Sensing 12, no. 21: 3537. https://doi.org/10.3390/rs12213537

APA Style: Li, Q., Shi, Y., Auer, S., Roschlaub, R., Möst, K., Schmitt, M., Glock, C., & Zhu, X. (2020). Detection of Undocumented Building Constructions from Official Geodata Using a Convolutional Neural Network. Remote Sensing, 12(21), 3537. https://doi.org/10.3390/rs12213537