Combining Segmentation Network and Nonsubsampled Contourlet Transform for Automatic Marine Raft Aquaculture Area Extraction from Sentinel-1 Images

Abstract

1. Introduction

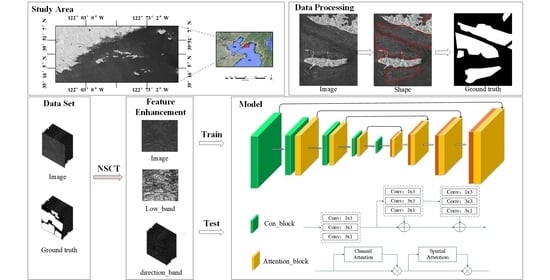

- To address the low signal-to-noise ratio problem in SAR images, we enhanced Sentinel-1 images with the NSCT [24] to strengthen the subject contour features and directional features.

- To capture better feature representations, we combined several modules in U-net. Multiscale convolution was used to fit the multisize characteristics of marine raft aquaculture areas, asymmetric convolution was selected to address the strip-like geometry of floating rafts, and the attention module was adopted to focus on both spatial and channel interrelationships.

2. Feature Analysis of Marine Raft Aquaculture Areas

2.1. Scattering Characteristics

2.2. Structural Characteristics

- Multisize characteristics: The multisize nature of marine raft aquaculture areas is twofold. Overall, the aquaculture regions are scattered, with varying extents and inconsistent densities. Locally, the strips within an aquaculture area are uniform in width but vary in length, and the narrow sea lanes between rafts vary in width. The method therefore needs to fit multisize features; a single receptive field makes it difficult to avoid missing detailed information.

- Strip-like geometric contour characteristics: Floating rafts are made of ropes strung with floating balls and appear as distinct strips in an image. The elongated, non-square shape of these rectangles needs to be taken into account when using convolution to extract targets.

- Outstanding directionality: The floating rafts within an aquaculture area are arranged along explicit main directions, generally parallel to the shoreline.

3. Methods

3.1. Feature Enhancement

3.2. Fully Convolutional Networks

3.2.1. Convolution Block

- Multiscale convolution: Since the marine raft aquaculture areas vary in size and their spatial structure consists of large strip rafts and narrow sea lanes, we extract features with a multiscale convolutional kernel. On the one hand, multiscale convolution extracts both the information of the large-scale strip rafts and the detailed information of the narrow sea lanes. On the other hand, it captures features effectively regardless of the size differences among the areas. However, applying convolution kernels of different sizes, such as 3 × 3, 5 × 5, and 7 × 7, simultaneously to extract feature maps increases the computational complexity of the model. Inspired by the GoogLeNet architecture, we designed a multiscale convolutional kernel, as shown in Figure 8a. Two stacked 3 × 3 convolution kernels cover the same receptive field as one 5 × 5 kernel, and three stacked 3 × 3 kernels cover the same receptive field as one 7 × 7 kernel [29,30,31,32]. Therefore, in this paper, the feature maps of the different scales were fused by arranging convolution kernels in series and in parallel. In the basic unit of each encoder, three 3 × 3 convolution kernels thus provide receptive-field information at three scales (3 × 3, 5 × 5, and 7 × 7).

- Asymmetric convolution: The receptive field of a common convolution is a square, which makes it difficult to capture the shape features of elongated targets. Given the pronounced strip geometry of the rafts, we selected asymmetric convolution kernels of sizes 1 × 3 and 3 × 1 and additively fused their outputs with the features extracted by the 3 × 3 convolutional kernels.
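As a rough illustration of the two convolution ideas above (a pure-Python sketch, not the authors' implementation), the block below first tabulates the receptive field and weight count of one, two, and three stacked stride-1 3 × 3 convolutions. It then checks that additively fusing 3 × 3, 1 × 3, and 3 × 1 branches is, by linearity, the same as applying one 3 × 3 kernel with the asymmetric kernels added onto its central row and column. The helper names, kernel values, and test image are hypothetical, weights are counted per input-output channel pair, and bias terms, normalization, and nonlinearities are left out.

```python
def receptive_field(num_layers, kernel=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv2d_same(image, kernel):
    """Zero-padded 'same' cross-correlation of a 2D list with an odd-sized kernel."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - ph, j + b - pw
                    if 0 <= y < h and 0 <= x < w:
                        acc += image[y][x] * kernel[a][b]
            out[i][j] = acc
    return out


def add_maps(*maps):
    """Element-wise sum of equally sized 2D lists (additive fusion)."""
    return [[sum(vals) for vals in zip(*rows)] for rows in zip(*maps)]


# Hypothetical branch kernels: square 3x3, horizontal 1x3, vertical 3x1.
k33 = [[1.0, 0.0, -1.0], [2.0, 0.5, -2.0], [1.0, 0.0, -1.0]]
k13 = [[0.5, 1.0, 0.5]]
k31 = [[-1.0], [2.0], [-1.0]]

# Equivalent single kernel: add the 1x3 kernel onto the central row
# and the 3x1 kernel onto the central column of the 3x3 kernel.
fused = [row[:] for row in k33]
for b in range(3):
    fused[1][b] += k13[0][b]
for a in range(3):
    fused[a][1] += k31[a][0]

# Small synthetic image (illustrative values only).
image = [[float((3 * i + 5 * j) % 7) for j in range(6)] for i in range(5)]
branch_sum = add_maps(
    conv2d_same(image, k33), conv2d_same(image, k13), conv2d_same(image, k31)
)
single = conv2d_same(image, fused)
max_diff = max(
    abs(p - q) for r1, r2 in zip(branch_sum, single) for p, q in zip(r1, r2)
)

fields = [receptive_field(n) for n in (1, 2, 3)]   # stacked 3x3 layers
stacked_weights = [n * 9 for n in (1, 2, 3)]       # weights in the stack
single_weights = [k * k for k in (3, 5, 7)]        # weights in one large kernel
print(fields, stacked_weights, single_weights, max_diff)
```

Two stacked 3 × 3 kernels reach a 5 × 5 receptive field with 18 rather than 25 weights, and three reach 7 × 7 with 27 rather than 49, which is the usual motivation for building the larger scales from 3 × 3 kernels.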

3.2.2. Attention Block

- Channel attention: A plain 2D convolution focuses only on the relationships among pixels within the receptive field and ignores the dependencies between channels. Channel attention links the features of each channel so that key information, such as the primary direction of the raft culture area, receives more weight. As shown in Figure 9, the feature map was globally average-pooled to obtain a feature map of size [batch-size, channel, 1, 1]. Then, a 1 × 1 convolution was used to learn the correlation between channels. Finally, the sigmoid function produced a weight for each channel, which rescales the feature information passed to the next layer.

- Spatial attention: In addition to the dependency among channels, the overall spatial relationship also has a great influence on the extraction result. As shown in Figure 10, the spatial attention module first normalized the number of channels and then learnt higher-dimensional features under a larger receptive field through convolution, thus reducing the flow of redundant low-dimensional feature information to the subsequent convolution layers and focusing on the overall information of the target.

Although the semantic segmentation network proposed in this paper adopts a U-shaped structure similar to U-net, it differs from U-net in the design of the encoder. The proposed network additively merges multiscale convolution and asymmetric convolution at the encoder stage to form basic coding units, and connects channel attention and spatial attention in series between these basic units.
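The attention steps described in this subsection can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not the authors' code: the channel-mixing matrix `weight` stands in for the learned 1 × 1 convolution, the spatial branch collapses channels with a plain mean (the text does not specify how the channels are normalized), and `kernel` is a hypothetical stand-in for the learned larger-field convolution. Feature maps are nested lists of shape [C][H][W] for a single sample.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def channel_attention(feature, weight):
    """Reweight the channels of `feature` ([C][H][W] nested lists).

    `weight` is a hypothetical C x C matrix playing the role of the 1 x 1
    convolution applied to the pooled [C, 1, 1] descriptor.
    """
    # Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    # A 1 x 1 convolution on a 1 x 1 map is a linear mix across channels.
    mixed = [sum(w * p for w, p in zip(row, pooled)) for row in weight]
    # Sigmoid maps the mixed descriptor to per-channel weights in (0, 1).
    gates = [sigmoid(m) for m in mixed]
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature)]
    return gates, scaled


def spatial_attention(feature, kernel):
    """Reweight the spatial positions of `feature` ([C][H][W] nested lists).

    Assumptions: channels are collapsed by a plain mean, and `kernel` is an
    odd-sized 2D kernel standing in for the learned convolution.
    """
    c, h, w = len(feature), len(feature[0]), len(feature[0][0])
    collapsed = [
        [sum(feature[k][i][j] for k in range(c)) / c for j in range(w)]
        for i in range(h)
    ]
    kh, kw = len(kernel), len(kernel[0])
    mask = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    y, x = i + a - kh // 2, j + b - kw // 2
                    if 0 <= y < h and 0 <= x < w:  # zero padding
                        acc += collapsed[y][x] * kernel[a][b]
            mask[i][j] = sigmoid(acc)
    scaled = [
        [[mask[i][j] * feature[k][i][j] for j in range(w)] for i in range(h)]
        for k in range(c)
    ]
    return mask, scaled


# Toy input: two channels of size 2 x 2 (illustrative values only).
feature = [
    [[1.0, 2.0], [3.0, 4.0]],  # channel 0, mean 2.5
    [[0.0, 0.0], [0.0, 0.0]],  # channel 1, mean 0.0
]
weight = [[1.0, 0.0], [0.0, 1.0]]  # identity mixing, for illustration only
box = [[1.0 / 9.0] * 3 for _ in range(3)]

# Channel attention followed by spatial attention, i.e. connected in series.
gates, after_channel = channel_attention(feature, weight)
mask, after_spatial = spatial_attention(after_channel, box)
print([round(g, 3) for g in gates])
```

Both modules only rescale the input, so the output keeps the [C][H][W] shape and can be passed directly to the next basic coding unit.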

4. Experiment, Results, and Analysis

4.1. Study Area and Experimental Data

4.1.1. Study Area

4.1.2. Dataset

4.2. Verification Experiment

4.2.1. Ablation Experiment

4.2.2. Applied Experiment

4.3. Comparative Experiment

5. Discussion

6. Conclusions

- Feature enhancement: In response to the low signal-to-noise ratio problem in SAR images, the floating raft features were enhanced using the NSCT. The low-frequency sub-band obtained by decomposing the original SAR image with the NSCT was used to enhance the contour features, and the high-frequency sub-bands were used to supplement details with direction information.

- Improved semantic segmentation network: Multiscale feature fusion was introduced to better recognize large rafts and narrow sea lanes with less edge adhesion. Asymmetric convolution was adopted to capture the strip-like distribution of floating rafts by screening the geometric features. An attention module was added to improve the integrity and smoothness of the extraction results, given the grayscale variance in homogeneous regions of the SAR image caused by speckle noise.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FCN | Fully convolutional network |
| RefineNet | Multi-path refinement networks |
| PSPNet | Pyramid scene parsing network |
| RCF | Richer convolutional features network |
| UPS-net | Improved U-Net with a PSE structure |
| DS-HCN | Dual-scale homogeneous convolutional neural network |
| CBAM | Convolutional block attention module |
| ECA-Net | Efficient channel attention for deep convolutional neural networks |

References

- FAO. World Fisheries and Aquaculture Overview 2018; FAO Fisheries Department: Rome, Italy, 2018.

- Zhang, Y.; He, P.; Li, H.; Li, G.; Liu, J.; Jiao, F.; Zhang, J.; Huo, Y.; Shi, X.; Su, R.; et al. Ulva prolifera green-tide outbreaks and their environmental impact in the Yellow Sea, China. Natl. Sci. Rev. 2019, 6, 825–838.

- Chen, X.; Fan, W. Theory and Technology of Fishery Remote Sensing Applications; Science China Press: Beijing, China, 2014.

- Deng, G.; Wu, H.Y.; Guo, P.P.; Li, M.Z. Evolution and development trend of marine raft cultivation model in China. Chin. Fish. Econ. 2013, 31, 164–169.

- Fan, J.; Zhao, J.; An, W.; Hu, Y. Marine Floating Raft Aquaculture Detection of GF-3 PolSAR Images Based on Collective Multikernel Fuzzy Clustering. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2019, 99, 1–14.

- Fan, J.; Zhang, F.; Zhao, D.; Wen, S.; Wei, B. Information extraction of marine raft aquaculture based on high-resolution satellite remote sensing SAR images. In Proceedings of the Second China Coastal Disaster Risk Analysis and Management Symposium, Hainan, China, 29 November 2014.

- Zhu, H. Review of SAR Oceanic Remote Sensing Technology. Mod. Radar 2010, 2, 1–6.

- Chu, J.L.; Zhao, D.Z.; Zhang, F.S.; Wei, B.Q.; Li, C.M.; Suo, A.N. Monitor method of rafts cultivation by remote sense-A case of Changhai. Mar. Environ. Sci. 2008, 27, 35–40.

- Fan, J.; Chu, J.; Geng, J.; Zhang, F. Floating raft aquaculture information automatic extraction based on high resolution SAR images. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 3898–3901.

- Hu, Y.; Fan, J.; Wang, J. Target recognition of floating raft aquaculture in SAR image based on statistical region merging. In Proceedings of the 2017 Seventh International Conference on Information Science and Technology (ICIST), Da Nang, Vietnam, 16–19 April 2017; pp. 429–432.

- Geng, J.; Fan, J.; Wang, H. Weighted fusion-based representation classifiers for marine floating raft detection of SAR images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 444–448.

- Geng, J.; Fan, J.C.; Chu, J.L.; Wang, H.Y. Research on marine floating raft aquaculture SAR image target recognition based on deep collaborative sparse coding network. Acta Autom. Sin. 2016, 42, 593–604.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv 2014, arXiv:1412.7062.

- Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1925–1934.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Liu, Y.; Cheng, M.M.; Hu, X.; Wang, K.; Bai, X. Richer convolutional features for edge detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3000–3009.

- Yueming, L.; Xiaomei, Y.; Zhihua, W.; Chen, L. Extracting raft aquaculture areas in Sanduao from high-resolution remote sensing images using RCF. Acta Oceanol. Sin. 2019, 41, 119–130.

- Shi, T.; Xu, Q.; Zou, Z.; Shi, Z. Automatic raft labeling for remote sensing images via dual-scale homogeneous convolutional neural network. Remote Sens. 2018, 10, 1130.

- Cui, B.; Fei, D.; Shao, G.; Lu, Y.; Chu, J. Extracting raft aquaculture areas from remote sensing images via an improved U-net with a PSE structure. Remote Sens. 2019, 11, 2053.

- Da Cunha, A.L.; Zhou, J.; Do, M.N. The nonsubsampled contourlet transform: Theory, design, and applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

- Fan, J.; Wang, D.; Zhao, J.; Song, D.; Han, M.; Jiang, D. National sea area use dynamic monitoring based on GF-3 SAR imagery. J. Radars 2017, 6, 456–472.

- Madsen, S.N. Spectral properties of homogeneous and nonhomogeneous radar images. IEEE Trans. Aerosp. Electron. Syst. 1987, 4, 583–588.

- Geng, J. Research on Deep Learning Based Classification Method for SAR Remote Sensing Images. Ph.D. Thesis, Dalian University of Technology, Dalian, China, 2018.

- Jiao, J.; Zhao, B.; Zhang, H. A new space image segmentation method based on the non-subsampled contourlet transform. In Proceedings of the 2010 Symposium on Photonics and Optoelectronics, Chengdu, China, 19–21 June 2010; pp. 1–5.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. arXiv 2016, arXiv:1602.07261.

- Woo, S.; Park, J.; Lee, J.Y.; So Kweon, I. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 11534–11542.

- Party History Research Office of the CPC Changhai County Committee. Changhai Yearbook (Archive) 2017; Liaoning Nationality Publishing House: Dandong, China, 2018; pp. 29–31.

- Yan, J.; Wang, P.; Lin, X.; Tang, C. Analysis of the Scale Structure of Mariculture Using Sea in Changhai County Based on GIS. Ocean Dev. Manag. 2016, 33, 99–101.

- Liu, S.; Hu, Q.; Liu, T.; Zhao, J. Overview of Research on Synthetic Aperture Radar Image Denoising Algorithm. J. Arms Equip. Eng. 2018, 39, 106–112+252.

- Yu, W. SAR Image Segmentation Based on the Adaptive Frequency Domain Information and Deep Learning. Master’s Thesis, Xidian University, Xi’an, China, 2014.

- Yin, D.; Gontijo Lopes, R.; Shlens, J.; Cubuk, E.D.; Gilmer, J. A Fourier Perspective on Model Robustness in Computer Vision. Adv. Neural Inform. Process. Syst. 2019, 32, 13276–13286.

- Wang, H.; Wu, X.; Huang, Z.; Xing, E.P. High-frequency Component Helps Explain the Generalization of Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020; pp. 8684–8694.

- Hoberg, T.; Rottensteiner, F.; Feitosa, R.Q.; Heipke, C. Conditional Random Fields for Multitemporal and Multiscale Classification of Optical Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 659–673.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

| Dataset | Acquisition date (month.day) | Number of Images | Size (pixels) | Number of Patches |
|---|---|---|---|---|
| Training | 9.16/9.22/10.10 | 3 | (512, 512) | 7421 |
| Validation | 10.22 | 1 | (512, 512) | 2617 |
| Testing | 10.16 | 1 | (6217, 3644) | / |

| Method | IoU | F1 | Precision | Recall |
|---|---|---|---|---|
| U-net | 77.2% | 87.1% | 93.0% | 81.9% |
| Attention_block + U-net | 78.2% | 87.8% | 86.4% | 89.2% |
| Attention + Con_block + U-net | 81.4% | 89.7% | 87.4% | 92.1% |
| NSCT + Attention + Con_block + U-net | 83.0% | 90.7% | 89.1% | 92.3% |

| Method | IoU | F1 | Precision | Recall |
|---|---|---|---|---|
| NSCT + Attention + Con_block + U-net | 83.8% | 91.2% | 96.1% | 86.8% |

| Method | IoU | F1 | Precision | Recall |
|---|---|---|---|---|
| UPS-net | 74.8% | 85.6% | 91.7% | 80.3% |
| Ours | 83.0% | 90.7% | 89.1% | 92.3% |
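The four columns reported in the result tables above are related to one another. The sketch below assumes the standard pixel-level definitions for the positive (raft) class; the function names are hypothetical. It computes the metrics from confusion counts and then checks that the reported F1 and IoU values agree, up to rounding, with the values implied by the reported precision and recall.

```python
def segmentation_metrics(tp, fp, fn):
    """Metrics for the positive (raft) class from pixel confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    iou = tp / (tp + fp + fn)
    return {"IoU": iou, "F1": f1, "Precision": precision, "Recall": recall}


def f1_and_iou_from_pr(precision, recall):
    """F1 and IoU implied by precision and recall alone.

    Uses 1/IoU = 1/precision + 1/recall - 1, which follows from the
    definitions in `segmentation_metrics`.
    """
    f1 = 2 * precision * recall / (precision + recall)
    iou = precision * recall / (precision + recall - precision * recall)
    return f1, iou


# (method, IoU, F1, precision, recall) in percent, copied from the tables above.
reported = [
    ("U-net", 77.2, 87.1, 93.0, 81.9),
    ("Attention_block + U-net", 78.2, 87.8, 86.4, 89.2),
    ("Attention + Con_block + U-net", 81.4, 89.7, 87.4, 92.1),
    ("NSCT + Attention + Con_block + U-net", 83.0, 90.7, 89.1, 92.3),
    ("NSCT + Attention + Con_block + U-net (applied)", 83.8, 91.2, 96.1, 86.8),
    ("UPS-net", 74.8, 85.6, 91.7, 80.3),
]

for name, iou, f1, p, r in reported:
    f1_calc, iou_calc = f1_and_iou_from_pr(p / 100, r / 100)
    print(
        f"{name}: F1 {100 * f1_calc:.1f} (reported {f1}), "
        f"IoU {100 * iou_calc:.1f} (reported {iou})"
    )
```

Small differences in the last digit are expected because the precision and recall values in the tables are themselves rounded to one decimal place.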

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Wang, C.; Ji, Y.; Chen, J.; Deng, Y.; Chen, J.; Jie, Y. Combining Segmentation Network and Nonsubsampled Contourlet Transform for Automatic Marine Raft Aquaculture Area Extraction from Sentinel-1 Images. Remote Sens. 2020, 12, 4182. https://doi.org/10.3390/rs12244182
