Mapping Forested Wetland Inundation in the Delmarva Peninsula, USA Using Deep Convolutional Neural Networks

Abstract

1. Introduction

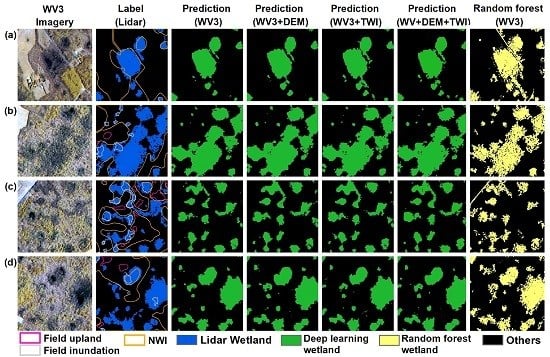

2. Materials and Methods

2.1. Study Area

2.2. Data Sources

2.3. Deriving Wetland Inundation Labels from Lidar Intensity

2.4. Deep Learning Network Training and Classification

2.5. Classification Assessment

2.5.1. Pixel-Level Assessment against Field Data

2.5.2. Object-Level Assessment against Lidar Intensity-Derived Inundation Labels

3. Results

3.1. Classification Accuracy at the Pixel Level

3.2. Classification Accuracy at the Object Level

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| WV3 | WorldView-3 |
| Lidar | Light Detection and Ranging |
| DEM | Digital Elevation Model |
| TWI | Topographic Wetness Index |
| NWI | National Wetlands Inventory |
| CONUS | Contiguous United States |
| USDA | U.S. Department of Agriculture |
| NAIP | National Agriculture Imagery Program |
| USFWS | U.S. Fish and Wildlife Service |
| CNN | Convolutional Neural Network |
| SAGA | System for Automated Geoscientific Analysis |
| FLAASH | Fast Line-of-sight Atmospheric Analysis of Hypercubes |
| OA | Overall Accuracy |
| TP | Number of True Positives |
| TN | Number of True Negatives |
| N | Total Number of Pixels |
| FP | Number of False Positives |
| FN | Number of False Negatives |
| F1-Score | Harmonic Mean of the Precision and Recall |
| Kappa coefficient | Consistency of the Predicted Classes with the Ground Truth |
| pe | Hypothetical Probability of Chance Agreement |
| IoU | Intersection over Union (Jaccard Index) |
| R² | R-squared |
| RMSE | Root Mean Square Error |
| U-Net | Convolutional Network Architecture |
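The accuracy terms abbreviated above (OA, TP, TN, FP, FN, N, F1-Score, Kappa coefficient, pe, IoU) are all functions of a binary confusion matrix. The sketch below uses the standard textbook definitions, which are assumed here rather than taken from the article's equations. The names `ConfusionCounts` and `binary_metrics` are hypothetical, and the counts in the usage lines are made-up illustration values, not results from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Pixel counts for a binary map (inundated = positive class)."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def binary_metrics(c: ConfusionCounts) -> dict:
    """Standard binary accuracy metrics; assumes no denominator is zero."""
    n = c.tp + c.fp + c.fn + c.tn                 # N, total number of pixels
    oa = (c.tp + c.tn) / n                        # overall accuracy (observed agreement)
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    # pe: chance agreement from the row and column marginals
    pe = ((c.tp + c.fp) * (c.tp + c.fn) + (c.tn + c.fn) * (c.tn + c.fp)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    iou = c.tp / (c.tp + c.fp + c.fn)             # Jaccard index of the positive class
    return {
        "OA": oa,
        "precision": precision,
        "recall": recall,
        "F1": f1,
        "pe": pe,
        "kappa": kappa,
        "IoU": iou,
    }


# Illustrative counts only (not data from the study).
example = binary_metrics(ConfusionCounts(tp=40, fp=10, fn=20, tn=30))
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

Note that IoU ignores true negatives, so it is stricter than OA when the inundated class covers a small share of the scene.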

References

1. Tiner, R.W. Geographically isolated wetlands of the United States. Wetlands 2003, 23, 494–516.
2. Cohen, M.J.; Creed, I.F.; Alexander, L.; Basu, N.B.; Calhoun, A.J.; Craft, C.; D’Amico, E.; DeKeyser, E.; Fowler, L.; Golden, H.E.; et al. Do geographically isolated wetlands influence landscape functions? Proc. Natl. Acad. Sci. USA 2016, 113, 1978–1986.
3. Lang, M.W.; Kasischke, E.S. Using C-Band Synthetic Aperture Radar Data to Monitor Forested Wetland Hydrology in Maryland’s Coastal Plain, USA. IEEE Trans. Geosci. Remote Sens. 2008, 46, 535–546.
4. Stedman, S.; Dahl, T.E. Status and Trends of Wetlands in the Coastal Watersheds of the Eastern United States 1998 to 2004. Available online: https://www.fws.gov/wetlands/Documents/Status-and-Trends-of-Wetlands-in-the-Coastal-Watersheds-of-the-Eastern-United-States-1998-to-2004.pdf (accessed on 14 February 2020).
5. DeVries, B.; Huang, C.; Lang, M.; Jones, J.; Huang, W.; Creed, I.; Carroll, M. Automated Quantification of Surface Water Inundation in Wetlands Using Optical Satellite Imagery. Remote Sens. 2017, 9, 807.
6. Huang, C.; Peng, Y.; Lang, M.; Yeo, I.-Y.; McCarty, G. Wetland inundation mapping and change monitoring using Landsat and airborne LiDAR data. Remote Sens. Environ. 2014, 141, 231–242.
7. Jin, H.; Huang, C.; Lang, M.W.; Yeo, I.-Y.; Stehman, S.V. Monitoring of wetland inundation dynamics in the Delmarva Peninsula using Landsat time-series imagery from 1985 to 2011. Remote Sens. Environ. 2017, 190, 26–41.
8. Zou, Z.; Xiao, X.; Dong, J.; Qin, Y.; Doughty, R.B.; Menarguez, M.A.; Zhang, G.; Wang, J. Divergent trends of open-surface water body area in the contiguous United States from 1984 to 2016. Proc. Natl. Acad. Sci. USA 2018, 115, 3810–3815.
9. Huang, W.; DeVries, B.; Huang, C.; Lang, M.; Jones, J.; Creed, I.; Carroll, M. Automated Extraction of Surface Water Extent from Sentinel-1 Data. Remote Sens. 2018, 10, 797.
10. Bolanos, S.; Stiff, D.; Brisco, B.; Pietroniro, A. Operational Surface Water Detection and Monitoring Using Radarsat 2. Remote Sens. 2016, 8, 285.
11. Lang, M.W.; McCarty, G.W. Lidar Intensity for Improved Detection of Inundation Below the Forest Canopy. Wetlands 2009, 29, 1166–1178.
12. Vanderhoof, M.K.; Distler, H.E.; Mendiola, D.T.G.; Lang, M. Integrating Radarsat-2, Lidar, and Worldview-3 Imagery to Maximize Detection of Forested Inundation Extent in the Delmarva Peninsula, USA. Remote Sens. 2017, 9, 105.
13. Wu, Q.; Lane, C.R.; Li, X.; Zhao, K.; Zhou, Y.; Clinton, N.; DeVries, B.; Golden, H.E.; Lang, M.W. Integrating LiDAR data and multi-temporal aerial imagery to map wetland inundation dynamics using Google Earth Engine. Remote Sens. Environ. 2019, 228, 1–13.
14. Lang, M.; McCarty, G.; Oesterling, R.; Yeo, I.-Y. Topographic Metrics for Improved Mapping of Forested Wetlands. Wetlands 2012, 33, 141–155.
15. Chan, R.H.; Chung-Wa, H.; Nikolova, M. Salt-and-pepper noise removal by median-type noise detectors and detail-preserving regularization. IEEE Trans. Image Process. 2005, 14, 1479–1485.
16. Khatami, R.; Mountrakis, G.; Stehman, S.V. A meta-analysis of remote sensing research on supervised pixel-based land-cover image classification processes: General guidelines for practitioners and future research. Remote Sens. Environ. 2016, 177, 89–100.
17. Ding, P.; Zhang, Y.; Deng, W.-J.; Jia, P.; Kuijper, A. A light and faster regional convolutional neural network for object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 141, 208–218.
18. Kellenberger, B.; Marcos, D.; Tuia, D. Detecting mammals in UAV images: Best practices to address a substantially imbalanced dataset with deep learning. Remote Sens. Environ. 2018, 216, 139–153.
19. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.
20. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.
21. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation; Springer International Publishing: Cham, Switzerland, 2015; pp. 234–241.
22. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.
23. Du, Z.; Yang, J.; Ou, C.; Zhang, T. Smallholder Crop Area Mapped with a Semantic Segmentation Deep Learning Method. Remote Sens. 2019, 11, 888.
24. Zhang, X.; Han, L.; Dong, Y.; Shi, Y.; Huang, W.; Han, L.; González-Moreno, P.; Ma, H.; Ye, H.; Sobeih, T. A Deep Learning-Based Approach for Automated Yellow Rust Disease Detection from High-Resolution Hyperspectral UAV Images. Remote Sens. 2019, 11, 1554.
25. Sun, Y.; Huang, J.; Ao, Z.; Lao, D.; Xin, Q. Deep Learning Approaches for the Mapping of Tree Species Diversity in a Tropical Wetland Using Airborne LiDAR and High-Spatial-Resolution Remote Sensing Images. Forests 2019, 10, 1047.
26. Flood, N.; Watson, F.; Collett, L. Using a U-net convolutional neural network to map woody vegetation extent from high resolution satellite imagery across Queensland, Australia. Int. J. Appl. Earth Obs. Geoinf. 2019, 82.
27. Li, R.; Liu, W.; Yang, L.; Sun, S.; Hu, W.; Zhang, F.; Li, W. DeepUNet: A Deep Fully Convolutional Network for Pixel-Level Sea-Land Segmentation. IEEE J. Sel. Topics Appl. Earth Obs. Remote Sens. 2018, 11, 3954–3962.
28. Lowrance, R.; Altier, L.S.; Newbold, J.D.; Schnabel, R.R.; Groffman, P.M.; Denver, J.M.; Correll, D.L.; Gilliam, J.W.; Robinson, J.L.; Brinsfield, R.B.; et al. Water Quality Functions of Riparian Forest Buffers in Chesapeake Bay Watersheds. Environ. Manage. 1997, 21, 687–712.
29. Shedlock, R.J.; Denver, J.M.; Hayes, M.A.; Hamilton, P.A.; Koterba, M.T.; Bachman, L.J.; Phillips, P.J.; Banks, W.S. Water-quality assessment of the Delmarva Peninsula, Delaware, Maryland, and Virginia; Results of Investigations, 1987–91; 2355A; USGS: Reston, VA, USA, 1999.
30. Ator, S.W.; Denver, J.M.; Krantz, D.E.; Newell, W.L.; Martucci, S.K. A Surficial Hydrogeologic Framework for the Mid-Atlantic Coastal Plain; 1680; USGS: Reston, VA, USA, 2005.
31. Homer, C.; Dewitz, J.; Yang, L.M.; Jin, S.; Danielson, P.; Xian, G.; Coulston, J.; Herold, N.; Wickham, J.; Megown, K. Completion of the 2011 National Land Cover Database for the Conterminous United States - Representing a Decade of Land Cover Change Information. Photogramm. Eng. Remote Sens. 2015, 81, 345–354.
32. Vanderhoof, M.K.; Distler, H.E.; Lang, M.W.; Alexander, L.C. The influence of data characteristics on detecting wetland/stream surface-water connections in the Delmarva Peninsula, Maryland and Delaware. Wetlands Ecol. Manage. 2017, 26, 63–86.
33. Lang, M.; McDonough, O.; McCarty, G.; Oesterling, R.; Wilen, B. Enhanced Detection of Wetland-Stream Connectivity Using LiDAR. Wetlands 2012, 32, 461–473.
34. Li, X.; McCarty, G.W.; Lang, M.; Ducey, T.; Hunt, P.; Miller, J. Topographic and physicochemical controls on soil denitrification in prior converted croplands located on the Delmarva Peninsula, USA. Geoderma 2018, 309, 41–49.
35. Li, X.; McCarty, G.W.; Karlen, D.L.; Cambardella, C.A.; Effland, W. Soil Organic Carbon and Isotope Composition Response to Topography and Erosion in Iowa. J. Geophys. Res. Biogeosci. 2018, 123, 3649–3667.
36. Lang, M.W.; Kim, V.; McCarty, G.W.; Li, X.; Yeo, I.Y.; Huang, C. Improved Detection of Inundation under Forest Canopy Using Normalized LiDAR Intensity Data. Remote Sens. 2020, 12, 707.
37. Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A deep learning framework for semantic segmentation of remotely sensed data. arXiv 2019, arXiv:1904.00592.
38. Zhu, W.; Huang, Y.; Zeng, L.; Chen, X.; Liu, Y.; Qian, Z.; Du, N.; Fan, W.; Xie, X. AnatomyNet: Deep learning for fast and fully automated whole-volume segmentation of head and neck anatomy. Med. Phys. 2019, 46, 576–589.
39. Choi, S.-S.; Cha, S.-H.; Tappert, C.C. A survey of binary similarity and distance measures. J. Syst. Cybern. Inform. 2010, 8, 43–48.
40. Li, J.; Chen, J.; Sun, Y. Research of Color Composite of WorldView-2 Based on Optimum Band Combination. Int. J. Adv. Informa. Sci. Service Sci. 2013, 5, 791–798.
41. Hayes, M.M.; Miller, S.N.; Murphy, M.A. High-resolution landcover classification using Random Forest. Remote Sens. Lett. 2014, 5, 112–121.
42. Benediktsson, J.A.; Swain, P.H.; Ersoy, O.K. Neural Network Approaches Versus Statistical-Methods in Classification of Multisource Remote-Sensing Data. IEEE Trans. Geosci. Remote Sens. 1990, 28, 540–552.

| Data | Description | Acquisition Date | Spatial Resolution |
|---|---|---|---|
| WorldView-3 | Eight-band multispectral imagery (wavelengths: 400–1040 nm) | 6 April 2015 | 2 m |
| Lidar intensity | One-band normalized intensity image (wavelength: 1064 nm) | 27 March 2007 | 1 m |
| Lidar DEM | Three separate lidar collections in Maryland and Delaware | April–June 2003, March–April 2006, April 2007 | 2 m |
| Field polygons | Ground-inundated and upland polygons collected using global positioning systems | 16 March–6 April 2015 | Shapefile |
| NWI | National Wetlands Inventory Version 2 dataset for Chesapeake Bay | 2013, 2007 | Shapefile |
| Random forest inundation map | Wetland inundation map produced with random forest from WV3 imagery by Vanderhoof et al. [12] | 6 April 2015 | 2 m |

| Metric | Prediction (WV3) | Prediction (WV3 + DEM) | Prediction (WV3 + TWI) | Prediction (WV3 + DEM + TWI) | Random Forest (WV3) |
|---|---|---|---|---|---|
| Overall Accuracy (%) | 92 | 95 | 95 | 95 | 91 |
| Precision (%) | 99 | 100 | 99 | 99 | 98 |
| Recall (%) | 84 | 90 | 91 | 89 | 83 |
| F1 score | 0.91 | 0.95 | 0.95 | 0.94 | 0.90 |
| Kappa | 0.84 | 0.90 | 0.90 | 0.89 | 0.81 |
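As a quick consistency check on the table above, the F1 score can be recomputed from the reported precision and recall using the standard harmonic-mean definition (assumed here; the article's own equation is not reproduced in this text). The dictionary name `reported` and the helper `f1_from_percent` are hypothetical. Because precision and recall are given as rounded whole percentages, agreement is only expected to within rounding.

```python
# Precision (%), recall (%), and F1 as reported in the table above.
reported = {
    "Prediction (WV3)": (99, 84, 0.91),
    "Prediction (WV3 + DEM)": (100, 90, 0.95),
    "Prediction (WV3 + TWI)": (99, 91, 0.95),
    "Prediction (WV3 + DEM + TWI)": (99, 89, 0.94),
    "Random Forest (WV3)": (98, 83, 0.90),
}


def f1_from_percent(precision_pct: float, recall_pct: float) -> float:
    """Harmonic mean of precision and recall, both given in percent."""
    p = precision_pct / 100.0
    r = recall_pct / 100.0
    return 2 * p * r / (p + r)


for name, (p_pct, r_pct, f1_reported) in reported.items():
    f1_computed = f1_from_percent(p_pct, r_pct)
    # Within half a unit in the second decimal counts as consistent.
    consistent = abs(f1_computed - f1_reported) <= 0.005
    print(f"{name}: computed {f1_computed:.3f}, reported {f1_reported:.2f}, consistent={consistent}")
```

The same check cannot be applied to Kappa, which also depends on the class proportions in the assessment data.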

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Du, L.; McCarty, G.W.; Zhang, X.; Lang, M.W.; Vanderhoof, M.K.; Li, X.; Huang, C.; Lee, S.; Zou, Z. Mapping Forested Wetland Inundation in the Delmarva Peninsula, USA Using Deep Convolutional Neural Networks. Remote Sens. 2020, 12, 644. https://doi.org/10.3390/rs12040644
