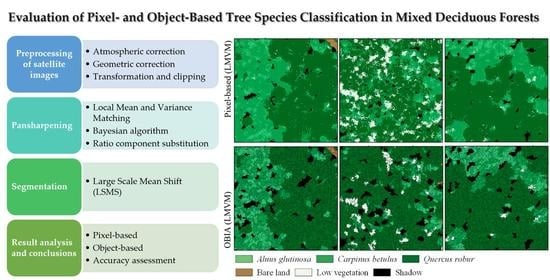

An Evaluation of Pixel- and Object-Based Tree Species Classification in Mixed Deciduous Forests Using Pansharpened Very High Spatial Resolution Satellite Imagery

Abstract

1. Introduction

2. Materials

Study Area and Data

- cross-track view angle of −0.5°

- mean sun azimuth of 158.6°

- sun elevation angle of 66.6°

- mean in-track view angle of −29.2°

- mean off-nadir view angle of 29.2°.
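
As a consistency check on the acquisition geometry listed above, the in-track and cross-track view angles can be combined into a single off-nadir angle. The sketch below assumes both component angles are measured from nadir in two perpendicular vertical planes, so that the squared tangent of the off-nadir angle is the sum of the squared tangents of the components. The function name `off_nadir_deg` is illustrative and does not come from the source.

```python
import math


def off_nadir_deg(in_track_deg, cross_track_deg):
    """Combine in-track and cross-track view angles into one off-nadir angle.

    Assumption: both angles are measured from nadir in perpendicular
    vertical planes, so tan^2(off-nadir) = tan^2(in-track) + tan^2(cross-track).
    """
    t_in = math.tan(math.radians(in_track_deg))
    t_cross = math.tan(math.radians(cross_track_deg))
    return math.degrees(math.atan(math.hypot(t_in, t_cross)))


# Values from the list above: in-track -29.2 degrees, cross-track -0.5 degrees.
angle = off_nadir_deg(-29.2, -0.5)
print(f"off-nadir view angle: {angle:.1f} degrees")
```

Under this assumption the combined angle rounds to 29.2°, in line with the listed mean off-nadir view angle; the small cross-track component contributes almost nothing.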

3. Methods

3.1. WorldView-3 Satellite Imagery Preprocessing

3.2. Pansharpening

- i,j—pixel coordinates

- w,h—window size

- —pansharpened imagery

- —high-resolution imagery

- —low-resolution imagery

- —local mean of LR imagery

- —local mean of HR imagery

- —local standard deviation (SD) of LR imagery

- —local SD of HR imagery

- g(Z)—set of functionals

- E—a random errors vector (considered to be independent of Z).
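
The local statistics defined in the list above (local means and SDs of the low-resolution (LR) and high-resolution (HR) imagery in a w × h window around pixel i, j) appear to be the ingredients of local mean and variance matching (LMVM). A minimal pure-Python sketch of the commonly published LMVM form follows; it is not the authors' implementation. It assumes the LR band has already been resampled to the HR grid, clips windows at the image border, and uses illustrative names (`local_stats`, `lmvm`, `eps`) that are not from the source.

```python
from statistics import fmean, pstdev


def local_stats(img, i, j, w, h):
    """Mean and population SD of img in a w x h window centred on (i, j).

    The window is clipped at the image border (an assumption of this sketch).
    """
    rows = range(max(0, i - h // 2), min(len(img), i + h // 2 + 1))
    cols = range(max(0, j - w // 2), min(len(img[0]), j + w // 2 + 1))
    values = [img[r][c] for r in rows for c in cols]
    return fmean(values), pstdev(values)


def lmvm(hr, lr, w=3, h=3, eps=1e-12):
    """LMVM sketch: PS = (HR - mean_HR) * sd_LR / sd_HR + mean_LR.

    hr: 2D list, high-resolution (panchromatic) band.
    lr: 2D list, one low-resolution band resampled to the hr grid.
    """
    out = []
    for i in range(len(hr)):
        row = []
        for j in range(len(hr[0])):
            mean_hr, sd_hr = local_stats(hr, i, j, w, h)
            mean_lr, sd_lr = local_stats(lr, i, j, w, h)
            if sd_hr < eps:
                # Flat HR window: no spatial detail to inject.
                row.append(mean_lr)
            else:
                row.append((hr[i][j] - mean_hr) * sd_lr / sd_hr + mean_lr)
        out.append(row)
    return out


hr = [[1.0, 2.0, 4.0], [3.0, 5.0, 8.0], [2.0, 7.0, 6.0]]
lr = [[2.0 * v + 10.0 for v in row] for row in hr]  # synthetic LR band
ps = lmvm(hr, lr)
```

A quick sanity check on the design: when the LR band is a positive linear rescaling of the HR band, the local means and SDs rescale in the same way, so the sketch returns the LR band unchanged.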

3.3. Segmentation

3.4. Image Classification and Accuracy Assessment

- Pixel-based classification using pansharpened WV-3 imagery (Bayes, RCS, LMVM) and RF algorithm and

- Object-based classification using pansharpened WV-3 imagery (Bayes, RCS, LMVM) and RF algorithm.
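
The two set-ups listed above differ in the unit handed to the classifier: single pixels versus image segments. One common way to build object-based features, assumed here for illustration and not taken from the source, is to average each band over the pixels of a segment. The helper `segment_means` and the toy data are hypothetical.

```python
from collections import defaultdict


def segment_means(bands, segments):
    """Return {segment_id: [mean of each band over that segment]}.

    bands: list of 2D lists, one per spectral band, all the same shape.
    segments: 2D list of segment ids with the same shape as each band.
    """
    sums = defaultdict(lambda: [0.0] * len(bands))
    counts = defaultdict(int)
    for r, row in enumerate(segments):
        for c, seg_id in enumerate(row):
            counts[seg_id] += 1
            for b, band in enumerate(bands):
                sums[seg_id][b] += band[r][c]
    return {seg: [s / counts[seg] for s in sums[seg]] for seg in sums}


# Toy example: two bands on a 2 x 3 grid, split into two segments.
bands = [
    [[1, 1, 5], [1, 1, 5]],
    [[2, 2, 8], [2, 2, 10]],
]
segments = [[0, 0, 1], [0, 0, 1]]
features = segment_means(bands, segments)
print(features)
```

In a pixel-based run each pixel's band values form one sample, whereas in this object-based sketch each segment contributes a single averaged feature vector.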

4. Results

4.1. Pixel-Based Classification of Pansharpened Imagery

4.2. Object-Based Classification of Pansharpened Imagery

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Class | Bayes | RCS | LMVM |
|---|---|---|---|
| Alnus glutinosa | 209 | 242 | 226 |
| Carpinus betulus | 806 | 1046 | 935 |
| Quercus robur | 955 | 1217 | 1234 |
| Bare land | 185 | 202 | 182 |
| Low vegetation | 406 | 518 | 425 |
| Shadow | 72 | 79 | 62 |
| Total | 2633 | 3304 | 3064 |

Bayes

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 400 | 281 | 3 | 0 | 15 | 0 | 699 | 57% |
| C. betulus | 685 | 6821 | 41 | 0 | 199 | 0 | 7746 | 88% |
| Q. robur | 9 | 4 | 16126 | 0 | 5296 | 0 | 21435 | 75% |
| Bare land | 0 | 0 | 1 | 3233 | 201 | 0 | 3435 | 94% |
| Low vegetation | 128 | 36 | 374 | 0 | 3545 | 0 | 4083 | 87% |
| Shadow | 0 | 0 | 1 | 0 | 0 | 582 | 583 | 100% |
| Total | 1222 | 7142 | 16546 | 3233 | 9256 | 582 | OA = 81% | |
| PA | 33% | 96% | 97% | 100% | 38% | 100% | k = 0.72 | |

RCS

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 232 | 117 | 4 | 0 | 8 | 1 | 362 | 64% |
| C. betulus | 851 | 7009 | 66 | 0 | 257 | 1 | 8184 | 86% |
| Q. robur | 12 | 3 | 16135 | 0 | 3892 | 1 | 20043 | 81% |
| Bare land | 0 | 0 | 0 | 3233 | 159 | 0 | 3392 | 95% |
| Low vegetation | 127 | 13 | 336 | 0 | 4940 | 0 | 5416 | 91% |
| Shadow | 0 | 0 | 5 | 0 | 0 | 579 | 584 | 99% |
| Total | 1222 | 7142 | 16546 | 3233 | 9256 | 582 | OA = 85% | |
| PA | 19% | 98% | 98% | 100% | 53% | 99% | k = 0.78 | |

LMVM

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 481 | 160 | 11 | 0 | 35 | 0 | 687 | 70% |
| C. betulus | 640 | 6964 | 19 | 0 | 142 | 3 | 7768 | 90% |
| Q. robur | 16 | 1 | 16423 | 0 | 1517 | 8 | 17965 | 91% |
| Bare land | 0 | 0 | 9 | 3233 | 217 | 0 | 3459 | 93% |
| Low vegetation | 85 | 17 | 84 | 0 | 7345 | 0 | 7531 | 98% |
| Shadow | 0 | 0 | 0 | 0 | 0 | 571 | 571 | 100% |
| Total | 1222 | 7142 | 16546 | 3233 | 9256 | 582 | OA = 92% | |
| PA | 39% | 98% | 99% | 100% | 79% | 98% | k = 0.89 | |
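
The summary figures in the confusion matrices above can be recomputed from the cell counts. The sketch below uses the Bayes matrix with OA = 81% and the standard definitions of overall accuracy (OA), Cohen's kappa (k), user's accuracy (UA, per row) and producer's accuracy (PA, per column). The function name `accuracy_metrics` is illustrative; rows are taken as classified classes and columns as reference classes, which is the orientation that reproduces the tabulated UA and PA.

```python
CLASSES = ["A. glutinosa", "C. betulus", "Q. robur",
           "Bare land", "Low vegetation", "Shadow"]

# Bayes confusion matrix (OA = 81%): rows = classified, columns = reference.
BAYES = [
    [400, 281, 3, 0, 15, 0],
    [685, 6821, 41, 0, 199, 0],
    [9, 4, 16126, 0, 5296, 0],
    [0, 0, 1, 3233, 201, 0],
    [128, 36, 374, 0, 3545, 0],
    [0, 0, 1, 0, 0, 582],
]


def accuracy_metrics(matrix):
    """Return (OA, kappa, UA per class, PA per class) as fractions."""
    n = sum(map(sum, matrix))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    diag = [matrix[k][k] for k in range(len(matrix))]
    oa = sum(diag) / n
    # Agreement expected by chance, from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    ua = [d / r for d, r in zip(diag, row_totals)]
    pa = [d / c for d, c in zip(diag, col_totals)]
    return oa, kappa, ua, pa


oa, kappa, ua, pa = accuracy_metrics(BAYES)
print(f"OA = {oa:.0%}, k = {kappa:.2f}")
for name, u, p in zip(CLASSES, ua, pa):
    print(f"{name}: UA = {u:.0%}, PA = {p:.0%}")
```

Feeding the RCS or LMVM counts into the same function gives the corresponding OA and k values in the same way.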

| Class (Latin name) | Bayes FoM (%) | Bayes O (%) | Bayes C (%) | RCS FoM (%) | RCS O (%) | RCS C (%) | LMVM FoM (%) | LMVM O (%) | LMVM C (%) |
|---|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 26.30 | 2.16 | 0.79 | 17.16 | 2.61 | 0.34 | 33.68 | 1.95 | 0.54 |
| C. betulus | 84.55 | 0.85 | 2.44 | 84.27 | 0.35 | 3.09 | 87.64 | 0.47 | 2.12 |
| Q. robur | 73.79 | 1.11 | 13.98 | 78.88 | 1.08 | 10.29 | 90.80 | 0.32 | 4.06 |
| Bare land | 94.12 | 0.00 | 0.53 | 95.31 | 0.00 | 0.42 | 93.47 | 0.00 | 0.60 |
| Low vegetation | 36.20 | 15.04 | 1.42 | 50.76 | 11.36 | 1.25 | 77.79 | 5.03 | 0.49 |
| Shadow | 99.83 | 0.00 | 0.00 | 98.64 | 0.01 | 0.01 | 98.11 | 0.03 | 0.00 |

| Metric | Bayes | RCS | LMVM |
|---|---|---|---|
| A (%) | 81 | 85 | 92 |
| Weighted OA (%) | 84 | 87 | 94 |
| Weighted k | 0.68 | 0.75 | 0.89 |
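
The per-class FoM, O and C values in the table above can be derived from a confusion matrix. The sketch below assumes that FoM is the figure of merit (correct pixels divided by the union of classified and reference pixels of a class) and that O and C are omission and commission counts expressed as a share of all validation pixels. These readings are assumptions, but they reproduce the tabulated LMVM values from the LMVM confusion matrix with OA = 92%. The function name `fom_omission_commission` is illustrative.

```python
# LMVM confusion matrix (OA = 92%): rows = classified, columns = reference.
LMVM = [
    [481, 160, 11, 0, 35, 0],
    [640, 6964, 19, 0, 142, 3],
    [16, 1, 16423, 0, 1517, 8],
    [0, 0, 9, 3233, 217, 0],
    [85, 17, 84, 0, 7345, 0],
    [0, 0, 0, 0, 0, 571],
]


def fom_omission_commission(matrix):
    """Return a list of (FoM, O, C) per class, each in percent."""
    n = sum(map(sum, matrix))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    results = []
    for k in range(len(matrix)):
        hits = matrix[k][k]
        omitted = col_totals[k] - hits    # reference pixels missed
        committed = row_totals[k] - hits  # pixels wrongly assigned to class k
        fom = 100.0 * hits / (hits + omitted + committed)
        results.append((fom, 100.0 * omitted / n, 100.0 * committed / n))
    return results


per_class = fom_omission_commission(LMVM)
for fom, o, c in per_class:
    print(f"FoM = {fom:.2f}, O = {o:.2f}, C = {c:.2f}")
```

Because omission and commission both enter the denominator, FoM penalises a class such as A. glutinosa, which has many missed reference pixels, more strongly than UA alone would suggest.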

Bayes

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 2111 | 1105 | 0 | 0 | 0 | 0 | 3216 | 66% |
| C. betulus | 1017 | 13,457 | 0 | 0 | 0 | 0 | 14,474 | 93% |
| Q. robur | 0 | 48 | 32,803 | 53 | 8622 | 0 | 41,526 | 79% |
| Bare land | 0 | 0 | 0 | 7797 | 0 | 0 | 7797 | 100% |
| Low vegetation | 80 | 0 | 165 | 0 | 7455 | 0 | 7700 | 97% |
| Shadow | 0 | 0 | 0 | 0 | 0 | 3019 | 3019 | 100% |
| Total | 3208 | 14,610 | 32,968 | 7850 | 16,077 | 3019 | OA = 86% | |
| PA | 66% | 92% | 99% | 99% | 46% | 100% | k = 0.80 | |

RCS

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 611 | 347 | 0 | 0 | 0 | 0 | 958 | 64% |
| C. betulus | 1532 | 15,710 | 119 | 0 | 255 | 0 | 17,616 | 89% |
| Q. robur | 0 | 22 | 38,199 | 0 | 4146 | 0 | 42,367 | 90% |
| Bare land | 0 | 0 | 0 | 7409 | 0 | 0 | 7409 | 100% |
| Low vegetation | 135 | 0 | 262 | 0 | 12,897 | 0 | 13,294 | 97% |
| Shadow | 0 | 0 | 0 | 0 | 0 | 2778 | 2778 | 100% |
| Total | 2278 | 16,079 | 38,580 | 7409 | 17,298 | 2778 | OA = 92% | |
| PA | 27% | 98% | 99% | 100% | 75% | 100% | k = 0.88 | |

LMVM

| Class (Latin name) | A. glutinosa | C. betulus | Q. robur | Bare land | Low vegetation | Shadow | Total | UA |
|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 2097 | 225 | 82 | 0 | 0 | 0 | 2404 | 87% |
| C. betulus | 427 | 17,253 | 0 | 0 | 0 | 0 | 17,680 | 98% |
| Q. robur | 0 | 0 | 41,735 | 0 | 2520 | 0 | 44,255 | 94% |
| Bare land | 0 | 0 | 0 | 8313 | 0 | 0 | 8313 | 100% |
| Low vegetation | 170 | 90 | 0 | 0 | 14,816 | 0 | 15,076 | 98% |
| Shadow | 0 | 0 | 0 | 0 | 0 | 2931 | 2931 | 100% |
| Total | 2694 | 17,568 | 41,817 | 8313 | 17,336 | 2931 | OA = 96% | |
| PA | 78% | 98% | 100% | 100% | 85% | 100% | k = 0.94 | |

| Class (Latin name) | Bayes FoM (%) | Bayes O (%) | Bayes C (%) | RCS FoM (%) | RCS O (%) | RCS C (%) | LMVM FoM (%) | LMVM O (%) | LMVM C (%) |
|---|---|---|---|---|---|---|---|---|---|
| A. glutinosa | 48.95 | 1.41 | 1.42 | 23.28 | 1.97 | 0.41 | 69.88 | 0.66 | 0.34 |
| C. betulus | 86.11 | 1.48 | 1.31 | 87.35 | 0.44 | 2.26 | 95.88 | 0.35 | 0.47 |
| Q. robur | 78.68 | 0.21 | 11.22 | 89.36 | 0.45 | 4.94 | 94.13 | 0.09 | 2.78 |
| Bare land | 99.32 | 0.07 | 0.00 | 100.00 | 0.00 | 0.00 | 100.00 | 0.00 | 0.00 |
| Low vegetation | 45.67 | 11.09 | 0.32 | 72.88 | 5.21 | 0.47 | 84.20 | 2.78 | 0.29 |
| Shadow | 100.00 | 0.00 | 0.00 | 100.00 | 0.00 | 0.00 | 100.00 | 0.00 | 0.00 |

| Metric | Bayes | RCS | LMVM |
|---|---|---|---|
| A (%) | 86 | 92 | 96 |
| Weighted OA (%) | 89 | 94 | 97 |
| Weighted k | 0.79 | 0.88 | 0.94 |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Deur, M.; Gašparović, M.; Balenović, I. An Evaluation of Pixel- and Object-Based Tree Species Classification in Mixed Deciduous Forests Using Pansharpened Very High Spatial Resolution Satellite Imagery. Remote Sens. 2021, 13, 1868. https://doi.org/10.3390/rs13101868