Selection of Lee Filter Window Size Based on Despeckling Efficiency Prediction for Sentinel SAR Images

Abstract

1. Introduction

- Filter performance depends on many factors, including the parameter settings used. Which parameters can be varied depends on the filter type; they include the scanning window size [12,13], thresholds [17,18,25], the block size [25], parameters of variance-stabilizing transforms [17,18], and the number of blocks processed jointly in nonlocal despeckling approaches [17,20].

- Despeckling (denoising) performance depends considerably on image properties. Images with a simpler structure (containing large homogeneous regions) are usually despeckled more efficiently than images with a complex structure (containing many edges, small-sized objects, and textures) [26,27,28].

- Speckle properties also influence filter performance. Some filters are applicable only to speckle whose probability density function (PDF) is close to Gaussian, whereas other filters have no such restriction. The spatial correlation of speckle (and of noise in general) plays a key role in how efficiently it can be suppressed [29,30]. Hence, the spatial correlation of speckle should be known in advance or pre-estimated [31] and then taken into account when selecting the filter and/or its parameters.

- Filter performance can be assessed using different quantitative criteria. For SAR image despeckling, it is common to use the peak signal-to-noise ratio (PSNR) and the equivalent number of looks [11,17,18,19,20], although other criteria are applicable as well. In particular, visual quality metrics have become popular [32,33,34]. Despeckling methods can also be characterized by the accuracy of image classification after processing [35,36,37,38]. The SSIM metric [39] has become popular in remote sensing applications, but it is clearly not the best of the visual quality metrics designed to date [40,41,42].
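The first and last items above can be tried out on synthetic data. The following is a minimal NumPy sketch, not the configuration used in this study, of the local-statistics Lee filter [12] for multiplicative speckle with a configurable scanning window, scored by PSNR and the equivalent number of looks (ENL). Assumptions: speckle is simulated as unit-mean Gamma-distributed intensity noise with a hypothetical number of looks L = 4; the gain is the linear MMSE form k = var_x / var_y with var_x = (var_y − m²/L) / (1 + 1/L); local statistics use reflect padding; the PSNR peak is the dynamic range of the noise-free image; and the ENL is measured on a manually chosen homogeneous patch. The helper names `box_mean`, `lee_filter`, `psnr`, and `enl` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_mean(img: np.ndarray, win: int) -> np.ndarray:
    """Mean over a win x win window centred on each pixel (reflect padding)."""
    pad = win // 2
    padded = np.pad(img, pad, mode="reflect")
    return sliding_window_view(padded, (win, win)).mean(axis=(-2, -1))


def lee_filter(img: np.ndarray, win: int, looks: float) -> np.ndarray:
    """Local-statistics Lee filter for multiplicative speckle.

    Assumes intensity speckle with unit mean and relative variance 1/looks.
    """
    if win < 3 or win % 2 == 0:
        raise ValueError("scanning window size must be an odd integer >= 3")
    sigma2 = 1.0 / looks                      # relative speckle variance
    mean = box_mean(img, win)                 # local mean
    var = np.maximum(box_mean(img ** 2, win) - mean ** 2, 1e-12)  # local variance
    # LMMSE gain k = var_x / var_y, var_x = (var_y - mean^2 * sigma2) / (1 + sigma2);
    # negative estimates (flat areas) are clipped to 0, i.e. output = local mean.
    gain = np.clip((var - mean ** 2 * sigma2) / ((1.0 + sigma2) * var), 0.0, 1.0)
    return mean + gain * (img - mean)


def psnr(ref: np.ndarray, test: np.ndarray) -> float:
    """PSNR in dB; the peak is the dynamic range of `ref` (an assumption)."""
    mse = np.mean((ref - test) ** 2)
    peak = ref.max() - ref.min()
    return float(10.0 * np.log10(peak ** 2 / mse))


def enl(region: np.ndarray) -> float:
    """Equivalent number of looks of a homogeneous region: mean^2 / variance."""
    return float(region.mean() ** 2 / region.var())


# Synthetic piecewise-constant scene: two homogeneous halves and a bright square.
clean = np.full((64, 64), 50.0)
clean[:, 32:] = 150.0
clean[24:40, 8:24] = 250.0

looks = 4  # hypothetical number of looks
rng = np.random.default_rng(0)
noisy = clean * rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)

flat = (slice(44, 60), slice(40, 60))  # patch inside the 150-valued half
print(f"noisy: PSNR={psnr(clean, noisy):.2f} dB, ENL={enl(noisy[flat]):.1f}")
for win in (5, 7, 9, 11):
    out = lee_filter(noisy, win, looks)
    print(f"{win}x{win}: PSNR={psnr(clean, out):.2f} dB, ENL={enl(out[flat]):.1f}")
```

On such a scene, larger windows usually raise the ENL in homogeneous areas while smearing more near edges and the small bright square, which is one reason the best window size is image dependent.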
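As a simple illustration of pre-estimating the spatial correlation of speckle, the sketch below computes the normalized sample autocorrelation of relative intensity fluctuations inside a region assumed to be homogeneous. It is a basic textbook estimator, not the blind nonparametric method of [31]. The function name `speckle_acf`, the lag range, the Gamma speckle with a hypothetical L = 4, and the overlapping 2 × 2 averaging used to simulate correlated speckle are all assumptions made for the demonstration.

```python
import numpy as np


def speckle_acf(region: np.ndarray, max_lag: int = 2) -> np.ndarray:
    """Normalized autocorrelation of relative fluctuations y / mean(y) - 1.

    acf[dy, dx] is the correlation at vertical lag dy and horizontal lag dx.
    Assumes `region` is homogeneous, so fluctuations are due to speckle only.
    """
    r = region / region.mean() - 1.0  # zero-mean relative fluctuations
    h, w = r.shape
    power = np.mean(r * r)
    acf = np.empty((max_lag + 1, max_lag + 1))
    for dy in range(max_lag + 1):
        for dx in range(max_lag + 1):
            acf[dy, dx] = np.mean(r[: h - dy, : w - dx] * r[dy:, dx:]) / power
    return acf


rng = np.random.default_rng(1)
looks = 4       # hypothetical number of looks
level = 100.0   # constant backscatter of the homogeneous area

# Spatially uncorrelated speckle.
white = rng.gamma(looks, 1.0 / looks, size=(256, 256))
# Correlated speckle simulated by overlapping 2 x 2 averaging (an assumption).
g = rng.gamma(looks, 1.0 / looks, size=(257, 257))
corr = (g[:-1, :-1] + g[1:, :-1] + g[:-1, 1:] + g[1:, 1:]) / 4.0

acf_white = speckle_acf(level * white)
acf_corr = speckle_acf(level * corr)
print(np.round(acf_white, 3))
print(np.round(acf_corr, 3))
```

Off-origin values near zero indicate spatially uncorrelated speckle; values around 0.5 at lag 1, as expected for the overlapping 2 × 2 averaging used here, indicate correlation that the filter or its parameters should account for.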

2. Image/Noise Model and Filter Efficiency Criteria

$\ldots + \mathrm{PSNR\text{-}HVS\text{-}M}_{9} + \mathrm{PSNR\text{-}HVS\text{-}M}_{11})/4,$

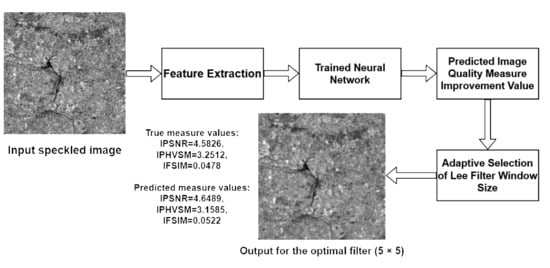

3. Filtering Efficiency Prediction Using Trained Neural Network

3.1. Proposed Approach

3.2. Neural Network Input Parameters

4. NN Training Results

5. Adaptive Selection of Window Size

- To predict only one metric, e.g., IPSNR, for all possible scanning window sizes and to choose the window size for which the predicted metric is the largest.

- To jointly analyze two or three metrics (e.g., IPSNR and IPHVSM, or IPSNR, IPHVSM, and IFSIM) and make a decision (many algorithms could probably be used for this).

- To obtain three decisions by analyzing IPSNR, IPHVSM, and IFSIM separately, as in the first item, and then to apply the majority vote or some other decision rule.
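The three decision options listed above can be sketched in a few lines, assuming the trained network has already produced a predicted value of each metric for every candidate window size. The function names, the rank-sum rule used for the second (joint) option, and the tie-breaking toward the smaller window are illustrative assumptions, and the predicted values are made up for the example rather than taken from this study.

```python
from collections import Counter
from typing import Dict, Mapping

Prediction = Mapping[int, float]  # window size -> predicted metric improvement


def best_window(predicted: Prediction) -> int:
    """Option 1: the window size with the largest predicted value of one metric."""
    return max(predicted, key=predicted.get)


def joint_rank(per_metric: Mapping[str, Prediction]) -> int:
    """Option 2 (one hypothetical rule): smallest sum of per-metric ranks.

    Ranks are scale-free, so dB-valued and FSIM-valued predictions can be mixed.
    Ties go to the smaller window.
    """
    totals: Dict[int, int] = {}
    for predicted in per_metric.values():
        order = sorted(predicted, key=predicted.get, reverse=True)  # best first
        for rank, win in enumerate(order):
            totals[win] = totals.get(win, 0) + rank
    lowest = min(totals.values())
    return min(win for win, total in totals.items() if total == lowest)


def majority_vote(per_metric: Mapping[str, Prediction]) -> int:
    """Option 3: separate decision per metric, then a majority vote.

    Ties go to the smaller window (an assumption).
    """
    votes = Counter(best_window(predicted) for predicted in per_metric.values())
    top = max(votes.values())
    return min(win for win, n in votes.items() if n == top)


# Made-up predictions for one image (not results from this study).
predictions = {
    "IPSNR": {5: 4.1, 7: 4.6, 9: 4.8, 11: 4.7},
    "IPHVSM": {5: 2.9, 7: 3.2, 9: 3.1, 11: 2.8},
    "IFSIM": {5: 0.031, 7: 0.035, 9: 0.033, 11: 0.030},
}
print(best_window(predictions["IPSNR"]))  # -> 9 (option 1, IPSNR only)
print(joint_rank(predictions))            # -> 7
print(majority_vote(predictions))         # -> 7
```

Rank sums ignore how large the predicted differences are; a weighted sum of normalized predictions would be an alternative joint rule that keeps that information.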

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Schowengerdt, R.A. Remote Sensing: Models and Methods for Image Processing, 3rd ed.; Academic Press: San Diego, CA, USA, 2007.

- Lee, J.S.; Pottier, E. Polarimetric Radar Imaging: From Basics to Applications; CRC Press: Boca Raton, FL, USA, 2009; p. 422.

- Kussul, N.; Lemoine, G.; Gallego, F.J.; Skakun, S.; Lavreniuk, M.; Shelestov, A. Parcel-Based Crop Classification in Ukraine Using Landsat-8 Data and Sentinel-1A Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 2500–2508.

- Mullissa, A.G.; Persello, C.; Tolpekin, V. Fully Convolutional Networks for Multi-Temporal SAR Image Classification. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 3338–6635.

- Joshi, N.; Baumann, M.; Ehammer, A.; Fensholt, R.; Grogan, K.; Hostert, P.; Jepsen, M.R.; Kuemmerle, T.; Meyfroidt, P.; Mitchard, E.T.A.; et al. A Review of the Application of Optical and Radar Remote Sensing Data Fusion to Land Use Mapping and Monitoring. Remote Sens. 2016, 8, 70.

- Ferrentino, E.; Buono, A.; Nunziata, F.; Marino, A.; Migliaccio, M. On the Use of Multipolarization Satellite SAR Data for Coastline Extraction in Harsh Coastal Environments: The Case of Solway Firth. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 249–257.

- Nascimento, A.; Frery, A.; Cintra, R. Detecting Changes in Fully Polarimetric SAR Imagery with Statistical Information Theory. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1380–1392.

- Deledalle, C.; Denis, L.; Tabti, S.; Tupin, F. MuLoG, or How to Apply Gaussian Denoisers to Multi-Channel SAR Speckle Reduction? IEEE Trans. Image Process. 2017, 26, 4389–4403.

- Arienzo, A.; Argenti, F.; Alparone, L.; Gherardelli, M. Accurate Despeckling and Estimation of Polarimetric Features by Means of a Spatial Decorrelation of the Noise in Complex PolSAR Data. Remote Sens. 2020, 12, 331.

- Touzi, R. Review of Speckle Filtering in the Context of Estimation Theory. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2392–2404.

- Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; SciTech Publishing: Raleigh, NC, USA, 2004.

- Lee, J. Digital Image Enhancement and Noise Filtering by Use of Local Statistics. IEEE Trans. Pattern Anal. Mach. Intell. 1980, PAMI-2, 165–168.

- Frost, V.; Stiles, J.; Shanmugan, K.; Holtzman, J. A Model for Radar Images and Its Application to Adaptive Digital Filtering of Multiplicative Noise. IEEE Trans. Pattern Anal. Mach. Intell. 1982, PAMI-4, 157–166.

- Argenti, F.; Lapini, A.; Bianchi, T.; Alparone, L. A Tutorial on Speckle Reduction in Synthetic Aperture Radar Images. IEEE Geosci. Remote Sens. Mag. 2013, 1, 6–35.

- Kupidura, P. Comparison of Filters Dedicated to Speckle Suppression in SAR Images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, XLI–B7, 269–276.

- Lee, J.; Grunes, M.; Schuler, D.; Pottier, E.; Ferro-Famil, L. Scattering-model-based speckle filtering of polarimetric SAR data. IEEE Trans. Geosci. Remote Sens. 2006, 44, 176–187.

- Cozzolino, D.; Parrilli, S.; Scarpa, G.; Poggi, G.; Verdoliva, L. Fast Adaptive Nonlocal SAR Despeckling. IEEE Geosci. Remote Sens. Lett. 2014, 11, 524–528.

- Solbo, S.; Eltoft, T. A Stationary Wavelet-Domain Wiener Filter for Correlated Speckle. IEEE Trans. Geosci. Remote Sens. 2008, 46, 1219–1230.

- Lee, J.S.; Wen, J.H.; Ainsworth, T.; Chen, K.S.; Chen, A. Improved Sigma Filter for Speckle Filtering of SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 202–213.

- Parrilli, S.; Poderico, M.; Angelino, C.; Verdoliva, L. A nonlocal SAR image denoising algorithm based on LLMMSE wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 2012, 50, 606–616.

- Sun, Z.; Zhang, Z.; Chen, Y.; Liu, S.; Song, Y. Frost Filtering Algorithm of SAR Images with Adaptive Windowing and Adaptive Tuning Factor. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1097–1101.

- Wu, B.; Zhou, S.; Ji, K. A novel method of corner detector for SAR images based on Bilateral Filter. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 2734–2737.

- Gupta, A.; Tripathi, A.; Bhateja, V. Despeckling of SAR Images via an Improved Anisotropic Diffusion Algorithm. Adv. Intell. Syst. Comput. 2013, 747–754.

- Fracastoro, G.; Magli, E.; Poggi, G.; Scarpa, G.; Valsesia, D.; Verdoliva, L. Deep learning methods for SAR image despeckling: Trends and perspectives. arXiv 2020, arXiv:2012.05508.

- Tsymbal, O.; Lukin, V.; Ponomarenko, N.; Zelensky, A.; Egiazarian, K.; Astola, J. Three-state locally adaptive texture preserving filter for radar and optical image processing. EURASIP J. Appl. Signal Process. 2005, 2005, 1185–1204.

- Chatterjee, P.; Milanfar, P. Is Denoising Dead? IEEE Trans. Image Process. 2010, 19, 895–911.

- Rubel, O.; Lukin, V.; de Medeiros, F. Prediction of Despeckling Efficiency of DCT-based filters Applied to SAR Images. In Proceedings of the International Conference on Distributed Computing in Sensor Systems, Fortaleza, Brazil, 10–12 June 2015; pp. 159–168.

- Rubel, O.; Lukin, V.; Rubel, A.; Egiazarian, K. NN-Based Prediction of Sentinel-1 SAR Image Filtering Efficiency. Geosciences 2019, 9, 290.

- Rubel, O.; Lukin, V.; Egiazarian, K. Additive Spatially Correlated Noise Suppression by Robust Block Matching and Adaptive 3D Filtering. J. Imaging Sci. Technol. 2018, 62, 60401–1.

- Goossens, B.; Pizurica, A.; Philips, W. Removal of Correlated Noise by Modeling the Signal of Interest in the Wavelet Domain. IEEE Trans. Image Process. 2009, 18, 1153–1165.

- Colom, M.; Lebrun, M.; Buades, A.; Morel, J. Nonparametric Multiscale Blind Estimation of Intensity-Frequency-Dependent Noise. IEEE Trans. Image Process. 2015, 24, 3162–3175.

- Dellepiane, S.; Angiati, E. Quality assessment of despeckled SAR images. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Vancouver, BC, Canada, 24–29 July 2011; pp. 3803–3806.

- Rubel, O.; Rubel, A.; Lukin, V.; Carli, M.; Egiazarian, K. Blind Prediction of Original Image Quality for Sentinel SAR Data. In Proceedings of the 2019 8th European Workshop on Visual Information Processing (EUVIP), Roma, Italy, 28–31 October 2019; pp. 105–110.

- Wang, P.; Patel, V. Generating high quality visible images from SAR images using CNNs. In Proceedings of the IEEE Radar Conference (RadarConf18), Oklahoma City, OK, USA, 23–27 April 2018; pp. 570–575.

- Lukin, V.; Abramov, S.; Krivenko, S.; Kurekin, A.; Pogrebnyak, O. Analysis of classification accuracy for pre-filtered multichannel remote sensing data. J. Expert Syst. Appl. 2013, 40, 6400–6411.

- Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices; CRC Press: Boca Raton, FL, USA, 1999; ISBN 978-0-87371-986-5.

- Kumar, T.G.; Murugan, D.; Rajalakshmi, K.; Manish, T.I. Image enhancement and performance evaluation using various filters for IRS-P6 Satellite Liss IV remotely sensed data. Geofizika 2015, 179–189.

- Yuan, T.; Zheng, X.; Hu, X.; Zhou, W.; Wang, W. A Method for the Evaluation of Image Quality According to the Recognition Effectiveness of Objects in the Optical Remote Sensing Image Using Machine Learning Algorithm. PLoS ONE 2014, 9, e86528.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Lin, W.; Jay Kuo, C.C. Perceptual visual quality metrics: A survey. J. Vis. Commun. Image Represent. 2011, 22, 297–312.

- Chandler, D. Seven Challenges in Image Quality Assessment: Past, Present, and Future Research. ISRN Signal Process. 2013, 2013, 1–53.

- Bosse, S.; Maniry, D.; Muller, K.; Wiegand, T.; Samek, W. Deep Neural Networks for No-Reference and Full-Reference Image Quality Assessment. IEEE Trans. Image Process. 2018, 27, 206–219.

- European Space Agency. Earth Online. Available online: https://earth.esa.int/documents/653194/656796/Speckle_Filtering.pdf (accessed on 10 March 2021).

- Lee, J.; Ainsworth, T.; Wang, Y. A review of polarimetric SAR speckle filtering. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5303–5306.

- Milanfar, P. A Tour of Modern Image Filtering: New Insights and Methods, Both Practical and Theoretical. IEEE Signal Process. Mag. 2013, 30, 106–128.

- Zemliachenko, A.; Lukin, V.; Djurovic, I.; Vozel, B. On potential to improve DCT-based denoising with local threshold. In Proceedings of the 2018 7th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 10–14 June 2018; pp. 1–4.

- Abramov, S.; Lukin, V.; Rubel, O.; Egiazarian, K. Prediction of performance of 2D DCT-based filter and adaptive selection of its parameters. In Proceedings of the Electronic Imaging 2020, Burlingame, CA, USA, 26–30 January 2020; pp. 319-1–319-7.

- Rubel, O.; Abramov, S.; Lukin, V.; Egiazarian, K.; Vozel, B.; Pogrebnyak, A. Is Texture Denoising Efficiency Predictable? Int. J. Pattern Recognit. Artif. Intell. 2018, 32, 1860005.

- Rubel, O.; Lukin, V.; Rubel, A.; Egiazarian, K. Prediction of Lee filter performance for Sentinel-1 SAR images. In Proceedings of the Electronic Imaging 2020, Burlingame, CA, USA, 26–30 January 2020; pp. 371-1–371-7.

- Lukin, V.; Rubel, O.; Kozhemiakin, R.; Abramov, S.; Shelestov, A.; Lavreniuk, M.; Meretsky, M.; Vozel, B.; Chehdi, K. Despeckling of Multitemporal Sentinel SAR Images and Its Impact on Agricultural Area Classification. Recent Adv. Appl. Remote Sens. 2018.

- Abramova, V.; Abramov, S.; Lukin, V.; Egiazarian, K. Blind Estimation of Speckle Characteristics for Sentinel Polarimetric Radar Images. In Proceedings of the IEEE Microwaves, Radar and Remote Sensing Symposium (MRRS), Kiev, Ukraine, 29–31 August 2017; pp. 263–266.

- Ponomarenko, N.; Silvestri, F.; Egiazarian, K.; Carli, M.; Astola, J.; Lukin, V. On between-coefficient contrast masking of DCT basis functions. In Proceedings of the 3rd International Workshop on Video Processing and Quality Metrics for Consumer Electronics (VPQM), Scottsdale, AZ, USA, 25–26 January 2007; p. 4.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Ponomarenko, N.; Lukin, V.; Astola, J.; Egiazarian, K. Analysis of HVS-Metrics’ Properties Using Color Image Database TID2013. Adv. Concepts Intell. Vis. Syst. 2015, 613–624.

- Cameron, A.C.; Windmeijer, F.A.G. An R-squared measure of goodness of fit for some common nonlinear regression models. J. Econom. 1997, 77, 329–342.

- Xian, G.; Shi, H.; Anderson, C.; Wu, Z. Assessment of the Impacts of Image Signal-to-Noise Ratios in Impervious Surface Mapping. Remote Sens. 2019, 11, 2603.

| Predicted Metric | Scanning Window Size | RMSE | R² |
|---|---|---|---|
| IPSNR | 5 × 5 | 0.234 | 0.976 |
| IPSNR | 7 × 7 | 0.289 | 0.986 |
| IPSNR | 9 × 9 | 0.319 | 0.989 |
| IPSNR | 11 × 11 | 0.355 | 0.990 |
| IPHVSM | 5 × 5 | 0.208 | 0.966 |
| IPHVSM | 7 × 7 | 0.303 | 0.983 |
| IPHVSM | 9 × 9 | 0.351 | 0.988 |
| IPHVSM | 11 × 11 | 0.396 | 0.989 |
| IFSIM | 5 × 5 | 0.007 | 0.984 |
| IFSIM | 7 × 7 | 0.011 | 0.987 |
| IFSIM | 9 × 9 | 0.016 | 0.986 |
| IFSIM | 11 × 11 | 0.019 | 0.985 |

| Predicted Metric | Scanning Window Size | RMSE | R² |
|---|---|---|---|
| IPSNR | 5 × 5 | 0.263 | 0.966 |
| IPSNR | 7 × 7 | 0.328 | 0.98 |
| IPSNR | 9 × 9 | 0.361 | 0.985 |
| IPSNR | 11 × 11 | 0.399 | 0.986 |
| IPHVSM | 5 × 5 | 0.229 | 0.951 |
| IPHVSM | 7 × 7 | 0.338 | 0.975 |
| IPHVSM | 9 × 9 | 0.396 | 0.983 |
| IPHVSM | 11 × 11 | 0.446 | 0.985 |
| IFSIM | 5 × 5 | 0.008 | 0.981 |
| IFSIM | 7 × 7 | 0.013 | 0.983 |
| IFSIM | 9 × 9 | 0.017 | 0.982 |
| IFSIM | 11 × 11 | 0.021 | 0.980 |

| Predicted Metric | Scanning Window Size | RMSE | R² |
|---|---|---|---|
| IPSNR | 5 × 5 | 0.326 | 0.946 |
| IPSNR | 7 × 7 | 0.412 | 0.967 |
| IPSNR | 9 × 9 | 0.466 | 0.974 |
| IPSNR | 11 × 11 | 0.512 | 0.977 |
| IPHVSM | 5 × 5 | 0.279 | 0.929 |
| IPHVSM | 7 × 7 | 0.431 | 0.961 |
| IPHVSM | 9 × 9 | 0.513 | 0.971 |
| IPHVSM | 11 × 11 | 0.573 | 0.974 |
| IFSIM | 5 × 5 | 0.01 | 0.969 |
| IFSIM | 7 × 7 | 0.016 | 0.973 |
| IFSIM | 9 × 9 | 0.023 | 0.969 |
| IFSIM | 11 × 11 | 0.028 | 0.964 |
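The RMSE values and the fourth column in the tables above summarize how closely the NN predictions match the true metric values. A minimal sketch of how such goodness-of-fit figures can be computed is given below, assuming the fourth column is the coefficient of determination in its common form R² = 1 − SS_res / SS_tot (other R² variants exist for nonlinear regression models, as discussed by Cameron and Windmeijer). The function names and the sample numbers are hypothetical.

```python
import numpy as np


def rmse(true, pred) -> float:
    """Root-mean-square error between true and predicted metric values."""
    true, pred = np.asarray(true, dtype=float), np.asarray(pred, dtype=float)
    return float(np.sqrt(np.mean((true - pred) ** 2)))


def r_squared(true, pred) -> float:
    """Coefficient of determination in its common form 1 - SS_res / SS_tot."""
    true, pred = np.asarray(true, dtype=float), np.asarray(pred, dtype=float)
    ss_res = np.sum((true - pred) ** 2)            # residual sum of squares
    ss_tot = np.sum((true - true.mean()) ** 2)     # total sum of squares
    return float(1.0 - ss_res / ss_tot)


# Hypothetical true vs. predicted IPSNR values (dB) for four test images.
true_ipsnr = [1.0, 2.0, 3.0, 4.0]
pred_ipsnr = [1.1, 1.9, 3.2, 3.8]
print(round(rmse(true_ipsnr, pred_ipsnr), 3))       # -> 0.158
print(round(r_squared(true_ipsnr, pred_ipsnr), 3))  # -> 0.98
```

Because RMSE is expressed in the units of the predicted metric (dB for IPSNR and IPHVSM, unitless for IFSIM), it is only comparable within one metric, whereas R² is dimensionless.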

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rubel, O.; Lukin, V.; Rubel, A.; Egiazarian, K. Selection of Lee Filter Window Size Based on Despeckling Efficiency Prediction for Sentinel SAR Images. Remote Sens. 2021, 13, 1887. https://doi.org/10.3390/rs13101887

