Gradient Boosting Machine and Object-Based CNN for Land Cover Classification

Abstract

1. Introduction

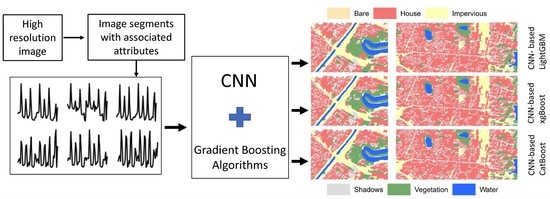

2. Data and Methods

2.1. Study Area and Training Data Preparation

2.2. Gradient Boosting Classifiers

2.3. Object-Based CNN with GBM Algorithms

2.4. Accuracy Assessment

3. Results and Discussion

4. Future Remarks

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Image | Bands | Spectral Range (µm) | Spatial Resolution (m) |
|---|---|---|---|
| Original image | Blue (B) | 0.455–0.525 | 6.0 |
| | Green (G) | 0.530–0.590 | 6.0 |
| | Red (R) | 0.625–0.695 | 6.0 |
| | Near-Infrared (NIR) | 0.760–0.890 | 6.0 |
| | Panchromatic | 0.450–0.745 | 1.5 |
| Fusion | (B, G, R, NIR) | | 1.5 |

| Object Features | No. of Features | Description |
|---|---|---|
| Min pixel value | 6 | Min pixel values for 6 bands |
| Max pixel value | 6 | Max pixel values for 6 bands |
| Mean pixel value | 6 | Mean pixel values for 6 bands |
| Standard deviation | 6 | Standard deviation of pixel values for 6 bands |
| Min_PP | 6 | Min pure pixel |
| Max_PP | 6 | Max pure pixel |
| Mean_PP | 6 | Mean pure pixel |
| Standard deviation_PP | 6 | Standard deviation of pure pixel |
| Circular | 1 | Circular |
| Compactness | 1 | Compactness |
| Elongation | 1 | Elongation |
| Rectangular | 1 | Rectangular |
| Total | 52 | |
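The per-band statistics in the first four rows of the table above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `object_band_stats`, the input layout (a list of per-pixel band tuples), and the choice of population standard deviation are all assumptions. The pure-pixel (`_PP`) and shape features are not covered because their definitions are not given here.

```python
from statistics import mean, pstdev


def object_band_stats(pixels):
    """Return per-band min, max, mean and standard deviation for one image object.

    `pixels` is a list of equal-length tuples, one tuple of band values per
    pixel inside the object (a hypothetical input layout).
    """
    bands = list(zip(*pixels))  # transpose: one sequence of values per band
    features = {}
    for i, values in enumerate(bands):
        features[f"min_b{i}"] = min(values)
        features[f"max_b{i}"] = max(values)
        features[f"mean_b{i}"] = mean(values)
        # Population standard deviation is an assumption; the sample
        # standard deviation would be an equally plausible reading.
        features[f"std_b{i}"] = pstdev(values)
    return features


# Toy object with three pixels and two bands (made-up values).
toy_object = [(10, 200), (20, 220), (30, 240)]
stats = object_band_stats(toy_object)
```

With six bands per pixel, the same function would return 24 values, which corresponds to the four statistics times six bands listed in the table.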

| Training/Validation/Testing Samples | Sample Numbers (80% Randomly Selected for Training, 20% Used for Testing) | Samples for Visualization | Sample Numbers |
|---|---|---|---|
| Bare soil | 890 (images) | Bare soil | 71 |
| Impervious surface | 4716 | Impervious surface | 376 |
| Shadows | 7786 | Shadows | 620 |
| Vegetation | 6502 | Vegetation | 518 |
| Water | 330 | Water | 26 |
| House | 30,010 | House | 2,390 |
| Total | 50,234 | Total | 4,000 |
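The 80/20 random split named in the table header could look like the sketch below. It assumes a plain (non-stratified) shuffle over sample indices and a fixed seed for repeatability; whether the original split was stratified by class is not stated, and `split_indices` is a hypothetical helper name.

```python
import random


def split_indices(n_samples, train_fraction=0.8, seed=0):
    """Shuffle sample indices and cut them into train and test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded so the split is repeatable
    n_train = int(n_samples * train_fraction)
    return indices[:n_train], indices[n_train:]


# 50,234 is the total number of labelled samples in the table above.
train_idx, test_idx = split_indices(50234)
```

The two index lists are disjoint and together cover every sample, so they can be used directly to select rows from an image array or a feature table.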

| Metrics (CNN with Machine Learning Classifiers) | FC | XGBoost | LightGBM | CatBoost |
|---|---|---|---|---|
| OA | 0.8868 | 0.8905 | 0.8956 | 0.8956 |
| Loss | 0.4623 | 0.4601 | 0.4553 | 0.4523 |
| Error | 0.1431 | 0.1404 | 0.1394 | 0.1393 |

| Metrics (Classifiers with Tabulated Data) | RF | SVM | XGBoost | LightGBM | CatBoost |
|---|---|---|---|---|---|
| OA | 0.8401 | 0.8268 | 0.8499 | 0.8533 | 0.8414 |
| RMSE | 0.1599 | 0.1625 | 0.1501 | 0.1466 | 0.1561 |

| CNN-LightGBM: Reference (rows) vs. Classified (columns) | Vege | House | Shad | Imper | Water | Bare | PA |
|---|---|---|---|---|---|---|---|
| Vege | 571 | 56 | 70 | 12 | 1 | 0 | 0.8046 |
| House | 67 | 6646 | 176 | 65 | 0 | 2 | 0.9554 |
| Shad | 122 | 144 | 1256 | 4 | 5 | 0 | 0.8200 |
| Imper | 64 | 142 | 30 | 422 | 0 | 2 | 0.6386 |
| Water | 1 | 1 | 6 | 0 | 24 | 0 | 0.7442 |
| Bare | 10 | 17 | 1 | 50 | 0 | 79 | 0.5071 |
| UA | 0.6844 | 0.9484 | 0.8165 | 0.7627 | 0.8000 | 0.9469 | OA = 0.8956 |

| CNN-FC: Reference (rows) vs. Classified (columns) | Vege | House | Shad | Imper | Water | Bare | PA |
|---|---|---|---|---|---|---|---|
| Vege | 563 | 62 | 59 | 24 | 0 | 1 | 0.7941 |
| House | 79 | 6573 | 200 | 100 | 0 | 4 | 0.9448 |
| Shad | 139 | 126 | 1234 | 24 | 7 | 0 | 0.8060 |
| Imper | 49 | 146 | 27 | 438 | 0 | 0 | 0.6633 |
| Water | 1 | 1 | 5 | 1 | 24 | 0 | 0.7442 |
| Bare | 10 | 24 | 0 | 46 | 0 | 76 | 0.4882 |
| UA | 0.6696 | 0.9482 | 0.8091 | 0.6912 | 0.7619 | 0.9279 | OA = 0.8868 |

| CNN-CatBoost: Reference (rows) vs. Classified (columns) | Vege | House | Shad | Imper | Water | Bare | PA |
|---|---|---|---|---|---|---|---|
| Vege | 571 | 51 | 69 | 18 | 1 | 0 | 0.8046 |
| House | 65 | 6649 | 170 | 67 | 0 | 5 | 0.9558 |
| Shad | 113 | 136 | 1273 | 7 | 3 | 0 | 0.8312 |
| Imper | 62 | 169 | 27 | 396 | 0 | 6 | 0.6004 |
| Water | 1 | 1 | 7 | 0 | 23 | 0 | 0.7209 |
| Bare | 8 | 30 | 1 | 33 | 0 | 84 | 0.5403 |
| UA | 0.6968 | 0.9448 | 0.8228 | 0.7621 | 0.8611 | 0.8837 | OA = 0.8956 |

| CNN-XGBoost: Reference (rows) vs. Classified (columns) | Vege | House | Shad | Imper | Water | Bare | PA |
|---|---|---|---|---|---|---|---|
| Vege | 564 | 46 | 73 | 22 | 1 | 3 | 0.7952 |
| House | 68 | 6618 | 173 | 92 | 0 | 6 | 0.9512 |
| Shad | 117 | 130 | 1266 | 13 | 4 | 1 | 0.8268 |
| Imper | 61 | 176 | 29 | 388 | 0 | 7 | 0.5881 |
| Water | 1 | 1 | 7 | 0 | 22 | 0 | 0.6977 |
| Bare | 9 | 23 | 1 | 36 | 0 | 87 | 0.5592 |
| UA | 0.6881 | 0.9462 | 0.8169 | 0.7043 | 0.8108 | 0.8429 | OA = 0.8905 |
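As a worked illustration of how overall accuracy (OA), producer's accuracy (PA) and user's accuracy (UA) follow from a confusion matrix, the sketch below applies the standard definitions to the CNN-LightGBM counts above, taking rows as reference classes and columns as classified classes. The function name `accuracy_metrics` is hypothetical. Values recomputed this way agree with the reported OA and with most per-class figures, but need not reproduce every published cell exactly.

```python
CLASSES = ["Vege", "House", "Shad", "Imper", "Water", "Bare"]

# CNN-LightGBM counts copied from the table above:
# rows are reference classes, columns are classified classes.
CNN_LIGHTGBM = [
    [571, 56, 70, 12, 1, 0],
    [67, 6646, 176, 65, 0, 2],
    [122, 144, 1256, 4, 5, 0],
    [64, 142, 30, 422, 0, 2],
    [1, 1, 6, 0, 24, 0],
    [10, 17, 1, 50, 0, 79],
]


def accuracy_metrics(matrix):
    """Return (OA, PA per class, UA per class) for a square confusion matrix."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    row_totals = [sum(row) for row in matrix]  # reference totals
    col_totals = [sum(matrix[r][c] for r in range(n)) for c in range(n)]  # classified totals
    pa = [matrix[i][i] / row_totals[i] for i in range(n)]  # producer's accuracy
    ua = [matrix[i][i] / col_totals[i] for i in range(n)]  # user's accuracy
    return correct / total, pa, ua


oa, pa, ua = accuracy_metrics(CNN_LIGHTGBM)
for name, p, u in zip(CLASSES, pa, ua):
    print(f"{name}: PA={p:.4f} UA={u:.4f}")
print(f"OA={oa:.4f}")
```

PA divides each diagonal count by its reference (row) total and so reflects omission errors, while UA divides by the classified (column) total and reflects commission errors.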

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bui, Q.-T.; Chou, T.-Y.; Hoang, T.-V.; Fang, Y.-M.; Mu, C.-Y.; Huang, P.-H.; Pham, V.-D.; Nguyen, Q.-H.; Anh, D.T.N.; Pham, V.-M.; et al. Gradient Boosting Machine and Object-Based CNN for Land Cover Classification. Remote Sens. 2021, 13, 2709. https://doi.org/10.3390/rs13142709

Bui, Q.-T., Chou, T.-Y., Hoang, T.-V., Fang, Y.-M., Mu, C.-Y., Huang, P.-H., Pham, V.-D., Nguyen, Q.-H., Anh, D. T. N., Pham, V.-M., & Meadows, M. E. (2021). Gradient Boosting Machine and Object-Based CNN for Land Cover Classification. Remote Sensing, 13(14), 2709. https://doi.org/10.3390/rs13142709