Lithology Classification Using TASI Thermal Infrared Hyperspectral Data with Convolutional Neural Networks

Abstract

1. Introduction

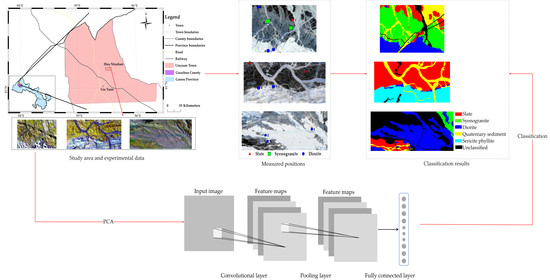

2. Data Description

2.1. Study Area

2.2. Data Preprocessing

2.2.1. Atmospheric Correction

2.2.2. Temperature Emissivity Separation

2.3. Reference Map Generation

2.3.1. MNF Transformation

2.3.2. Field Surveying Data

2.3.3. Confirmation of Lithology Type

3. Convolutional Neural Networks

3.1. One-Dimensional CNN

3.2. Two-Dimensional CNN

3.3. Three-Dimensional CNN

4. Experimental Results and Analysis

4.1. Classification Results

4.1.1. Liuyuan 1

4.1.2. Liuyuan 2

4.1.3. Liuyuan 3

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Emissivity Image | Measured Positions | Lithologic Types | Compositions | Field Measured Spectrum | Indoor Measured Spectrum |
|---|---|---|---|---|---|
| Liuyuan 1 | 1 | Slate detritus | — | LY1_1 | LY1_YP_1 |
| Liuyuan 1 | 2 | Syenogranite | — | LY1_2 | — |
| Liuyuan 1 | 3 | Syenogranite detritus | Orthoclase (38.7%) + quartz (27.1%) + biotite (18.9%) + monoclinic pyroxene (15.3%) | LY1_3 | LY1_YP_2 |
| Liuyuan 1 | 4 | Syenogranite detritus | — | LY1_4 | LY1_YP_3 |
| Liuyuan 1 | 5 | Syenogranite | — | LY1_5 | — |
| Liuyuan 1 | 6 | Diorite | — | — | LY1_YP_4 |
| Liuyuan 1 | 7 | Diorite | Albite (32.1%) + hornblende (17.0%) + diopside (17%) + quartz (15.3%) + montmorillonite (10.5%) + biotite (8.1%) | LY1_6 | LY1_YP_5 |
| Liuyuan 1 | 8 | Diorite | Chlorite (41.6%) + albite (30.1%) + quartz (13.2%) + biotite (7.6%) + diopside (7.4%) | LY1_7 | LY1_YP_6 |
| Liuyuan 2 | 1 | Slate | Quartz (49.7%) + anorthite (36.9%) + seraphinite (14.7%) | LY2_1 | LY2_YP_1 |
| Liuyuan 2 | 2 | Slate | — | — | LY2_YP_2 |
| Liuyuan 2 | 3 | Diorite | — | — | LY2_YP_3 |
| Liuyuan 2 | 4 | Diorite | Albite (51.3%) + quartz (24.6%) + biotite (24.1%) | — | LY2_YP_4 |
| Liuyuan 3 | 1 | Diorite | Orthoclase (51.9%) + magnesia hornblende (39.3%) + albite (14.7%) + biotite (13.9%) + pyroxene (13.1%) + quartz (9.1%) | — | LY3_YP_1 |
| Liuyuan 3 | 2 | Diorite | — | — | LY3_YP_2 |
| Liuyuan 3 | 3 | Diorite | Magnesia hornblende (48%) + albite (40.2%) + bixbyite (6.0%) + quartz (5.8%) | LY3_1 | LY3_YP_3 |

| Emissivity Image | Lithologic Types | Classification Reference Map | Category Label |
|---|---|---|---|
| Liuyuan 1 | Slate | | |
| Liuyuan 1 | Syenogranite | | |
| Liuyuan 1 | Diorite | | |
| Liuyuan 1 | Quaternary sediment | | |
| Liuyuan 1 | Unclassified | | |
| Liuyuan 2 | Slate | | |
| Liuyuan 2 | Diorite | | |
| Liuyuan 2 | Quaternary sediment | | |
| Liuyuan 2 | Sericite phyllite | | |
| Liuyuan 2 | Unclassified | | |
| Liuyuan 3 | Slate | | |
| Liuyuan 3 | Syenogranite | | |
| Liuyuan 3 | Diorite | | |
| Liuyuan 3 | Quaternary sediment | | |
| Liuyuan 3 | Unclassified | | |

| Lithology | SAM | SID | FCLSU | SVM | RF | NN | 1-D CNN | 2-D CNN | 3-D CNN |
|---|---|---|---|---|---|---|---|---|---|
| Slate | 69.43 | 93.59 | 86.26 | 82.91 | 84.02 | 83.90 | 85.27 | 93.00 | 93.97 |
| Syenogranite | 87.83 | 84.91 | 64.61 | 90.57 | 90.67 | 87.59 | 89.36 | 94.72 | 95.55 |
| Diorite | 52.48 | 50.82 | 75.53 | 89.96 | 90.74 | 88.91 | 90.10 | 98.40 | 98.32 |
| Quaternary sediment | 75.60 | 48.16 | 84.89 | 65.33 | 70.48 | 64.67 | 65.11 | 89.02 | 89.12 |
| OA (%) | 75.87 | 72.12 | 73.42 | 84.68 | 86.01 | 81.27 | 84.38 | 94.18 | 94.70 |
| AA (%) | 71.34 | 69.37 | 77.82 | 82.19 | 83.98 | 80.48 | 82.46 | 93.78 | 94.24 |
| Kappa | 0.6393 | 0.5869 | 0.6314 | 0.7707 | 0.7915 | 0.7757 | 0.7673 | 0.9142 | 0.9217 |
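The OA, AA, and Kappa rows in the table above are summary statistics of a confusion matrix. The sketch below shows one common way to compute them, assuming the standard definitions: overall accuracy as the fraction of correctly labelled pixels, average accuracy as the unweighted mean of per-class accuracies, and Cohen's kappa as agreement corrected for chance. The function name `accuracy_metrics` and the 3-class confusion matrix are hypothetical and are not taken from the paper. The final lines check the AA definition against the SAM column of the table, whose four per-class values average to the reported 71.34.

```python
def accuracy_metrics(cm):
    """Return (OA, AA, kappa) for a square confusion matrix.

    Assumed layout: rows are reference classes, columns are predicted classes.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]

    # Overall accuracy: correctly labelled samples over all samples.
    oa = sum(cm[i][i] for i in range(n)) / total
    # Average accuracy: unweighted mean of per-class accuracies.
    aa = sum(cm[i][i] / row_sums[i] for i in range(n)) / n
    # Expected chance agreement from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, aa, kappa


# Hypothetical 3-class confusion matrix (illustrative only, not from the paper).
cm = [
    [50, 2, 3],
    [4, 40, 6],
    [1, 5, 39],
]
oa, aa, kappa = accuracy_metrics(cm)
print(f"OA={oa:.4f}  AA={aa:.4f}  Kappa={kappa:.4f}")

# Per-class accuracies (%) of the SAM column in the table above:
# slate, syenogranite, diorite, Quaternary sediment.
sam_per_class = [69.43, 87.83, 52.48, 75.60]
sam_aa = sum(sam_per_class) / len(sam_per_class)
print(f"SAM AA = {sam_aa:.3f}")
```

Because AA weights every class equally while OA weights every pixel equally, a method can score well on OA and poorly on AA when it misclassifies a small class, which is why the tables report both.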

| Lithology | SAM | SID | FCLSU | SVM | RF | NN | 1-D CNN | 2-D CNN | 3-D CNN |
|---|---|---|---|---|---|---|---|---|---|
| Slate | 87.50 | 97.60 | 94.03 | 96.59 | 96.77 | 95.52 | 96.59 | 98.22 | 98.29 |
| Diorite | 69.66 | 60.09 | 70.79 | 53.07 | 59.37 | 52.48 | 56.24 | 82.97 | 83.97 |
| Quaternary sediment | 75.47 | 57.42 | 60.00 | 59.70 | 64.58 | 59.32 | 57.24 | 87.32 | 88.94 |
| Sericite phyllite | 68.92 | 36.52 | 76.52 | 87.41 | 87.80 | 84.71 | 87.44 | 97.73 | 98.02 |
| OA (%) | 79.06 | 70.54 | 82.45 | 86.93 | 88.03 | 85.39 | 86.61 | 96.07 | 96.47 |
| AA (%) | 75.39 | 62.91 | 75.34 | 74.19 | 77.13 | 73.01 | 74.38 | 91.56 | 93.11 |
| Kappa | 0.6794 | 0.5237 | 0.7228 | 0.7879 | 0.8063 | 0.7641 | 0.7825 | 0.9367 | 0.9432 |

| Lithology | SAM | SID | FCLSU | SVM | RF | NN | 1-D CNN | 2-D CNN | 3-D CNN |
|---|---|---|---|---|---|---|---|---|---|
| Slate | 85.46 | 84.32 | 84.76 | 94.39 | 94.89 | 91.76 | 93.72 | 97.04 | 96.18 |
| Syenogranite | 31.63 | 63.27 | 25.88 | 91.45 | 91.03 | 91.32 | 91.19 | 97.46 | 97.49 |
| Diorite | 93.71 | 96.55 | 87.77 | 99.13 | 99.32 | 99.26 | 98.93 | 99.53 | 99.61 |
| Quaternary sediment | 85.60 | 22.28 | 85.06 | 59.57 | 61.91 | 60.60 | 64.36 | 87.55 | 89.36 |
| OA (%) | 86.60 | 86.89 | 81.68 | 96.22 | 96.49 | 96.01 | 96.16 | 98.53 | 98.56 |
| AA (%) | 74.10 | 66.61 | 70.86 | 86.13 | 86.79 | 85.73 | 87.05 | 95.39 | 95.66 |
| Kappa | 0.7107 | 0.7549 | 0.6285 | 0.9117 | 0.9177 | 0.9062 | 0.9105 | 0.9659 | 0.9664 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, H.; Wu, K.; Xu, H.; Xu, Y. Lithology Classification Using TASI Thermal Infrared Hyperspectral Data with Convolutional Neural Networks. Remote Sens. 2021, 13, 3117. https://doi.org/10.3390/rs13163117