Daytime Cloud Detection Algorithm Based on a Multitemporal Dataset for GK-2A Imagery

Abstract

1. Introduction

2. Materials

2.1. GK-2A

2.2. Comparison Dataset

GK-2A Cloud Mask

2.3. Validation Dataset

2.3.1. Suomi-NPP

2.3.2. CALIPSO

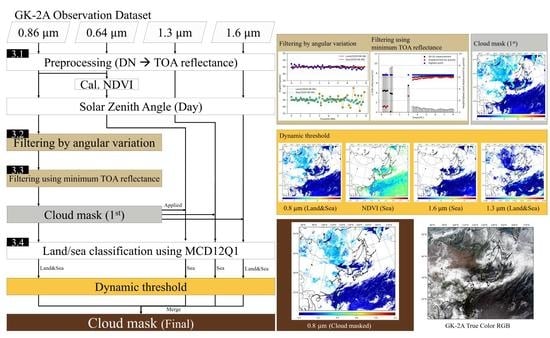

3. Methodology

- Preprocessing;

- Filtering by angular variation;

- Filtering using minimum TOA reflectance;

- Dynamic threshold.

3.1. Preprocessing

3.2. Filtering Technique by Angular Variation

3.3. Filtering Technique Using Minimum TOA Reflectance

3.4. Dynamic Threshold

3.4.1. NDVI
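For orientation, the standard definition of the normalized difference vegetation index is NDVI = (NIR − Red) / (NIR + Red). The sketch below is only an illustration of that textbook formula, not the authors' implementation: the function name `ndvi` is hypothetical, and pairing the red input with the 0.64 μm band and the near-infrared input with the 0.86 μm band is an assumption based on the band list given elsewhere in this article.

```python
import numpy as np


def ndvi(red, nir):
    """Textbook NDVI from red and near-infrared reflectance arrays.

    Assumption: `red` and `nir` are TOA reflectances on the same grid
    (for example the 0.64 um and 0.86 um bands). Pixels whose two
    reflectances sum to zero are returned as NaN instead of raising.
    """
    red = np.asarray(red, dtype=float)
    nir = np.asarray(nir, dtype=float)
    denom = nir + red
    out = np.full(denom.shape, np.nan)
    # Divide only where the denominator is non-zero; other pixels stay NaN.
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out
```

How the index is thresholded, and how that threshold varies per pixel or per scene, is not recoverable from this outline, so the sketch stops at the index itself.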

3.4.2. Near-Infrared

4. Results

4.1. Qualitative Comparison

4.2. Validation with the VIIRS Cloud Product

4.3. Validation with the CALIPSO Cloud Product

5. Discussions

6. Summary and Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band | 0.64 μm | 0.86 μm | 1.38 μm | 1.6 μm |
|---|---|---|---|---|
| | 1638.95 | 977.48 | 360.87 | 246.16 |

| Validation data | Month | Cloud mask | Precision | Recall | Accuracy | FPR | F1 score |
|---|---|---|---|---|---|---|---|
| VIIRS with Confident Clouds | June | GK-2A (Multitemporal) | 0.905 | 0.981 | 0.912 | 0.249 | 0.939 |
| VIIRS with Confident Clouds | June | GK-2A (NMSC) | 0.758 | 0.731 | 0.631 | 0.644 | 0.744 |
| VIIRS with Confident Clouds | October | GK-2A (Multitemporal) | 0.832 | 0.982 | 0.856 | 0.395 | 0.901 |
| VIIRS with Confident Clouds | October | GK-2A (NMSC) | 0.622 | 0.741 | 0.520 | 0.945 | 0.677 |
| VIIRS with Confident and Probably Clouds | June | GK-2A (Multitemporal) | 0.911 | 0.977 | 0.912 | 0.281 | 0.943 |
| VIIRS with Confident and Probably Clouds | June | GK-2A (NMSC) | 0.807 | 0.913 | 0.772 | 0.645 | 0.857 |
| VIIRS with Confident and Probably Clouds | October | GK-2A (Multitemporal) | 0.842 | 0.972 | 0.856 | 0.395 | 0.902 |
| VIIRS with Confident and Probably Clouds | October | GK-2A (NMSC) | 0.712 | 0.911 | 0.695 | 0.740 | 0.799 |
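The validation tables report precision, recall, accuracy, false positive rate (FPR), and F1 score. As a minimal sketch, assuming these follow the usual confusion-matrix definitions with "cloud" as the positive class (the article's exact formulas are not shown in this excerpt), they can be computed as below. The names `Confusion` and `cloud_mask_scores` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Pixel counts with 'cloud' as the positive class (assumed convention)."""
    tp: int  # cloud in the evaluated mask and in the reference
    fp: int  # cloud in the evaluated mask, clear in the reference
    fn: int  # clear in the evaluated mask, cloud in the reference
    tn: int  # clear in both


def _ratio(num, den):
    # Return NaN instead of raising when a class is absent from the sample.
    return num / den if den else float("nan")


def cloud_mask_scores(c):
    """Standard binary-classification scores from a confusion matrix."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn)
    fpr = _ratio(c.fp, c.fp + c.tn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    return {
        "precision": precision,
        "recall": recall,
        "accuracy": accuracy,
        "fpr": fpr,
        "f1": f1,
    }
```

For example, `cloud_mask_scores(Confusion(tp=90, fp=10, fn=5, tn=45))` evaluates a hypothetical 150-pixel sample. Because FPR is normalized by the number of clear reference pixels only, it can be large even when accuracy is high if clear pixels are a small share of the scene.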

| Validation data | Month | Cloud mask | Number | Precision | Recall | Accuracy | FPR | F1 score |
|---|---|---|---|---|---|---|---|---|
| CALIPSO Cloud | June | GK-2A (Multitemporal) | 199,807 | 0.891 | 0.982 | 0.902 | 0.284 | 0.934 |
| CALIPSO Cloud | June | GK-2A (NMSC) | 210,415 | 0.784 | 0.935 | 0.775 | 0.601 | 0.853 |
| CALIPSO Cloud | October | GK-2A (Multitemporal) | 190,382 | 0.911 | 0.989 | 0.930 | 0.184 | 0.949 |
| CALIPSO Cloud | October | GK-2A (NMSC) | 191,877 | 0.687 | 0.933 | 0.691 | 0.713 | 0.791 |

| Cloud mask | Case | Precision | Recall | Accuracy | FPR | F1 score |
|---|---|---|---|---|---|---|
| GK-2A (Multitemporal) | 1 (Overall Steps) | 0.911 | 0.977 | 0.912 | 0.281 | 0.943 |
| GK-2A (Multitemporal) | 2 (Using only filtering techniques) | 0.881 | 0.949 | 0.866 | 0.379 | 0.914 |
| GK-2A (Multitemporal) | 3 (Using only the dynamic threshold) | 0.711 | 0.652 | 0.542 | 0.783 | 0.681 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, S.; Choi, J. Daytime Cloud Detection Algorithm Based on a Multitemporal Dataset for GK-2A Imagery. Remote Sens. 2021, 13, 3215. https://doi.org/10.3390/rs13163215
