Deep Neural Networks to Detect Weeds from Crops in Agricultural Environments in Real-Time: A Review

Abstract

1. Introduction

1.1. Motivation

1.2. Research Methodology and Criteria for Comparison

- Elsevier;

- Taylor & Francis;

- Springer;

- Wiley;

- IEEE;

- Informa;

- MDPI;

- Hindawi.

- Google Scholar (http://www.scholar.google.com, accessed on 5 November 2021);

- ResearchGate (https://www.researchgate.net/, accessed on 5 November 2021);

- Academia (https://www.academia.edu/, accessed on 5 November 2021);

- Publons (https://publons.com/, accessed on 5 November 2021).

- The DL model/architecture used, because this directly affects the hardware requirements, as shown by Pourghassemi et al. [15]. In particular, the review considered whether the neural networks could run on families of single-board computers.

- The number of images (i.e., dataset size) used for training the neural network.

- The types of platforms used to collect the images, and whether these platforms were mobile.

- The time of day at which the images were captured.

- The training and inference time, overall speed of the DL model, and memory requirements. We examined whether authors used low-cost tools for training models, such as Google Colab (https://colab.research.google.com/, accessed on 5 November 2021). Reliance on expensive GPUs or GPU farms significantly complicates verification of the results presented by the authors.

- The availability of open-source code for the DL model and dataset used for training/testing. The type of license was not considered.

- The dataset type and quality.

- The camera used, its distance from the points of interest (i.e., weeds), and the number/volume of weeds captured in the images. The dimensions of the camera, and whether it was installed on a vehicle, were also considered.
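
The timing and memory criterion above can be made concrete with a small measurement harness. The following is a minimal sketch, not the procedure used in any of the reviewed studies: `benchmark` and `dummy_model` are hypothetical names, the stand-in model only sums pixel values, and `tracemalloc` tracks Python heap allocations only, so GPU memory would have to be measured with a framework-specific tool.

```python
import time
import tracemalloc
from statistics import mean


def benchmark(infer, inputs, warmup=2):
    """Time infer(x) for every x in inputs.

    Returns mean latency in seconds, frames per second, and the peak
    Python heap usage in bytes observed during the timed calls.
    """
    for x in inputs[:warmup]:
        infer(x)  # warm-up calls are not timed

    tracemalloc.start()
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        latencies.append(time.perf_counter() - start)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    mean_latency = mean(latencies)
    fps = 1.0 / mean_latency if mean_latency > 0 else float("inf")
    return {"mean_latency_s": mean_latency, "fps": fps, "peak_bytes": peak}


def dummy_model(image):
    # Hypothetical stand-in for a real detector: sums all pixel values.
    return sum(sum(row) for row in image)


if __name__ == "__main__":
    # Twenty synthetic 64x64 single-channel "frames".
    frames = [[[1] * 64 for _ in range(64)] for _ in range(20)]
    print(benchmark(dummy_model, frames))
```

Replacing `dummy_model` with a call into a trained network would give figures comparable across studies only if the same input resolution and hardware are reported alongside them.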

1.3. Contribution and Previous Reviews

2. A Brief Overview of DL

2.1. The History: Birth, Decline and Prosperity

2.2. Architecture and Advantages of CNN

2.3. DL and CNN in Generic Object Detection in Agriculture

3. Datasets and Image Pre-Processing

3.1. Datasets for Training Neural Networks

3.2. Image Preprocessing

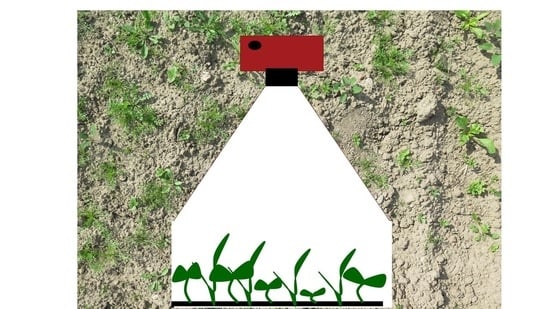

3.3. Available Weed Detection Systems

4. DL for Weed Detection

4.1. The Curse of Dense Scenes in Agricultural Fields

4.2. State-of-the-Art Methods in Weed Detection

5. Technical Aspects

5.1. Models and Architectures

5.2. Future Directions

5.3. Detection of Small Objects

5.4. Complexity vs. Processing Capacity

- DeepStream SDK, software that allows the use of multiple neural networks to process each video stream, making it possible to apply different DL techniques;

- The AWS IoT Greengrass platform, which extends AWS services to edge devices, enabling them to work locally with data;

- The RAPIDS suite of software libraries, built on CUDA-X AI, which makes it possible to run end-to-end data processing and analytics pipelines entirely on GPUs;

- Google Colab, a hosted service similar to Jupyter Notebook that has long offered free access to GPU instances. Colab GPUs have been updated to NVIDIA T4 GPUs, which support additional software packages and allow experimentation with RAPIDS in Colab;

- NVIDIA TensorRT, an SDK for high-performance DL inference. It includes a DL inference optimizer and a runtime that provide low latency and high throughput for DL inference applications. TensorRT is a very promising direction for single-board computers: 39 FPS can be obtained on a Jetson Nano by using tkDNN + TensorRT [150]. tkDNN is a deep neural network library built with cuDNN and TensorRT primitives and designed specifically to run on NVIDIA Jetson boards. This approach requires converting Darknet weights to TensorRT weights using the TensorFlow version of YOLOv4 (https://github.com/hunglc007/tensorflow-yolov4-tflite#convert-to-tensorrt, accessed on 5 November 2021) or the PyTorch version of YOLOv4 (https://github.com/Tianxiaomo/pytorch-YOLOv4#5-onnx2tensorrt, accessed on 5 November 2021).
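
To put the 39 FPS figure in perspective, a frame rate translates into a per-frame time budget of 1000/FPS milliseconds, and the whole pipeline (capture, inference, post-processing) has to fit inside it. The sketch below illustrates this arithmetic only; the function names, stage names, and latencies are hypothetical values chosen for illustration, not measurements from [150].

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def meets_real_time(stage_latencies_ms, target_fps):
    """Return True if the summed stage latencies fit in one frame budget."""
    total_ms = sum(stage_latencies_ms.values())
    return total_ms <= frame_budget_ms(target_fps)


if __name__ == "__main__":
    # Hypothetical per-stage latencies for a single-board computer.
    stages = {"capture": 5.0, "inference": 18.0, "postprocess": 2.0}

    print(round(frame_budget_ms(39), 2))   # 25.64 ms per frame at 39 FPS
    print(meets_real_time(stages, 39))     # True: 25.0 ms fits in 25.64 ms
    print(meets_real_time(stages, 60))     # False: 25.0 ms exceeds 16.67 ms
```

The check assumes the stages run sequentially; a pipelined implementation that overlaps capture with inference would be bounded by the slowest stage rather than by the sum.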

5.5. Limitations

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNNs | Convolutional Neural Networks |
| CV | Computer Vision |
| DCNNs | Deep Convolutional Neural Networks |
| DL | Deep Learning |
| ML | Machine Learning |

Appendix A

Appendix B

| No. | Place | Detection Task | Camera | Accuracy, % | Weed Position Used for | Dataset | Neural Network | Disadvantage | Weed Type | Grown Crop | References |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | In greenhouse by automation line | For an approximate determination of the position | Kinect v2 sensor | 66 | Robotic intra-row weed control | Available | AdaBoost | Low accuracy | All that are not broccoli | Broccoli | [50] |
| 2 | By unmanned aerial vehicle in field | To create weed maps | RGB camera | 89 | Weed control using agricultural vehicle | Not available | Automatic object-based classification method | Complexity of customization | All that are not corn | Corn | [151] |
| 3 | | For precise positioning of the weed | GoPro Hero3 Silver Edition | 87.69 | Herbicide use | Not available | Random Forest classifier | Low accuracy | All that are not sugarcane | Sugarcane | [152] |
| | | Field weed density evaluation | RGB cameras | 93.40 | For statistics | Not available | U-net | No way to control weeds | All that are not corn | Corn | [153] |
| 4 | Autonomous robot on the field | To classify tasks | RGB camera | 92.5 | Robotic weed control | Not available | SVM was used as the classifier | Low recognition speed | Bindweed and bristles (field bindweed and annual bindweed) | Sugar beet fields were studied. | [154] |
| 5 | | To determine the approximate position | Canon EOS 60D | 60 | Robotic weed control | Available | R-CNN | Detection problem with small weed | | | [155] |
| 6 | | To determine the exact position | RGB camera | 82.13 | Mechanical weed control | Available | ResNet50 | Slowness | All that are not sugar beet | Sugar beet | [156] |
| 7 | Vehicle | To determine the approximate position | Sony IMX220 | 90.3 | Herbicide application | Not available | AFCP algorithm | Harms both weeds and corn | All that are not corn | Corn | [157] |

References

- FAO. NSP-Weeds. Available online: http://www.fao.org/agriculture/crops/thematic-sitemap/theme/biodiversity/weeds/en/ (accessed on 18 August 2021).

- Kudsk, P.; Streibig, J.S. Herbicides – a two-edged sword. Weed Res. 2003, 43, 90–102.

- Harrison, J.L. Pesticide Drift and the Pursuit of Environmental Justice; MIT Press: Cambridge, MA, USA; London, UK, 2011; Available online: https://www.jstor.org/stable/j.ctt5hhd79 (accessed on 5 November 2021).

- Lemtiri, A.; Colinet, G.; Alabi, T.; Cluzeau, D.; Zirbes, L.; Haubruge, E.; Francis, F. Impacts of earthworms on soil components and dynamics. A review. Biotechnol. Agron. Soc. Environ. 2014, 18, 121–133. Available online: https://popups.uliege.be/1780-4507/index.php?id=16826&file=1&pid=10881 (accessed on 18 August 2021).

- Pannacci, E.; Farneselli, M.; Guiducci, M.; Tei, F. Mechanical weed control in onion seed production. Crop Prot. 2020, 135, 105221.

- Rehman, T.; Qamar, U.; Zaman, Q.Z.; Chang, Y.K.; Schumann, A.W.; Corscadden, K.W. Development and field evaluation of a machine vision based in-season weed detection system for wild blueberry. Comput. Electron. Agric. 2019, 162, 1–3.

- Rakhmatulin, I.; Andreasen, C. A concept of a compact and inexpensive device for controlling weeds with laser beams. Agronomy 2020, 10, 1616.

- Raj, R.; Rajiv, P.; Kumar, P.; Khari, M. Feature based video stabilization based on boosted HAAR Cascade and representative point matching algorithm. Image Vis. Comput. 2020, 101, 103957.

- Kaur, J.; Sinha, P.; Shukla, R.; Tiwari, V. Automatic Cataract Detection Using Haar Cascade Classifier. In Data Intelligence Cognitive Informatics; Springer: Singapore, 2021.

- Abouzahir, A.; Sadik, M.; Sabir, E. Bag-of-visual-words-augmented Histogram of Oriented Gradients for efficient weed detection. Biosyst. Eng. 2021, 202, 179–194.

- Che’Ya, N.; Dunwoody, E.; Gupta, M. Assessment of Weed Classification Using Hyperspectral Reflectance and Optimal Multispectral UAV Imagery. Agronomy 2021, 11, 1435.

- De Rainville, F.M.; Durand, A.; Fortin, F.A.; Tanguy, K.; Maldague, X.; Panneton, B.; Simard, M.J. Bayesian classification and unsupervised learning for isolating weeds in row crops. Pattern Anal. Applic. 2014, 17, 401–414.

- Islam, N.; Rashid, M.; Wibowo, S.; Xu, C.Y.; Morshed, A.; Wasimi, S.A.; Moore, S.; Rahman, S.M. Early Weed Detection Using Image Processing and Machine Learning Techniques in an Australian Chilli Farm. Agriculture 2021, 11, 387.

- Hung, C.; Xu, Z.; Sukkarieh, S. Feature Learning Based Approach for Weed Classification Using High Resolution Aerial Images from a Digital Camera Mounted on a UAV. Remote Sens. 2014, 6, 12037–12054.

- Pourghassemi, B.; Zhang, C.; Lee, J. On the Limits of Parallelizing Convolutional Neural Networks on GPUs. In Proceedings of the SPAA ’20: 32nd ACM Symposium on Parallelism in Algorithms and Architectures, Virtual Event, USA, 15–17 July 2020.

- Kulkarni, A.; Deshmukh, G. Advanced Agriculture Robotic Weed Control System. Int. J. Adv. Res. Electr. Electron. Instrum. Eng. 2013, 2, 10. Available online: https://www.ijareeie.com/upload/2013/october/43Advanced.pdf (accessed on 2 September 2021).

- Wang, N.; Zhang, E.; Dowell, Y.; Sun, D. Design of an optical weed sensor using plant spectral characteristic. Am. Soc. Agric. Biol. Eng. 2001, 44, 409–419.

- Gikunda, P.; Jouandeau, N. Modern CNNs for IoT Based Farms. arXiv 2019, arXiv:1907.07772v1.

- Jouandeau, N.; Gikunda, P. State-Of-The-Art Convolutional Neural Networks for Smart Farms: A Review. Science and Information (SAI) Conference, London, UK, July 2017. Available online: https://hal.archives-ouvertes.fr/hal-02317323 (accessed on 16 August 2021).

- Saleem, M.; Potgieter, J.; Arif, K. Automation in Agriculture by Machine and Deep Learning Techniques: A Review of Recent Developments. Precis. Agric. 2021, 22, 2053–2091.

- Kamilaris, A.; Prenafeta-Boldú, F. A review of the use of convolutional neural networks in agriculture. J. Agric. Sci. 2018, 156, 312–322.

- Jiang, B.; He, J.; Yang, S.; Fu, H.; Li, H. Fusion of machine vision technology and AlexNet-CNNs deep learning network for the detection of postharvest apple pesticide residues. Artif. Intell. Agric. 2019, 1, 1–8.

- Liu, H.; Lee, S.; Saunders, C. Development of a machine vision system for weed detection during both of off-season. Amer. J. Agric. Biol. Sci. 2014, 9, 174–193.

- Watchareeruetai, U.; Takeuchi, Y.; Matsumoto, T.; Kudo, H.; Ohnishi, N. Computer Vision Based Methods for Detecting Weeds in Lawns. Mach. Vis. Applic. 2006, 17, 287–296.

- Padmapriya, S.; Bhuvaneshwari, P. Real time Identification of Crops, Weeds, Diseases, Pest Damage and Nutrient Deficiency. Internat. J. Adv. Res. Educ. Technol. 2018, 5, 1. Available online: http://ijaret.com/wp-content/themes/felicity/issues/vol5issue1/bhuvneshwari.pdf (accessed on 17 August 2021).

- Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J.; et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019, 9, 118–124.

- Downey, D.; Slaughter, K.; David, C. Weeds accurately mapped using DGPS and ground-based vision identification. Calif. Agric. 2004, 58, 218–221. Available online: https://escholarship.org/uc/item/9136d0d2 (accessed on 16 August 2021).

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551. Available online: http://yann.lecun.com/exdb/publis/pdf/lecun-89e.pdf (accessed on 16 August 2021).

- Wen, X.; Jing, H.; Yanfeng, S.; Hui, Z. Advances in Convolutional Neural Networks. In Advances in Deep Learning; Aceves-Fernández, M.A., Ed.; IntechOpen: London, UK, 2020.

- Gothai, P.; Natesan, S. Weed Identification using Convolutional Neural Network and Convolutional Neural Network Architectures. In Proceedings of the 2020 Fourth International Conference on Computing Methodologies and Communication (ICCMC), Surya Engineering College, Erode, India, 1–13 March 2020.

- Su, W.-H. Crop plant signalling for real-time plant identification in smart farm: A systematic review and new concept in artificial intelligence for automated weed control. Artif. Intell. Agric. 2020, 4, 262–271.

- Li, Y.; Nie, J.; Chao, X. Do we really need deep CNN for plant diseases identification? Comput. Electron. Agric. 2020, 178, 105803.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep Learning vs. Traditional Computer Vision. In Advances in Computer Vision. CVC 2019. Advances in Intelligent Systems and Computing; Arai, K., Kapoor, S., Eds.; Springer: Cham, Switzerland, 2020; Volume 943.

- Wang, A.; Zhang, W.; Wei, X. A review on weed detection using ground based machine vision and image processing techniques. Comput. Electron. Agric. 2019, 158, 226–240.

- Dhillon, A.; Verma, G. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

- Ren, Y.; Cheng, X. Review of convolutional neural network optimization and training in image processing. In Tenth International Symposium on Precision Engineering Measurements and Instrumentation 2018; SPIE Digital Library: Kunming, China, 2019.

- Gorach, T. Deep convolution neural networks—A review. Intern. Res. J. Eng. Technol. 2018, 5, 439–452. Available online: https://d1wqtxts1xzle7.cloudfront.net/57208511/IRJET-V5I777.pdf?1534583803=&response-content-disposition=inline%3B+filename%3DIRJET_DEEP_CONVOLUTIONAL_NEURAL_NETWORKS.pdf&Expires=1629185322&Signature=LdFCNz-FOJU2UC1DaF38SfcA0IRYByc51~4cv6e8DC3JD4Z6L936t9GoLuQE5Bg-9v9HSnvf6ObIT64xIes0IGtb-QPR-qPPm9LKLdc1xKRdQ8jq8fKvIwKhQtdTYumrXL5aijjHSTO1Rcu8Gs2pta~zkiC1~zfONjYrOWDhSsj5O9CKGKLW2z7j1tER5QyqkYWrgycIWyytROREB5moD~7i3WNYJjnbxr7QrOSUVTVJ6YQ2hR35cmcKQLf45RhTgm5SaP3VzqK27kz9m3HCNSBqhNL5hbZgJBi5vODpyl2qinuZL1vhwJey9ouDlz6ajTQIe53cvNTbLxXGiFOqDA__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA (accessed on 17 August 2021).

- Naranjo-Torres, J.; Mora, M.; Hernández-García, R.; Barrientos, R. Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020, 10, 3443.

- Jiao, J.; Zhao, M.; Lin, J.; Liang, K. A comprehensive review on convolutional neural network in machine fault diagnosis. Neurocomputing 2020, 417, 36–63.

- He, T.; Kong, R.; Holmes, A.; Nguyen, M.; Sabuncu, M.R.; Eickhoff, S.B.; Bzdok, D.; Feng, J.; Yeo, B.T.T. Deep neural networks and kernel regression achieve comparable accuracies for functional connectivity prediction of behaviour and demographics. NeuroImage 2020, 206, 116276.

- Ma, X.; Kittikunakorn, N.; Sorman, B.; Xi, H.; Chen, A.; Marsh, M.; Mongeau, A.; Piché, N.; Williams, R.O.; Skomski, D. Application of Deep Learning Convolutional Neural Networks for Internal Tablet Defect Detection: High Accuracy, Throughput, and Adaptability. J. Pharm. Sci. 2020, 109, 1547–1557.

- Aydoğan, M.; Karci, A. Improving the accuracy using pre-trained word embeddings on deep neural networks for Turkish text classification. Phys. A Stat. Mech. Its Appl. 2020, 541, 123288.

- Agarwal, M.; Gupta, S.; Biswas, K. Development of Efficient CNN model for Tomato crop disease identification. Sustain. Comput. Inform. Syst. 2020, 28, 100407.

- Boulent, J.; Foucher, S.; Théau, J.; Charles, P. Convolutional Neural Networks for the Automatic Identification of Plant Diseases. Front. Plant Sci. 2019, 10, 941.

- Jiang, Y.; Li, C. Convolutional Neural Networks for Image-Based High Throughput Plant Phenotyping: A Review. Plant Phenomics 2020, 2020, 4152816.

- Noon, S.; Amjad, M.; Qureshi, M.; Mannan, A. Use of deep learning techniques for identification of plant leaf stresses: A review. Sustain. Comput. Inform. Syst. 2020, 28, 100443.

- Mishra, S.; Sachan, R.; Rajpal, D. Deep Convolutional Neural Network based Detection System for Real-time Corn Plant Disease Recognition. Procedia Comput. Sci. 2020, 167, 2003–2010.

- Badhan, S.K.; Dsilva, D.M.; Sonkusare, R.; Weakey, S. Real-Time Weed Detection using Machine Learning and Stereo-Vision. In Proceedings of the 2021 6th International Conference for Convergence in Technology (I2CT), Pune, India, 2–4 April 2021; pp. 1–5.

- Gai, J. Plants Detection, Localization and Discrimination using 3D Machine Vision for Robotic Intra-row Weed Control. Graduate Theses and Dissertations, Iowa State University, Ames, IA, USA, 2016.

- Gottardi, M. A CMOS/CCD image sensor for 2D real time motion estimation. Sens. Actuators A Phys. 1995, 46, 251–256.

- Helmers, H.; Schellenberg, M. CMOS vs. CCD sensors in speckle interferometry. Opt. Laser Technol. 2003, 35, 587–593.

- Silfhout, R.; Kachatkou, A. Fibre-optic coupling to high-resolution CCD and CMOS image sensors. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrom. Detect. Assoc. Equip. 2008, 597, 266–269.

- Krishna, B.; Rekulapellim, N.; Kauda, B.P. Comparison of different deep learning frameworks. Mater. Today Proc. 2020, in press.

- Trung, W.; Maleki, F.; Romero, F.; Forghani, R.; Kadoury, S. Overview of Machine Learning: Part 2: Deep Learning for Medical Image Analysis. Neuroimaging Clin. N. Am. 2020, 30, 417–431.

- Wang, P.; Fan, E.; Wang, P. Comparative analysis of image classification algorithms based on traditional machine learning and deep learning. Pattern Recognit. Lett. 2021, 141, 61–67.

- Bui, D.; Tsangaratos, P.; Nguyen, V.; Liem, N.; Trinh, P. Comparing the prediction performance of a Deep Learning Neural Network model with conventional machine learning models in landslide susceptibility assessment. CATENA 2020, 188, 104426.

- Kamilaris, A.; Brik, C.; Karatsiolis, S. Training Deep Learning Models via Synthetic Data: Application in Unmanned Aerial Vehicles. In Proceedings of the CAIP 2019, the Workshop on Deep-Learning Based Computer Vision for UAV, Salerno, Italy, 6 September 2019.

- Barth, R.; IJsselmuiden, J.; Hemming, J.; Van Henten, E.J. Data synthesis methods for semantic segmentation in agriculture: A Capsicum annuum dataset. Comput. Electron. Agric. 2018, 144, 284–296.

- Zichao, J. A Novel Crop Weed Recognition Method Based on Transfer Learning from VGG16 Implemented by Keras. IOP Conf. Ser. Mater. Sci. Eng. 2019, 677, 032073.

- Chen, D.; Lu, Y.; Yong, S. Performance Evaluation of Deep Transfer Learning on Multiclass Identification of Common Weed Species in Cotton Production Systems. arXiv 2021, arXiv:2110.04960v1.

- Espejo-Garcia, B.; Mylonas, N.; Athanasakos, L.; Fountas, S.; Vasilakoglou, I. Towards weeds identification assistance through transfer learning. Comput. Electron. Agric. 2020, 171, 105306.

- Al-Qurran, R.; Al-Ayyoub, M.; Shatnawi, A. Plant Classification in the Wild: A Transfer Learning Approach. In Proceedings of the 2018 International Arab Conference on Information Technology (ACIT), Werdanye, Lebanon, 28–30 November 2018; pp. 1–5.

- Pajares, G.; Garcia-Santillam, I.; Campos, Y.; Montalo, M. Machine-vision systems selection for agricultural vehicles: A guide. J. Imaging 2016, 2, 34.

- Shorten, C.; Khoshgoftaar, T. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Zheng, Y.; Kong, J.; Jin, X.; Wang, X. CropDeep: The Crop Vision Dataset for Deep-Learning-Based Classification and Detection in Precision Agriculture. Sensors 2019, 19, 1058.

- Sudars, K.; Jasko, J.; Namatevsa, I.; Ozola, L.; Badaukis, N. Dataset of annotated food crops and weed images for robotic computer vision control. Data Brief 2020, 31, 105833.

- Cap, Q.H.; Tani, H.; Uga, H.; Kagiwada, S.; Iyatomi, H. LASSR: Effective Super-Resolution Method for Plant Disease Diagnosis. arXiv 2020, arXiv:2010.06499.

- Zhu, J.; Park, T.; Isola, P.; Efros, A. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. arXiv 2020, arXiv:1703.10593.

- Huang, Z.; Ke, W.; Huang, D. Improving Object Detection with Inverted Attention. In Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Snowmass, CO, USA, 1–5 March 2020.

- He, C.; Lai, S.; Lam, K. Object Detection with Relation Graph Inference. In Proceedings of the ICASSP 2019–2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019.

- Champ, J.; Mora-Fallas, A.; Goëau, H.; Mata-Montero, E.; Bonnet, P.; Joly, A. Instance segmentation for the fine detection of crop and weed plants by precision agricultural robots. Appl. Plant Sci. 2020, 8, e11373.

- Lameski, P.; Zdravevski, E.; Trajkovik, V.; Kulakov, A. Weed Detection Dataset with RGB Images Taken Under Variable Light Conditions. In ICT Innovations 2017. Communications in Computer and Information Science; Trajanov, D., Bakeva, V., Eds.; Springer: Cham, Switzerland, 2017; Volume 778.

- Giselsson, T.M.; Jørgensen, R.N.; Jensen, P.K.; Dyrmann, M.; Midtiby, H.S. A Public Image Database for Benchmark of Plant Seedling Classification Algorithms. arXiv 2017, arXiv:1711.05458.

- Cicco, M.; Potena, C.; Grisetti, G.; Pretto, A. Automatic Model Based Dataset Generation for Fast and Accurate Crop and Weeds Detection. arXiv 2016, arXiv:1612.03019.

- Lu, Y.; Young, S. A survey of public datasets for computer vision tasks in precision agriculture. Comput. Electron. Agric. 2020, 178, 105760.

- Faisal, F.; Hossain, B.; Emam, H. Performance Analysis of Support Vector Machine and Bayesian Classifier for Crop and Weed Classification from Digital Images. World Appl. Sci. 2011, 12, 432–440. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.390.9311&rep=rep1&type=pdf (accessed on 18 August 2021).

- Dyrmann, M. Automatic Detection and Classification of Weed Seedlings under Natural Light Conditions. Det Tekniske Fakultet, University of Southern Denmark, 2017. Available online: https://pure.au.dk/portal/files/114969776/MadsDyrmannAfhandlingMedOmslag.pdf (accessed on 18 August 2021).

- Chang, C.; Lin, K. Smart Agricultural Machine with a Computer Vision Based Weeding and Variable-Rate Irrigation Scheme. Robotics 2018, 7, 38.

- Slaughter, D.C.; Giles, D.K.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78.

- Abhisesh, S. Machine Vision System for Robotic Apple Harvesting in Fruiting Wall Orchards. Ph.D. Thesis, Department of Biological Systems Engineering, Washington State University, Pullman, WA, USA, December 2016. Available online: https://research.libraries.wsu.edu/xmlui/handle/2376/12033 (accessed on 18 August 2021).

- Qiu, Q.; Fan, Z.; Meng, Z.; Zhang, Q.; Cong, Y.; Li, B.; Wang, N.; Zhao, C. Extended Ackerman Steering Principle for the coordinated movement control of a four wheel drive agricultural mobile robot. Comput. Electron. Agric. 2018, 152, 40–50.

- Ren, G.; Lin, T.; Ying, Y.; Chowdhary, G.; Ting, K.C. Agricultural robotics research applicable to poultry production: A review. Comput. Electron. Agric. 2020, 169, 105216.

- Asha, R.; Aman, M.; Pankaj, M.; Singh, A. Robotics-automation and sensor based approaches in weed detection and control: A review. Intern. J. Chem. Stud. 2020, 8, 542–550.

- Shinde, A.; Shukla, M. Crop detection by machine vision for weed management. Intern. J. Adv. Eng. Technol. 2014, 7, 818–826. Available online: https://www.academia.edu/38850273/CROP_DETECTION_BY_MACHINE_VISION_FOR_WEED_MANAGEMENT (accessed on 18 August 2021).

- Raja, R.; Nguyen, T.; Vuong, V.L.; Slaughter, D.C.; Fennimore, S.A. RTD-SEPs: Real-time detection of stem emerging points and classification of crop-weed for robotic weed control in producing tomato. Biosyst. Eng. 2020, 195, 152–171.

- Sirikunkitti, S.; Chongcharoen, K.; Yoongsuntia, P.; Ratanavis, A. Progress in a Development of a Laser-Based Weed Control System. In Proceedings of the 2019 Research, Invention, and Innovation Congress (RI2C), Bangkok, Thailand, 11–13 December 2019; pp. 1–4.

- Mathiassen, S.; Bak, T.; Christensen, S.; Kudsk, P. The effect of laser treatment as a weed control method. Biosyst. Eng. 2006, 95, 497–505.

- Xiong, Y.; Ge, Y.; Liang, Y.; Blackmore, S. Development of a prototype robot and fast path-planning algorithm for static laser weeding. Comput. Electron. Agric. 2017, 142, 494–503.

- Marx, C.; Barcikowski, S.; Hustedt, M.; Haferkamp, H.; Rath, T. Design and application of a weed damage model for laser-based weed control. Biosyst. Eng. 2012, 113, 148–157.

- Librán-Embid, F.; Klaus, F.; Tscharntke, T.; Grass, I. Unmanned aerial vehicles for biodiversity-friendly agricultural landscapes – A systematic review. Sci. Total Environ. 2020, 732, 139204.

- Boursianis, A.; Papadopoulou, M.; Diamantoulakis, P.; Liopa-Tsakalidi, A.; Barouchas, P.; Salahas, G.; Karagiannidis, G.; Wan, S.; Goudos, S.K. Internet of Things (IoT) and Agricultural Unmanned Aerial Vehicles (UAVs) in smart farming: A comprehensive review. Internet Things 2020, 7, 100187.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Hunter, J.; Gannon, T.W.; Richardson, R.J.; Yelverton, F.H.; Leon, R.G. Integration of remote-weed mapping and an autonomous spraying unmanned aerial vehicle for site-specific weed management. Pest Manag. Sci. 2020, 76, 1386–1392.

- Cerro, J.; Ulloa, C.; Barrientos, A.; Rivas, J. Unmanned Aerial Vehicles in Agriculture: A Survey. Agronomy 2021, 11, 203.

- Rasmussen, J.; Nielsen, J. A novel approach to estimating the competitive ability of Cirsium arvense in cereals using unmanned aerial vehicle imagery. Weed Res. 2020, 60, 150–160.

- Rijk, L.; Beedie, S. Precision Weed Spraying using a Multirotor UAV. In Proceedings of the 10th International Micro-Air Vehicles Conference, Melbourne, Australia, 30 November 2018.

- Liang, Y.; Yang, Y.; Chao, C. Low-Cost Weed Identification System Using Drones. In Proceedings of the Seventh International Symposium on Computing and Networking Workshops (CANDARW), Nagasaki, Japan, 26–29 November 2019; pp. 260–263.

- Zhang, Q.; Liu, Y.; Gong, C.; Chen, Y.; Yu, H. Applications of deep learning for dense scenes analysis in agriculture: A review. Sensors 2020, 20, 1520.

- Asad, M.; Bais, A. Weed Detection in Canola Fields Using Maximum Likelihood Classification and Deep Convolutional Neural Network. Inform. Process. Agric. 2020, 7, 535–545.

- Shawky, O.; Hagag, A.; Dahshan, E.; Ismail, M. Remote sensing image scene classification using CNN-MLP with data augmentation. Optik 2020, 221, 165356.

- Zhuoyao, Z.; Lei, S.; Qiang, H. Improved localization accuracy by LocNet for Faster R-CNN based text detection in natural scene images. Pattern Recognit. 2019, 96, 106986.

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674.

- Hasan, A.S.M.M.; Sohel, F.; Diepeveen, D.; Laga, H.; Jones, M.G.K. A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 2021, 184, 106067. Available online: https://www.semanticscholar.org/paper/A-Survey-of-Deep-Learning-Techniques-for-Weed-from-Hasan-Sohel/80bfc6bcdf5d231122b7ffee17591c8fc14ce528 (accessed on 5 November 2021). [CrossRef]

- Rehman, T.U.; Mahmud, M.S.; Chang, Y.K.; Jin, J.; Shin, J. Current and future applications of statistical machine learning algorithms for agricultural machine vision systems. Comput. Electron. Agricult. 2019, 156, 585–605. [Google Scholar] [CrossRef]

- Osorio, K.; Puerto, A.; Pedraza, C.; Jamaica, D.; Rodríguez, L. A Deep Learning Approach for Weed Detection in Lettuce Crops Using Multispectral Images. AgriEngineering 2020, 2, 32. [Google Scholar] [CrossRef]

- Ferreira, A.S.; Freitas, D.M.; Gonçalves da Silva, G.; Pistori, H.; Folhes, M.T. Weed detection in soybean crops using ConvNets. Comput. Electron. Agric. 2017, 143, 314–324. [Google Scholar] [CrossRef]

- Santos, L.; Santos, F.N.; Oliveira, P.M.; Shinde, P. Deep Learning Applications in Agriculture: A Short Review. In Robot 2019: Fourth Iberian Robotics Conference. Advances in Intelligent Systems and Computing; Silva, M., Luís Lima, J., Reis, L., Sanfeliu, A., Tardioli, D., Eds.; Springer: Cham, Switzerland, 2019; Volume 1092. [Google Scholar] [CrossRef]

- Dokic, K.; Blaskovic, L.; Mandusic, D. From machine learning to deep learning in agriculture—The quantitative review of trends. IOP Conf. Ser. Earth Environ. Sci. 2020, 614, 012138. Available online: https://iopscience.iop.org/article/10.1088/1755-1315/614/1/012138 (accessed on 18 August 2021). [CrossRef]

- Tian, H.; Wang, T.; Yadong, Y.; Qiao, X.; Li, Y. Computer vision technology in agricultural automation —A review. Inform. Process. Agric. 2020, 7, 1–19. [Google Scholar] [CrossRef]

- Khaki, S.; Pham, H.; Han, Y.; Kuhl, A. Convolutional Neural Networks for Image-Based Corn Kernel Detection and Counting. arXiv 2020, arXiv:2003.12025v2. Available online: https://arxiv.org/pdf/2003.12025.pdf (accessed on 18 August 2021). [CrossRef] [PubMed]

- Yu, J.; Sharpe, S.; Schumann, A.; Boyd, N. Deep learning for image-based weed detection in turfgrass. Eur. J. Agron. 2019, 104, 78–84.

- Yu, J.; Schumann, A.; Cao, Z.; Sharpe, S. Weed detection in perennial ryegrass with deep learning convolutional neural network. Front. Plant Sci. 2019, 10, 1422.

- Gao, J.; French, A.; Pound, M. Deep convolutional neural networks for image based Convolvulus sepium detection in sugar beet fields. Plant Methods 2020, 16, 29.

- Scott, S. Comparison of Object Detection and Patch-Based Classification Deep Learning Models on Mid- to Late-Season Weed Detection in UAV Imagery. Remote Sens. 2020, 12, 2136.

- Narvekar, C.; Rao, M. Flower classification using CNN and transfer learning in CNN-Agriculture Perspective. In Proceedings of the 3rd International Conference on Intelligent Sustainable Systems (ICISS), Thoothukudi, India, 3–5 December 2020; pp. 660–664.

- Sharma, P.; Berwal, Y.; Ghai, W. Performance analysis of deep learning CNN models for disease detection in plants using image segmentation. Inf. Process. Agric. 2020, 7, 566–574.

- Du, X.; Lin, T.; Jin, P. SpineNet: Learning Scale-Permuted Backbone for Recognition and Localization. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 13–19 June 2020.

- Koh, J.; Spangenberg, G.; Kant, S. Automated Machine Learning for High-Throughput Image-Based Plant Phenotyping. Remote Sens. 2021, 13, 858.

- Shah, S.; Wu, W.; Lu, Q. AmoebaNet: An SDN-enabled network service for big data science. J. Netw. Comput. Appl. 2018, 119, 70–82.

- Yao, L.; Xu, H.; Zhang, W. SM-NAS: Structural-to-Modular Neural Architecture Search for Object Detection. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12661–12668.

- Jia, X.; Yang, X.; Yu, X.; Gao, H. A Modified CenterNet for Crack Detection of Sanitary Ceramics. In Proceedings of the IECON 2020—46th Annual Conference of the IEEE Industrial Electronics Society, 18–21 October 2020.

- Zhao, K.; Yan, W.Q. Fruit Detection from Digital Images Using CenterNet. Geom. Vis. 2021, 1386, 313–326.

- Xu, M.; Deng, Z.; Qi, L.; Jiang, Y.; Li, H.; Wang, Y.; Xing, X. Fully convolutional network for rice seedling and weed image segmentation at the seedling stage in paddy fields. PLoS ONE 2019, 14, e0215676.

- Kong, J.; Wang, H.; Wang, X.; Jin, X.; Fang, X.; Lin, S. Multi-stream hybrid architecture based on cross-level fusion strategy for fine-grained crop species recognition in precision agriculture. Comput. Electron. Agric. 2021, 185, 106134.

- Wosner, O. Detection in Agricultural Contexts: Are We Close to Human Level? Computer Vision—ECCV 2020 Workshops. Lect. Notes Comput. Sci. 2020, 12540.

- Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

- Kuznetsova, A.; Maleva, T.; Soloviev, V. Detecting Apples in Orchards Using YOLOv3 and YOLOv5 in General and Close-Up Images; Advances in Neural Networks—ISNN; Springer: Cham, Switzerland, 2020.

- Tian, Y.; Yang, G.; Wang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Wu, D.; Wu, Q.; Yin, X.; Jiang, B.; Wang, H.; He, D.; Song, H. Lameness detection of dairy cows based on the YOLOv3 deep learning algorithm and a relative step size characteristic vector. Biosyst. Eng. 2020, 189, 150–163.

- Waheed, A.; Goyal, M.; Gupta, D.; Khanna, A.; Hassanien, A.E.; Pandey, H.M. An optimized dense convolutional neural network model for disease recognition and classification in corn leaf. Comput. Electron. Agric. 2020, 175, 105456.

- Atila, U.; Uçar, M.; Akyol, K.; Uçar, E. Plant leaf disease classification using EfficientNet deep learning model. Ecol. Inform. 2021, 61, 101182.

- Pang, Y.; Shi, Y.; Gao, S.; Jiang, F.; Veeranampalayam-Sivakumar, A.-N.; Thomson, L.; Luck, J.; Liu, C. Improved crop row detection with deep neural network for early-season maize stand count in UAV imagery. Comput. Electron. Agric. 2020, 178, 105766.

- Liang, F.; Tian, Z.; Dong, M.; Cheng, S.; Sun, L.; Li, H.; Chen, Y.; Zhang, G. Efficient neural network using pointwise convolution kernels with linear phase constraint. Neurocomputing 2021, 423, 572–579.

- Taravat, A.; Wagner, M.P.; Bonifacio, R.; Petit, D. Advanced Fully Convolutional Networks for Agricultural Field Boundary Detection. Remote Sens. 2021, 13, 722.

- Isufi, E.; Pocchiari, M.; Hanjalic, A. Accuracy-diversity trade-off in recommender systems via graph convolutions. Inf. Process. Manag. 2021, 58, 102459.

- Wei, Y.; Gu, K.; Tan, L. A positioning method for maize seed laser-cutting slice using linear discriminant analysis based on isometric distance measurement. Inf. Process. Agric. 2021.

- Koo, J.; Klabjan, D.; Utke, J. Combined Convolutional and Recurrent Neural Networks for Hierarchical Classification of Images. arXiv 2019, arXiv:1809.09574v3.

- Agarap, A.F.M. An Architecture Combining Convolutional Neural Network (CNN) and Support Vector Machine (SVM) for Image Classification. arXiv 2017, arXiv:1712.03541.

- Khaki, S.; Wang, L.; Archontoulis, S. A CNN-RNN Framework for Crop Yield Prediction. Front. Plant Sci. 2020, 10, 1750.

- Dyrmann, M.; Jørgensen, R.H.; Midtiby, H.S. RoboWeedSupport—Detection of weed locations in leaf occluded cereal crops using a fully convolutional neural network. Adv. Anim. Biosci. 2017, 8, 842–847.

- Barth, R.; Hemming, J.; Henten, V. Optimising realism of synthetic images using cycle generative adversarial networks for improved part segmentation. Comput. Electron. Agric. 2020, 173, 105378.

- Nguyen, N.; Tien, D.; Thanh, D. An Evaluation of Deep Learning Methods for Small Object Detection. J. Electr. Comput. Eng. 2020, 3189691.

- Chen, C.; Liu, M.; Tuzel, O.; Xiao, J. R-CNN for Small Object Detection. Comput. Vis. 2017, 10115.

- Yu, Y.; Zhang, K.; Li, Y.; Zhang, D. Fruit detection for strawberry harvesting robot in non-structural environment based on Mask-RCNN. Comput. Electron. Agric. 2019, 163, 104846.

- Boukhris, L.; Abderrazak, J.; Besbes, H. Tailored Deep Learning based Architecture for Smart Agriculture. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020.

- Basodi, S.; Chunya, C.; Zhang, H.; Pan, Y. Gradient Amplification: An efficient way to train deep neural networks. arXiv 2020, arXiv:2006.10560v1.

- Kurniawan, A. Administering NVIDIA Jetson Nano. In IoT Projects with NVIDIA Jetson Nano; Programming Apress: Berkeley, CA, USA, 2021.

- Kurniawan, A. NVIDIA Jetson Nano. In IoT Projects with NVIDIA Jetson Nano; Programming Apress: Berkeley, CA, USA, 2021.

- Verucchi, M.; Brilli, G.; Sapienza, D.; Verasani, M.; Arena, M.; Gatti, F.; Capotondi, A.; Cavicchioli, R.; Bertogna, M.; Solieri, M. A Systematic Assessment of Embedded Neural Networks for Object Detection. In Proceedings of the 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA); pp. 937–944.

- Gašparović, M.; Zrinjski, M.; Barković, D.; Radočaj, D. An automatic method for weed mapping in oat fields based on UAV imagery. Comput. Electron. Agric. 2020, 173, 105385.

- Yano, I.H.; Alves, J.R.; Santiago, W.E.; Mederos, B.J.T. Identification of weeds in sugarcane fields through images taken by UAV and random forest classifier. IFAC-Pap. 2016, 49, 415–420.

- Zhou, H.; Zhang, C. A Field Weed Density Evaluation Method Based on UAV Imaging and Modified U-Net. Remote Sens. 2021, 13, 310.

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160.

- Sudars, K. Data For: Dataset of Annotated Food Crops and Weed Images for Robotic Computer Vision Control. Mendeley Data 2021, V1.

- Xu, Y.; He, R.; Gao, Z.; Li, C.; Zhai, Y.; Jiao, Y. Weed density detection method based on absolute feature corner points in field. Agronomy 2020, 10, 113.

- Shorewala, S.; Ashfaque, A.R.S.; Verma, U. Weed Density and Distribution Estimation for Precision Agriculture Using Semi-Supervised Learning. arXiv 2021, arXiv:2011.02193. Available online: https://arxiv.org/abs/2011.02193 (accessed on 5 September 2021).

| No. | Name | Size, Pixels | Plant/Object | Number of Images | References |
|---|---|---|---|---|---|
| 1 | CropDeep | 1000 × 1000 | 31 different types of crops | 31,147 | [67] |
| 2 | Food crops and weed images | 720 × 1280 | 6 food crops and 8 weed species | 1118 | [68] |
| 3 | DeepWeeds | 256 × 256 | 8 different weed species and various off-target (or negative) plants native to Australia | 17,509 | [26] |
| 4 | Crop and weed | 1200 × 2048 | Maize, weeds | 2489 | [72] |
| 5 | Dataset with RGB images taken under variable light conditions | 3264 × 2448 | Carrot and weed | 39 | [73] |
| 6 | Crop and weed | 1200 × 2048 | 6 food crops and 8 weed species | 1176 | [63] |
| 7 | V2 Plant seedlings Dataset | 10 pixels per mm | 960 unique plants | 5539 | [74] |
| 8 | Early crop weed | 6000 × 4000 | Tomato, cotton, velvetleaf and black nightshade | 508 | [62] |
| 9 | Weed detection dataset with RGB images taken under variable light conditions | 3200 × 2400 | Carrot seedlings with weeds | 39 | [73] |
| 10 | Datasets for sugar beet crop/weed detection | 1200 × 2048 | Capsella bursa pastoris | 8518 | [75] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rakhmatulin, I.; Kamilaris, A.; Andreasen, C. Deep Neural Networks to Detect Weeds from Crops in Agricultural Environments in Real-Time: A Review. Remote Sens. 2021, 13, 4486. https://doi.org/10.3390/rs13214486
