Consistent Long-Term Monthly Coastal Wetland Vegetation Monitoring Using a Virtual Satellite Constellation

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Setting in Apalachicola Bay

2.2. Sensor Data Collection and Pre-Processing

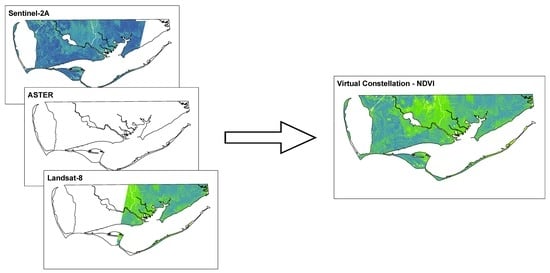

2.3. Virtual Constellation Development

Random Forest Model

2.4. Application and Testing of Virtual Constellation in Apalachicola Bay

2.4.1. Selecting the Baseline Sensor

2.4.2. Input Preparation

2.4.3. Selection of Training Data

2.4.4. Validation and Performance

3. Results

3.1. Sensor NDVI Value Agreement

3.2. Data Fusion and Reconstruction

3.3. Analysis of Virtual Constellation Model Performance

3.4. Sensitivity of Virtual Constellation Performance to Initial L8 Cloud Cover

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Sensor | Subsystem | Band | Spectral Range (µm) | Signal to Noise Ratio (SNR) | Spatial Resolution (m) | Swath Width (km) |
|---|---|---|---|---|---|---|
| Landsat 8 OLI | NIR | 5 | 0.85–0.87 | 204 | 30 | 185 |
| Landsat 8 OLI | Red | 4 | 0.63–0.68 | 227 | 30 | 185 |
| Sentinel-2A MSI | NIR | 8A | 0.86–0.88 | 72 | 20 | 290 |
| Sentinel-2A MSI | Red | 4 | 0.65–0.68 | 142 | 10 | 290 |
| ASTER | NIR | 3N | 0.78–0.86 | 202 | 15 | 60 |
| ASTER | Red | 2 | 0.63–0.69 | 306 | 15 | 60 |
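The red and NIR bands listed in the table above are the inputs from which NDVI is derived for each sensor. The following is a minimal sketch of the standard NDVI formula, (NIR − Red) / (NIR + Red), assuming the inputs are reflectance values for a single pixel. The function name `ndvi` and the sample values are hypothetical and are not taken from the article.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel.

    nir, red: reflectance in the near-infrared and red bands
    (assumed here to be plain floats, e.g. surface reflectance).
    """
    denom = nir + red
    if denom == 0:
        # NDVI is undefined when both bands are zero.
        return float("nan")
    return (nir - red) / denom


# Hypothetical reflectance values, for illustration only.
sample_value = ndvi(nir=0.45, red=0.15)
```

The same function could be applied to any of the three sensors once the band pair from the table is selected; differences in band position and width are one reason the resulting NDVI values may not agree exactly across sensors.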

| L8 NDVI | Month | Northing (m) | Easting (m) | S2A NDVI | ASTER NDVI |
|---|---|---|---|---|---|
| 0.53 | 12 | 692,842.0 | 3,295,065.0 | 0.49 | 0.37 |
| 0.50 | 12 | 692,872.0 | 3,295,065.0 | 0.53 | 0.40 |
| 0.41 | 4 | 668,722.0 | 3,298,545.0 | 0.42 | 0.26 |
| 0.34 | 4 | 668,752.0 | 3,298,545.0 | 0.69 | 0.21 |
| 0.14 | 9 | 653,625.0 | 3,299,865.0 | 0.15 | 0.20 |
| 0.12 | 9 | 653,655.0 | 3,299,865.0 | 0.11 | 0.29 |
| 0.11 | 6 | 704,565.0 | 3,306,645.0 | 0.18 | 0.24 |
| 0.13 | 6 | 704,625.0 | 3,306,645.0 | 0.63 | 0.23 |
| 0.65 | 5 | 660,435.0 | 3,306,135.0 | 0.71 | 0.69 |
| 0.64 | 5 | 660,465.0 | 3,306,135.0 | 0.69 | 0.65 |

| Synthetic Cloud Cover | R² | RMSE | p-Value |
|---|---|---|---|
| 10% | 0.8129 | 0.009648 | <0.05 |
| 20% | 0.8146 | 0.009669 | <0.05 |
| 30% | 0.8127 | 0.009490 | <0.05 |
| 40% | 0.8356 | 0.009557 | <0.05 |
| 50% | 0.8246 | 0.009474 | <0.05 |
| 60% | 0.8179 | 0.009457 | <0.05 |
| 70% | 0.8113 | 0.009548 | <0.05 |
| 80% | 0.8403 | 0.009457 | <0.05 |
| 90% | 0.8155 | 0.009546 | <0.05 |
| 100% | 0.8193 | 0.009581 | <0.05 |
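The two agreement metrics reported in the table above can be sketched as follows. This is an illustrative implementation only: it assumes R² denotes the coefficient of determination (the table itself does not say which definition was used), and the function names `rmse` and `r_squared` are hypothetical rather than the authors' code.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between two equal-length sequences."""
    n = len(observed)
    squared_errors = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return math.sqrt(squared_errors / n)


def r_squared(observed, predicted):
    """Coefficient of determination, 1 - SS_res / SS_tot.

    Assumes the observed values are not all identical,
    otherwise SS_tot is zero and the ratio is undefined.
    """
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot
```

In a cloud-cover sensitivity test of this kind, `observed` would hold the reference L8 NDVI values at the masked pixels and `predicted` the reconstructed values, with both metrics recomputed for each synthetic cloud-cover level.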

| Feature (ASTER to L8 NDVI) | Importance | Feature (S2A NDVI to L8) | Importance |
|---|---|---|---|
| ASTER NDVI | 0.586 | S2A NDVI | 0.479 |
| Northing (m) | 0.222 | Northing (m) | 0.275 |
| Easting (m) | 0.185 | Easting (m) | 0.227 |
| Month | 0.014 | Month | 0.024 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tahsin, S.; Medeiros, S.C.; Singh, A. Consistent Long-Term Monthly Coastal Wetland Vegetation Monitoring Using a Virtual Satellite Constellation. Remote Sens. 2021, 13, 438. https://doi.org/10.3390/rs13030438
