New Orthophoto Generation Strategies from UAV and Ground Remote Sensing Platforms for High-Throughput Phenotyping

Abstract

1. Introduction

2. Related Work

- The DSM is not precise enough to describe the covered object space (i.e., model each stalk, tassel/panicle, and leaf);

- The visibility/occlusion of DSM cells results in double-mapped areas in the orthophoto;

- The mosaicking process inevitably results in discontinuities across the boundary between two rectified images (i.e., at seamline locations).

3. Data Acquisition Systems and Dataset Description

3.1. Impact of Canopy on GNSS/INS-Derived Trajectory

3.2. Study Sites and Dataset Description

4. Proposed Methodology

4.1. Point Positioning Equations and Ortho-Rectification for Frame Cameras and Push-Broom Scanners

4.2. Smooth DSM Generation

4.3. Controlling Seamline Locations Away from Tassels/Panicles

4.4. Orthophoto Quality Assessment

5. Experimental Results and Discussion

- (a)

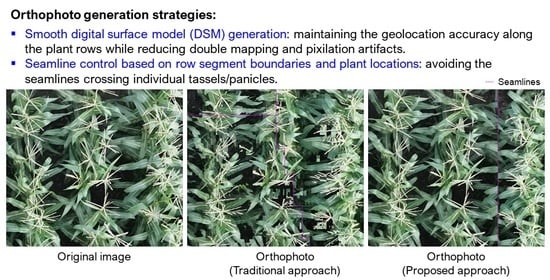

- Different approaches for smooth DSM generation, which can be used for both frame camera and push-broom scanner imagery, including the use of 90th percentile elevation within the different cells, cloth-simulation of such DSM, and elevation averaging within the row segments of cloth-based DSM;

- (b)

- A control strategy to avoid the seamlines crossing individual row segments within derived orthophotos from frame camera images and push-broom scanner scenes captured by a UAV platform;

- (c)

- A control strategy to avoid the seamlines crossing individual plant locations within derived orthophotos from frame camera images captured by a ground platform; and

- (d)

- Quality control metric to evaluate the visual characteristics of derived orthophotos from frame camera images captured by a UAV platform.
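The first and third DSM options in item (a) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the dictionary-based grid, the linear-interpolation percentile, and the `segment_of_cell` lookup are all assumptions made for illustration, and the cloth-simulation step that the paper applies between the two stages is skipped here.

```python
import math
from collections import defaultdict


def percentile(values, q):
    """q-th percentile (0-100) using linear interpolation between ranks."""
    ordered = sorted(values)
    if len(ordered) == 1:
        return ordered[0]
    rank = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(rank), math.ceil(rank)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (rank - lo)


def percentile_dsm(points, cell_size, q=90.0):
    """Grid (x, y, z) points and keep the q-th percentile elevation per cell.

    Returns a dict mapping (col, row) cell indices to an elevation.
    """
    cells = defaultdict(list)
    for x, y, z in points:
        cells[(math.floor(x / cell_size), math.floor(y / cell_size))].append(z)
    return {idx: percentile(zs, q) for idx, zs in cells.items()}


def average_within_segments(dsm, segment_of_cell):
    """Replace each cell elevation with the mean elevation of its row segment.

    segment_of_cell is a hypothetical lookup from cell index to a row-segment
    id; cells without a segment keep their original elevation.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for idx, z in dsm.items():
        seg = segment_of_cell.get(idx)
        if seg is not None:
            totals[seg][0] += z
            totals[seg][1] += 1
    smoothed = {}
    for idx, z in dsm.items():
        seg = segment_of_cell.get(idx)
        smoothed[idx] = z if seg is None else totals[seg][0] / totals[seg][1]
    return smoothed


if __name__ == "__main__":
    # Eleven points in cell (0, 0) and a single point in cell (1, 0).
    pts = [(0.1, 0.1, float(i)) for i in range(11)] + [(1.5, 0.2, 2.0)]
    dsm = percentile_dsm(pts, cell_size=1.0)
    print(dsm)
    print(average_within_segments(dsm, {(0, 0): "seg-1", (1, 0): "seg-1"}))
```

Taking a high percentile rather than the maximum makes each cell less sensitive to isolated high returns, and averaging within a row segment gives every cell of that segment the same elevation, which is the smoothing effect the list above refers to.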

5.1. Impact of DSM Smoothing and Seamline Control Strategies on Derived Orthophotos from UAV Frame Camera Imagery

5.2. Quality Verification of Generated Orthophotos Using UAV Frame Camera and Push-Broom Scanner Imagery, as Well as Ground Push-Broom Scanner Imagery over Maize and Sorghum Fields

5.3. Quality Verification of Generated Orthophotos Using Ground Frame Camera Imagery

6. Conclusions and Directions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| ID | Data Collection Date | Crop | System | Sensors | Sensor-to-Object Distance (m) | Ground Speed (m/s) | Lateral Distance (m) |
|---|---|---|---|---|---|---|---|
| UAV-A1 | 17 July 2020 | Maize | UAV | LiDAR, RGB | 20 | 2.5 | 5 |
| UAV-A2 | 17 July 2020 | Maize | UAV | RGB, hyperspectral | 40 | 5.0 | 9 |
| PR-A | 17 July 2020 | Maize | PhenoRover | RGB, hyperspectral | 3–4 | 1.5 | 4 |
| UAV-B1 | 20 July 2020 | Sorghum | UAV | LiDAR, RGB | 20 | 2.5 | 5 |
| UAV-B2 | 20 July 2020 | Sorghum | UAV | RGB, hyperspectral | 40 | 5.0 | 9 |
| PR-B | 20 July 2020 | Sorghum | PhenoRover | hyperspectral | 3–4 | 1.5 | 4 |

| ID | Dataset | Sensor | Sensor-to-Object Distance (m) | Resolution (cm) | DSM | Seamline Control |
|---|---|---|---|---|---|---|
| i | UAV-A1 | RGB | 20 | 0.25 | 90th percentile | Voronoi network |
| ii | UAV-A1 | RGB | 20 | 0.25 | Cloth simulation | Voronoi network |
| iii | UAV-A1 | RGB | 20 | 0.25 | Average elevation within a row segment | Voronoi network |
| iv | UAV-A1 | RGB | 20 | 0.25 | 90th percentile | Row segment boundary |
| v | UAV-A1 | RGB | 20 | 0.25 | Cloth simulation | Row segment boundary |
| vi | UAV-A1 | RGB | 20 | 0.25 | Average elevation within a row segment | Row segment boundary |
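The two seamline control options in this table can be contrasted with a small sketch. It rests on an assumption that is not stated in the table itself: that "Voronoi network" seamlines arise when every orthophoto cell takes its color from the image whose perspective center is planimetrically closest, and that "Row segment boundary" control instead picks one image per row segment. The function names, the centroid-based choice of image, and the data layout are all hypothetical.

```python
import math
from collections import defaultdict


def nearest_image(x, y, image_centers):
    """Id of the image whose perspective center is closest in XY to (x, y)."""
    return min(
        image_centers,
        key=lambda k: math.hypot(image_centers[k][0] - x, image_centers[k][1] - y),
    )


def assign_per_cell(cell_xy, image_centers):
    """Per-cell choice: seamlines fall wherever the nearest image changes."""
    return {c: nearest_image(x, y, image_centers) for c, (x, y) in cell_xy.items()}


def assign_per_segment(cell_xy, segment_of_cell, image_centers):
    """Per-segment choice: all cells of a row segment share one source image.

    The image is chosen from the segment centroid (an assumption); cells that
    belong to no segment fall back to the per-cell rule.
    """
    members = defaultdict(list)
    for cell, seg in segment_of_cell.items():
        members[seg].append(cell_xy[cell])
    seg_image = {}
    for seg, pts in members.items():
        cx = sum(p[0] for p in pts) / len(pts)
        cy = sum(p[1] for p in pts) / len(pts)
        seg_image[seg] = nearest_image(cx, cy, image_centers)
    return {
        c: seg_image[segment_of_cell[c]]
        if c in segment_of_cell
        else nearest_image(x, y, image_centers)
        for c, (x, y) in cell_xy.items()
    }


if __name__ == "__main__":
    centers = {"A": (0.0, 0.0), "B": (10.0, 0.0)}
    cells = {"c0": (2.0, 0.0), "c1": (4.0, 0.0), "c2": (6.0, 0.0)}
    segments = {"c0": "s", "c1": "s", "c2": "s"}
    print(assign_per_cell(cells, centers))
    print(assign_per_segment(cells, segments, centers))
```

In this toy case the per-cell rule switches from image A to image B inside the segment, so a seamline would cut through it, whereas the per-segment rule keeps all three cells on image A and pushes the seamline to the segment boundary.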

Number of established matches in each orthophoto:

| ID | Orthophoto i | Orthophoto ii | Orthophoto iii | Orthophoto iv | Orthophoto v | Orthophoto vi |
|---|---|---|---|---|---|---|
| 1 | 868 | 1319 | 1610 | 1153 | 1802 | 2361 |
| 2 | 884 | 1504 | 1548 | 1118 | 2109 | 2273 |
| 3 | 136 | 248 | 463 | 720 | 1080 | 2329 |
| 4 | 651 | 1264 | 1829 | 998 | 1799 | 2788 |
| 5 | 185 | 418 | 616 | 830 | 1597 | 2452 |
| 6 | 780 | 1155 | 1303 | 1031 | 1701 | 2211 |
| 7 | 798 | 1297 | 1883 | 1074 | 1938 | 2890 |
| 8 | 1037 | 1618 | 1927 | 1481 | 2368 | 2935 |
| 9 | 966 | 1603 | 1651 | 1315 | 2474 | 2807 |
| 10 | 560 | 1409 | 1698 | 714 | 1981 | 2547 |
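To compare the six orthophotos at a glance, the match counts in the table above can be summarized per column. The short sketch below only re-tabulates the published numbers; the variable and function names are illustrative, and the choice of totals, means, and a per-row winner as summary statistics is an assumption rather than the paper's stated procedure.

```python
# Match counts copied from the table: row ID -> counts for orthophotos i..vi.
ORTHOPHOTOS = ("i", "ii", "iii", "iv", "v", "vi")
MATCHES = {
    1: (868, 1319, 1610, 1153, 1802, 2361),
    2: (884, 1504, 1548, 1118, 2109, 2273),
    3: (136, 248, 463, 720, 1080, 2329),
    4: (651, 1264, 1829, 998, 1799, 2788),
    5: (185, 418, 616, 830, 1597, 2452),
    6: (780, 1155, 1303, 1031, 1701, 2211),
    7: (798, 1297, 1883, 1074, 1938, 2890),
    8: (1037, 1618, 1927, 1481, 2368, 2935),
    9: (966, 1603, 1651, 1315, 2474, 2807),
    10: (560, 1409, 1698, 714, 1981, 2547),
}


def column_totals(table):
    """Sum the match counts of each orthophoto over all rows."""
    return {
        name: sum(row[i] for row in table.values())
        for i, name in enumerate(ORTHOPHOTOS)
    }


def column_means(table):
    """Mean number of matches per orthophoto."""
    return {name: total / len(table) for name, total in column_totals(table).items()}


def best_orthophoto_per_row(table):
    """For each row ID, the orthophoto with the most established matches."""
    return {
        row_id: ORTHOPHOTOS[max(range(len(row)), key=row.__getitem__)]
        for row_id, row in table.items()
    }


if __name__ == "__main__":
    for name, mean in column_means(MATCHES).items():
        print(f"Orthophoto {name}: mean matches = {mean:.1f}")
    print(best_orthophoto_per_row(MATCHES))
```

With these numbers, orthophoto vi has the most matches in every row, and within each seamline strategy the counts increase from the 90th percentile DSM to cloth simulation to row-segment averaging.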

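| ID | Dataset | Sensor | Sensor-to-Object Distance (m) | Resolution (cm) | DSM | Seamline Control |
|---|---|---|---|---|---|---|
| I | UAV-A1 | RGB | 20 | 0.25 | Average elevation within a row segment | Row segment boundary |
| II | UAV-A2 | RGB | 40 | 0.50 | Average elevation within a row segment | Row segment boundary |
| III | PR-A | hyperspectral | 3–4 | 0.50 | Average elevation within a row segment | Row segment boundary |
| IV | UAV-B1 | RGB | 20 | 0.25 | Average elevation within a row segment | Row segment boundary |
| V | UAV-B2 | RGB | 40 | 0.50 | Average elevation within a row segment | Row segment boundary |
| VI | PR-B | hyperspectral | 3–4 | 0.50 | Average elevation within a row segment | Row segment boundary |
| VII | UAV-A2 | hyperspectral | 40 | 4 | Average elevation within a row segment | Voronoi network |
| VIII | UAV-A2 | hyperspectral | 40 | 4 | Average elevation within a row segment | Row segment boundary |
| IX | UAV-B2 | hyperspectral | 40 | 4 | Average elevation within a row segment | Voronoi network |
| X | UAV-B2 | hyperspectral | 40 | 4 | Average elevation within a row segment | Row segment boundary |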

| ID | Dataset | Sensor | Sensor-to-Object Distance (m) | Resolution (cm) | DSM | Seamline Control |
|---|---|---|---|---|---|---|
| 1 | PR-A | RGB | 3–4 | 0.2 | Average elevation within a row segment | Voronoi network |
| 2 | PR-A | RGB | 3–4 | 0.2 | Average elevation within a row segment | Row segment boundary |
| 3 | PR-A | RGB | 3–4 | 0.2 | Average elevation within a row segment | Plant boundary |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lin, Y.-C.; Zhou, T.; Wang, T.; Crawford, M.; Habib, A. New Orthophoto Generation Strategies from UAV and Ground Remote Sensing Platforms for High-Throughput Phenotyping. Remote Sens. 2021, 13, 860. https://doi.org/10.3390/rs13050860