1. Introduction

Coastal cliffs are specific coastal landforms characterized by steep rocky walls, which are present on about 52% of the global shoreline [

1,

2]. Their complex face topography, with ledge crevices and overhangs, is the result of geological, physical and environmental processes interplaying at the coast. The erosional processes of cliffs are mainly caused by the notching at their base due to wave forcing, and/or by the collapse of the cliff face due to the combination of atmospheric and marine processes [

2]. Sea level rise under the current climate change scenario is further intensifying these erosional processes [1].

It is fundamental to monitor the stability of coastal cliff faces and to measure its variation, as coastal cliffs provide habitat for flora and fauna [3], their collapse can cause injuries and fatalities [4], and their erosion often threatens coastal communities and infrastructure worldwide [

5]. A wide range of methods have been used to monitor coastal cliff erosion, including historic cartographic mapping, aerial photography and photogrammetry, satellite imagery, global navigation satellite systems (GNSS), total stations, airborne and terrestrial laser scanning ([

1] and references therein). Nevertheless, in the last decade, the advent of Unmanned Aerial Systems (UAS) has improved the possibility of reconstructing the 3D complex cliff topography [

6,

7,

8,

9,

10]. In fact, UAS make it possible to (i) acquire high-spatial-resolution images at low cost, (ii) monitor inaccessible areas, overcoming the logistical constraints typical of rock cliff environments, and (iii) increase the frequency of the surveys needed to quantify the spatial and temporal cliff response to environmental forcing, which in turn increases our understanding of rockfall magnitude–frequency relationships.

The 3D reconstruction of a coastal cliff from an image-based UAS survey consists of applying a Structure-from-Motion and Multi View Stereo (SfM-MVS) processing workflow, to generate a dense 3D point cloud, which is then used for building a reliable and detailed 3D model of the cliff surface [

11]. Unlike Terrestrial Laser Scanning (TLS) or Airborne Laser Scanning (ALS), SfM-MVS photogrammetry provides a low-cost, flexible, easy-to-use workflow and does not require a highly skilled operator for data acquisition and processing [

1,

12,

13].

In the literature, the works devoted to the 3D reconstruction of coastal cliffs from UAS-based topographic surveys focused mainly on the image acquisition and georeferencing strategies, testing the influence of camera angle [

8], flight patterns [

7] and distribution of ground control points (GCPs) [

6,

7]. Jaud et al. [

8] showed that using only nadir images was unsuitable, since the final point cloud can have many data gaps (zones without points). Taking advantage of the ability of drones to change the imaging angle, the authors studied the impact of this parameter (ranging from nadir to 40° off-nadir) on the accuracy of the 3D reconstruction. Off-nadir aerial coverages have been adopted in several UAS-based surveys, for example in agroforestry [

14,

15], urban areas [

16,

17] and coastal cliffs [

7,

8,

11,

18]. In fact, the acquisition geometry of aerial coverages from UAS can be a key factor in the geometric accuracy of the Bundle Block Adjustment (BBA), the density of points in the cloud and the reduction of the occlusions, that is, in reducing the gaps (holes) in point clouds [

19]. Combining nadiral and oblique (off-nadir) images optimizes the photogrammetric surveys in stepped areas [

20], improves the accuracy of the generated 3D models, but also increases the processing time [

21,

22]. For vertical cliffs, setting the camera angle to 90° off-nadir commonly yields a high density of points in the reconstruction [7].

Several authors (e.g., [

8,

23,

24]) reported that optimal image network configuration, image number, overlap, spatial resolution, and illumination conditions are the key parameters to be considered for accurate tie point detection and for dense point cloud generation. However, methods for quantifying the impact of image acquisition geometry on the quality of the point cloud (i.e., accuracy, density and data gaps) are still lacking. Due to the absence of purely 3D methodologies for analyzing point clouds, it is common to reduce their dimensionality to 2D by projecting the point cloud onto a given plane. In [25], this simple approach was used to detect and extract rockfall events on a vertical cliff from time series of TLS-based point clouds. In order to include the third spatial dimension in the analysis of multidimensional point patterns, an intuitive alternative is to voxelize the 3D space. In this context, voxel-based techniques have been used for detecting and filling gaps in 3D surface reconstruction [

26,

27], for semantic segmentation and for classifying point clouds [

28], among other applications.

In the last decade, advances in computer vision and the technological development of image sensors and UAS triggered the appearance of commercial and open-source SfM-MVS software packages and popularized the use of photogrammetry in the scientific community [29]. The fully automated “black-box” commercial software packages use simple and optimized interfaces, which in general require the tuning of only a few processing parameters, making their adoption easy for inexperienced users. Due to its low cost and its intuitive, user-friendly Graphical User Interface (GUI), Agisoft Metashape (formerly called Photoscan) is perhaps one of the most widely used SfM-MVS software packages [

12,

30,

31,

32]. For the reconstruction of the 3D scene geometry from a set of overlapping images, Metashape uses a proprietary SfM-MVS workflow whose algorithmic details are not publicly known [31]. Nonetheless, an important issue that is poorly addressed in the literature is the impact of the main processing parameters of a given SfM-MVS software package on the accuracy of the 3D reconstruction [

33,

34].

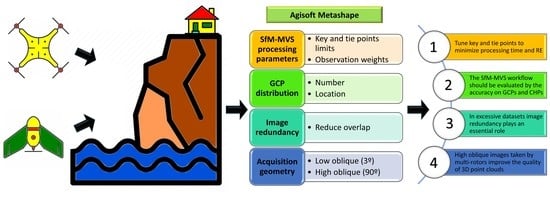

In this context, the objective of this paper was to assess the impact of the main SfM-MVS processing parameters, image redundancy and acquisition geometry of a fixed-wing and a multi-rotor UAS on the 3D reconstruction of a coastal cliff. Using one of the most widely used commercial software packages (Agisoft Metashape), a comprehensive analysis of the implemented processing workflow steps was performed, investigating the impact of tuning the default processing parameters and deriving the optimal ones. Adopting the derived optimal processing parameters, the impact of image redundancy in the excessive image dataset acquired by the multi-rotor was quantified using two point cloud quality indicators: the point cloud density and data gaps. To this end, a novel approach based on the voxelization of the point cloud was developed. Finally, the point cloud density and data gaps were used to evaluate the impact of the acquisition geometry of the aerial coverages carried out by each UAS. Overall, the analysis improved the technical knowledge required for the 3D reconstruction of coastal cliff faces by drones.

3. Materials and Methods

With the goal of identifying an optimal SfM-MVS processing workflow that maximizes the precision and accuracy of the dense clouds commonly used in cost-effective monitoring programs of coastal cliffs, the following sections describe (i) the strategies used by each UAS in image acquisition; (ii) a comprehensive analysis of the SfM-MVS processing workflow used in the 3D reconstruction of the cliff surface; and (iii) the evaluation criteria used for characterizing the quality of the dense point cloud generated in the processing workflow.

3.1. UAS Surveys and GCP Acquisition

Two flights were performed at the study area on the same date and time, 28 July 2019, under diffuse illumination conditions in order to avoid shadows. Two different UAS platforms were used: the fixed-wing SenseFly® (Cheseaux-sur-Lausanne, Switzerland) Ebee (hereinafter, Ebee), equipped with a Sony WX220 camera (4.45 mm focal length, 18 megapixel), and the multi-rotor DJI® (SZ DJI Technology Co., Shenzhen, China) Phantom 4 Pro (hereinafter, Phantom), equipped with a Sony FC6310 camera (8.80 mm focal length, 20 megapixel). The Ebee does not have a gimbal to point the camera in a given direction. However, the drone can acquire oblique images up to 45° off-nadir by using a proprietary algorithm that runs onboard the autopilot and orients the drone to the viewing angle previously defined by the user. The Phantom has a three-axis (roll, pitch and yaw) stabilization gimbal and can point the camera at any given viewing angle.

The Ebee flight mission plan was set in the eMotion3 software, defining a horizontal mapping block of 1.1 ha that was covered with a cross flight pattern above the cliff and oblique image acquisition. The front and side overlaps were set to 70% and 65%, respectively. The aircraft flew for 7 min at an average flight altitude of 116 m above the beach (i.e., base of the cliff) and the camera pitch angle was set to 3° for the acquisition of the oblique imagery. Overall, the Ebee collected 53 images with an approximate Ground Sampling Distance (GSD) of 3.3 cm (Figure 2b).

The Phantom flight mission plan was set on the Drone Harmony software [

35]. The flight path was set approximately parallel to the cliff, with the camera off-nadir angle (pitch) equal to 90°. The drone flew for 27 min and kept a distance from the cliff face varying from 40 to 60 m. It went up and down along the same line, following vertical stripes and pointing the camera alternately to the right and to the left by about 10° around the vertical axis (yaw). The front and side overlaps were set to 85% and 70%, respectively. Thus, the vertical stripes were separated from each other by 20 m, and two photographs were acquired each time the UAS ascended or descended 6 m (one to the right and one to the left). Finally, to cover the whole scene, one horizontal stripe was added above the top of the cliff with the camera pointing down (nadir, or pitch angle of 0°). The Phantom collected 448 images with a GSD varying from 1.1 to 1.6 cm (Figure 2b).
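As a rough cross-check, the GSD range reported for the Phantom can be recovered from simple pinhole geometry, GSD = distance × pixel pitch / focal length. The sketch below assumes a sensor width of 13.2 mm and an image width of 5472 pixels for the FC6310 camera; these two values are not given in the text and are stated here only as assumptions. The function name is illustrative.

```python
def ground_sampling_distance(distance_m, focal_mm, sensor_width_mm, image_width_px):
    """Return the GSD in centimetres for a camera facing the surface head-on."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    gsd_m = distance_m * pixel_pitch_mm / focal_mm
    return gsd_m * 100.0


# Assumed FC6310 sensor geometry (not stated in the text).
SENSOR_WIDTH_MM = 13.2
IMAGE_WIDTH_PX = 5472
FOCAL_MM = 8.8  # focal length given in the text

# Camera-to-cliff distances of 40 m and 60 m, as reported for the Phantom flight.
gsd_near = ground_sampling_distance(40.0, FOCAL_MM, SENSOR_WIDTH_MM, IMAGE_WIDTH_PX)
gsd_far = ground_sampling_distance(60.0, FOCAL_MM, SENSOR_WIDTH_MM, IMAGE_WIDTH_PX)
print(f"GSD at 40 m: {gsd_near:.1f} cm, at 60 m: {gsd_far:.1f} cm")
```

Under these assumptions the computed values agree with the 1.1 to 1.6 cm range quoted above.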

Figure 2c shows the circular distributions of the attitude angles (ω, φ, κ) for the two image datasets. The mean direction (μ) and the resultant vector length (R) are commonly used to characterize angular distributions [36]; R is a measure of viewing dispersion (0 means high variability and 1 means low variability). Overall, the image dataset acquired by the multi-rotor shows a higher variability for the two attitude angles ω and φ, which are the most important for the characterization of the vertical façade plane.
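The two circular statistics mentioned above can be computed from a list of angles by averaging their unit vectors. The following is a minimal sketch using only the standard library; the function name and the sample angles are illustrative and are not data from the surveys.

```python
import math


def circular_summary(angles_deg):
    """Return (mean direction in degrees, resultant vector length R)."""
    n = len(angles_deg)
    # Average the unit vectors that correspond to each angle.
    c = sum(math.cos(math.radians(a)) for a in angles_deg) / n
    s = sum(math.sin(math.radians(a)) for a in angles_deg) / n
    mean_direction = math.degrees(math.atan2(s, c)) % 360.0
    resultant_length = math.hypot(c, s)
    return mean_direction, resultant_length


# Illustrative angles only: a tightly clustered set and a widely spread set.
mu_tight, r_tight = circular_summary([88.0, 90.0, 92.0])
mu_spread, r_spread = circular_summary([0.0, 90.0, 180.0, 270.0])
print(f"clustered: mu = {mu_tight:.1f} deg, R = {r_tight:.3f}")
print(f"spread:    R = {r_spread:.3f}")
```

When R is close to 0 the mean direction is not meaningful, which is why the two quantities are usually reported together.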

In this work 20 square chessboard targets (50 cm side), used later as Ground Control Points and/or independent Check Points (CHP), were evenly distributed (as much as possible) at three different height levels along the cliff: seven targets on the bottom of the cliff, nine in the intermediate step and four on the top (

Figure 2a). These targets were surveyed with a dual-frequency GNSS geodetic receiver (Geomax Zenith 10) in Network Real-Time Kinematic mode (NRTK). The coordinates were only acquired in fix status which ensures positional precision and accuracy at centimeter level [

37]. Additional GNSS parameters were simultaneously monitored and analyzed to understand individual target accuracy (Geometric Dilution of Precision or GDOP, number of satellites, Horizontal Standard Deviation (HSDV), and Vertical Standard Deviation (VSDV)).

3.2. 3D Reconstruction from UAS-Based Imagery and SfM-MVS

Generating a georeferenced and dense 3D point cloud from a block of overlapping images with a SfM-MVS method is usually done in five processing steps [

12,

37,

38,

39,

40]: (A) feature detection; (B) feature matching and geometric validation; (C) sparse 3D reconstruction by SfM; (D) scene geometry georeferencing by GCPs and refinement of the bundle adjustment by including camera self-calibration; (E) dense 3D reconstruction by multi-view stereo dense matching (see

Figure 3).

In step A, a Scale Invariant Feature Transform (SIFT) algorithm is commonly used to detect features (or key points) in each image. The key points are invariant to changes in image scale and orientation and partially invariant to photometric distortions and 3D camera view point [

41]. The number of key points in each image depends mainly on the texture and resolution [

38].

In step B, the key points, characterized by a unique descriptor, are matched in multiple images using, in general, an Approximate Nearest Neighbor (ANN) algorithm. The key point correspondences are then filtered, for each matched image pair, by imposing a geometric epipolar constraint with a RANdom SAmple Consensus (RANSAC) algorithm.

In step C, the geometrically corrected correspondences (i.e., tie points) are used to reconstruct, simultaneously, the 3D geometry of the scene (structure) and the image network geometry (motion) in an iterative bundle adjustment. In this step, the external orientation (extrinsic) parameters (EOP) describing the position and attitude of each image in an arbitrary coordinate system, and the internal camera (intrinsic) parameters (IOP), describing the camera calibration, are also estimated using only the image coordinates of the tie points as observations.

In step D, the sparse 3D point cloud is scaled and georeferenced in the GCP coordinate system using a 3D similarity transformation (seven parameters), taking as observations the GCP coordinates, which are generally measured with a dual-frequency GNSS receiver. If a larger number of GCPs are measured and marked on the images where they appear, this additional information can be included in the bundle adjustment to refine the EOP, IOP and the 3D coordinates of the tie points. Using appropriate weights for the measurements of the image (tie points and GCP) and ground (GCP) coordinates, the bundle adjustment is re-run to minimize the reprojection and georeferencing errors.
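To make the seven parameters concrete (one scale factor, three rotation angles and three translations), the sketch below applies a 3D similarity transformation to a single point. It does not estimate the parameters from GCPs, which in practice is a least-squares problem. The rotation order Rz(κ)·Ry(φ)·Rx(ω) is one common convention and is an assumption here, as are all function names.

```python
import math


def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(omega, phi, kappa):
    """Build R = Rz(kappa) * Ry(phi) * Rx(omega); angles in radians."""
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    rx = [[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]]
    ry = [[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]]
    rz = [[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]]
    return matmul3(rz, matmul3(ry, rx))


def similarity_transform(point, scale, angles, translation):
    """Apply X' = scale * R * X + T to one 3D point."""
    r = rotation_matrix(*angles)
    return tuple(
        scale * sum(r[i][k] * point[k] for k in range(3)) + translation[i]
        for i in range(3)
    )


# Example: scale by 2, rotate 90 degrees about the Z axis, then translate.
transformed = similarity_transform(
    point=(1.0, 0.0, 0.0),
    scale=2.0,
    angles=(0.0, 0.0, math.pi / 2),
    translation=(10.0, 20.0, 30.0),
)
print(transformed)
```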

In step E, the point density of the sparse cloud is increased by several orders of magnitude (in general two or three) by applying an MVS dense matching algorithm. Starting with the sparse cloud and the EOP and IOP (possibly the optimized ones) obtained in step D, the computationally intensive dense matching procedure produces, in image space, depth maps for each group of images and finally merges them into a global surface estimate of the whole image block [

42].

Throughout the above five steps, and depending on the characteristics of the image dataset and the methods and algorithms used in each step, several parameters and thresholds are needed to control and adjust the geometric quality of the 3D reconstruction. The different SfM-MVS software packages currently used by the geoscience community, whether commercial or open-source, use different names and specific values for the processing parameters. In general, a typical SfM-MVS user runs the processing workflow with the default values recommended by the manufacturer [

12,

30,

31,

33,

42].

3.3. Impact of the Metashape Processing Parameters

The Metashape processing workflow basically consists of four steps (blue rectangle in Figure 3): (1) determination of the image EOPs and camera IOPs by bundle adjustment (implemented in the GUI function Align Photos); (2) tie point refinement (implemented in Gradual Selection); (3) optimization of the extrinsic and intrinsic camera parameters based on the tie point refinement and, optionally, on the measured and identified GCPs (implemented in Optimize Cameras); (4) dense reconstruction of the scene geometry (implemented in Build Dense Cloud).

One of the major advantages of Metashape over other SfM-MVS software packages is the possibility of using the Python scripting Application Program Interface (API) to implement automated, complex and repetitive processing workflows with minimal user intervention. Compared with the GUI, the API (light blue rectangles in Figure 3) requires additional steps. First, the Align Photos and Build Dense Cloud steps (steps 1 and 4) are each performed by two API functions: matchPhotos and alignCameras for step 1, and buildDepthMaps and buildDenseCloud for step 4. Second, to replicate the Gradual Selection GUI function (step 2), it is necessary to develop a custom API function (in our case, sparse_cloud_filtering).
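The order of the API calls described above can be sketched as follows. The method names matchPhotos, alignCameras, buildDepthMaps and buildDenseCloud come from the text; optimizeCameras and the keyword names keypoint_limit, tiepoint_limit and adaptive_fitting are assumptions based on the Metashape 1.x Python API and may differ between versions. To keep the sketch runnable without a Metashape licence, it is exercised on a hypothetical stand-in object that only records the calls; the numeric values are placeholders.

```python
class RecordingChunk:
    """Hypothetical stand-in for a Metashape chunk that logs method calls."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        # Any unknown attribute becomes a method that records its keyword arguments.
        def method(**kwargs):
            self.calls.append((name, kwargs))
        return method


def run_workflow(chunk, keypoint_limit, tiepoint_limit, adaptive_fitting,
                 tie_point_filter=None):
    """Run the four workflow steps on a chunk-like object."""
    # Step 1 (Align Photos in the GUI) is split into two API calls.
    chunk.matchPhotos(keypoint_limit=keypoint_limit, tiepoint_limit=tiepoint_limit)
    chunk.alignCameras(adaptive_fitting=adaptive_fitting)
    # Step 2 (Gradual Selection) has no single API call, so a custom callable is used.
    if tie_point_filter is not None:
        tie_point_filter(chunk)
    # Step 3 (Optimize Cameras).
    chunk.optimizeCameras()
    # Step 4 (Build Dense Cloud) is also split into two API calls.
    chunk.buildDepthMaps()
    chunk.buildDenseCloud()


chunk = RecordingChunk()
run_workflow(chunk, keypoint_limit=40000, tiepoint_limit=7000, adaptive_fitting=True)
print([name for name, _ in chunk.calls])
```

With a real installation, the stand-in would be replaced by an actual chunk object, and the custom filter would play the role of the sparse_cloud_filtering function mentioned in the text.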

At each step of the Metashape workflow the user can control and tune some of the preset processing parameters (

Table 1). However, in the literature only a few works reported the parameters values used in each of the processing steps (e.g., [

33,

43,

44]), whereas the majority adopted the default values given by the software (e.g., [

6,

11,

21]). Considering that the accuracy issues in the bundle adjustment are often due to systematic errors in the IOP (camera model) estimated during the self-calibration of the Align Photos step [45], some authors also reported the selection strategy and the parameters used in the Gradual Selection and Optimize Cameras steps (e.g., [

34,

44]).

In order to assess the impact of processing parameters on the geometric accuracy of dense clouds generated by the Metashape software, a comprehensive analysis of the processing workflow steps was performed in four stages. In each consecutive stage, we started with the Metashape default values (

Table 1) and we modified successively the main influencing parameters (marked by (+) in

Table 1). After finding the optimal values, these parameters were fixed, and the next stage was started.

Stage I: impact of the number of key and tie points and choice of the Adaptive Camera Model Fitting (ACMF). The number of key points indicates the maximum number of features that can be used as tie point candidates. The tie points limit represents the maximum number of matching points that can be used to tie this image with another. The adaptive camera model parameter is related with the strategy that can be used to perform the autocalibration in the BBA. To analyze the impact of these three parameters on the Align Photos (step 1), two processing scenarios were considered (

Table 2). In the scenario 1 (PS-1), all the key points extracted from each image were used as tie point candidates (the tie point limit is set to 0). In scenario 2 (PS-2), to reduce the time spent in key point matching and thus to optimize the computational performance [

46], the maximum number of tie points was fixed to a specific value (7000). In both scenarios, the impact of the automatic selection of camera parameters to be included in the initial camera alignment was also analyzed by executing a second processing round enabling the software option Adaptive camera model, which prevents the divergence of some IOP during the BBA of datasets with weak geometry [

46].

Stage II: impact of GCPs number and distribution. Using the optimal values for the limits of tie and key points, the impact of the number and distribution of GCPs on the 3D point cloud was assessed. Following our previous work [

7], the impact of GCP was analyzed using three processing scenarios (

Table 2). In scenarios 3 (PS-3) and 4 (PS-4), GCPs were only considered on the beach and top of the cliff, but with different numbers and locations at each level: five GCPs (and thus 15 CHPs) for PS-3 and 10 GCPs (10 CHPs) for PS-4. In scenario 5 (PS-5), five more GCPs located at the intermediate level (artificial step level) were added to the previous scenario, making a total of 15 GCPs (and thus five CHPs).

Stage III: impact of observation weights. Using the best GCP distribution derived for the optimum values of the tie point and key point limits, the impact of the weights on GCPs (marker accuracy pix) and tie points (tie points accuracy) on the generated 3D point cloud was assessed. It is worth noting that the term accuracy is incorrectly used by Agisoft, as these values represent the expected precision of each measurement, which is used to weight the different types of observations in the BBA. In scenario 6 (PS-6), the impact of observation weights on the accuracy of the 3D point cloud was assessed by combining five possible values for the precision of the automatic image measurement of tie points (tie point accuracy pix parameter in

Table 2) and four values for the manual measurement of the targets (marker accuracy pix parameter in

Table 2). The measurement precision of each GCP, described by the observed HSDV and VSDV, was used to assign the weight of each GCP in the horizontal and vertical components (marker accuracy m parameter in

Table 1), respectively.

Stage IV: impact of optimizing the camera model. With appropriate parameters that minimize the Root Mean Square Error (RMSE) on GCPs and CHPs, the impact of refining the IOP and EOP through the Optimize Cameras step was assessed. In scenario 7 (PS-7), a threshold of 0.4 was applied to remove the tie points with the highest reprojection errors. In addition, tie points that are visible in three or fewer images may also contribute higher reprojection errors. In this context, the impact of the image count observation (ICO) on the reprojection error was also evaluated: tie points with an ICO of three or less were removed by applying a filtering (or refinement) operation. To accomplish that, two additional steps were performed: (i) refinement of tie points; (ii) readjustment of IOP, EOP and 3D coordinates of tie points through a new self-calibrating BBA.
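The two filtering criteria of Stage IV can be illustrated with a small, library-independent sketch. The TiePoint record and the function name are hypothetical and do not correspond to Metashape objects; only the 0.4 threshold and the rule of removing points seen in three or fewer images come from the text, and the sample values are made up.

```python
from dataclasses import dataclass


@dataclass
class TiePoint:
    point_id: int
    reprojection_error: float  # same units as the 0.4 threshold in the text
    image_count: int           # number of images observing the point (ICO)


def refine_tie_points(tie_points, max_error=0.4, min_images=4):
    """Keep tie points with a low reprojection error and enough observations.

    min_images=4 removes points seen in three or fewer images.
    """
    return [
        tp for tp in tie_points
        if tp.reprojection_error <= max_error and tp.image_count >= min_images
    ]


# Illustrative values only.
candidates = [
    TiePoint(1, 0.2, 5),   # kept
    TiePoint(2, 0.5, 6),   # removed: error above threshold
    TiePoint(3, 0.1, 3),   # removed: seen in only three images
    TiePoint(4, 0.4, 4),   # kept: exactly at both limits
]
kept = refine_tie_points(candidates)
print([tp.point_id for tp in kept])
```

After such a filter, the bundle adjustment would be re-run on the remaining tie points, as described in step (ii).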

3.4. Impact of Image Redundancy and Acquisition Geometry

In excessive image datasets, the best compromise between the processing time and the geometric quality of the 3D cliff model can be achieved by discarding the images that do not bring any additional geometric information on the 3D reconstruction process. This approach is implemented in Metashape through the “Reduce overlap” GUI function [

46], which works on a coarse 3D model of the imaged object, generated by meshing the coarse point cloud obtained in the image alignment step. Although the algorithm is not documented in detail, it tries to find a minimal set of images such that each face of the rough 3D model is observed from predefined different image viewing angles [

46]. In Metashape the parameter Reduce overlap can be tuned by the user in order to perform three possible levels of image redundancy: high, medium and low. Using the low option 156 images were automatically removed from the original dataset (see

Section 4.4). This reduced image dataset was then used as Phantom image data set on

Section 4.1,

Section 4.2 and

Section 4.3.

Concerning the acquisition geometry, the angle of incidence, defined as the angle between the incident ray on the cliff and the normal to the cliff, is the key parameter impacting the quality of the 3D reconstruction from an image block. In this work, two 3D indicators were used to assess the Reduce overlap parameter: the point cloud density and data gaps. Both metrics were developed using a novel approach based on the voxelization of the point cloud (see

Section 3.5.2).

3.5. Evaluation Criteria

3.5.1. Geospatial Errors

The RMSE is frequently used as a proxy for assessing the quality of a SfM-MVS photogrammetric workflow or as a metric for assessing the geometric accuracy of the generated geospatial products [

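47]. For a one-dimensional variable $x$, the RMSE is given by

$$\mathrm{RMSE}_x = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{x}_i - x_i\right)^2} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\Delta x_i^2} \qquad (1)$$

where $\hat{x}_i$ and $x_i$ are the $i$-th predicted and measured value, respectively, and $\Delta x_i$ the error, that is, the difference between predicted and measured values. Extending Equation (1) to the 3D case, the accuracy of the sparse cloud composed of $N$ points, $\mathrm{RMSE}_{3D}$, can be used as a proxy of the BBA accuracy [48], and computed as:

$$\mathrm{RMSE}_{3D} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\Delta x_i^2 + \Delta y_i^2 + \Delta z_i^2\right)} \qquad (2)$$

In order to assess the impact of the GCP distribution on the georeferenced point cloud, a weighted RMSE ($\mathrm{RMSE}_w$) was computed from the RMSEs obtained for both GCPs and CHPs, with weights ($w_{GCP}$ and $w_{CHP}$) assigned according to the number of points of each type:

$$\mathrm{RMSE}_w = w_{GCP}\,\mathrm{RMSE}_{GCP} + w_{CHP}\,\mathrm{RMSE}_{CHP} \qquad (3)$$

For example, in PS-3, $w_{GCP}$ and $w_{CHP}$ were 5/20 and 15/20, respectively.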
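The error metrics of this subsection can be sketched in a few lines. The example assumes that the weights of the weighted RMSE are proportional to the number of GCPs and CHPs, which is how the description above is read here; the function names and the numeric values are illustrative only and are not results from the study.

```python
import math


def rmse_1d(predicted, measured):
    """Root mean square error of a one-dimensional variable."""
    n = len(predicted)
    return math.sqrt(sum((p - m) ** 2 for p, m in zip(predicted, measured)) / n)


def rmse_3d(predicted, measured):
    """3D RMSE from lists of (x, y, z) tuples."""
    n = len(predicted)
    squared = sum(
        (px - mx) ** 2 + (py - my) ** 2 + (pz - mz) ** 2
        for (px, py, pz), (mx, my, mz) in zip(predicted, measured)
    )
    return math.sqrt(squared / n)


def weighted_rmse(rmse_gcp, n_gcp, rmse_chp, n_chp):
    """Combine GCP and CHP RMSEs with weights proportional to point counts (assumed)."""
    total = n_gcp + n_chp
    return (n_gcp / total) * rmse_gcp + (n_chp / total) * rmse_chp


# Illustrative numbers: 5 GCPs and 15 CHPs, as in scenario PS-3.
example_1d = rmse_1d([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
example_3d = rmse_3d([(0.0, 0.0, 0.0)], [(1.0, 2.0, 2.0)])
example_w = weighted_rmse(rmse_gcp=0.02, n_gcp=5, rmse_chp=0.04, n_chp=15)
print(example_1d, example_3d, example_w)
```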

The Reprojection Error (RE) is also an important metric to assess the accuracy of the 3D reconstruction [

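23]. RE quantifies the difference between points on the sparse cloud and the same points reprojected using the camera parameters. In other words, the distance between a point observed in an image and its reprojection is measured through the collinearity equations. In practice, using the image projection and reprojection coordinates, the RE is given by:

$$\mathrm{RE} = \sqrt{\left(x_p - x_r\right)^2 + \left(y_p - y_r\right)^2} \qquad (4)$$

where $(x_p, y_p)$ and $(x_r, y_r)$ are the image coordinates of the projected (observed) and reprojected point, respectively.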

3.5.2. Point Cloud Density and Gap Detection

For estimating the volumetric density and detecting data gaps in dense point clouds, a simple approach based on the voxelization of the point cloud was proposed. In this voxelization, the cloud with its points distributed heterogeneously in 3D space, was converted into a regular 3D grid of cubic elements (i.e., voxels) with a certain size defined previously by the user. This binning procedure, which is based on a 3D histogram counting algorithm, enabled data reduction by assigning each point (or group of points) to a defined voxel. Moreover, it does not compromise the three-dimensional geometric characteristics of the object represented implicitly by the point cloud [

49]. After voxelization, the point density was estimated straightforwardly by counting, for each point, the number N of neighbors inside the corresponding voxel. The point density can also be expressed as a volumetric density by dividing, for each voxel, the number of points it contains by the voxel volume.
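The binning and density computation described above can be sketched with the standard library alone. This is a minimal illustration under the assumption of cubic voxels aligned with the coordinate axes and anchored at the origin; it is not the Matlab implementation used in this work, and the function names and sample points are illustrative.

```python
import math
from collections import Counter


def voxel_index(point, voxel_size):
    """Return the integer (i, j, k) index of the voxel containing a point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def voxel_density(points, voxel_size):
    """Map each occupied voxel to (point count, points per unit volume)."""
    counts = Counter(voxel_index(p, voxel_size) for p in points)
    volume = voxel_size ** 3
    return {idx: (n, n / volume) for idx, n in counts.items()}


# Illustrative points (metres) binned into 0.5 m voxels.
cloud = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (0.9, 0.9, 0.9), (1.5, 0.2, 0.2)]
density = voxel_density(cloud, voxel_size=0.5)
for idx, (count, per_m3) in sorted(density.items()):
    print(idx, count, per_m3)
```

Each point then inherits the count of its voxel, which corresponds to the per-point number of neighbors N described in the text.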

Due to occlusions, shadows, vegetation, or lack of image overlap, a given area of the object may not be visible from at least two images. Thus, for a given point cloud generated by SfM-MVS photogrammetry, areas without points (i.e., gaps or holes) may appear on the imaged scene. Based on the assumption that the identification of meaningful gaps in point clouds can be related to point distribution and density, a simple workflow based on morphological operations on 3D binary images was proposed (Figure 4) and implemented in Matlab R2019b (The MathWorks, Natick, MA, USA). In the first step, the point cloud was voxelized and a 3D binary image was created where each voxel was assigned the value one if it contained at least one point and zero otherwise. In the second step, each height level Z_i was analyzed individually and the empty voxels were used for estimating the path connecting two clusters of nonempty voxels. The clusters of nonempty voxels were identified by a connected component analysis with 8-neighbor connectivity, while the path estimation was done by a Euclidean distance transform of a binary image [50]. This distance transform identifies the empty voxels (gaps) that join two groups of nonempty voxels by the closest path. In the third step, the difference between the 3D path image obtained in the previous step and the initial 3D image was computed to identify the gap voxels. Finally, the centroids of the gap voxels were mapped back to the original point cloud for visualization purposes.
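The first part of this workflow, slicing the binary voxel image by height level and grouping the occupied cells with 8-neighbor connectivity, can be sketched as follows. The distance-transform path estimation of the second step is deliberately left out, so this is only a partial illustration and not the Matlab implementation; the function names and sample voxel indices are hypothetical.

```python
from collections import deque


def occupancy_slice(voxel_indices, level):
    """Return the set of (i, j) cells occupied at height index `level`."""
    return {(i, j) for (i, j, k) in voxel_indices if k == level}


def label_clusters(cells):
    """Group occupied cells into clusters using 8-neighbor connectivity."""
    remaining = set(cells)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:
            i, j = queue.popleft()
            # Visit the eight neighbors (the centre cell is already removed).
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    neighbor = (i + di, j + dj)
                    if neighbor in remaining:
                        remaining.remove(neighbor)
                        cluster.add(neighbor)
                        queue.append(neighbor)
        clusters.append(cluster)
    return clusters


# Illustrative occupied voxels: two clusters at level 0, one voxel at level 1.
occupied = {(0, 0, 0), (1, 1, 0), (4, 0, 0), (0, 0, 1)}
level0 = occupancy_slice(occupied, level=0)
clusters0 = label_clusters(level0)
print(sorted(len(c) for c in clusters0))
```

Two or more clusters at the same level indicate that empty voxels lie between them; those are the candidates that the path estimation step would then examine.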

5. Discussion

Fully automated “black-box” SfM-MVS photogrammetric packages with their default processing parameters, such as Metashape, provide simplified processing workflows appropriate for a wide range of users. However, a comprehensive understanding of the main processing parameters is necessary to control and adjust the quality of the 3D reconstruction to the characteristics of the image dataset.

In Metashape, the parameters related with the key and tie points had a huge impact on the processing time and a limited impact on the RE and on the RMSE of GCPs. The results obtained from the PS-1 and PS-2 for the two image datasets acquired with different imaging geometries, revealed an interesting pattern of these two metrics (see

Figure 6). The behavior of the combined impact of the key and tie point limits on the RE and RMSE differs between the Ebee and Phantom datasets. In both cases, increasing the number of tie points will decrease, at least in the limit, the RE. However, the observed behavior of the RE for the Phantom dataset requires special care when setting the values of these two parameters. Although the RMSE at the GCPs in the BBA had a more linear behavior, an appropriate setting of these two parameters (i.e., key point and tie point limits) is required to optimize the processing time and the 3D accuracy of the reconstruction. Not assigning a value to the tie point limit parameter implies that the time spent in the alignment step, the RE and the RMSE at GCPs in the BBA will only be related to the key point limit parameter, which may not be optimal. However, as pointed out by [24], it is possible to extend the Metashape workflow by including customized tie point filtering procedures in order to improve the quality of the dense 3D reconstruction.

Self-calibrating BBA is commonly used by Metashape users to determine the intrinsic geometry and camera distortion model. Although the ACMF parameter enables the automatic selection of camera parameters to be included in the BBA, the variability of the camera parameters depends on the key and tie point limits (see Figure 9). For image datasets with complex acquisition geometries, the camera parameters (except k1, i.e., a radial distortion coefficient) were less influenced by the number of key and tie points. As reported by [45], the growing trend of UAV manufacturers to apply generic on-board lens distortion corrections may result in preprocessed imagery for which the SfM-MVS processing software will try to model the large residual image distortions (i.e., the radial distortions) that remain after this black-box correction. To mitigate this effect, on-board image distortion corrections should be turned off or, if possible, raw (uncorrected) images should be acquired and processed. On the other hand, when the camera optimization was performed after a refinement step in a second BBA, its impact on the camera parameters was more effective, and this approach is recommended for the 3D reconstruction of coastal cliffs. It is worth noting that, when the environmental conditions allow the use of Real-Time Kinematic UAS, the strategy used to define the number and distribution of GCPs and to optimize the parameters of the subsequent self-calibrating BBA should be changed accordingly [

51,

52].

Image redundancy can be a key parameter when processing the excessive image datasets frequently acquired in coastal surveys with multi-rotor UAS, either in manually operated flights or in autonomous flights performed by the autopilot and flight/mission planning software [

19]. The Metashape “Reduce overlap” tool was very effective for reducing the number of images and therefore for decreasing the memory requirements and processing time while maintaining the 3D point density, data gaps and the accuracy of the reconstructed cliff surface that could be achieved by using the full image dataset.

Quantifying the volumetric density and the data gaps of the dense point clouds generated by SfM-MVS is also crucial for assessing the impact of a given UAS-based acquisition geometry on the quality of the dense point cloud. The combined use of a multi-rotor and flight/mission planning software allowed the execution of an aerial coverage with incidence angles adequate for the stepped and overhanging coastal cliff. This adequacy was quantified by the high spatial density and the low volume of data gaps of the dense clouds generated by processing the image blocks acquired with this approach. The acquisition geometry also had an impact on the processing time, probably because of the difficulties the dense matching algorithm has in correlating the large differences in scale present in each single image. As observed by [20], the time spent per image in generating the dense point cloud was lower for the high oblique Phantom images than for the low oblique Ebee images (29.22 s vs. 43.26 s).

In a previous study [

7], the main advantages and disadvantages of each aircraft for alongshore 3D cliff monitoring were outlined. Given the results obtained in this work, it is further recommended that the flight mission be carefully planned in order to adapt the image acquisition geometry, the GSD and flight safety to the given project conditions.

6. Conclusions

In this paper, a comprehensive analysis of the impact of the main Metashape processing parameters on the 3D reconstruction workflow was performed. To minimize both the processing time and the Reprojection Error (RE) of the alignment step, the parameters related to the key and tie point limits should be tuned accordingly. To test the impact of the acquisition geometry on the quality of the generated SfM-MVS point clouds, two UAS platforms were used in the topographic survey of the cliff face: a fixed-wing (Ebee) and a multi-rotor (Phantom). Due to the differences in flight path design and the view angle of each payload camera, the number of images acquired by the multi-rotor was approximately eight times the number of images acquired by the fixed-wing.

Taking advantage of this excessive image dataset, the impact of image redundancy on the quality of the generated dense clouds was assessed. First, using the “Reduce Overlap” Metashape function, three optimized datasets were generated from the full Phantom image dataset. Second, two 3D quality indicators developed for this purpose, the point density and data gaps, were used for assessing, visually and quantitatively, the impact of the acquisition geometry of the image blocks acquired by the two aircraft. Surprisingly, a high number of overlapping images is not a necessary condition for generating 3D point clouds with high density and low data gaps. Moreover, although the accuracy of the dense point clouds generated from the optimized workflows of these two aircraft was similar (less than 1 cm), the results showed a marked advantage of the multi-rotor in terms of processing time, point density and data gaps.

Generating high quality 3D point clouds of unsurveyed coastal cliffs with UAS can be seen as a two-step process. First, a high-density point cloud will be generated using a conventional flight mission/planning software adequate for vertical façade inspection. Second, by detecting the location and extension of the data gaps (or holes), a new optimized aerial coverage could be planned, using for example an extended 3D version of the approach presented in [

20], in order to image the areas where the gaps are present. In addition, the 3D reconstruction of coastal cliffs by combining UAS-based surveys and SfM-MVS photogrammetry should also be documented in sufficient detail to be reproducible in time and space. Besides the acquisition geometry of the aerial coverage (flight mission parameters), the values of the parameters involved in the processing workflow should be optimized in order to generate a high-quality 3D point cloud. Future research will concentrate on the optimization of the flight mission, on developing comprehensive image redundancy tools for excessive image datasets and on developing robust gap detection methods for multitemporal datasets.