1. Introduction

The 2030 Agenda for Sustainable Development of the United Nations [1] explicitly defines ending hunger, achieving food security and promoting sustainable agriculture as primary goals for our planet. To this end, remote sensing imagery [2] plays a fundamental role as a supporting tool for detecting inadequate or poor crop growing conditions, monitoring crops and estimating their production [3]. Despite recent technological advances [4,5,6], the increasing population and climate change still raise important challenges for agricultural systems in terms of productivity, security and sustainability [7,8], especially in the context of developing countries [9]. Although there is no established convention for this designation, developing countries are generally characterized by underdeveloped industries, which typically lead to important economic vulnerabilities arising from the instability of agricultural production, among other factors [10]. This is the case in Southern Asia, where the reliability and sustainability of agricultural production systems become particularly critical since many countries experience scarce diversification and productivity in this regard [11]. One of the most representative cases is Nepal, where agriculture is the principal economic activity and rice is the most important crop [12]. However, the immediate and dynamic nature of global anthropogenic changes (including rising population and climatic extremes) puts growing pressure on traditional agriculture, which increasingly demands more accurate crop monitoring and yield prediction tools to ensure the stability of food supply [13,14].

To support the agricultural systems of Nepal and other analogous developing countries, freely available multi-temporal and multi-spectral remote sensing images have proven to be excellent tools for effectively monitoring crops and their growth stages as well as for estimating crop yields before harvest [15,16,17]. Many works in the literature exemplify these facts. For instance, Peng et al. develop in [18] a rice yield estimation model based on the Moderate Resolution Imaging Spectroradiometer (MODIS) and its primary production data. In [19], Hong et al. also use MODIS for estimating rice yields but, in this case, combining the Normalized Difference Vegetation Index (NDVI) with meteorological data. Additionally, other authors analyze the use of alternative indices for the rice yield estimation problem [20]. Despite the initial success achieved by these and other important methods, the coarse spatial resolution of MODIS (i.e., 250 m) generally makes remote sensing data with finer spatial detail preferable. This is the case of Siyal et al., who present in [21] a study to estimate Pakistani rice yields from the Landsat Enhanced Thematic Mapper Plus (ETM+) instrument, which provides an improved spatial resolution of 30 m. Analogously, Nuarsa et al. conduct in [22] a similar study focused on the Bali Province of Indonesia. Landsat certainly provides important spatial resolution gains with respect to MODIS for rice yield estimation. However, its limited temporal resolution (16 days) is still an important constraint since it reduces the temporal availability of such estimates. As a result, other authors opt for jointly considering data from different instruments to relieve these intra-sensor limitations. For example, Setiyono et al. combine in [23] MODIS and synthetic-aperture radar (SAR) data in a crop growth model in order to produce spatially enhanced rice yield estimates. Nonetheless, involving multi-sensor imagery generally leads to important data processing complexities (temporal gaps, spectral differences, spatial registration, etc.) that may affect the resulting performance. In this regard, the possibility of using remote sensing data from sensors with better nominal spatial, spectral and temporal resolutions becomes very attractive for accurate rice yield estimation.
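As a brief illustration of the vegetation indices mentioned above, the following minimal sketch computes the NDVI from red and near-infrared reflectance arrays using its standard definition, NDVI = (NIR - Red) / (NIR + Red). The function name `ndvi`, the zero-denominator handling and the toy reflectance values are assumptions made for illustration only; they are not taken from any of the cited works.

```python
import numpy as np


def ndvi(red, nir):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red).

    Pixels where both reflectances are zero (e.g., no-data) are mapped to 0.0
    instead of raising a division warning. This convention is an assumption.
    """
    red = np.asarray(red, dtype=np.float64)
    nir = np.asarray(nir, dtype=np.float64)
    denom = nir + red
    # Replace zero denominators by 1.0 so the division is always defined.
    safe_denom = np.where(denom == 0, 1.0, denom)
    return np.where(denom == 0, 0.0, (nir - red) / safe_denom)


# Toy reflectances (hypothetical values): one vegetated pixel, one no-data pixel.
red_band = np.array([[0.1, 0.0]])
nir_band = np.array([[0.5, 0.0]])
index = ndvi(red_band, nir_band)
```

For multi-spectral sensors, the red and near-infrared inputs would be the corresponding reflectance bands resampled to a common grid.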

The recent open availability of Sentinel-2 (S2) imagery with higher spatial, spectral and temporal resolutions has unlocked extensive opportunities for agricultural applications, including crop mapping and monitoring [24,25,26]. As part of the Copernicus European Earth Observation program, the S2 mission offers global coverage of terrestrial surfaces by means of medium-resolution multi-spectral data [27]. In particular, S2 includes two identical satellites (S2A, launched on 23 June 2015, and S2B, which followed on 7 March 2017) that carry the Multi-Spectral Instrument (MSI). The S2 mission offers an innovative wide swath of 290 km, a spatial resolution ranging from 10 m to 60 m, a spectral resolution of 13 bands in the visible, near-infrared and shortwave infrared regions of the electromagnetic spectrum and a temporal resolution of 5 days revisit frequency. With these features, S2 imagery has the potential to overcome the aforementioned limitations of coarse satellite images and costly data sources in the yield prediction context. Different works in the remote sensing literature exemplify this trend. For instance, He et al. present in [28] a study to demonstrate the potential of S2 biophysical data for the estimation of cotton yields in the southern United States. Analogously, Zhao et al. test in [29] how different vegetation indices derived from S2 can be useful to accurately predict dry-land wheat yields across Northeastern Australia. In [30], the authors develop a model to estimate potato yield using S2 data and several machine learning algorithms in the municipality of Cuellar, Castilla y León (Spain). Additionally, Kayad et al. conduct in [31] a study to investigate the suitability of using S2 vegetation indices together with machine learning techniques to predict corn grain yield variability in Northern Italy. Hunt et al. also propose in [32] combining S2 with environmental data (e.g., soil and meteorological data) to produce accurate wheat crop yield estimates over different regions of the United Kingdom.

Notwithstanding all the conducted research on crop yield prediction, the advantages of S2 data in the context of developing countries remain an open question due to the data scarcity problem often suffered by these particularly vulnerable regions. Existing yield estimation methods mostly rely on survey data and other important variables related to crop growth, such as weather, precipitation and soil properties [33,34]. Whereas adequate high-quality data can be regularly collected in developed countries [35], the situation is rather different in developing countries, where such complete and timely information is often very difficult to obtain or not available at all [36,37,38]. Admittedly, several international agencies and programs, e.g., the Food and Agriculture Organization (FAO) and the Famine Early Warning System (FEWS), manage remote sensing data for crop monitoring and yield estimation from regional to global scales. However, these kinds of systems do not effectively fulfill the needs of national or local scale actions, including rice crop yield prediction in Nepal [39]. In these circumstances, the free availability of S2 imagery motivates the use of these data to address such challenges by building sustainable solutions for developing countries.

Accurate crop yield estimates require not only high-quality remote sensing data but also advanced algorithms. In this respect, deep learning models, such as Convolutional Neural Networks (CNNs), have shown great potential in several important remote sensing applications, including crop yield prediction [35,40,41]. Whereas traditional regression approaches often require feature engineering and field knowledge to extract relevant characteristics from images, deep learning models are able to automatically learn features at multiple levels of data representation [42]. As a result, the state of the art in crop type classification and yield estimation has shifted from conventional machine learning algorithms to advanced deep learning models, and from depending only on the spectral features of single images to jointly using spatial-spectral and temporal information for better accuracy, e.g., [43,44,45].

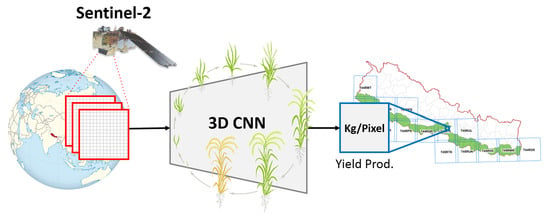

With all these considerations in mind, the use of deep learning technologies together with the advantages of S2 imagery can play a fundamental role in relieving current limitations of traditional rice crop yield estimation systems, which are mainly based on crop samples, data surveys and field verification reports [46]. Nonetheless, the operational applicability of such technologies to S2 data has not yet been addressed in the context of rice production in developing countries, which is precisely the gap that motivates this work. Specifically, this paper introduces the use of S2 data for rice crop yield prediction, with a case study in Nepal, and provides an operational deep learning-based framework to this end. Firstly, we review different state-of-the-art algorithms for yield estimation, based on which the CNN approach is selected as the core technology of our proposed framework due to its excellent performance [42]. Next, we build a new large-scale rice crop database of Nepal (RicePAL) made of multi-temporal S2 products from 2016 to 2018 and ground-truth rice yield data to validate the performance of the proposed approach. Thereafter, we propose a novel 3D CNN architecture for the accurate estimation of rice production that takes advantage of multi-temporal S2 images together with supporting climate and soil information. Finally, an extensive experimental comparison, including several state-of-the-art regression and CNN-based crop yield prediction models, is conducted to validate the effectiveness of the proposed framework while analysing the impact of using different temporal and climate/soil data settings. In brief, the main contributions of this paper can be summarized as follows:

1. The suitability of using S2 imagery to effectively estimate strategic crop yields in developing countries is investigated, with a case study in Nepal and its local rice production.
2. A new large-scale rice crop database of Nepal (RicePAL), composed of multi-temporal S2 products and ground-truth rice yield information from 2016 to 2018, is built.
3. A novel operational CNN-based framework, adapted to the intrinsic data constraints of developing countries, is designed for accurate rice yield estimation.
4. The effect of considering different temporal, climate and soil data configurations on the performance achieved by the proposed approach and by several state-of-the-art regression and CNN-based yield estimation methods is studied.

The code related to this work will be released for reproducible research within the remote sensing community (https://github.com/rufernan/RicePAL, accessed on 3 April 2021).

The rest of the paper is organized as follows. Section 2 provides a comprehensive review of the existing state-of-the-art methods for crop yield estimation using remote sensing data. Section 3 introduces the study area and describes in detail the dataset created in this work. Section 4 presents the proposed framework and describes the considered CNN-based architecture. Section 5 conducts a comprehensive experimental comparison, and Section 6 discusses the findings of the experimental designs and performance comparisons. Finally, Section 7 concludes the work and provides some remarks on plausible future research lines.

2. Related Work

In the literature, many studies have shown the advantages of using remote sensing data for crop yield estimation by employing statistical methods based on regression models. For instance, in [47], a piece-wise linear regression method with a break-point is employed to predict corn and soybean yields using NDVI, surface temperature, precipitation and soil moisture. The work in [48] used a step-wise regression method for estimating winter wheat yields using MODIS NDVI data. In [49], crop yield is estimated with a prediction error of about 10% in the United States Midwest by employing an Ordinary Least Squares (OLS) regression model using time series of MODIS products and a climate dataset. In summary, the classical approach to crop yield estimation is mostly based on multivariate regression analysis of the relationship between crop yields and agro-environmental variables such as vegetation indices, climatic variables and soil moisture. With the advances in machine learning, researchers have also applied other models to remote sensing data for crop yield prediction. For example, in [50], an Artificial Neural Network (ANN) trained with the back-propagation algorithm is introduced to forecast winter wheat crop yield from remote sensing data, in order to overcome the difficulties that traditional statistical algorithms (especially regression models) face due to the nonlinear character of agricultural ecosystems. The study demonstrated a higher accuracy of the ANN compared to a multiple linear regression model. Among the most commonly used machine learning techniques, it is possible to highlight support vector regression (SVR), Gaussian process regression (GPR) and the multi-layer perceptron (MLP) [51]. These techniques have contributed to improving the accuracy of crop yield prediction. However, they often have the disadvantage of requiring feature engineering, which makes other paradigms generally preferred.
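The classical regression approach summarized above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure of any cited work: the feature columns (a vegetation index and a precipitation value), the toy numbers and the helper names `fit_ols`, `predict_ols` and `rmse` are all hypothetical. The fit uses NumPy's least-squares solver, and the error is reported as the root mean squared error (RMSE).

```python
import numpy as np


def fit_ols(features, yields):
    """Fit yield = b0 + b1*x1 + ... + bp*xp by ordinary least squares."""
    features = np.asarray(features, dtype=np.float64)
    # Prepend a column of ones so the first coefficient is the intercept.
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, np.asarray(yields, dtype=np.float64), rcond=None)
    return coef


def predict_ols(coef, features):
    """Apply fitted coefficients (intercept first) to new feature rows."""
    features = np.asarray(features, dtype=np.float64)
    design = np.column_stack([np.ones(len(features)), features])
    return design @ coef


def rmse(y_true, y_pred):
    """Root mean squared error between observed and predicted yields."""
    diff = np.asarray(y_true, dtype=np.float64) - np.asarray(y_pred, dtype=np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


# Hypothetical samples: columns are (vegetation index, precipitation).
X = np.array([[0.2, 10.0], [0.4, 12.0], [0.6, 9.0], [0.8, 11.0]])
# Synthetic yields generated from a known linear rule, for illustration only.
y = 1000.0 + 3000.0 * X[:, 0] + 50.0 * X[:, 1]

coef = fit_ols(X, y)
error = rmse(y, predict_ols(coef, X))
```

Because the synthetic yields follow an exact linear rule, the fit recovers the generating coefficients; with real survey data the residual error would reflect the nonlinearity discussed above.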

One of the first works employing a deep learning approach for crop yield estimation is [52]. Specifically, this study employed a Caffe-based deep learning regression model to estimate corn crop yield at county level in the United States. The proposed architecture improved on the performance of SVR. Additionally, the work presented in [35] employed CNN and long short-term memory (LSTM) algorithms to predict county-level soybean yields in the United States under a location-invariance assumption. To address spatial and temporal dependencies across data points, the authors proposed the use of a linear Gaussian Process (GP) layer on top of these neural network architectures. The results demonstrated that the CNN and LSTM approaches outperformed the other competing techniques used as baselines, namely ridge regression, decision trees and a CNN with three hidden layers. The final crop yield prediction was improved by the addition of the linear GP, resulting in a 30% reduction of the root mean squared error (RMSE) with respect to the baselines. The location-invariance assumption adopted in [35] discards the spatial information of satellite imagery, which can also be crucial for crop yield estimation. To overcome this limitation, a CNN approach based on 3D convolutions that considers both spatial and temporal features for yield prediction is introduced in [40]. Firstly, the authors replicated the Histogram CNN model of [35] with the same input dataset and set it as the baseline for their approach. Considering the computational cost, a channel compression model was applied to reduce the channel dimension from 10 to 3. Thereafter, a 3D convolution was stacked on the model. The proposed CNN architecture outperformed the replicated Histogram CNN and the non-deep learning regressors used as baselines [35], with an average RMSE of 355 kg per hectare (kg/ha). Working with the same data, [41] proposed a novel CNN-LSTM model for end-of-season and in-season soybean yield prediction. The network consisted of a CNN followed by an LSTM, where the CNN learns the spatial features and the LSTM learns the temporal patterns from the features extracted by the CNN. The model achieved a reduced average RMSE of 329.53 kg/ha, which was better than that of the CNN and LSTM models. Recently, Ref. [53] applied a CNN model to predict crop yield using NDVI and red, green and blue (RGB) bands acquired from an Unmanned Aerial Vehicle (UAV). The results showed a better performance of the CNN architecture with RGB data. Ref. [54] proposed a novel CNN architecture which used two separate branches to process RGB and multi-spectral images from a UAV to predict rice yield in Southern China. The resulting accuracy outperformed that of the traditional vegetation index-based regression model. The study also highlighted that, unlike the vegetation index-based regression model, the CNN performs much better and more robustly for yield estimation at the maturing stage.
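To make the idea of a 3D convolution over spatial and temporal dimensions concrete, the following minimal sketch applies a single-channel "valid" 3D filter to a small (time, height, width) volume. It is a didactic sketch under stated assumptions and does not reproduce the architecture of [40] or any other cited model: the function name `conv3d_valid`, the array shapes and the averaging kernel are hypothetical, and, as in most deep learning libraries, the operation is implemented as a cross-correlation (no kernel flipping).

```python
import numpy as np


def conv3d_valid(volume, kernel):
    """Single-channel 'valid' 3D cross-correlation over (time, height, width)."""
    volume = np.asarray(volume, dtype=np.float64)
    kernel = np.asarray(kernel, dtype=np.float64)
    kt, kh, kw = kernel.shape
    t, h, w = volume.shape
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            for k in range(out.shape[2]):
                # Each output value mixes a spatio-temporal neighbourhood.
                window = volume[i:i + kt, j:j + kh, k:k + kw]
                out[i, j, k] = np.sum(window * kernel)
    return out


# Hypothetical input: 3 acquisition dates of a 5x5 single-band patch.
patch_series = np.ones((3, 5, 5))
# A 3x3x3 averaging kernel spanning all three dates.
kernel = np.ones((3, 3, 3)) / 27.0
features = conv3d_valid(patch_series, kernel)
```

A 2D convolution would instead slide only along height and width, so the temporal axis would have to be folded into the channel dimension.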

Based on the related literature [42], CNN and LSTM are the most widely used deep learning algorithms for addressing the crop yield prediction problem with high accuracy. On the one hand, when these algorithms are used separately, it was demonstrated in [35] that CNN achieved higher accuracy than LSTM. On the other hand, the combined CNN-LSTM model performed relatively better in [41]. However, the experiments in [41] were conducted using a long-term dataset with a high temporal dimension. Because Copernicus S2 data have only been available for a few years, the use of the LSTM model is limited in this work. Therefore, CNN is chosen as the core technology for the rice crop yield estimation framework presented in this work. Among the different CNN-based architectures, the CNN-3D model proposed by [40] achieved the highest accuracy to date and, hence, 3D convolutions will serve as the basis of the newly presented architecture.

6. Discussion

According to the quantitative results reported in Table 3, Table 4, Table 5 and Table 6, there are several important points which deserve to be mentioned regarding the performance of the considered methods in the rice crop yield estimation task. When considering traditional regression-based techniques, i.e., LIN, RID, SVR and GPR, it is possible to observe that GPR consistently obtains the best result followed by SVR, whereas LIN and RID clearly show a lower performance. Note that all these regression functions have been trained using pixel-wise spectral information and, in this situation, the most powerful algorithms provide the best results due to the inherent complexity of the yield estimation problem [42]. These outcomes are consistent throughout the four considered experimental scenarios, which reveals the better suitability of SVR and GPR for this kind of problem, as supported by other works [23,77]. Nonetheless, the performance of these traditional techniques is very limited from a global perspective since our experimental results show that CNN-based models are able to produce substantial performance gains in the rice crop yield estimation task. As can be seen, any of the tested CNN-based networks outperforms the traditional regression methods by a large margin (regardless of the considered patch size and experimental setting), which indicates the higher capability of the CNN technology to effectively estimate rice crop yields in Nepal with S2 data. On the one hand, the great potential of CNNs to extract highly discriminating features from a neighboring region (patch) allows generating more accurate yield predictions for a given pixel. On the other hand, the higher resolution of S2 (with respect to other traditional sensors, e.g., MODIS) can make these convolutional features even more informative for mapping ground-truth yield values in the context of this work.

When analyzing the global results of the considered CNN-based schemes (i.e., CNN-2D, CNN-3D and the proposed network), the proposed network achieves a remarkable improvement with respect to the regression baselines as well as the CNN-2D and CNN-3D models. On average, CNN-2D and CNN-3D obtain a global RMSE of and kg/ha, whereas the average result of the proposed model is kg/ha. Even though the three considered CNN models are able to produce substantial gains with respect to the baseline regression methods, the proposed network exhibits the best overall performance, obtaining an average RMSE improvement of and units over CNN-2D and CNN-3D, respectively. Analyzing these results in more detail provides a better understanding of the performance differences among the considered methods and experimental scenarios. In general, the experiments show that using a larger patch size provides better results, especially with CNN-3D and the proposed network. Intuitively, including a larger pixel neighbourhood may provide more information that can be effectively exploited by CNN models. However, the bigger the patch, the more complex the data and the more likely it is to be affected by cloud coverage and other anomalies. Note that, as we increase the patch size, the extracted patches are more likely to be invalid, and this fact prevents us from considering patches beyond a certain spatial limit.
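The trade-off between patch size and patch validity can be illustrated with a minimal sketch. It assumes, purely for illustration, that a patch is discarded whenever any pixel in its neighbourhood is flagged as invalid (e.g., cloudy or no-data); the function name `extract_valid_patches`, the mask convention and the toy image are hypothetical and are not taken from the released code.

```python
import numpy as np


def extract_valid_patches(image, valid_mask, patch_size):
    """Collect square patches whose whole neighbourhood is valid.

    image: array of shape (H, W, C).
    valid_mask: boolean array of shape (H, W), False for cloudy/no-data pixels.
    patch_size: odd side length of the square patch (assumed odd here).
    Returns the list of centre coordinates and the list of patches.
    """
    half = patch_size // 2
    h, w = valid_mask.shape
    centers, patches = [], []
    for r in range(half, h - half):
        for c in range(half, w - half):
            rows = slice(r - half, r + half + 1)
            cols = slice(c - half, c + half + 1)
            # Keep the patch only if no pixel in the window is invalid.
            if valid_mask[rows, cols].all():
                centers.append((r, c))
                patches.append(image[rows, cols, :])
    return centers, patches


# Toy 7x7 image with 2 bands and a single invalid pixel in one corner.
image = np.zeros((7, 7, 2))
mask = np.ones((7, 7), dtype=bool)
mask[0, 0] = False

centers_3, _ = extract_valid_patches(image, mask, 3)
centers_5, _ = extract_valid_patches(image, mask, 5)
```

In this toy case, 24 of the 25 candidate 3x3 patches remain valid, against 8 of the 9 candidate 5x5 patches, so the same invalid pixel removes a larger share of the bigger patches.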

In this work, we test five different patch sizes () and the best average results are always achieved with 27 and 33. In this respect, we can observe noteworthy performance differences among the tested CNN-based models. When fixing the patch size to small values (e.g., ), it is possible to see that CNN-2D obtains better results than CNN-3D, whereas considering larger patch sizes (e.g., ) makes CNN-3D work better. This observation can be explained from the perspective of the data and model complexities. As previously mentioned, the larger the patch size, the higher the data complexity and, hence, more intricate models are expected to better exploit such data. In this sense, the additional temporal dimension of CNN-3D increases the number of network parameters, which eventually allows this method to take better advantage of larger patches. In contrast, the proposed network has been designed to control the total number of layers (as in CNN-2D) while defining 3D convolutional blocks that work to reduce over-fitting, which allows it to provide competitive advantages with respect to the CNN-2D and CNN-3D models regardless of the patch size.
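The remark on the number of network parameters can be checked with simple arithmetic. The sketch below counts the weights and biases of one standard convolutional layer, assuming the same numbers of input and output channels in the 2D and 3D cases; the function names and the layer sizes (10 input channels, 16 filters, 3x3 and 3x3x3 kernels) are hypothetical and do not correspond to the tested architectures.

```python
def conv2d_params(c_in, c_out, k):
    """Weights plus biases of a 2D convolution with a k x k kernel."""
    return k * k * c_in * c_out + c_out


def conv3d_params(c_in, c_out, k, k_t):
    """Weights plus biases of a 3D convolution with a k_t x k x k kernel."""
    return k_t * k * k * c_in * c_out + c_out


# Hypothetical layer: 10 input channels, 16 filters, spatial kernel size 3.
params_2d = conv2d_params(10, 16, 3)      # 3*3*10*16 + 16 = 1456
params_3d = conv3d_params(10, 16, 3, 3)   # 3*3*3*10*16 + 16 = 4336
```

Under these assumptions, the extra kernel dimension multiplies the number of weights by the temporal kernel size, which is one reason why a 3D model may need more data, or larger patches, to be trained reliably.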

In relation to the considered experimental settings, it is important to analyze how the different data sources and acquisition dates affect the rice yield estimation task. In all the conducted experiments, we can observe that, in general, the joint use of SoS, PoS and EoS temporal information (i.e., S/P/E) consistently provides better results than any other data combination. Although a few exceptions can be found, this trend makes sense from an intuitive perspective: the better the rice crop cycle is modeled via the input images, the better the corresponding yield predictions are expected to be. Important observations can also be made when analyzing the effect of the different data sources (i.e., S2, +C and +S). To facilitate this analysis, Table 1 presents a summary with the best RMSE (kg/ha) results for each experiment and method. As can be seen, all the regression algorithms obtain their best results when using S2 imagery together with climate and soil data (experiment 4). This outcome indicates that the limitations of these traditional methods in uncovering features from the pixel spatial neighbourhood make the use of supporting climate and soil data highly suitable. Nonetheless, the situation is rather different with CNN-based models. More specifically, it is possible to observe two general trends depending on the considered patch size. On the one hand, the use of climate and soil data (experiment 4) with small patches (e.g.,

) tends to provide certain performance gains in the rice crop yield estimation task. For instance, CNN-2D with

produces the minimum RMSE value in experiment 4, whereas CNN-3D and the proposed network do the same with

. On the other hand, the use of larger patches (e.g.,

) shows that S2 imagery (experiment 1) generally becomes more effective than other data source combinations. In the context of this work, this fact reveals that CNN-based methods do not necessarily require additional supporting data when using large input patches since they are able to uncover useful features from the pixel neighborhood. As can be seen in Table 1, CNN-2D, CNN-3D and the proposed network obtain the best RMSE result in experiment 1 when considering

. Another important remark can be made when comparing the inclusion of climate (experiment 2) and soil data (experiment 3). According to the reported results, climate information seems to work slightly better than soil with traditional regression algorithms, whereas CNN models show the opposite trend. Once again, these differences may be caused by the aforementioned data complexity since the number of soil bands (6) is higher than the number of climate bands (4) and, hence, soil data may benefit all CNN models and particularly the proposed approach.
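The different data source configurations discussed above (S2 alone, S2 with climate, S2 with soil, and all three) can be thought of as stacking extra bands along the channel axis of each input patch. The following minimal sketch illustrates this idea; it is an assumption about how such inputs could be assembled, not a description of the released implementation. The function name `stack_sources`, the patch size and the number of S2 bands (10) are hypothetical, whereas the 4 climate bands and 6 soil bands are the counts stated in the text.

```python
import numpy as np


def stack_sources(s2, climate=None, soil=None):
    """Concatenate optional climate and soil bands to an S2 patch.

    All inputs are arrays of shape (H, W, bands) on the same spatial grid.
    """
    layers = [s2]
    if climate is not None:
        layers.append(climate)
    if soil is not None:
        layers.append(soil)
    # Bands from every available source are appended along the last axis.
    return np.concatenate(layers, axis=-1)


# Hypothetical 9x9 patch; the number of S2 bands (10) is an assumption.
s2_patch = np.zeros((9, 9, 10))
climate_patch = np.zeros((9, 9, 4))   # 4 climate bands, as stated in the text
soil_patch = np.zeros((9, 9, 6))      # 6 soil bands, as stated in the text

only_s2 = stack_sources(s2_patch)
s2_climate = stack_sources(s2_patch, climate=climate_patch)
s2_soil = stack_sources(s2_patch, soil=soil_patch)
s2_all = stack_sources(s2_patch, climate=climate_patch, soil=soil_patch)
```

With these shapes, the soil configuration adds more input channels than the climate one, which is consistent with the data complexity argument given above.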

All in all, the newly defined network consistently shows the best performance over all the tested scenarios, which indicates its higher suitability for estimating rice crop yields in Nepal from S2 data. The main advantage of the proposed architecture over CNN-2D and CNN-3D lies in its ability to effectively balance two aspects that play a key role in the crop yield estimation task: multi-temporal information and contextual limitations. Firstly, the presented approach takes advantage of 3D convolutions to introduce an additional temporal dimension, which allows generating multi-temporal features that do not necessarily depend on the spectral information itself. That is, seasonal data can be better differentiated from spectral data and hence better exploited for the annual rice crop yield prediction. Secondly, the proposed network also accounts for the data limitations that may occur, especially in the context of developing countries. In other words, the use of 3D convolutions increases the model complexity and, in this scenario, over-fitting may become an important issue with limited data. Note that it is possible to see this effect when comparing the performances of CNN-2D and CNN-3D with small patch sizes, e.g., Table 3. To relieve this problem, we propose a new architecture which makes use of two different 3D convolutional building blocks that work to reduce the network over-fitting. In contrast to CNN-3D, the proposed approach reduces the total number of convolutional layers. Besides, it avoids the need for an initial compression module while defining new blocks that make the training process more effective regardless of the input patch size and auxiliary data availability. These results are supported by the qualitative regression maps displayed in Figure 5. According to the corresponding image details, the proposed approach provides the visual result most similar to the ground-truth rice yield map since it is able to remove an important part of the output noise generated by the other yield prediction methods.

Figure 6 displays the regression plots corresponding to the results of experiment 4 with S/P/E-S2+C+S. As can be seen, CNN-3D provides better results than CNN-2D, especially when increasing the patch size. Nonetheless, the regression fit provided by the proposed network is the most accurate, which supports its better performance for the problem of estimating rice crop yields in Nepal from S2 imagery.