Mapping Smallholder Maize Farms Using Multi-Temporal Sentinel-1 Data in Support of the Sustainable Development Goals

Abstract

1. Introduction

2. Literature Review

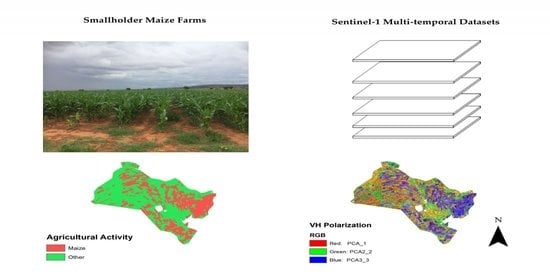

3. Materials and Methods

3.1. Study Area and Field Data Collection

3.2. Sentinel-1 Data Acquisition and Pre-Processing

3.3. Machine Learning Algorithms

3.4. Experimental Design

3.5. Accuracy Assessment and Smallholder Maize Area Estimation

4. Results

4.1. Accuracy Assessment

4.2. Variable Importance

4.3. Mapping and Area Estimate for Smallholder Maize Farms

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Search Criteria (Limited to Article, Book Chapter, and Book) | Scopus | Web of Science Core Collection |
|---|---|---|
| TITLE-ABS-KEY (remote AND sensing AND maize OR corn) | 1672 | 1942 |
| TITLE-ABS-KEY (remote AND sensing AND sdgs) | 49 | 66 |
| TITLE-ABS-KEY (remote AND sensing AND sdgs AND maize OR corn) | 1 | 1 |
| TITLE-ABS-KEY (remote AND sensing AND maize OR corn AND smallholder) | 35 | 43 |

| Model | Overall Accuracy | Cross-Validation | Reference Class | Confusion Matrix: Predicted Planted Maize | Confusion Matrix: Predicted Non-Planted |
|---|---|---|---|---|---|
| SVM | 0.971 | 0.89 ± 0.05 | Planted Maize | 20,139 | 1457 |
| | | | Non-Planted | 628 | 50,790 |
| XGBoost | 0.968 | 0.96 ± 0.02 | Planted Maize | 20,115 | 1481 |
| | | | Non-Planted | 825 | 50,593 |

| Metric | SVM: Planted Maize | SVM: Non-Planted | XGBoost: Planted Maize | XGBoost: Non-Planted |
|---|---|---|---|---|
| Precision | 0.97 | 0.972 | 0.961 | 0.972 |
| Recall | 0.933 | 0.988 | 0.931 | 0.984 |
| F1-Score | 0.951 | 0.98 | 0.946 | 0.978 |
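The overall accuracy and the per-class precision, recall, and F1 values can be recomputed from the two confusion matrices as a consistency check. The sketch below is plain Python and is not the authors' code. It assumes that rows are reference (ground-truth) classes and columns are predicted classes, in the order Planted Maize, Non-Planted. The table does not state this orientation, but it is the one that reproduces the published precision and recall. The names `CONFUSION`, `overall_accuracy`, and `class_metrics` are illustrative.

```python
"""Recompute accuracy metrics from the reported confusion matrices.

Assumption: rows = reference classes, columns = predicted classes,
ordered as (Planted Maize, Non-Planted).
"""

CLASSES = ("Planted Maize", "Non-Planted")

# Counts copied from the confusion-matrix table (rows = reference, columns = predicted).
CONFUSION = {
    "SVM": [[20139, 1457], [628, 50790]],
    "XGBoost": [[20115, 1481], [825, 50593]],
}


def overall_accuracy(cm):
    """Share of all samples on the diagonal, i.e., correctly classified."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def class_metrics(cm, k):
    """Return (precision, recall, F1) for class index k."""
    tp = cm[k][k]
    predicted_k = sum(cm[i][k] for i in range(len(cm)))  # column total: all samples predicted as k
    reference_k = sum(cm[k])                             # row total: all reference samples of k
    precision = tp / predicted_k
    recall = tp / reference_k
    f1 = 2 * precision * recall / (precision + recall)   # harmonic mean of precision and recall
    return precision, recall, f1


if __name__ == "__main__":
    for model, cm in CONFUSION.items():
        print(f"{model}: overall accuracy = {overall_accuracy(cm):.3f}")
        for k, name in enumerate(CLASSES):
            p, r, f = class_metrics(cm, k)
            print(f"  {name}: precision={p:.3f} recall={r:.3f} F1={f:.3f}")
```

Rounded to three decimals, these values match the overall accuracies (0.971 and 0.968) and the per-class precision, recall, and F1-scores in the tables. This agreement is what supports the row/column orientation assumed above.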

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mashaba-Munghemezulu, Z.; Chirima, G.J.; Munghemezulu, C. Mapping Smallholder Maize Farms Using Multi-Temporal Sentinel-1 Data in Support of the Sustainable Development Goals. Remote Sens. 2021, 13, 1666. https://doi.org/10.3390/rs13091666
