Attention-Unet-Based Near-Real-Time Precipitation Estimation from Fengyun-4A Satellite Imageries

Abstract

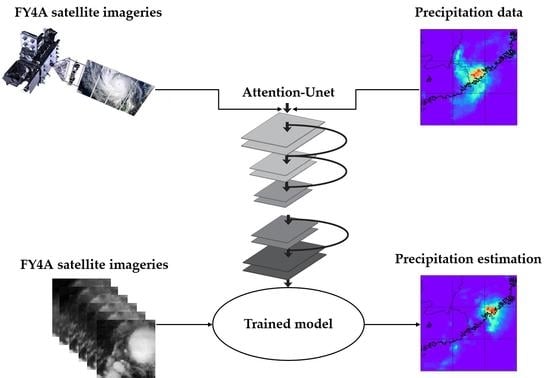

1. Introduction

- (1) Adapt and test the Attention-Unet model so that it can extract useful features from satellite information and support accurate precipitation estimation.

- (2) Evaluate the effectiveness of the proposed Attention-Unet model for precipitation identification and precipitation amount estimation by comparing its performance with the CMORPH product, which is used operationally worldwide, and with FY4A-QPE, the operational precipitation estimation product of the FY4A satellite.

- (3) Evaluate the capability of the proposed Attention-Unet model by comparing its performance with other deep learning models, namely Unet and PERSIANN-CNN.

- (4) Evaluate the performance of the Attention-Unet model on an extreme precipitation event that occurred outside the areas used for calibration, to test its potential for future application on the global scale.

2. Materials and Methods

2.1. Data and Study Area

2.1.1. Study Area

2.1.2. Fengyun 4A Satellite Data

2.1.3. Precipitation Products

2.2. Methodology

2.2.1. Network Introduction
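What distinguishes Attention-Unet from Unet is the additive attention gate of Oktay et al. (2018). In that design, the coarser decoder feature map acts as a gating signal. It produces a per-pixel coefficient in (0, 1), and this coefficient rescales the encoder features passed through each skip connection before they are concatenated in the decoder. The NumPy sketch below illustrates only that mechanism and is not the authors' configuration. Several things are assumptions chosen for illustration: the function and parameter names (`attention_gate`, `w_x`, `w_g`, `psi`), the channel sizes, the random weights, the absence of a batch axis, and the premise that the gating signal has already been resampled to the skip-connection grid.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, mapping real values into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def attention_gate(x, g, w_x, w_g, b_g, psi, b_psi):
    """Additive attention gate in the style of Oktay et al. (2018).

    1x1 convolutions are written as matrix products over the channel axis.
    x:   skip-connection features, shape (H, W, C_x)
    g:   gating signal from the coarser decoder level, shape (H, W, C_g);
         assumed here to be already resampled to the grid of x
    w_x: (C_x, C_int), w_g: (C_g, C_int), b_g: (C_int,)
    psi: (C_int,), b_psi: scalar
    Returns the gated features (H, W, C_x) and coefficients alpha (H, W).
    """
    # Project both inputs to a shared intermediate space, add, apply ReLU
    q = np.maximum(x @ w_x + g @ w_g + b_g, 0.0)
    # Collapse the intermediate channels to one coefficient per pixel
    alpha = sigmoid(q @ psi + b_psi)
    # Rescale every channel of x by its pixel's coefficient
    return x * alpha[..., None], alpha


# Hypothetical example: random weights, 8x8 grid, 16 skip / 32 gating channels
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 8, 16))
g = rng.normal(size=(8, 8, 32))
w_x = rng.normal(size=(16, 4))
w_g = rng.normal(size=(32, 4))
b_g = np.zeros(4)
psi = rng.normal(size=4)
gated, alpha = attention_gate(x, g, w_x, w_g, b_g, psi, 0.0)
print(gated.shape, alpha.shape)  # (8, 8, 16) (8, 8)
```

In a trained network these weights are learned, so pixels whose skip features are irrelevant to the target (here, precipitation) can be suppressed toward zero before decoding.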

2.2.2. Network Structure and Parameters

2.2.3. Baseline Models

- Unet


- PERSIANN-CNN

2.3. Experiment

2.3.1. Data Preprocessing

2.3.2. Hyperparameter Settings

2.3.3. Evaluation Metrics

2.3.4. Training Data and Precipitation Threshold Selection

3. Results

3.1. Comparison with Operational Precipitation Products

3.2. Comparison with Baseline Deep Learning Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Channel | Band Range (μm) | Center Wavelength (μm) | Spatial Resolution (km) | Primary Observation Target |
|---|---|---|---|---|
| 1 | 0.45–0.49 | 0.47 | 1 | aerosol |
| 2 | 0.55–0.75 | 0.65 | 0.5 | fog, clouds |
| 3 | 0.75–0.90 | 0.825 | 1 | vegetation |
| 4 | 1.36–1.39 | 1.375 | 2 | cirrus |
| 5 | 1.58–1.64 | 1.61 | 2 | snow |
| 6 | 2.10–2.35 | 2.225 | 2 | cirrus, aerosol |
| 7 | 3.50–4.00 | 3.725 | 2 | fire detection |
| 8 | 3.50–4.00 | 3.725 | 4 | Earth's surface |
| 9 | 5.80–6.70 | 6.25 | 4 | high-layer water vapor |
| 10 | 6.90–7.30 | 7.1 | 4 | mid-layer water vapor |
| 11 | 8.00–9.00 | 8.5 | 4 | low-layer water vapor |
| 12 | 10.3–11.3 | 10.8 | 4 | cloud and surface temperature |
| 13 | 11.5–12.5 | 12.0 | 4 | cloud and surface temperature |
| 14 | 13.2–13.8 | 13.5 | 4 | cloud-top height |

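| Classification Metric | Formula | Range | Optimum |
|---|---|---|---|
| Probability of detection (POD) | $\mathrm{POD} = \frac{H}{H + M}$ | [0, 1] | 1 |
| False alarm ratio (FAR) | $\mathrm{FAR} = \frac{F}{H + F}$ | [0, 1] | 0 |
| Critical success index (CSI) | $\mathrm{CSI} = \frac{H}{H + M + F}$ | [0, 1] | 1 |

Here $H$, $M$ and $F$ are the numbers of hits (precipitation both estimated and observed), misses (observed but not estimated) and false alarms (estimated but not observed).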
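POD, FAR and CSI all come from one rain/no-rain contingency count. The sketch below counts hits, misses and false alarms from gridded estimates and observations and then forms the three scores. The function name `detection_scores` is hypothetical, and so is the default threshold of 0.1 mm/h; the threshold actually used in the paper is chosen in Section 2.3.4 and is not reproduced here. When a denominator is zero, the sketch returns NaN instead of raising an error.

```python
import numpy as np


def _ratio(num, den):
    """Safe division: NaN when the denominator is zero."""
    return float(num) / float(den) if den > 0 else float("nan")


def detection_scores(estimate, observed, threshold=0.1):
    """POD, FAR and CSI for rain/no-rain detection.

    A pixel counts as raining when its rate is >= threshold. The default of
    0.1 mm/h is a hypothetical placeholder, not the paper's threshold.
    """
    est_rain = np.asarray(estimate) >= threshold
    obs_rain = np.asarray(observed) >= threshold
    hits = np.sum(est_rain & obs_rain)           # H: estimated and observed
    misses = np.sum(~est_rain & obs_rain)        # M: observed only
    false_alarms = np.sum(est_rain & ~obs_rain)  # F: estimated only
    pod = _ratio(hits, hits + misses)
    far = _ratio(false_alarms, hits + false_alarms)
    csi = _ratio(hits, hits + misses + false_alarms)
    return pod, far, csi


# Toy rates in mm/h for six pixels
est = np.array([0.0, 0.5, 2.0, 0.0, 1.2, 0.05])
obs = np.array([0.0, 0.0, 3.0, 0.8, 1.0, 0.2])
print(detection_scores(est, obs))  # H=2, M=2, F=1, so POD=0.5, FAR=1/3, CSI=0.4
```

Because the scores depend only on the binary masks, changing the threshold is the only lever that trades POD against FAR for a given estimate.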

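| Verification Measure | Formula | Range | Optimum |
|---|---|---|---|
| Root mean square error (RMSE) | $\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}(S_i - O_i)^2}$ | [0, ∞) | 0 |
| Pearson correlation coefficient (CC) | $\mathrm{CC} = \frac{\sum_{i=1}^{N}(S_i - \bar{S})(O_i - \bar{O})}{\sqrt{\sum_{i=1}^{N}(S_i - \bar{S})^2}\sqrt{\sum_{i=1}^{N}(O_i - \bar{O})^2}}$ | [−1, 1] | 1 |

Here $S_i$ and $O_i$ are the estimated and observed precipitation at pixel $i$, $N$ is the number of pixels, and $\bar{S}$, $\bar{O}$ are their means.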
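RMSE and CC are computed on precipitation amounts rather than on rain/no-rain masks. The NumPy sketch below implements both directly from their definitions and checks CC against `np.corrcoef`. The function names are hypothetical. This section does not state how the reported "Average RMSE" is aggregated, for example as a mean of per-hour values, so the sketch stops at a single field.

```python
import numpy as np


def rmse(estimate, observed):
    """Root mean square error between estimated and observed rates."""
    s = np.asarray(estimate, dtype=float)
    o = np.asarray(observed, dtype=float)
    return float(np.sqrt(np.mean((s - o) ** 2)))


def pearson_cc(estimate, observed):
    """Pearson correlation coefficient between estimated and observed rates."""
    s = np.asarray(estimate, dtype=float)
    o = np.asarray(observed, dtype=float)
    d_est, d_obs = s - s.mean(), o - o.mean()  # anomalies about each mean
    return float(np.sum(d_est * d_obs)
                 / np.sqrt(np.sum(d_est ** 2) * np.sum(d_obs ** 2)))


est = [1.0, 2.0, 3.0, 4.0]
obs = [1.0, 2.0, 3.0, 6.0]
print(rmse(est, obs))  # sqrt(4 / 4) = 1.0
print(pearson_cc(est, obs), np.corrcoef(est, obs)[0, 1])  # the two agree
```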

| Metric | | FY4A-QPE Product | CMORPH Product | Attention-Unet Model |
|---|---|---|---|---|
| CSI | Value | 0.180 | 0.220 | 0.283 |
| | Performance gain | - | 22.22% | 57.22% |
| POD | Value | 0.222 | 0.311 | 0.473 |
| | Performance gain | - | 40.09% | 113.06% |
| FAR | Value | 0.420 | 0.569 | 0.559 |
| | Performance gain | - | −35.48% | −33.10% |
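In each table, the "Performance gain" rows are relative to the left-most column: FY4A-QPE in the comparison with operational products and Unet in the comparison with baseline models. This section does not state the convention, so the one below is inferred from the tabulated values. For metrics where higher is better (CSI, POD, CC), the gain is the relative increase over the reference. For metrics where lower is better (FAR, RMSE), it is the relative reduction, so a negative gain always means worse than the reference. The function `performance_gain` is hypothetical. It reproduces, for example, the CSI and FAR entries of this table and the Average RMSE entry of the operational-product comparison.

```python
def performance_gain(value, reference, higher_is_better=True):
    """Relative gain (%) of `value` over `reference`; positive means better.

    Convention inferred from the tables: relative increase for
    higher-is-better metrics, relative reduction for lower-is-better ones.
    """
    change = value - reference if higher_is_better else reference - value
    return 100.0 * change / reference


# CSI of Attention-Unet vs FY4A-QPE (higher is better)
print(round(performance_gain(0.283, 0.180), 2))         # 57.22
# FAR of CMORPH vs FY4A-QPE (lower is better)
print(round(performance_gain(0.569, 0.420, False), 2))  # -35.48
# Average RMSE of Attention-Unet vs FY4A-QPE (lower is better)
print(round(performance_gain(0.751, 0.978, False), 2))  # 23.21
```

The same function, with Unet as the reference, gives the baseline-model gains. For example, an FAR of 0.559 against 0.681 yields 17.91%.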

| Metric | | FY4A-QPE Product | CMORPH Product | Attention-Unet Model |
|---|---|---|---|---|
| Average RMSE | Value | 0.978 | 0.832 | 0.751 |
| | Performance gain | - | 14.92% | 23.21% |
| CC | Value | 0.270 | 0.268 | 0.370 |
| | Performance gain | - | 0.74% | 37.04% |

| Metric | | FY4A-QPE Product | CMORPH Product | Attention-Unet Model |
|---|---|---|---|---|
| CSI | Value | 0.420 | 0.161 | 0.570 |
| | Performance gain | - | −61.67% | 35.71% |
| POD | Value | 0.457 | 0.257 | 0.729 |
| | Performance gain | - | −43.76% | 59.52% |
| FAR | Value | 0.156 | 0.698 | 0.265 |
| | Performance gain | - | −347.44% | −69.87% |

| Metric | | FY4A-QPE Product | CMORPH Product | Attention-Unet Model |
|---|---|---|---|---|
| Average RMSE | Value | 15.976 | 3.855 | 2.519 |
| | Performance gain | - | 75.87% | 84.23% |
| CC | Value | 0.590 | −0.086 | 0.616 |
| | Performance gain | - | −85.42% | 4.41% |

| Metric | | Unet Model | PERSIANN-CNN Model | Attention-Unet Model |
|---|---|---|---|---|
| POD | Value | 0.606 | 0.476 | 0.473 |
| | Performance gain | - | −21.45% | −21.95% |
| FAR | Value | 0.681 | 0.600 | 0.559 |
| | Performance gain | - | 11.89% | 17.91% |
| CSI | Value | 0.267 | 0.274 | 0.283 |
| | Performance gain | - | 2.62% | 5.99% |

| Metric | | Unet Model | PERSIANN-CNN Model | Attention-Unet Model |
|---|---|---|---|---|
| Average RMSE | Value | 2.871 | 0.709 | 0.751 |
| | Performance gain | - | 75.30% | 73.84% |
| CC | Value | 0.357 | 0.368 | 0.370 |
| | Performance gain | - | 3.08% | 3.64% |

| Metric | | Unet Model | PERSIANN-CNN Model | Attention-Unet Model |
|---|---|---|---|---|
| POD | Value | 0.889 | 0.729 | 0.729 |
| | Performance gain | - | −18.00% | −18.00% |
| FAR | Value | 0.415 | 0.292 | 0.265 |
| | Performance gain | - | 29.64% | 36.14% |
| CSI | Value | 0.540 | 0.558 | 0.570 |
| | Performance gain | - | 3.33% | 5.56% |

| Metric | | Unet Model | PERSIANN-CNN Model | Attention-Unet Model |
|---|---|---|---|---|
| Average RMSE | Value | 13.274 | 2.908 | 2.519 |
| | Performance gain | - | 78.09% | 81.02% |
| CC | Value | 0.452 | 0.527 | 0.616 |
| | Performance gain | - | 16.59% | 36.28% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, Y.; Guan, J.; Zhang, F.; Wang, X.; Long, Z. Attention-Unet-Based Near-Real-Time Precipitation Estimation from Fengyun-4A Satellite Imageries. Remote Sens. 2022, 14, 2925. https://doi.org/10.3390/rs14122925