1. Introduction

Road lighting plays an important role in traffic safety during night driving. The International Commission on Illumination (CIE) reported that road lighting reduced nighttime accidents by 13–75% across 15 countries [1]. Christopher found that, where roads were lit, total reported crashes decreased by 28.95% and nighttime injury crashes by 39.21% [2]. Elvik et al. indicated that road lighting reduced the nighttime crash rate by 23% in Belgium, Britain and Sweden [3]. William found that improving overall uniformity up to approximately 0.4 lowered the night-to-day crash ratio for highways in New Zealand [4]. Good road lighting quality provides a well-lit environment and thus reduces traffic crashes at night. Consequently, evaluating road lighting quality is meaningful for improving the nighttime road lighting environment and urban traffic safety [5,6,7,8,9].

Road lighting quality reflects the photometric performance of road lights in satisfying drivers' visual needs at night. According to CIE [10] and European Committee for Standardization [11] documents, it is described by parameters such as average illuminance and overall uniformity.
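As a minimal sketch of the two parameters named above, the snippet below computes average illuminance and overall uniformity from a list of point readings. It assumes the common definition of overall uniformity as the minimum value divided by the average; the function names and the sample readings are illustrative and do not come from the cited documents.

```python
def average_illuminance(readings_lux):
    """Arithmetic mean of illuminance readings (lux)."""
    if not readings_lux:
        raise ValueError("at least one reading is required")
    return sum(readings_lux) / len(readings_lux)


def overall_uniformity(readings_lux):
    """Overall uniformity, assumed here to be minimum / average."""
    avg = average_illuminance(readings_lux)
    if avg <= 0:
        raise ValueError("average illuminance must be positive")
    return min(readings_lux) / avg


# Hypothetical readings along one road section (lux).
sample_readings = [12.0, 18.0, 30.0, 20.0]
sample_avg = average_illuminance(sample_readings)
sample_u0 = overall_uniformity(sample_readings)
```

A value of overall uniformity closer to 1 indicates a more evenly lit road surface.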

Field observation is the most popular way to evaluate road lighting quality. Generally, illuminance meters or imaging photometers are employed to measure road brightness at typical places to assess lighting quality. Liu et al. used different illuminance meters and handheld luminance meters to measure the illuminance in different orientations and the brightness of roads in Dalian, China [12]. Guo et al. measured the luminance of roads in Espoo, Finland using an imaging photometer, the LMK Mobile Advanced (IPLMA), which converted photos into luminance values, and calculated the average luminance with the software LMK 2000 [13]. Jägerbrand also applied the IPLMA to obtain road lighting parameters in Sweden [14]. Ekrias et al. used an imaging luminance photometer, the ProMetric 1400, to measure the luminance of roads in Finland and calculated the average luminance and uniformities [15]. However, static road lighting measurements cannot provide lighting information at large scales. Additionally, observation positions must be placed within roads, which may disrupt normal traffic flow [16,17,18].

Some researchers have carried out mobile measurements with imaging luminance devices or photometers mounted on vehicles. Greffier et al. used a High Dynamic Range (HDR) Imaging Luminance Measuring Device (ILMD) mounted on a car to measure road luminance [19]. Zhou et al. developed a mobile road lighting measurement system with a photometer mounted on a vehicle, which recorded illuminance and position data simultaneously and was successfully applied in Florida, USA [20]. However, mobile measurements are disturbed by many factors, such as the headlights and taillights of cars, the relative positions of observation vehicles and street lamps, and the vibration of observation angles.

Nighttime light remote sensing offers a unique way to monitor spatially continuous nocturnal lighting at a large scale. Nighttime light remote sensing data, such as DMSP/OLS, NPP/VIIRS and Luojia1-01, are widely used in mapping urbanization processes [21], estimating GDP, investigating poverty and monitoring disasters [22]. However, due to the coarse resolutions of these data (DMSP/OLS: 2.7 km; NPP/VIIRS: 0.75 km; Luojia1-01: 130 m), they cannot map the detailed light environment within roads and therefore cannot be applied to road lighting quality evaluation. As a result, few studies of road lighting environments have been based on nighttime light data, although some exist. Cheng et al. used JL1-3B nighttime light data to extract and classify street lights with a local maximum algorithm, achieving an accuracy above 89% [23]. Zheng et al. used the multispectral features of JL1-3B data to discriminate light source types with the ISODATA algorithm, achieving an overall accuracy of 83.86% [24].

An unmanned aerial vehicle (UAV) can provide high-resolution nighttime lighting images on a relatively large scale. Rabaza et al. used a digital camera onboard a UAV to capture orthoimages of the lit roads in Deifontes, Spain, which were calibrated by a known luminance relationship, and then calculated the average luminance or illuminance [25]. UAVs have the outstanding advantages of convenience, low cost and extremely high resolution. However, they are limited by security and privacy factors, and their short flight distance makes them unsuitable for large areas. The newly launched JL1-3B satellite provides nighttime images with a high spatial resolution of 0.92 m and multispectral data [24,26]. These features give JL1-3B the potential to evaluate road lighting quality at large scales.

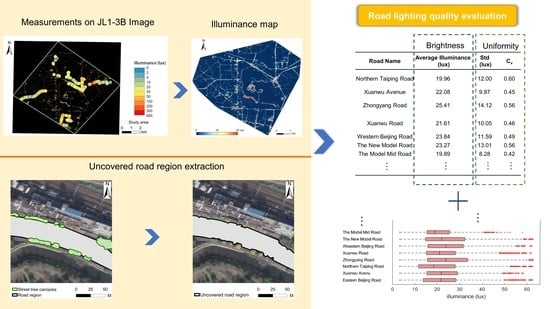

This study aimed to evaluate the road lighting quality in Nanjing, China, using high-resolution JL1-3B nighttime light remote sensing data combined with the in situ measured illuminance on typical roads. Several machine learning models were developed and compared to produce a fine resolution illuminance map with good accuracy. Then, the remotely sensed illuminance within roads was extracted to calculate indicators to evaluate road lighting quality.

5. Discussion

Traditional ground-based measurements are limited in measuring scope and usually disturb normal traffic flow. Nighttime remote sensing can observe spatially continuous lit environments at large scales, providing potential for road lighting quality evaluation. However, due to their relatively coarse resolutions, most nighttime remote sensing data cannot be used to map illuminance within roads. The new JL1-3B data have a high spatial resolution of 0.92 m that can capture the detailed lighting environment within roads, and their three channels can characterize light colors well [23,24]. Therefore, JL1-3B is a suitable data source for effectively quantifying road lighting quality. This paper explores a method for evaluating road lighting quality using illuminance estimated from JL1-3B data and in situ observations. The high-resolution illuminance map contains large amounts of illuminance data and can effectively depict the lighting environment within roads at the pixel scale. Furthermore, with a revisit period of 9 days, the proposed method is able to measure road lighting quality periodically, providing timely information about large-scale road lighting conditions for the government departments concerned. Compared with traditional road lighting quality measurements, the application of nighttime light remote sensing data in this study is superior in terms of safety, rapidity, measuring scope, measuring frequency and information content.

In addition to the radiance values of the three bands of JL1-3B, other color components, such as HIS, were also introduced for estimating illuminance. Six combinations of variables were compared to determine the optimal variable combination. The results (Table 4) showed that the optimal model involved all color components, indicating that considering more color components may improve the performance of the estimation model. Furthermore, the random forest model outperformed the multiple regression model. This may be because different street lamps emit light of different colors, and the tree-based random forest method, which can handle different conditions under different branches, is more suitable for this complex scenario.
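The color-component variables discussed above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: it uses the textbook RGB-to-HIS (hue, intensity, saturation) conversion, which may differ from the formula used in this study, and the function names `his_from_rgb` and `feature_vector` are hypothetical. Inputs are assumed to be positive band radiances.

```python
import math


def his_from_rgb(r, g, b):
    """Return (hue in radians, intensity, saturation) for one pixel.

    Textbook HIS conversion; assumed here, not confirmed by the source.
    """
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0
    saturation = 1.0 - min(r, g, b) / intensity
    numerator = 0.5 * ((r - g) + (r - b))
    # Clamp at zero to guard against tiny negative rounding errors.
    denominator = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if denominator == 0:
        hue = 0.0  # hue is undefined for grey pixels; 0 is used by convention
    else:
        theta = math.acos(max(-1.0, min(1.0, numerator / denominator)))
        hue = theta if b <= g else 2.0 * math.pi - theta
    return hue, intensity, saturation


def feature_vector(r, g, b):
    """Build [Hue, Saturation, lnI, lnR, lnG, lnB] for one pixel."""
    if min(r, g, b) <= 0:
        raise ValueError("radiances must be positive to take logarithms")
    hue, intensity, saturation = his_from_rgb(r, g, b)
    return [hue, saturation, math.log(intensity),
            math.log(r), math.log(g), math.log(b)]
```

A vector of this form, computed for each sampled pixel, could then be paired with the in situ illuminance and passed to any regression model, such as multiple linear regression or a random forest.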

There were also some limitations in this study. During data collection, deficiencies such as discrepancies between the ground and satellite observations and the low positioning accuracy of the GNSS instruments had negative impacts on the results. Satellite imaging was almost instantaneous, but the in situ observation lasted approximately 1 h. During the observation, the lit environment may have changed, resulting in inconsistencies between field observations and remote sensing data. Although we carefully checked the samples and removed some obviously problematic ones, some uncertainties caused by this inconsistency may remain. To overcome this problem, the planned sampling section can be divided into stable and unstable illumination zones. In stable zones, the lighting is steady, and therefore the illuminance need not be measured strictly synchronously with the satellite overpass. In unstable zones, the lighting is changeable, and therefore the illuminance should be recorded strictly synchronously with the satellite overpass. Assigning more observation teams also helps to reduce the inconsistency because they can collect abundant samples in less time. Moreover, many pixels in the remotely sensed illuminance map were also affected by these factors. How to identify and remove these problematic pixels remains a difficult problem for future work.

During the in situ observation, ordinary handheld GNSS instruments were used to record sample coordinates. However, the positioning accuracy of these instruments is generally within 10 m. Given the obvious spatial variation in the light environment, this accuracy level is not sufficient. RTK GNSS instruments with centimeter-level accuracy can effectively improve the spatial consistency between field observations and remote sensing data. Another important point is the observation angle of the satellite. Although JL1-3B can image at off-nadir angles within ±45°, the satellite zenith angle should not be too large, so as to eliminate the shading effect of buildings and trees and to reduce the influence of Rayleigh scattering in the atmosphere.
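To put the GNSS accuracy limitation discussed above in perspective, the short sketch below converts a positioning error into a number of 0.92 m image pixels. The 10 m figure and the pixel size come from the text; the 0.02 m value is only an assumed example of centimeter-level RTK accuracy, and the function name is hypothetical.

```python
PIXEL_SIZE_M = 0.92  # JL1-3B spatial resolution stated in the text


def error_in_pixels(position_error_m, pixel_size_m=PIXEL_SIZE_M):
    """Express a horizontal positioning error as a number of image pixels."""
    if pixel_size_m <= 0:
        raise ValueError("pixel size must be positive")
    return position_error_m / pixel_size_m


handheld_px = error_in_pixels(10.0)  # handheld GNSS, up to about 10 m
rtk_px = error_in_pixels(0.02)       # assumed 2 cm RTK accuracy
```

Under these assumptions, a handheld fix can be displaced by roughly eleven pixels from the true sample location, whereas an RTK fix stays well within a single pixel.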

6. Conclusions

This study proposed a new space-borne method for evaluating road lighting quality based on JL1-3B nighttime light remote sensing data. First, synchronous field observations were carried out to measure illuminance in Nanjing, China. After a series of preprocessing steps on the JL1-3B image, the in situ observed illuminance and the radiance of JL1-3B were combined to map the high-resolution surface illuminance based on the close relationship between them. Two models (multiple linear regression and random forest) with six independent variable combinations were employed and compared to develop the optimal model for illuminance estimation. Results showed that the random forest model with Hue, Saturation, lnI, lnR, lnG and lnB as the independent variables achieved the best performance (R² = 0.75, RMSE = 9.79 lux). The optimal model was then applied to the preprocessed JL1-3B image to derive the surface illuminance map. The average, standard deviation and Cv of the illuminance within roads were calculated to assess their lighting quality.
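The three road-level indicators named above can be sketched as follows, assuming that Cv denotes the coefficient of variation (standard deviation divided by mean) and that the population standard deviation is used; the source does not specify either choice. The function name and the input values are illustrative.

```python
import statistics


def road_lighting_indicators(illuminance_lux):
    """Return mean, standard deviation and Cv of road-pixel illuminance."""
    values = list(illuminance_lux)
    if not values:
        raise ValueError("at least one pixel value is required")
    mean = statistics.mean(values)
    sd = statistics.pstdev(values)  # population SD (an assumption)
    cv = sd / mean if mean > 0 else float("nan")
    return {"mean": mean, "sd": sd, "cv": cv}


# Hypothetical estimated illuminance (lux) for pixels within one road.
indicators = road_lighting_indicators([10.0, 20.0, 30.0])
```

A lower Cv indicates more evenly distributed illuminance along the road, while the mean reflects the overall lighting level.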

This is a preliminary study that develops a technical framework for evaluating road lighting quality using JL1-3B nighttime light remote sensing data. JL1-3B data can be ordered from Changguang Co., Ltd., Changchun, China, and the instruments used for ground-based observation were inexpensive TES-1399R illuminance meters. The devices and methods used in this study have the advantages of low cost, simplicity and reliability, providing a reference for road lighting quality evaluation in other regions.