Energy-Based Adversarial Example Detection for SAR Images

Abstract

1. Introduction

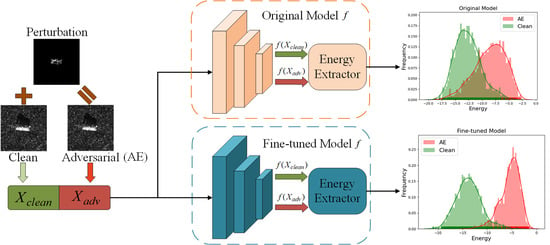

- We design a novel energy feature space for SAR adversarial detection, in which the adversarial degree of a sample is positively correlated with its energy.

- We propose an energy-based detector (ED) that requires no modification to the pretrained model. Compared with STD, another detector that leaves the model unmodified, ED shows superior performance.

- Building on ED, we propose to fine-tune the pretrained model with a hinge energy loss term to further shape the output energy surface. Experiments show that, compared with LID and MD, the proposed fine-tuned energy-based detector (FED) improves detection performance against SAR AEs, especially those generated under regional constraints.

2. Preliminaries

2.1. Adversarial Attack

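- FGSM: The fast gradient sign method (FGSM) [3] perturbs the input along the sign of the gradient of the loss J of model f with respect to the input, so that every pixel changes by the same perturbation unit ε (the smallest pixel depth):

$$x^{adv} = x + \epsilon \cdot \operatorname{sign}\left(\nabla_x J(f(x), y)\right)$$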

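- BIM: The basic iterative method (BIM) [4] extends FGSM to an iterative version that takes several small steps of size α along the sign of the gradient of the loss J and, after each step, clips the AE back into the ε-neighborhood of the clean input x:

$$x_0^{adv} = x, \qquad x_{N+1}^{adv} = \operatorname{Clip}_{x,\epsilon}\left\{x_N^{adv} + \alpha \cdot \operatorname{sign}\left(\nabla_x J(f(x_N^{adv}), y)\right)\right\}$$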

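- DeepFool: Moosavi-Dezfooli et al. [5] iteratively add perturbations until the AE crosses the decision boundary, which is assumed to be locally linear. The perturbation in iteration i is calculated as

$$r_i = \frac{\left|f'_{\hat{l}}(x_i)\right|}{\left\|w'_{\hat{l}}\right\|_2^2}\, w'_{\hat{l}}, \qquad \hat{l} = \arg\min_{k \neq \hat{k}(x_0)} \frac{\left|f'_k(x_i)\right|}{\left\|w'_k\right\|_2}$$

where $\hat{k}(x_0)$ is the label originally predicted for the clean input, $f'_k(x_i) = f_k(x_i) - f_{\hat{k}(x_0)}(x_i)$, and $w'_k = \nabla f_k(x_i) - \nabla f_{\hat{k}(x_0)}(x_i)$.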

- CW: To avoid clipping the AE to [0, 1] at every iteration, Carlini and Wagner [6] introduced a new variable w and expressed the AE as $x^{adv} = \frac{1}{2}\left(\tanh(w) + 1\right)$, which smoothly maps every pixel of the AE into [0, 1]. The perturbation is expressed as:

$$\delta = \frac{1}{2}\left(\tanh(w) + 1\right) - x$$
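To make the FGSM and BIM update rules concrete, here is a minimal sketch on a toy binary logistic-regression model, chosen only because its input gradient has the closed form (σ(w·x + b) − y)·w. The model, the helper names (`input_grad`, `fgsm`, `bim`), and the assumption that pixels lie in [0, 1] are illustrative and not part of the original experiments, which attack deep CNNs.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def input_grad(x, y, w, b):
    """Gradient of the binary cross-entropy loss J w.r.t. the input x for the
    toy model p(y=1|x) = sigmoid(w.x + b); it equals (sigmoid(z) - y) * w."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return [(sigmoid(z) - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return max(lo, min(hi, v))


def fgsm(x, y, w, b, eps):
    """Single step of size eps along the sign of the input gradient (FGSM)."""
    g = input_grad(x, y, w, b)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]


def bim(x, y, w, b, eps, alpha, steps):
    """Iterative FGSM (BIM): steps of size alpha, each followed by clipping to
    the L-infinity ball of radius eps around x and to the pixel range [0, 1]."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_grad(x_adv, y, w, b)
        x_adv = [
            clip(clip(xa + alpha * sign(gi), xi - eps, xi + eps), 0.0, 1.0)
            for xa, xi, gi in zip(x_adv, x, g)
        ]
    return x_adv
```

For example, with w = [2.0, -1.0], b = 0, and a clean input [0.6, 0.4] of class 1, `fgsm(x, 1, w, 0.0, 0.3)` moves the input to about [0.3, 0.7], where w·x + b < 0 and the predicted class flips. BIM reaches the same point in small steps but never leaves the ε-ball, however many iterations it runs.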

2.2. Adversarial Detection

- Local intrinsic dimensionality detector (LID): Ma et al. [14] hypothesized that AEs lie in high-dimensional regions of the feature manifold and therefore have higher local intrinsic dimensionality (LID) values than clean samples. Given a test sample x, the LID method randomly draws samples from the training set and estimates the LID value of x from its k nearest neighbors among them:

$$\widehat{\mathrm{LID}}(x) = -\left(\frac{1}{k}\sum_{i=1}^{k}\log\frac{r_i(x)}{r_k(x)}\right)^{-1}$$

where $r_i(x)$ represents the featurewise Euclidean distance from sample x to its i-th nearest neighbor.

- Mahalanobis detector (MD): Lee et al. [15] adopted the featurewise Mahalanobis distance to measure the adversarial degree of a test sample x, under the assumption that clean samples follow a class-conditional Gaussian distribution in the feature space while AEs do not. With the feature vector of sample x before the classification layer denoted as $h(x)$, the metric function of the MD method is calculated as

$$M(x) = \left(h(x) - \mu_k\right)^{\top} \Sigma^{-1} \left(h(x) - \mu_k\right)$$

where $\mu_k$ is the mean training-set feature vector of class k, the label predicted for x, and Σ is the feature covariance matrix.

- Soft threshold detector (STD): Chen et al. [16] found that clean samples and AEs differ in classification confidence, with lower confidence corresponding to a higher adversarial degree. Based on this finding, the authors built a new dataset consisting only of classification confidences and binarized labels and trained a logistic regression classifier on it to obtain the best confidence threshold for each class. The metric function M of the STD method can be expressed as

$$M(x) = \max_{k}\, p(y = k \mid x)$$

which is compared with the threshold of the predicted class.
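The LID and MD scores can be sketched in plain Python as follows, assuming features are given as lists of floats. `lid_mle` implements the maximum-likelihood LID estimator of Ma et al. [14]; `fit_tied_gaussian` and `mahalanobis_score` follow the class-conditional Gaussian model with one covariance matrix shared by all classes. All function names are hypothetical, and a practical implementation would use an array library and the layer features of the trained network.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def lid_mle(x_feat, batch_feats, k):
    """MLE of local intrinsic dimensionality from the distances between x and
    its k nearest neighbours in a randomly drawn batch of training features.
    Needs k >= 2 and neighbours that are not all equidistant from x."""
    dists = sorted(euclidean(x_feat, f) for f in batch_feats)
    dists = [d for d in dists if d > 0][:k]  # skip x itself if it is in the batch
    r_k = dists[-1]  # distance to the k-th (farthest) neighbour
    mean_log = sum(math.log(d / r_k) for d in dists) / len(dists)
    return -1.0 / mean_log


def fit_tied_gaussian(feats, labels):
    """Per-class mean feature vectors and one covariance matrix shared by all
    classes, estimated from training features."""
    dim, n = len(feats[0]), len(feats)
    means = {}
    for c in set(labels):
        members = [f for f, y in zip(feats, labels) if y == c]
        means[c] = [sum(col) / len(members) for col in zip(*members)]
    cov = [[0.0] * dim for _ in range(dim)]
    for f, y in zip(feats, labels):
        d = [fi - mi for fi, mi in zip(f, means[y])]
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += d[i] * d[j] / n
    return means, cov


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def mahalanobis_score(h, mu, cov):
    """(h - mu)^T cov^{-1} (h - mu); larger values suggest a more adversarial x."""
    d = [hi - mi for hi, mi in zip(h, mu)]
    return sum(di * si for di, si in zip(d, solve(cov, d)))
```

Solving a linear system instead of inverting the covariance matrix explicitly is the usual numerically safer choice; in the MD setting, `mu` would be `means[pred]` for the label `pred` predicted for x.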

2.3. Problem of Detecting SAR AEs under Regional Constraint

- Impact on the LID and MD: The LID and MD detect AEs by examining the intermediate features of the test samples. However, as the regional constraint tightened, the detection performance of the LID and MD dropped significantly, with the AUROC falling by nearly 20% in the worst case. This reveals that SAR AEs under the regional constraint are not only less visually perceptible but also differ less from clean samples in their intermediate features.

- Impact on the STD: The STD method detects AEs by checking the output confidence. The regional constraint had relatively little impact on the output layer of the model. However, since the STD method still relies on the conditional confidence $p(y \mid x)$, it did not perform as well as the LID and MD, despite its computational efficiency.

3. Proposed Method

3.1. Interpretability of

3.2. Energy-Based Detector on Pretrained Model

Algorithm 1: Energy-based adversarial detector (ED).
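A minimal sketch of an energy-based detector in this spirit follows. It assumes that ED scores a sample with the free energy E(x) = −T·log Σ_k exp(f_k(x)/T) of the classifier logits f(x), as in the energy-based out-of-distribution detector of Liu et al. [21], and flags the sample as adversarial when its energy exceeds a threshold, consistent with the stated positive relation between energy and adversarial degree. The temperature T, the helper names, and the calibration rule in `fit_threshold` (keep about 95% of clean validation samples below the threshold) are illustrative assumptions.

```python
import math


def energy(logits, T=1.0):
    """Free energy E(x) = -T * log(sum_k exp(f_k(x) / T)) of the logits f(x),
    computed with the log-sum-exp trick for numerical stability."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    return -T * (m + math.log(sum(math.exp(s - m) for s in scaled)))


def fit_threshold(clean_energies, tpr=0.95):
    """Hypothetical calibration: an energy value that keeps at least a
    fraction `tpr` of clean validation samples at or below the threshold."""
    ranked = sorted(clean_energies)
    idx = min(len(ranked) - 1, math.ceil(tpr * len(ranked)) - 1)
    return ranked[idx]


def is_adversarial(logits, threshold, T=1.0):
    """Flag the sample as adversarial when its energy exceeds the threshold
    (higher energy is assumed to mean a higher adversarial degree)."""
    return energy(logits, T) > threshold
```

A confident prediction such as logits [10, 0, 0] has an energy close to −10, whereas the nearly flat logits [0.1, 0, 0] give about −1.13, so only the latter is flagged against a threshold of −5. Because the score needs only the logits, this style of detector leaves the pretrained model untouched.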

3.3. Energy-Based Detector on Fine-Tuned Model

Algorithm 2: Fine-tuned energy-based detector (FED).
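The fine-tuning objective can be sketched in the same hedged way. The sketch assumes that FED adds a hinge energy term to the cross-entropy loss, penalizing clean samples whose energy exceeds a margin m_in and adversarial samples whose energy falls below a margin m_out, loosely following the energy-bounded loss of Liu et al. [21] (which squares the hinges). The margins, the weight `lam`, and the use of generated AEs as the high-energy set are assumptions. The code only evaluates the loss value; actual fine-tuning would compute it in an autodiff framework and back-propagate through the network.

```python
import math


def energy(logits, T=1.0):
    """Free energy -T * logsumexp(f(x) / T) of the logits f(x)."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    return -T * (m + math.log(sum(math.exp(s - m) for s in scaled)))


def cross_entropy(logits, label):
    """Softmax cross-entropy of one sample, via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


def hinge_energy_term(clean_logits, adv_logits, m_in, m_out):
    """Hinge penalties pushing clean energies below m_in and adversarial
    energies above m_out (hypothetical margins, m_in < m_out)."""
    clean = sum(max(0.0, energy(z) - m_in) for z in clean_logits) / len(clean_logits)
    adv = sum(max(0.0, m_out - energy(z)) for z in adv_logits) / len(adv_logits)
    return clean + adv


def fed_loss(clean_logits, labels, adv_logits, m_in, m_out, lam=0.1):
    """Cross-entropy on clean samples plus a weighted hinge energy term."""
    ce = sum(cross_entropy(z, y) for z, y in zip(clean_logits, labels)) / len(labels)
    return ce + lam * hinge_energy_term(clean_logits, adv_logits, m_in, m_out)
```

Once every clean energy is below m_in and every adversarial energy is above m_out, the hinge term vanishes and only the classification loss remains, so the term widens the energy gap without pushing it further than the margins require.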

4. Results

4.1. Dataset

4.2. Experiment Setups

4.3. Evaluation Metric

- AUROC: The AUROC is the area under the receiver operating characteristic (ROC) curve. It equals 0.5 for a detector that guesses at random and 1 for a perfect detector. Because it aggregates performance over all thresholds, the AUROC reflects the overall detection capability of a method independently of any particular threshold.

- TNR@95%TPR: In practical applications, normal samples are in the majority and AEs are in the minority, so the detection rate for AEs (TNR) should be improved while the detection rate for normal samples (TPR) is maintained; the definitions are given in Table 1. Hence, we use the true negative rate (TNR) at a 95% true positive rate (TPR) to measure the performance of the detection methods.
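Both metrics can be computed from per-sample detection scores. The sketch below assumes the labeling of Table 1 (clean and noisy samples are positives, AEs are negatives) and a score that is higher for samples judged clean, for example the negative energy. `auroc` uses the pairwise (Mann-Whitney) form of the area under the ROC curve, and `tnr_at_tpr` picks the largest threshold that still accepts at least 95% of the positives; both function names are hypothetical.

```python
import math


def auroc(pos_scores, neg_scores):
    """Probability that a random positive (clean) outranks a random negative
    (AE), counting ties as one half; this equals the area under the ROC curve."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos_scores) * len(neg_scores))


def tnr_at_tpr(pos_scores, neg_scores, tpr=0.95):
    """TNR at the largest threshold t for which at least `tpr` of the positives
    satisfy score >= t; samples scoring below t are declared adversarial."""
    ranked = sorted(pos_scores, reverse=True)
    k = math.ceil(tpr * len(ranked))  # number of positives that must be accepted
    t = ranked[k - 1]
    return sum(1 for n in neg_scores if n < t) / len(neg_scores)
```

The pairwise AUROC costs O(PN) time for P positives and N negatives, which is adequate for a sketch; sorting-based implementations such as scikit-learn's `roc_auc_score` scale better to large test sets.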

4.4. Influence of Regional Constraint on Attack Performance

4.5. Detection Performance

4.6. Sensitivity Analysis

4.7. Visualization of Energy Distribution

4.8. Detection against AEs with Variable Perturbation Scales

4.9. Robustness to Adaptive Attacks

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Zhu, X.X.; Montazeri, S.; Ali, M.; Hua, Y.; Wang, Y.; Mou, L.; Shi, Y.; Xu, F.; Bamler, R. Deep learning meets SAR: Concepts, models, pitfalls, and perspectives. IEEE Geosci. Remote Sens. Mag. 2021, 9, 143–172.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: London, UK, 2016; pp. 99–112.

- Moosavi-Dezfooli, S.M.; Fawzi, A.; Frossard, P. DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2574–2582.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

- Li, H.; Huang, H.; Chen, L.; Peng, J.; Huang, H.; Cui, Z.; Mei, X.; Wu, G. Adversarial examples for CNN-based SAR image classification: An experience study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1333–1347.

- Huang, T.; Zhang, Q.; Liu, J.; Hou, R.; Wang, X.; Li, Y. Adversarial attacks on deep-learning-based SAR image target recognition. J. Netw. Comput. Appl. 2020, 162, 102632.

- Du, C.; Huo, C.; Zhang, L.; Chen, B.; Yuan, Y. Fast C&W: A Fast Adversarial Attack Algorithm to Fool SAR Target Recognition with Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2021, 19, 4010005.

- Peng, B.; Peng, B.; Zhou, J.; Xia, J.; Liu, L. Speckle Variant Attack: Towards Transferable Adversarial Attack to SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2022, 19, 4509805.

- Shafahi, A.; Najibi, M.; Ghiasi, M.A.; Xu, Z.; Dickerson, J.; Studer, C.; Davis, L.S.; Taylor, G.; Goldstein, T. Adversarial training for free! Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper/2019/file/7503cfacd12053d309b6bed5c89de212-Paper.pdf (accessed on 1 August 2022).

- Zhang, H.; Yu, Y.; Jiao, J.; Xing, E.; El Ghaoui, L.; Jordan, M. Theoretically principled trade-off between robustness and accuracy. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 7472–7482.

- Xu, Y.; Sun, H.; Chen, J.; Lei, L.; Ji, K.; Kuang, G. Adversarial Self-Supervised Learning for Robust SAR Target Recognition. Remote Sens. 2021, 13, 4158.

- Ma, X.; Li, B.; Wang, Y.; Erfani, S.M.; Wijewickrema, S.; Schoenebeck, G.; Song, D.; Houle, M.E.; Bailey, J. Characterizing adversarial subspaces using local intrinsic dimensionality. In Proceedings of the 6th International Conference on Learning Representations, ICLR, Vancouver, BC, Canada, 30 April–3 May 2018.

- Lee, K.; Lee, K.; Lee, H.; Shin, J. A simple unified framework for detecting out-of-distribution samples and adversarial attacks. Adv. Neural Inf. Process. Syst. 2018, 31. Available online: https://proceedings.neurips.cc/paper/2018/file/abdeb6f575ac5c6676b747bca8d09cc2-Paper.pdf (accessed on 1 August 2022).

- Chen, L.; Xiao, J.; Zou, P.; Li, H. Lie to me: A soft threshold defense method for adversarial examples of remote sensing images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 8016905.

- Du, M.; Bi, D.; Du, M.; Wu, Z.L.; Xu, X. Local Aggregative Attack on SAR Image Classification Models. Authorea Prepr. 2022.

- Dang, X.; Yan, H.; Hu, L.; Feng, X.; Huo, C.; Yin, H. SAR Image Adversarial Samples Generation Based on Parametric Model. In Proceedings of the 2021 International Conference on Microwave and Millimeter Wave Technology (ICMMT), Nanjing, China, 23–26 May 2021; pp. 1–3.

- LeCun, Y.; Chopra, S.; Hadsell, R.; Ranzato, M.; Huang, F. A tutorial on energy-based learning. In Predicting Structured Data; MIT Press: Cambridge, MA, USA, 2006; Volume 1.

- Grathwohl, W.; Wang, K.-C.; Jacobsen, J.-H.; Duvenaud, D.; Norouzi, M.; Swersky, K. Your classifier is secretly an energy based model and you should treat it like one. In Proceedings of the 8th International Conference on Learning Representations, ICLR, Addis Ababa, Ethiopia, 26–30 April 2020.

- Liu, W.; Wang, X.; Owens, J.; Li, Y. Energy-based out-of-distribution detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21464–21475.

- Brown, T.B.; Mané, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial patch. arXiv 2017, arXiv:1712.09665.

- Rao, S.; Stutz, D.; Schiele, B. Adversarial training against location-optimized adversarial patches. In European Conference on Computer Vision, Proceedings of the ECCV 2020: Computer Vision—ECCV 2020 Workshops; Springer: Cham, Switzerland, 2020; pp. 429–448.

- Lu, M.; Li, Q.; Chen, L.; Li, H. Scale-adaptive adversarial patch attack for remote sensing image aircraft detection. Remote Sens. 2021, 13, 4078.

- Ross, T.D.; Worrell, S.W.; Velten, V.J.; Mossing, J.C.; Bryant, M.L. Standard SAR ATR evaluation experiments using the MSTAR public release data set. In Proceedings of the Algorithms for Synthetic Aperture Radar Imagery V. International Society for Optics and Photonics, Orlando, FL, USA, 13–17 April 1998; Volume 3370, pp. 566–573.

- Malmgren-Hansen, D.; Nobel-Jørgensen, M. Convolutional neural networks for SAR image segmentation. In Proceedings of the 2015 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Abu Dhabi, United Arab Emirates, 7–10 December 2015; pp. 231–236.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Chen, L.; Xu, Z.; Li, Q.; Peng, J.; Wang, S.; Li, H. An empirical study of adversarial examples on remote sensing image scene classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 7419–7433.

- Du, C.; Zhang, L. Adversarial Attack for SAR Target Recognition Based on UNet-Generative Adversarial Network. Remote Sens. 2021, 13, 4358.

Labels: Clean & Noisy: 1; Adversarial: 0

| | | Ground Truth: 1 | Ground Truth: 0 |
|---|---|---|---|
| Prediction | 1 | True Positive (TP) | False Positive (FP) |
| Prediction | 0 | False Negative (FN) | True Negative (TN) |
| Indicator | | | |

| Network | Attack | Global | R1 | R2 | R3 |
|---|---|---|---|---|---|
| ResNet34 | FGSM | 96.0 | 65.9 | 28.2 | 3.0 |
| ResNet34 | BIM | 97.3 | 83.6 | 39.1 | 3.4 |
| ResNet34 | CW | 100 | 100 | 97.0 | 51.9 |
| ResNet34 | DeepFool | 98.9 | 73.1 | 56.29 | 9.6 |
| DenseNet121 | FGSM | 93.1 | 97.2 | 70.0 | 20.6 |
| DenseNet121 | BIM | 100 | 100 | 95.3 | 30.8 |
| DenseNet121 | CW | 99.9 | 99.9 | 99.8 | 87.7 |
| DenseNet121 | DeepFool | 99.9 | 95.9 | 55.4 | 4.4 |
| VGG16 | FGSM | 97.8 | 71.4 | 35.2 | 4.8 |
| VGG16 | BIM | 99.1 | 84.0 | 46.6 | 5.9 |
| VGG16 | CW | 100 | 100 | 96.6 | 31.6 |
| VGG16 | DeepFool | 96.3 | 64.7 | 33.1 | 0.7 |

Unmodified detectors: STD, ED. Modified detectors: LID, MD, FED.

| Network | Attack | Region | STD | ED | LID | MD | FED |
|---|---|---|---|---|---|---|---|
| DenseNet121 | FGSM | Global | 73.2 | 74.6 | 98.3 | 99.5 | 99.6 |
| DenseNet121 | FGSM | R1 | 69.7 | 70.2 | 91.1 | 88.8 | 98.8 |
| DenseNet121 | FGSM | R2 | 76.4 | 78.3 | 79.7 | 74.3 | 96.8 |
| DenseNet121 | FGSM | R3 | 89.6 | 91.9 | 64.3 | 53.1 | 96.7 |
| DenseNet121 | BIM | Global | 89.6 | 73.0 | 99.3 | 99.4 | 98.9 |
| DenseNet121 | BIM | R1 | 93.1 | 82.5 | 99.8 | 99.2 | 97.3 |
| DenseNet121 | BIM | R2 | 55.7 | 64.7 | 96.8 | 94.9 | 98.2 |
| DenseNet121 | BIM | R3 | 52.6 | 72.3 | 82.2 | 88.3 | 89.6 |
| DenseNet121 | CW | Global | 74.7 | 63.8 | 99.9 | 99.9 | 99.9 |
| DenseNet121 | CW | R1 | 62.3 | 70.4 | 98.4 | 99.2 | 99.5 |
| DenseNet121 | CW | R2 | 66.5 | 80.6 | 95.9 | 97.9 | 98.6 |
| DenseNet121 | CW | R3 | 69.0 | 81.6 | 89.1 | 90.9 | 98.2 |
| DenseNet121 | DeepFool | Global | 95.2 | 97.5 | 78.1 | 91.3 | 97.7 |
| DenseNet121 | DeepFool | R1 | 94.5 | 96.6 | 62.8 | 67.6 | 99.7 |
| DenseNet121 | DeepFool | R2 | 92.7 | 95.4 | 76.8 | 97.5 | 98.5 |
| DenseNet121 | DeepFool | R3 | / | / | / | / | / |
| ResNet34 | FGSM | Global | 60.5 | 82.7 | 94.5 | 95.5 | 97.5 |
| ResNet34 | FGSM | R1 | 65.5 | 86.8 | 91.5 | 89.7 | 97.5 |
| ResNet34 | FGSM | R2 | 67.3 | 87.2 | 81.3 | 87.8 | 95.8 |
| ResNet34 | FGSM | R3 | / | / | / | / | / |
| ResNet34 | BIM | Global | 60.2 | 60.8 | 96.2 | 93.8 | 95.3 |
| ResNet34 | BIM | R1 | 82.2 | 62.9 | 98.3 | 81.7 | 93.8 |
| ResNet34 | BIM | R2 | 66.0 | 87.5 | 84.1 | 87.3 | 96.6 |
| ResNet34 | BIM | R3 | / | / | / | / | / |
| ResNet34 | CW | Global | 66.3 | 62.1 | 98.0 | 95.9 | 96.8 |
| ResNet34 | CW | R1 | 60.5 | 78.4 | 96.3 | 88.7 | 97.8 |
| ResNet34 | CW | R2 | 65.5 | 87.1 | 93.4 | 91.8 | 98.3 |
| ResNet34 | CW | R3 | 68.4 | 87.8 | 86.6 | 90.6 | 98.1 |
| ResNet34 | DeepFool | Global | 60.0 | 97.6 | 81.8 | 98.2 | 98.8 |
| ResNet34 | DeepFool | R1 | 65.4 | 98.3 | 78.1 | 98.5 | 98.8 |
| ResNet34 | DeepFool | R2 | 62.2 | 98.2 | 74.2 | 98.5 | 98.7 |
| ResNet34 | DeepFool | R3 | / | / | / | / | / |
| VGG16 | FGSM | Global | 58.1 | 59.2 | 83.7 | 91.5 | 96.7 |
| VGG16 | FGSM | R1 | 63.6 | 65.9 | 92.1 | 79.4 | 95.2 |
| VGG16 | FGSM | R2 | 64.2 | 63.5 | 78.6 | 70.7 | 89.9 |
| VGG16 | FGSM | R3 | / | / | / | / | / |
| VGG16 | BIM | Global | 56.5 | 57.6 | 89.8 | 74.0 | 95.3 |
| VGG16 | BIM | R1 | 60.2 | 55.8 | 94.2 | 77.4 | 95.0 |
| VGG16 | BIM | R2 | 65.5 | 62.6 | 84.7 | 72.9 | 93.6 |
| VGG16 | BIM | R3 | / | / | / | / | / |
| VGG16 | CW | Global | 65.2 | 78.9 | 99.7 | 99.5 | 99.9 |
| VGG16 | CW | R1 | 60.0 | 61.9 | 97.4 | 84.1 | 97.0 |
| VGG16 | CW | R2 | 70.9 | 71.5 | 92.8 | 87.3 | 97.3 |
| VGG16 | CW | R3 | 68.3 | 71.7 | 80.0 | 76.1 | 90.9 |
| VGG16 | DeepFool | Global | 86.8 | 88.4 | 53.9 | 63.8 | 97.3 |
| VGG16 | DeepFool | R1 | 71.1 | 86.0 | 69.4 | 75.6 | 96.4 |
| VGG16 | DeepFool | R2 | 76.4 | 82.2 | 67.5 | 75.0 | 93.4 |
| VGG16 | DeepFool | R3 | / | / | / | / | / |

Unmodified detectors: STD, ED. Modified detectors: LID, MD, FED.

| Network | Attack | Region | STD | ED | LID | MD | FED |
|---|---|---|---|---|---|---|---|
| DenseNet121 | FGSM | Global | 37.4 | 45.7 | 95.7 | 99.6 | 98.9 |
| DenseNet121 | FGSM | R1 | 23.0 | 37.9 | 53.0 | 31.5 | 94.6 |
| DenseNet121 | FGSM | R2 | 26.2 | 42.9 | 16.4 | 3.2 | 85.4 |
| DenseNet121 | FGSM | R3 | 39.1 | 61.8 | 4.1 | 0.35 | 80.6 |
| DenseNet121 | BIM | Global | 74.2 | 46.1 | 98.1 | 98.4 | 96.4 |
| DenseNet121 | BIM | R1 | 84.1 | 63.3 | 99.6 | 97.2 | 91.7 |
| DenseNet121 | BIM | R2 | 29.3 | 16.1 | 86.4 | 77.6 | 93.5 |
| DenseNet121 | BIM | R3 | 8.1 | 15.3 | 37.8 | 33.6 | 56.3 |
| DenseNet121 | CW | Global | 61.1 | 48.4 | 99.5 | 99.6 | 99.6 |
| DenseNet121 | CW | R1 | 18.7 | 30.5 | 92.3 | 98.6 | 98.8 |
| DenseNet121 | CW | R2 | 34.1 | 51.3 | 79.8 | 90.4 | 94.2 |
| DenseNet121 | CW | R3 | 33.5 | 48.4 | 66.8 | 77.2 | 91.7 |
| DenseNet121 | DeepFool | Global | 69.1 | 85.8 | 36.1 | 19.2 | 88.7 |
| DenseNet121 | DeepFool | R1 | 57.2 | 77.8 | 5.2 | 0.2 | 99.7 |
| DenseNet121 | DeepFool | R2 | 50.3 | 63.0 | 26.2 | 91.6 | 96.9 |
| DenseNet121 | DeepFool | R3 | / | / | / | / | / |
| ResNet34 | FGSM | Global | 30.0 | 50.3 | 89.1 | 85.5 | 89.3 |
| ResNet34 | FGSM | R1 | 24.1 | 48.0 | 80.6 | 80.7 | 88.4 |
| ResNet34 | FGSM | R2 | 19.6 | 44.6 | 61.8 | 51.9 | 78.5 |
| ResNet34 | FGSM | R3 | / | / | / | / | / |
| ResNet34 | BIM | Global | 44.8 | 22.3 | 83.8 | 63.0 | 76.5 |
| ResNet34 | BIM | R1 | 65.8 | 25.0 | 93.0 | 44.6 | 73.4 |
| ResNet34 | BIM | R2 | 11.9 | 35.2 | 42.5 | 39.0 | 79.4 |
| ResNet34 | BIM | R3 | / | / | / | / | / |
| ResNet34 | CW | Global | 40.7 | 18.9 | 90.2 | 72.7 | 85.9 |
| ResNet34 | CW | R1 | 14.0 | 32.5 | 80.4 | 64.6 | 90.9 |
| ResNet34 | CW | R2 | 11.5 | 51.6 | 69.8 | 72.4 | 92.8 |
| ResNet34 | CW | R3 | 16.3 | 43.6 | 51.8 | 51.2 | 90.5 |
| ResNet34 | DeepFool | Global | 62.5 | 96.3 | 59.8 | 94.7 | 98.9 |
| ResNet34 | DeepFool | R1 | 65.4 | 95.4 | 49.7 | 94.9 | 98.6 |
| ResNet34 | DeepFool | R2 | 62.2 | 95.0 | 46.3 | 96.3 | 97.3 |
| ResNet34 | DeepFool | R3 | / | / | / | / | / |
| VGG16 | FGSM | Global | 12.6 | 25.4 | 53.4 | 73.1 | 86.1 |
| VGG16 | FGSM | R1 | 18.7 | 22.0 | 66.7 | 27.6 | 78.4 |
| VGG16 | FGSM | R2 | 19.4 | 15.2 | 34.9 | 17.0 | 55.8 |
| VGG16 | FGSM | R3 | / | / | / | / | / |
| VGG16 | BIM | Global | 12.1 | 13.7 | 62.2 | 42.2 | 76.5 |
| VGG16 | BIM | R1 | 9.7 | 6.7 | 77.0 | 26.8 | 72.4 |
| VGG16 | BIM | R2 | 10.0 | 11.1 | 41.8 | 17.6 | 67.0 |
| VGG16 | BIM | R3 | / | / | / | / | / |
| VGG16 | CW | Global | 10.3 | 49.5 | 99.8 | 100 | 100 |
| VGG16 | CW | R1 | 9.9 | 19.8 | 85.9 | 49.3 | 86.6 |
| VGG16 | CW | R2 | 10.7 | 14.4 | 68.6 | 57.2 | 88.1 |
| VGG16 | CW | R3 | 12.0 | 19.1 | 44.6 | 17.0 | 53.4 |
| VGG16 | DeepFool | Global | 37.9 | 41.8 | 40.4 | 45.3 | 85.5 |
| VGG16 | DeepFool | R1 | 25.1 | 29.7 | 21.4 | 24.8 | 82.4 |
| VGG16 | DeepFool | R2 | 21.3 | 25.7 | 20.0 | 22.3 | 63.1 |
| VGG16 | DeepFool | R3 | / | / | / | / | / |

| Network | DenseNet121 | ResNet34 | VGG16 |
|---|---|---|---|
| Parameters | 6.96M | 21.29M | 134.3M |
| Layers | 121 | 34 | 16 |

| Network / Attack | Global () | Global () | R3 () | R3 () |
|---|---|---|---|---|
| DenseNet121 | 39.2 | 87.4 | 49.3 | 69.4 |
| ResNet34 | 12.6 | 43.6 | 28.1 | 60.9 |
| VGG16 | 68.5 | 92.4 | 55.9 | 68.4 |

Unmodified detectors: STD, ED. Modified detectors: LID, MD, FED.

| Network | Region | STD | ED | LID | MD | FED |
|---|---|---|---|---|---|---|
| DenseNet121 | Global () | 75.6 | 83.9 | 90.3 | 95.5 | 97.4 |
| DenseNet121 | Global () | 78.2 | 85.1 | 97.1 | 99.0 | 98.4 |
| DenseNet121 | R3 () | 70.2 | 80.0 | 81.5 | 87.4 | 97.4 |
| DenseNet121 | R3 () | 70.9 | 78.7 | 78.7 | 74.8 | 97.3 |
| ResNet34 | Global () | 53.6 | 74.2 | 74.3 | 79.5 | 88.4 |
| ResNet34 | Global () | 68.4 | 84.7 | 87.8 | 92.1 | 96.4 |
| ResNet34 | R3 () | 73.7 | 87.1 | 80.7 | 87.9 | 93.8 |
| ResNet34 | R3 () | 76.2 | 88.4 | 87.3 | 89.6 | 97.5 |
| VGG16 | Global () | 65.4 | 63.7 | 87.1 | 76.8 | 94.1 |
| VGG16 | Global () | 54.0 | 54.7 | 94.7 | 83.9 | 94.3 |
| VGG16 | R3 () | 68.5 | 55.5 | 83.6 | 81.6 | 93.9 |
| VGG16 | R3 () | 67.0 | 55.2 | 85.7 | 86.3 | 94.0 |

| Network | FGSM (Original) | FGSM (Adaptive) | BIM (Original) | BIM (Adaptive) |
|---|---|---|---|---|
| DenseNet121 | 93.1 | 2.52 | 100 | 3.76 |
| ResNet34 | 96.0 | 2.40 | 97.3 | 2.44 |
| VGG16 | 97.8 | 8.52 | 99.1 | 8.66 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Gao, X.; Liu, S.; Peng, B.; Wang, Y. Energy-Based Adversarial Example Detection for SAR Images. Remote Sens. 2022, 14, 5168. https://doi.org/10.3390/rs14205168

Zhang Z, Gao X, Liu S, Peng B, Wang Y. Energy-Based Adversarial Example Detection for SAR Images. Remote Sensing. 2022; 14(20):5168. https://doi.org/10.3390/rs14205168

Chicago/Turabian Style: Zhang, Zhiwei, Xunzhang Gao, Shuowei Liu, Bowen Peng, and Yufei Wang. 2022. "Energy-Based Adversarial Example Detection for SAR Images" Remote Sensing 14, no. 20: 5168. https://doi.org/10.3390/rs14205168

APA Style: Zhang, Z., Gao, X., Liu, S., Peng, B., & Wang, Y. (2022). Energy-Based Adversarial Example Detection for SAR Images. Remote Sensing, 14(20), 5168. https://doi.org/10.3390/rs14205168