A Machine-Learning Approach to Intertidal Mudflat Mapping Combining Multispectral Reflectance and Geomorphology from UAV-Based Monitoring

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. UAS Survey Setting

2.3. Structure from Motion—Multi-View Stereo (SfM-MVS) Photogrammetry Processes

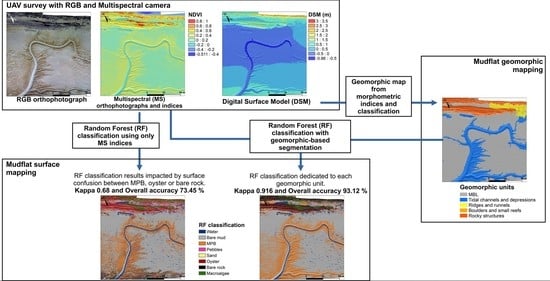

2.4. Image Classifications

2.4.1. Geomorphological Mapping Method

2.4.2. Supervised Image Classification Using Standard and Geomorphic-Based Random Forest Classifier

3. Results

3.1. Geomorphic Mapping

3.2. Reflectance Spectra and Multispectral Indices

3.3. Standard Image Classification

3.4. Geomorphic-Based Image Classification

4. Discussion

4.1. Geomorphological Analysis

4.2. Spectral Constraints

4.3. Generalisation of the Method

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Class | NDVI mean | NDVI std | NDWI mean | NDWI std | GNDVI mean | GNDVI std | Green/NIR mean | Green/NIR std | Red/NIR mean | Red/NIR std |
|---|---|---|---|---|---|---|---|---|---|---|
| Water | −0.052 | 0.086 | 0.020 | 0.112 | −0.001 | 0.026 | 1.072 | 0.279 | 1.129 | 0.212 |
| Bare mud | 0.137 | 0.029 | −0.185 | 0.021 | 0.070 | 0.010 | 0.689 | 0.030 | 0.760 | 0.045 |
| MPB | 0.362 | 0.044 | −0.356 | 0.035 | 0.116 | 0.013 | 0.476 | 0.039 | 0.470 | 0.049 |
| Pebbles | 0.216 | 0.106 | −0.284 | 0.076 | 0.102 | 0.029 | 0.564 | 0.093 | 0.658 | 0.145 |
| Sand | 0.079 | 0.013 | −0.210 | 0.018 | 0.086 | 0.011 | 0.654 | 0.024 | 0.854 | 0.023 |
| Oyster | 0.386 | 0.089 | −0.423 | 0.079 | 0.134 | 0.045 | 0.410 | 0.084 | 0.449 | 0.100 |
| Bare rock | 0.332 | 0.081 | −0.351 | 0.075 | 0.130 | 0.031 | 0.485 | 0.084 | 0.507 | 0.095 |
| Macro algae | 0.785 | 0.080 | −0.753 | 0.088 | 0.279 | 0.063 | 0.144 | 0.063 | 0.123 | 0.057 |
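
The indices in the table above can be related to the two band ratios with a short sketch. It assumes the commonly used definitions NDVI = (NIR − Red)/(NIR + Red) and NDWI = (Green − NIR)/(Green + NIR); these formulas are not stated in this section, so they are an assumption, and the function names are illustrative only. The tabulated GNDVI is not simply the negative of NDWI, so the sketch makes no assumption about how GNDVI was computed.

```python
# Sketch only: standard index definitions are ASSUMED, not taken from the source.

def ndvi(red: float, nir: float) -> float:
    """Normalised Difference Vegetation Index from red and NIR reflectance."""
    return (nir - red) / (nir + red)


def ndwi(green: float, nir: float) -> float:
    """Normalised Difference Water Index from green and NIR reflectance."""
    return (green - nir) / (green + nir)


def ndvi_from_ratio(red_over_nir: float) -> float:
    """Same NDVI, rewritten in terms of the Red/NIR band ratio."""
    return (1.0 - red_over_nir) / (1.0 + red_over_nir)


def ndwi_from_ratio(green_over_nir: float) -> float:
    """Same NDWI, rewritten in terms of the Green/NIR band ratio."""
    return (green_over_nir - 1.0) / (green_over_nir + 1.0)


# Class means of (Green/NIR, Red/NIR) copied from the table above.
RATIO_MEANS = {
    "Bare mud": (0.689, 0.760),
    "MPB": (0.476, 0.470),
    "Sand": (0.654, 0.854),
}

if __name__ == "__main__":
    for name, (green_over_nir, red_over_nir) in RATIO_MEANS.items():
        print(
            f"{name}: NDVI ~ {ndvi_from_ratio(red_over_nir):.3f}, "
            f"NDWI ~ {ndwi_from_ratio(green_over_nir):.3f}"
        )
```

Applying an index formula to a mean ratio is not the same as averaging the per-pixel index, so the results only approximate the tabulated means. For low-variance classes such as bare mud, MPB and sand, they agree to within a few thousandths.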


| UAV parameters | | |
|---|---|---|
| UAV model | DJI Phantom 4 Pro | |
| Flight duration | 25 min | |
| Flight plan design and piloting software | DJI Ground Station Professional | |
| Camera parameters | | |
| Sensor type | RGB Phantom 4 Pro main camera | Parrot Sequoia+ multispectral camera |
| Sensor/image size | CMOS 1.2/3″ (20 megapixels, MP) | 1280 × 960 pixels (1.2 MP) |
| Shutter release | Global shutter | Global shutter |
| Focal length | 8.8/24 mm | 3.98 mm |
| Multispectral bands | - | Green 550 nm (40 nm width); Red 660 nm (40 nm width); Red-edge 735 nm (10 nm width); Near-infrared 790 nm (40 nm width) |
| Gimbal | Stabilised over 3 axes (vertical inclination, roll, panoramic) | Rigid gimbal fixed on UAV shoes with a mast to support the Sunshine sensor device |
| Additional features | IMU, GPS, Sunshine sensor (spectral sensors centred on camera spectral bands), ≈20% reflectance panel | |
| Flight plan settings | | |
| Ground sampling distance (GSD) | 5 cm/pixel (multispectral); 0.5 cm/pixel (RGB) | |
| Flight height | 45 m above ground level | |
| Frontal overlap | 80% | |
| Lateral overlap | 60% | |
| Shooting interval (triggered on time) | 2 s | |
| Surveyed area | 4.95 ha (330 × 150 m) | |
| Ground segment settings | | |
| Positioning device | Topcon Hiper SR antenna | |
| Ground control point (GCP) number | 13 | |

| Sample set | Class 1: Water | Class 2: Bare mud | Class 3: MPB | Class 4: Pebbles | Class 5: Sand | Class 6: Oysters | Class 7: Bare rock | Class 8: Macro algae |
|---|---|---|---|---|---|---|---|---|
| Training samples for standard RF classification, without segmenting the multispectral and index images | | | | | | | | |
| Number of pixels | 4105 | 5371 | 3771 | 638 | 200 | 2171 | 666 | 642 |
| Training samples for geomorphic-based RF classification | | | | | | | | |
| MBL (number of pixels) | 580 | 3922 | 1032 | 436 | 159 | - | - | 143 |
| Tidal channels and depressions (number of pixels) | 3143 | 1025 | 2372 | - | - | - | - | - |
| Ridges and runnels (number of pixels) | 165 | 318 | 168 | 68 | 41 | - | - | 62 |
| Boulders and small reefs (number of pixels) | 13 | 77 | 123 | - | - | 653 | 69 | 241 |
| Rocky structures (number of pixels) | 272 | 91 | 115 | 134 | - | 1518 | 597 | 196 |
| Validation samples for both RF classification methods | | | | | | | | |
| Number of pixels | 4129 | 858 | 5592 | 889 | 434 | 3101 | 10,010 | 2074 |

| Camera model | Phantom 4 Professional RGB camera | Parrot Sequoia+ multispectral camera |
|---|---|---|
| Number of photographs | 186 | 313 per band (1252 in total) |
| Coverage area | 4.89 ha | 7.24 ha |
| Dense point cloud size and density | 73,524,417 points (≈1440 points/m²) | 1,905,290 points (≈36.13 points/m²) |
| Orthophotograph resolution | 0.0132 m/pixel | 0.0504 m/pixel, resampled to 0.05 m/pixel |
| DSM resolution | 0.026 m/pixel, resampled to 0.05 m/pixel | 0.07 m/pixel, resampled to 0.10 m/pixel |
| Global RMSE from GCP geolocation | 0.03 m | 0.02 m |

| Class | Water | Bare mud | Mud with MPB | Pebbles | Sand | Oyster | Bare rock | Macro algae | Total | User accuracy in % |
|---|---|---|---|---|---|---|---|---|---|---|
| Water | 7702 | 20 | 9 | 0 | 0 | 0 | 8 | 0 | 7739 | 99.52 |
| Bare mud | 713 | 6209 | 161 | 0 | 0 | 4 | 51 | 4 | 7142 | 86.93 |
| Mud with MPB | 0 | 0 | 8288 | 0 | 0 | 184 | 646 | 33 | 9151 | 90.56 |
| Pebbles | 7 | 0 | 245 | 638 | 0 | 243 | 384 | 32 | 1549 | 41.18 |
| Sand | 0 | 0 | 0 | 0 | 200 | 0 | 0 | 0 | 200 | 100 |
| Oyster | 0 | 0 | 148 | 0 | 0 | 4996 | 5114 | 67 | 10,325 | 48.38 |
| Bare rock | 0 | 0 | 1131 | 0 | 0 | 236 | 2797 | 10 | 4174 | 67.01 |
| Macro algae | 0 | 0 | 1 | 0 | 0 | 0 | 2810 | 3044 | 5855 | 51.98 |
| Total | 8422 | 6229 | 9983 | 638 | 200 | 5663 | 11,810 | 3190 | | |
| Producer accuracy in % | 91.45 | 99.67 | 83.02 | 100 | 100 | 88.22 | 23.68 | 95.42 | | |
| Overall accuracy in % | 73.45 | | | | | | | | | |
| Kappa metric | 0.68 | | | | | | | | | |
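
The summary rows of the confusion matrix above can be recomputed from its cells. The sketch below is illustrative, not the authors' code. It assumes that rows hold the mapped classes and columns the reference classes, which matches the position of the user-accuracy column, and it uses the usual definitions of overall accuracy, user and producer accuracy, and Cohen's kappa. The function and variable names are hypothetical.

```python
from typing import Dict, List

CLASSES = ["Water", "Bare mud", "Mud with MPB", "Pebbles",
           "Sand", "Oyster", "Bare rock", "Macro algae"]

# Cell counts copied from the table above (ASSUMED: rows = mapped class,
# columns = reference class).
MATRIX: List[List[int]] = [
    [7702, 20, 9, 0, 0, 0, 8, 0],
    [713, 6209, 161, 0, 0, 4, 51, 4],
    [0, 0, 8288, 0, 0, 184, 646, 33],
    [7, 0, 245, 638, 0, 243, 384, 32],
    [0, 0, 0, 0, 200, 0, 0, 0],
    [0, 0, 148, 0, 0, 4996, 5114, 67],
    [0, 0, 1131, 0, 0, 236, 2797, 10],
    [0, 0, 1, 0, 0, 0, 2810, 3044],
]


def accuracy_metrics(matrix: List[List[int]]) -> Dict[str, object]:
    """Overall accuracy, Cohen's kappa, and per-class user/producer accuracy."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    overall = sum(diagonal) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1.0 - expected)

    user = [d / r if r else float("nan") for d, r in zip(diagonal, row_totals)]
    producer = [d / c if c else float("nan") for d, c in zip(diagonal, col_totals)]
    return {"overall": overall, "kappa": kappa, "user": user, "producer": producer}


if __name__ == "__main__":
    metrics = accuracy_metrics(MATRIX)
    print(f"Overall accuracy: {100 * metrics['overall']:.2f} %")
    print(f"Kappa: {metrics['kappa']:.3f}")
    for name, ua, pa in zip(CLASSES, metrics["user"], metrics["producer"]):
        print(f"{name}: user {100 * ua:.2f} %, producer {100 * pa:.2f} %")
```

Recomputed this way, the overall accuracy is about 73.4% and kappa about 0.69, close to the tabulated 73.45% and 0.68. The per-class values reproduce the user and producer accuracy entries, for example the low producer accuracy of bare rock (2797 of 11,810 reference pixels).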

| Class | Water | Bare mud | Mud with MPB | Pebbles | Sand | Oyster | Bare rock | Macro algae | Total | User accuracy in % |
|---|---|---|---|---|---|---|---|---|---|---|
| Water | 7843 | 0 | 6 | 19 | 0 | 1 | 2 | 0 | 7871 | 99.64 |
| Bare mud | 756 | 6285 | 220 | 233 | 172 | 121 | 4 | 15 | 7806 | 80.51 |
| Mud with MPB | 0 | 2 | 9780 | 38 | 0 | 332 | 1 | 70 | 10,223 | 95.66 |
| Pebbles | 0 | 0 | 150 | 202 | 0 | 0 | 0 | 4 | 356 | 56.74 |
| Sand | 0 | 0 | 0 | 0 | 41 | 0 | 0 | 0 | 41 | 100 |
| Oyster | 0 | 0 | 0 | 164 | 0 | 5092 | 176 | 1 | 5433 | 93.72 |
| Bare rock | 0 | 0 | 0 | 3 | 0 | 194 | 11,736 | 33 | 11,966 | 98.07 |
| Macro algae | 0 | 0 | 0 | 0 | 0 | 0 | 36 | 3112 | 3148 | 98.85 |
| Total | 8599 | 6287 | 10,645 | 659 | 213 | 5740 | 11,970 | 3235 | | |
| Producer accuracy in % | 91.20 | 99.96 | 91.87 | 30.65 | 19.24 | 88.71 | 98.04 | 96.19 | | |
| Overall accuracy in % | 93.12 | | | | | | | | | |
| Kappa metric | 0.916 | | | | | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Brunier, G.; Oiry, S.; Lachaussée, N.; Barillé, L.; Le Fouest, V.; Méléder, V. A Machine-Learning Approach to Intertidal Mudflat Mapping Combining Multispectral Reflectance and Geomorphology from UAV-Based Monitoring. Remote Sens. 2022, 14, 5857. https://doi.org/10.3390/rs14225857
