Active Fire Detection from Landsat-8 Imagery Using Deep Multiple Kernel Learning

Abstract

1. Introduction

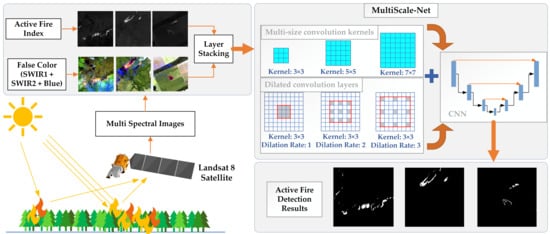

- Developing a novel DL network for multi-scale AFD based on an efficient, sophisticated CNN architecture.

- Introducing a new index for AFD to improve MultiScale-Net performance in extracting high-level features.

- Using convolution layers with multi-size kernels and different dilation rates to facilitate multi-scale AFD.

- Assessing the performance of the proposed network on challenging test samples that contain fires of varied size and shape (e.g., large fire zones alongside strip-shaped and single-pixel fires).

2. Remote Sensing Imagery

2.1. Landsat-8 Active Fire Dataset

2.2. Data Augmentation

3. Deep Multiple Kernel Learning

3.1. Active Fire Index

3.2. Network Architecture

- The proposed DL architecture has a network depth (network length) of 5.

- It takes advantage of a new approach that includes convolutional kernels with different sizes for the training process.

- DCLs with different dilation rates are employed.

- Batch normalization and dropout are used, which play an essential role in preventing over-fitting [40].

- A binary cross-entropy loss function is utilized. Moreover, the “glorot_uniform” method initializes the network [41].

- Intra-block concatenations fuse feature maps from the first and second convolution layers with the same kernel size in each block.

- Inter-block concatenations fuse feature maps generated by convolution layers with varying kernel sizes from distinct blocks.

- Inter-branch concatenations fuse feature maps from the encoder and the decoders’ transposed convolution blocks.
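
The loss and initializer named in the list above can be illustrated with a small, framework-free sketch. It assumes the common fan-in/fan-out convention for convolution kernels (kernel area times channel count) and uses a hypothetical first layer with a 3 × 3 kernel, 4 input features, and 16 filters; the function names and the toy labels and probabilities are illustrative only and are not taken from the paper.

```python
import math
import random


def conv_fans(kernel_h, kernel_w, in_channels, out_channels):
    """Fan-in and fan-out of a 2-D convolution kernel (assumed convention)."""
    receptive = kernel_h * kernel_w
    return receptive * in_channels, receptive * out_channels


def glorot_uniform_limit(fan_in, fan_out):
    """Half-width of the uniform range used by Glorot (Xavier) initialization."""
    return math.sqrt(6.0 / (fan_in + fan_out))


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy over flat lists of labels and probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


# Hypothetical first layer: 3x3 kernel, 4 input features, 16 filters.
fan_in, fan_out = conv_fans(3, 3, 4, 16)
limit = glorot_uniform_limit(fan_in, fan_out)

# Draw the 3*3*4*16 kernel weights uniformly from [-limit, limit].
rng = random.Random(0)
weights = [rng.uniform(-limit, limit) for _ in range(3 * 3 * 4 * 16)]

# Toy fire / non-fire labels and predicted fire probabilities.
loss = binary_cross_entropy([1, 0, 0, 1], [0.9, 0.1, 0.2, 0.6])
```

Under these assumptions the initialization range shrinks as a layer gets wider or its kernel larger, which keeps activation variance roughly stable across the 3 × 3, 5 × 5, and 7 × 7 branches.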

3.3. Accuracy Metrics

4. Results and Discussion

4.1. Accuracy Assessment of AFD

- B3 scenario: The highest precision, sensitivity, F1-score, and IoU are associated with the K3D1 (P = 95.71%), K35D3 (S = 93.93%), K35D1 (F = 91.45%), and K35D1 (I = 84.24%) models, respectively, in this scenario.

- B4 scenario: In this scenario, the best precision, sensitivity, F1-score, and IoU are related to the K3D2 (P = 92.51%), K35D1 (S = 92.52%), K357D2 (F = 91.62%), and K357D2 (I = 84.54%) models, respectively.

- B1 scenario: In this scenario, the best precision, sensitivity, F1-score, and IoU are associated with the K35D1 (P = 94.5%), K357D2 (S = 92.49%), K357D2 (F = 91.11%), and K357D2 (I = 83.67%) models, respectively.
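
The four metrics quoted above (P, S, F, I) follow from pixel-level confusion counts. The sketch below uses the standard definitions of precision, sensitivity, F1-score, and IoU; the counts are made-up numbers for illustration, not values from the study.

```python
def fire_metrics(tp, fp, fn):
    """Precision, sensitivity, F1-score, and IoU from confusion counts.

    tp: fire pixels correctly detected
    fp: non-fire pixels flagged as fire
    fn: fire pixels that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"P": precision, "S": sensitivity, "F": f1, "I": iou}


# Hypothetical counts for one test scene.
m = fire_metrics(tp=900, fp=60, fn=100)
percentages = {name: round(100 * value, 2) for name, value in m.items()}
```

True negatives do not appear in any of the four formulas, which is why these metrics remain informative when fire pixels are a tiny fraction of each scene.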

4.2. Qualitative Evaluation of MultiScale-Net

4.3. Qualitative Assessment of MultiScale-Net in Severe Cloud Condition

4.4. Effect of Adding AFI to the Three Landsat-8 Bands

4.5. Effect of Multi-Size Kernels and DCLs

4.6. Comparison Analysis

5. Conclusions

- Geographically diverse training samples from five different study areas across the world made the network robust against different geographical and illumination conditions.

- MultiScale-Net showed satisfactory performance in extracting different-sized fires in the challenging test samples.

- This study proposed AFI, a new indicator for AFD derived from the SWIR2 and Blue bands. MultiScale-Net uses AFI as an input feature to increase accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Seydi, S.T.; Hasanlou, M.; Chanussot, J. DSMNN-Net: A Deep Siamese Morphological Neural Network Model for Burned Area Mapping Using Multispectral Sentinel-2 and Hyperspectral PRISMA Images. Remote Sens. 2021, 13, 5138.

- Lalani, N.; Drolet, J.L.; McDonald-Harker, C.; Brown, M.R.G.; Brett-MacLean, P.; Agyapong, V.I.O.; Greenshaw, A.J.; Silverstone, P.H. Nurturing Spiritual Resilience to Promote Post-Disaster Community Recovery: The 2016 Alberta Wildfire in Canada. Front. Public Health 2021, 9, 682558.

- Seydi, S.T.; Akhoondzadeh, M.; Amani, M.; Mahdavi, S. Wildfire Damage Assessment over Australia Using Sentinel-2 Imagery and MODIS Land Cover Product within the Google Earth Engine Cloud Platform. Remote Sens. 2021, 13, 220.

- Keeley, J.E.; Syphard, A.D. Large California Wildfires: 2020 Fires in Historical Context. Fire Ecol. 2021, 17, 22.

- FAO. Global Forest Resources Assessment 2020—Key Findings; FAO: Rome, Italy, 2020.

- Gin, J.L.; Balut, M.D.; Der-Martirosian, C.; Dobalian, A. Managing the Unexpected: The Role of Homeless Service Providers during the 2017–2018 California Wildfires. J. Community Psychol. 2021, 49, 2532–2547.

- Ball, G.; Regier, P.; González-Pinzón, R.; Reale, J.; Van Horn, D. Wildfires Increasingly Impact Western US Fluvial Networks. Nat. Commun. 2021, 12, 2484.

- Toulouse, T.; Rossi, L.; Celik, T.; Akhloufi, M. Automatic Fire Pixel Detection Using Image Processing: A Comparative Analysis of Rule-Based and Machine Learning-Based Methods. Signal Image Video Process. 2016, 10, 647–654.

- Martinez-de Dios, J.R.; Arrue, B.C.; Ollero, A.; Merino, L.; Gómez-Rodríguez, F. Computer Vision Techniques for Forest Fire Perception. Image Vis. Comput. 2008, 26, 550–562.

- Valero, M.M.; Rios, O.; Pastor, E.; Planas, E. Automated Location of Active Fire Perimeters in Aerial Infrared Imaging Using Unsupervised Edge Detectors. Int. J. Wildland Fire 2018, 27, 241–256.

- Alkhatib, A.A.A. A Review on Forest Fire Detection Techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368.

- Wooster, M.J.; Roberts, G.J.; Giglio, L.; Roy, D.P.; Freeborn, P.H.; Boschetti, L.; Justice, C.; Ichoku, C.; Schroeder, W.; Davies, D.; et al. Satellite Remote Sensing of Active Fires: History and Current Status, Applications and Future Requirements. Remote Sens. Environ. 2021, 267, 112694.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

- Csiszar, I.; Schroeder, W.; Giglio, L.; Ellicott, E.; Vadrevu, K.P.; Justice, C.O.; Wind, B. Active Fires from the Suomi NPP Visible Infrared Imaging Radiometer Suite: Product Status and First Evaluation Results. J. Geophys. Res. Atmos. 2014, 119, 803–816.

- Schroeder, W.; Oliva, P.; Giglio, L.; Csiszar, I.A. The New VIIRS 375m Active Fire Detection Data Product: Algorithm Description and Initial Assessment. Remote Sens. Environ. 2014, 143, 85–96.

- Xiong, X.; Aldoretta, E.; Angal, A.; Chang, T.; Geng, X.; Link, D.; Salomonson, V.; Twedt, K.; Wu, A. Terra MODIS: 20 Years of on-Orbit Calibration and Performance. J. Appl. Remote Sens. 2020, 14, 1–16.

- Giglio, L.; Schroeder, W.; Justice, C.O. The Collection 6 MODIS Active Fire Detection Algorithm and Fire Products. Remote Sens. Environ. 2016, 178, 31–41.

- Parto, F.; Saradjian, M.; Homayouni, S. MODIS Brightness Temperature Change-Based Forest Fire Monitoring. J. Indian Soc. Remote Sens. 2020, 48, 163–169.

- He, L.; Li, Z. Enhancement of a Fire-Detection Algorithm by Eliminating Solar Contamination Effects and Atmospheric Path Radiance: Application to MODIS Data. Int. J. Remote Sens. 2011, 32, 6273–6293.

- Engel, C.B.; Jones, S.D.; Reinke, K. A Seasonal-Window Ensemble-Based Thresholding Technique Used to Detect Active Fires in Geostationary Remotely Sensed Data. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4947–4956.

- Jang, E.; Kang, Y.; Im, J.; Lee, D.-W.; Yoon, J.; Kim, S.-K. Detection and Monitoring of Forest Fires Using Himawari-8 Geostationary Satellite Data in South Korea. Remote Sens. 2019, 11, 271.

- Xie, Z.; Song, W.; Ba, R.; Li, X.; Xia, L. A Spatiotemporal Contextual Model for Forest Fire Detection Using Himawari-8 Satellite Data. Remote Sens. 2018, 10, 1992.

- Liu, Z.; Wu, K.; Jiang, R.; Zhang, H. A Simple Artificial Neural Network For Fire Detection Using LANDSAT-8 Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 447–452.

- Kumar, S.S.; Roy, D.P. Global Operational Land Imager Landsat-8 Reflectance-Based Active Fire Detection Algorithm. Int. J. Digit. Earth 2018, 11, 154–178.

- Schroeder, W.; Oliva, P.; Giglio, L.; Quayle, B.; Lorenz, E.; Morelli, F. Active Fire Detection Using Landsat-8/OLI Data. Landsat 8 Sci. Results 2016, 185, 210–220.

- Murphy, S.W.; de Souza Filho, C.R.; Wright, R.; Sabatino, G.; Correa Pabon, R. HOTMAP: Global Hot Target Detection at Moderate Spatial Resolution. Remote Sens. Environ. 2016, 177, 78–88.

- Ansari, M.; Homayouni, S.; Safari, A.; Niazmardi, S. A New Convolutional Kernel Classifier for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 11240–11256.

- Ranjbar, S.; Zarei, A.; Hasanlou, M.; Akhoondzadeh, M.; Amini, J.; Amani, M. Machine Learning Inversion Approach for Soil Parameters Estimation over Vegetated Agricultural Areas Using a Combination of Water Cloud Model and Calibrated Integral Equation Model. J. Appl. Remote Sens. 2021, 15, 1–17.

- Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

- Diakogiannis, F.I.; Waldner, F.; Caccetta, P.; Wu, C. ResUNet-a: A Deep Learning Framework for Semantic Segmentation of Remotely Sensed Data. ISPRS J. Photogramm. Remote Sens. 2020, 162, 94–114.

- Vani, K. Deep Learning Based Forest Fire Classification and Detection in Satellite Images. In Proceedings of the 2019 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019; pp. 61–65.

- Phan, T.C.; Nguyen, T.T. Remote Sensing Meets Deep Learning: Exploiting Spatio-Temporal-Spectral Satellite Images for Early Wildfire Detection. No. REP_WORK. 2019. Available online: https://Infoscience.Epfl.Ch/Record/270339 (accessed on 7 September 2020).

- de Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active Fire Detection in Landsat-8 Imagery: A Large-Scale Dataset and a Deep-Learning Study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

- Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Wang, Y.; Pan, X.; Song, S.; Zhang, H.; Huang, G.; Wu, C. Implicit Semantic Data Augmentation for Deep Networks. Adv. Neural Inf. Process. Syst. 2019, 32, 12635–12644.

- King, M.D.; Platnick, S.; Moeller, C.C.; Revercomb, H.E.; Chu, D.A. Remote Sensing of Smoke, Land, and Clouds from the NASA ER-2 during SAFARI 2000. J. Geophys. Res. Atmos. 2003, 108, 8502.

- Giglio, L.; Descloitres, J.; Justice, C.O.; Kaufman, Y.J. An Enhanced Contextual Fire Detection Algorithm for MODIS. Remote Sens. Environ. 2003, 87, 273–282.

- Cadau, E.; Laneve, G. Improved MSG-SEVIRI Images Cloud Masking and Evaluation of Its Impact on the Fire Detection Methods. In Proceedings of the IGARSS 2008—2008 IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008; Volume 2, p. II-1056.

- Ho, Y.; Wookey, S. The Real-World-Weight Cross-Entropy Loss Function: Modeling the Costs of Mislabeling. IEEE Access 2020, 8, 4806–4813.

- Garbin, C.; Zhu, X.; Marques, O. Dropout vs. Batch Normalization: An Empirical Study of Their Impact to Deep Learning. Multimed. Tools Appl. 2020, 79, 12777–12815.

- Glorot, X.; Bengio, Y. Understanding the Difficulty of Training Deep Feedforward Neural Networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; Volume 9, pp. 249–256.

- Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 6.

- Hamers, L. Similarity Measures in Scientometric Research: The Jaccard Index versus Salton’s Cosine Formula. Inf. Process. Manag. 1989, 25, 315–318.

- Khoshboresh-Masouleh, M.; Alidoost, F.; Arefi, H. Multiscale Building Segmentation Based on Deep Learning for Remote Sensing RGB Images from Different Sensors. J. Appl. Remote Sens. 2020, 14, 1–21.

- Khoshboresh-Masouleh, M.; Shah-Hosseini, R. A Deep Learning Method for Near-Real-Time Cloud and Cloud Shadow Segmentation from Gaofen-1 Images. Comput. Intell. Neurosci. 2020, 2020, 8811630.

| Configuration Parameters | Input Values | Abbreviation |
|---|---|---|
| Input feature(s) | SWIR2 + SWIR1 + Blue + AFI | B4 |
| Input feature(s) | SWIR2 + SWIR1 + Blue | B3 |
| Input feature(s) | AFI | B1 |
| Kernel size | (3, 3) | K3 |
| Kernel size | (3, 3) + (5, 5) | K35 |
| Kernel size | (3, 3) + (5, 5) + (7, 7) | K357 |
| Dilation rate | (1, 1) + (1, 1) | D1 |
| Dilation rate | (1, 1) + (2, 2) | D2 |
| Dilation rate | (1, 1) + (3, 3) | D3 |
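
To make the kernel-size and dilation-rate options concrete, the sketch below computes how far a dilated kernel reaches. It uses the standard relation for a kernel of size k with dilation d, k_eff = k + (k - 1)(d - 1), and it assumes that the two entries of each dilation setting (e.g., (1, 1) + (2, 2) for D2) apply to the first and second convolution of a block applied in sequence with stride 1. That reading of the table, and the helper names, are assumptions made for illustration.

```python
def effective_kernel(k, d):
    """Spatial extent covered by a k x k kernel with dilation rate d."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(k, dilations):
    """Receptive field of consecutive stride-1 convolutions with kernel size k."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(k, d) - 1
    return rf


# Dilation settings as listed in the configuration table (first, second layer).
DILATIONS = {"D1": (1, 1), "D2": (1, 2), "D3": (1, 3)}
KERNELS = {"K3": 3, "K5": 5, "K7": 7}

receptive_fields = {
    (k_name, d_name): stacked_receptive_field(k, rates)
    for k_name, k in KERNELS.items()
    for d_name, rates in DILATIONS.items()
}

for (k_name, d_name), rf in sorted(receptive_fields.items()):
    print(f"{k_name} with {d_name}: {rf} x {rf} pixels")
```

Under this reading, a 3 × 3 branch with D3 sees the same 9 × 9 neighbourhood as an undilated 5 × 5 branch while using fewer weights, which is one way dilation can support multi-scale detection.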

| Encoder Layer | Encoder Output | Decoder Layer | Decoder Output |
|---|---|---|---|
| Conv. 3 × 3 + BN + ReLU | 256 × 256 × 16 | Transposed Conv. 3 × 3 | 32 × 32 × 128 |
| Conv. 3 × 3 + BN | 256 × 256 × 16 | Inter-branch Concatenation | 32 × 32 × 896 |
| Intra-block Concatenation + ReLU | 256 × 256 × 32 | | |
| Conv. 5 × 5 + BN + ReLU | 256 × 256 × 16 | Conv. 3 × 3 + BN + ReLU | 32 × 32 × 128 |
| Conv. 5 × 5 + BN | 256 × 256 × 16 | Conv. 3 × 3 + BN | 32 × 32 × 128 |
| Intra-block Concatenation + ReLU | 256 × 256 × 32 | Intra-block Concatenation + ReLU | 32 × 32 × 256 |
| Conv. 7 × 7 + BN + ReLU | 256 × 256 × 16 | Conv. 5 × 5 + BN + ReLU | 32 × 32 × 128 |
| Conv. 7 × 7 + BN | 256 × 256 × 16 | Conv. 5 × 5 + BN | 32 × 32 × 128 |
| Intra-block Concatenation + ReLU | 256 × 256 × 32 | Intra-block Concatenation + ReLU | 32 × 32 × 256 |
| Inter-block Concatenation | 256 × 256 × 96 | Conv. 7 × 7 + BN + ReLU | 32 × 32 × 128 |
| Max-pooling 2 × 2 | 128 × 128 × 96 | Conv. 7 × 7 + BN | 32 × 32 × 128 |
| | | Intra-block Concatenation + ReLU | 32 × 32 × 256 |
| | | Inter-block Concatenation | 32 × 32 × 768 |
| Conv. 3 × 3 + BN + ReLU | 128 × 128 × 32 | Transposed Conv. 3 × 3 | 64 × 64 × 64 |
| Conv. 3 × 3 + BN | 128 × 128 × 32 | Inter-branch Concatenation | 64 × 64 × 448 |
| Intra-block Concatenation + ReLU | 128 × 128 × 64 | | |
| Conv. 5 × 5 + BN + ReLU | 128 × 128 × 32 | Conv. 3 × 3 + BN + ReLU | 64 × 64 × 64 |
| Conv. 5 × 5 + BN | 128 × 128 × 32 | Conv. 3 × 3 + BN | 64 × 64 × 64 |
| Intra-block Concatenation + ReLU | 128 × 128 × 64 | Intra-block Concatenation + ReLU | 64 × 64 × 128 |
| Conv. 7 × 7 + BN + ReLU | 128 × 128 × 32 | Conv. 5 × 5 + BN + ReLU | 64 × 64 × 64 |
| Conv. 7 × 7 + BN | 128 × 128 × 32 | Conv. 5 × 5 + BN | 64 × 64 × 64 |
| Intra-block Concatenation + ReLU | 128 × 128 × 64 | Intra-block Concatenation + ReLU | 64 × 64 × 128 |
| Inter-block Concatenation | 128 × 128 × 192 | Conv. 7 × 7 + BN + ReLU | 64 × 64 × 64 |
| Max-pooling 2 × 2 | 64 × 64 × 192 | Conv. 7 × 7 + BN | 64 × 64 × 64 |
| | | Intra-block Concatenation + ReLU | 64 × 64 × 128 |
| | | Inter-block Concatenation | 64 × 64 × 384 |
| Conv. 3 × 3 + BN + ReLU | 64 × 64 × 64 | Transposed Conv. 3 × 3 | 128 × 128 × 32 |
| Conv. 3 × 3 + BN | 64 × 64 × 64 | Inter-branch Concatenation | 128 × 128 × 224 |
| Intra-block Concatenation + ReLU | 64 × 64 × 128 | | |
| Conv. 5 × 5 + BN + ReLU | 64 × 64 × 64 | Conv. 3 × 3 + BN + ReLU | 128 × 128 × 32 |
| Conv. 5 × 5 + BN | 64 × 64 × 64 | Conv. 3 × 3 + BN | 128 × 128 × 32 |
| Intra-block Concatenation + ReLU | 64 × 64 × 128 | Intra-block Concatenation + ReLU | 128 × 128 × 64 |
| Conv. 7 × 7 + BN + ReLU | 64 × 64 × 64 | Conv. 5 × 5 + BN + ReLU | 128 × 128 × 32 |
| Conv. 7 × 7 + BN | 64 × 64 × 64 | Conv. 5 × 5 + BN | 128 × 128 × 32 |
| Intra-block Concatenation + ReLU | 64 × 64 × 128 | Intra-block Concatenation + ReLU | 128 × 128 × 64 |
| Inter-block Concatenation | 64 × 64 × 384 | Conv. 7 × 7 + BN + ReLU | 128 × 128 × 32 |
| Max-pooling 2 × 2 | 32 × 32 × 384 | Conv. 7 × 7 + BN | 128 × 128 × 32 |
| | | Intra-block Concatenation + ReLU | 128 × 128 × 64 |
| | | Inter-block Concatenation | 128 × 128 × 192 |
| Conv. 3 × 3 + BN + ReLU | 32 × 32 × 128 | Transposed Conv. 3 × 3 | 256 × 256 × 16 |
| Conv. 3 × 3 + BN | 32 × 32 × 128 | Inter-branch Concatenation | 256 × 256 × 112 |
| Intra-block Concatenation + ReLU | 32 × 32 × 256 | | |
| Conv. 5 × 5 + BN + ReLU | 32 × 32 × 128 | Conv. 3 × 3 + BN + ReLU | 256 × 256 × 16 |
| Conv. 5 × 5 + BN | 32 × 32 × 128 | Conv. 3 × 3 + BN | 256 × 256 × 16 |
| Intra-block Concatenation + ReLU | 32 × 32 × 256 | Intra-block Concatenation + ReLU | 256 × 256 × 32 |
| Conv. 7 × 7 + BN + ReLU | 32 × 32 × 128 | Conv. 5 × 5 + BN + ReLU | 256 × 256 × 16 |
| Conv. 7 × 7 + BN | 32 × 32 × 128 | Conv. 5 × 5 + BN | 256 × 256 × 16 |
| Intra-block Concatenation + ReLU | 32 × 32 × 256 | Intra-block Concatenation + ReLU | 256 × 256 × 32 |
| Inter-block Concatenation | 32 × 32 × 768 | Conv. 7 × 7 + BN + ReLU | 256 × 256 × 16 |
| Max-pooling 2 × 2 | 16 × 16 × 768 | Conv. 7 × 7 + BN | 256 × 256 × 16 |
| | | Intra-block Concatenation + ReLU | 256 × 256 × 32 |
| | | Inter-block Concatenation | 256 × 256 × 96 |
| | | Dropout | 256 × 256 × 96 |
| Conv. 3 × 3 + BN + ReLU | 16 × 16 × 256 | Conv. 1 × 1 + Softmax | 256 × 256 × 2 |
| Conv. 3 × 3 + BN | 16 × 16 × 256 | | |
| Intra-block Concatenation + ReLU | 16 × 16 × 512 | | |
| Conv. 5 × 5 + BN + ReLU | 16 × 16 × 256 | | |
| Conv. 5 × 5 + BN | 16 × 16 × 256 | | |
| Intra-block Concatenation + ReLU | 16 × 16 × 512 | | |
| Conv. 7 × 7 + BN + ReLU | 16 × 16 × 256 | | |
| Conv. 7 × 7 + BN | 16 × 16 × 256 | | |
| Intra-block Concatenation + ReLU | 16 × 16 × 512 | | |
| Inter-block Concatenation | 16 × 16 × 1536 | | |

Total number of trainable parameters: ~45 M
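
The channel counts in the architecture table follow directly from the three concatenation types. The sketch below reproduces that bookkeeping in plain Python: an intra-block concatenation doubles a branch's filter count, an inter-block concatenation stacks the three kernel-size branches, and an inter-branch concatenation adds the matching encoder output to the transposed-convolution output. The variable and function names are illustrative; only the filter counts come from the table.

```python
KERNEL_BRANCHES = 3  # 3x3, 5x5, and 7x7 branches in each block


def block_channels(filters, branches=KERNEL_BRANCHES):
    """Return (intra-block, inter-block) channel counts for one block."""
    intra = 2 * filters       # first and second conv outputs concatenated
    inter = branches * intra  # the kernel-size branches concatenated
    return intra, inter


# Filters per convolution at each encoder level (256, 128, 64, 32, 16 px).
ENCODER_FILTERS = [16, 32, 64, 128, 256]
encoder_out = [block_channels(f)[1] for f in ENCODER_FILTERS]

# Filters per convolution at each decoder level (32, 64, 128, 256 px).
DECODER_FILTERS = [128, 64, 32, 16]

# Each decoder level is fused with the encoder output of the same resolution,
# i.e. encoder levels 4, 3, 2, 1 in that order.
skips = encoder_out[-2::-1]
inter_branch = [f + s for f, s in zip(DECODER_FILTERS, skips)]
decoder_out = [block_channels(f)[1] for f in DECODER_FILTERS]

print("encoder inter-block:", encoder_out)
print("decoder inter-branch:", inter_branch)
print("decoder inter-block:", decoder_out)
```

The printed lists can be compared against the inter-block and inter-branch rows of the table (for example 128 + 768 = 896 at the 32 × 32 level).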

Accuracy metrics (%) for each configuration scenario. P: precision; S: sensitivity; F: F1-score; I: IoU.

| Input | Kernel Size | Dilation Rate | P | S | F | I |
|---|---|---|---|---|---|---|
| B3 | K3 | D1 | 95.71 | 86.16 | 90.68 | 82.96 |
| B3 | K3 | D2 | 89.48 | 92.18 | 90.81 | 83.17 |
| B3 | K3 | D3 | 93.42 | 86.97 | 90.08 | 81.95 |
| B3 | K35 | D1 | 91.06 | 91.83 | 91.45 | 84.24 |
| B3 | K35 | D2 | 93.65 | 86.89 | 90.14 | 82.06 |
| B3 | K35 | D3 | 88.02 | 93.93 | 90.87 | 83.28 |
| B3 | K357 | D1 | 92.26 | 89.78 | 91 | 83.49 |
| B3 | K357 | D2 | 94.01 | 87.08 | 90.41 | 82.51 |
| B3 | K357 | D3 | 95.29 | 84.05 | 89.32 | 80.7 |
| B3 | Average | | 92.54 | 88.76 | 90.53 | 82.71 |
| B3 | Best Model | | K3D1 | K35D3 | K35D1 | K35D1 |
| B1 | K3 | D1 | 93.25 | 85.35 | 89.12 | 80.38 |
| B1 | K3 | D2 | 87.8 | 90.04 | 88.91 | 80.04 |
| B1 | K3 | D3 | 90.58 | 88.53 | 89.54 | 81.07 |
| B1 | K35 | D1 | 94.5 | 83.42 | 88.61 | 79.56 |
| B1 | K35 | D2 | 91.77 | 89.25 | 90.49 | 82.64 |
| B1 | K35 | D3 | 93.63 | 84.54 | 88.85 | 79.95 |
| B1 | K357 | D1 | 93.59 | 85.22 | 89.21 | 80.52 |
| B1 | K357 | D2 | 89.76 | 92.49 | 91.11 | 83.67 |
| B1 | K357 | D3 | 92.63 | 80.21 | 85.97 | 75.4 |
| B1 | Average | | 91.94 | 86.56 | 89.09 | 80.35 |
| B1 | Best Model | | K35D1 | K357D2 | K357D2 | K357D2 |
| B4 | K3 | D1 | 91 | 89.56 | 90.27 | 82.27 |
| B4 | K3 | D2 | 92.51 | 87.96 | 90.18 | 82.12 |
| B4 | K3 | D3 | 92.05 | 84.15 | 87.92 | 78.54 |
| B4 | K35 | D1 | 89.95 | 92.52 | 91.22 | 83.86 |
| B4 | K35 | D2 | 92.49 | 88.92 | 90.67 | 82.93 |
| B4 | K35 | D3 | 92.18 | 89.05 | 90.59 | 82.79 |
| B4 | K357 | D1 | 90.51 | 92.39 | 91.44 | 84.23 |
| B4 | K357 | D2 | 91.72 | 91.52 | 91.62 | 84.54 |
| B4 | K357 | D3 | 91.63 | 90.91 | 91.27 | 83.94 |
| B4 | Average | | 91.56 | 89.66 | 90.58 | 82.79 |
| B4 | Best Model | | K3D2 | K35D1 | K357D2 | K357D2 |
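
The F and I columns are fully determined by P and S, which gives a quick consistency check on any row. Since 1/P = (TP + FP)/TP and 1/S = (TP + FN)/TP, it follows that 1/I = 1/P + 1/S - 1, so I = PS / (P + S - PS), alongside the usual F = 2PS / (P + S). The sketch below applies these identities to two rows of the table; the function names are illustrative.

```python
def f1_from_ps(p, s):
    """F1-score from precision and sensitivity (both as fractions)."""
    return 2 * p * s / (p + s)


def iou_from_ps(p, s):
    """IoU from precision and sensitivity, using 1/I = 1/P + 1/S - 1."""
    return p * s / (p + s - p * s)


# (P, S) in percent, copied from the results table.
rows = {
    "B3-K3D1": (95.71, 86.16),    # reported F = 90.68, I = 82.96
    "B4-K357D2": (91.72, 91.52),  # reported F = 91.62, I = 84.54
}

derived = {}
for name, (p_pct, s_pct) in rows.items():
    p, s = p_pct / 100, s_pct / 100
    derived[name] = (100 * f1_from_ps(p, s), 100 * iou_from_ps(p, s))
    print(f"{name}: F = {derived[name][0]:.2f}, I = {derived[name][1]:.2f}")
```

Both rows reproduce the reported F and I values to two decimals, which suggests the four metrics were computed from the same pixel counts.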

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rostami, A.; Shah-Hosseini, R.; Asgari, S.; Zarei, A.; Aghdami-Nia, M.; Homayouni, S. Active Fire Detection from Landsat-8 Imagery Using Deep Multiple Kernel Learning. Remote Sens. 2022, 14, 992. https://doi.org/10.3390/rs14040992
