Extraction of Winter Wheat Planting Area Based on Multi-Scale Fusion

Abstract

1. Introduction

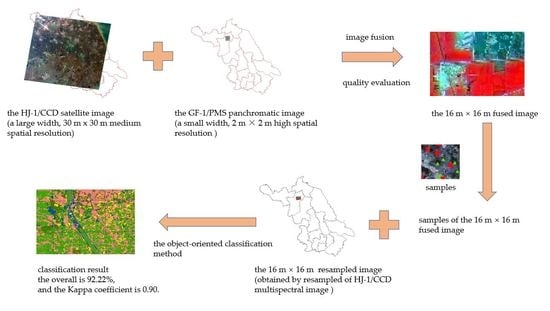

2. Materials and Methods

2.1. Study Area Selection

2.2. Remote Sensing Image Data Acquisition and Preprocessing

2.3. Remote Sensing Image Fusion

2.4. Spectral Reflectance of Ground Objects

2.5. Fused Image Quality Evaluation

2.6. Object-Oriented Classification

2.6.1. Sample Establishment and Utilization

2.6.2. Determination of Segmentation Factors and Image Segmentation

2.6.3. Extraction of Spectral Information Features

2.6.4. Extraction of Texture Information Features

3. Results

3.1. Analysis of Spectral Characteristics of Different Ground Objects

3.2. Subjective Evaluation of Fused Image Quality at Different Spatial Scales

3.3. Objective Evaluation of Fusion Image Quality at Different Spatial Scales

3.4. Selection of Remote Sensing Image Segmentation Parameters

3.5. Classification Rules for Combining Spectral and Texture Information Features

3.6. Distribution Characteristics of Winter Wheat Planting Area

3.7. Accuracy Evaluation of Object-Oriented Classification Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Li, W.; Wang, J.; Zhao, C.; Liu, L. A model of estimating winter wheat yield based on TM image and yield formation. J. Triticeae Crops 2007, 5, 904–907.

- Li, W.; Liu, Y.; Chen, H.; Zhang, C. Estimation model of winter wheat disease based on meteorological factors and spectral information. Food Prod. Process. Nutr. 2020, 2, 5.

- Li, X.; Meng, Q.; Gu, X.; Jancso, T.; Yu, T.; Wang, K.; Mavromatis, S. A hybrid method combining pixel-based and object-oriented methods and its application in Hungary using Chinese HJ-1 satellite images. Int. J. Remote Sens. 2013, 34, 4655–4668.

- Li, W.; Gu, X.; Ge, G.; Chen, H.; Zhang, C. Development of remote sensing monitoring information system for county scale winter wheat diseases. Jiangsu J. Agric. Sci. 2019, 35, 302–306.

- Zhou, Q.; Yu, Q.; Liu, J.; Wu, W.; Tang, H. Perspective of Chinese GF-1 high-resolution satellite data in agricultural remote sensing monitoring. J. Integr. Agr. 2017, 16, 242–251.

- Li, Z.; Li, W.; Shen, S.; Ma, J. Application of HJ and GF1 image data to extract rice planting area. Jiangsu J. Agric. Sci. 2016, 32, 111–117.

- Jin, Z.; Li, W.; Jing, Y. Appropriate extraction scale of winter wheat planting area based on image fusion. Jiangsu J. Agric. Sci. 2015, 31, 1312–1317.

- Li, W.; Chen, H.; Jin, Z.; Zhang, C.; Ge, G.; Ji, F. Remote sensing monitoring of winter wheat scab based on suitable scale selection. J. Triticeae Crops 2018, 38, 1374–1380.

- Li, X.; Ma, B.; Zhang, S.; Chen, Y.; Wu, L. Study on classification of ground objects with multispectral and SAR images. Map. Spa. Geogr. Inform. 2019, 42, 55–58.

- Seo, D.K.; Kim, Y.H.; Eo, Y.D.; Lee, M.H.; Park, W.Y. Fusion of SAR and multispectral images using random forest regression for change detection. ISPRS Int. J. Geo-Inf. 2018, 7, 401.

- Li, S.; Zheng, D. Applications of artificial neural networks to geosciences: Review and prospect. Adv. Earth Sci. 2003, 1, 68–76.

- Li, W.; Li, Z.; Wang, J.; Huang, W.; Guo, W. Classification monitoring of grain protein contents of winter wheat by TM image based on ISODATA. Jiangsu J. Agric. Sci. 2009, 25, 1247–1251.

- Li, N.; Zhu, X.; Pan, Y.; Zhan, P. Optimized SVM based on artificial bee colony algorithm for remote sensing image classification. J. Remote Sens. 2018, 22, 559–569.

- Izquierdo-Verdiguier, E.; Laparra, V.; Gomez-Chova, L.; Camps-Valls, G. Encoding invariances in remote sensing image classification with SVM. IEEE Geosci. Remote Sens. Lett. 2013, 10, 981–985.

- Zhou, C.; Wang, P.; Zhang, Z.; Qi, C. Classification of urban land based on object-oriented information extraction technology. Remote Sens. Technol. Appl. 2008, 1, 31–35+123.

- Mo, L.; Cao, Y.; Hu, Y.; Liu, M.; Xia, D. Object-oriented Classification for Satellite Remote Sensing of Wetlands: A Case Study in Southern Hangzhou Bay Area. Wetland Sci. 2012, 10, 206–213.

- Li, Z.; Li, W.; Shen, S. A classification of wheat yield by remote-monitoring based on optimization ISODATA. Jiangsu J. Agric. Sci. 2009, 2, 301–302.

- Shackelford, A.K.; Davis, C.H. A combined fuzzy pixel-based and object-based approach for classification of high-resolution multispectral data over urban areas. IEEE Trans. Geosci. Remote 2003, 41, 2354–2363.

- Song, M. Object-oriented urban land classification with GF-2 remote sensing image. Remote Sens. Technol. Appl. 2019, 34, 547–553+629.

- Liu, Y.; Li, M.; Mao, L.; Xu, F.; Huang, S. Review of remotely sensed imagery classification patterns based on object-oriented image analysis. Chinese Geogr. Sci. 2006, 16, 282–288.

- Cui, W.; Zheng, Z.; Zhou, Q.; Huang, J.; Yuan, Y. Application of a parallel spectral-spatial convolution neural network in object-oriented remote sensing land use classification. Remote Sens. Lett. 2018, 9, 334–342.

- Zhang, Y.; He, Z.; Wu, Z. Crop classification and extraction based on multi-source remote sensing image. J. Shandong Agric. Univ. (Nat. Sci.) 2021, 52, 615–618.

- Zhou, Z.; Huang, J.; Wang, J.; Zhang, K.; Kuang, Z.; Zhong, S.; Song, X. Object-oriented classification of sugarcane using time-series middle-resolution remote sensing data based on AdaBoost. PLoS ONE 2015, 10, e0142069.

- Gao, Y.; Hu, Z.; Fan, R. Effect of high-resolution image fusion algorithm on the classification precision of land utilization in coastal wetland. Bull. Surv. Map. 2022, 1, 116–120.

- Wang, F.; Yang, W.; Wang, J.; Chen, A. Chinese high-resolution satellite pixel level image fusion and its quality evaluation. Sci. Surv. Map. 2021, 46, 73–80.

- Wang, Z.; Nie, C.; Wang, H.; Ao, Y.; Jin, X.; Yu, X.; Bai, Y.; Liu, Y.; Shao, M.; Cheng, M.; et al. Detection and analysis of degree of maize lodging using UAV-RGB image multi-feature factors and various classification methods. ISPRS Int. J. Geo-Inf. 2021, 10, 309.

- Jin, B.; Ye, P.; Zhang, X.; Song, W.; Li, S. Object-Oriented method combined with deep convolutional neural networks for land-use-type classification of remote sensing images. J. Indian Soc. Remote 2019, 47, 951–965.

- Li, W.; Jiang, N. Extraction of Winter Wheat Planting Area by Object-oriented Classification Method. J. Triticeae Crops 2012, 32, 701–705.

- Zhao, B.; Wang, X.; Yan, S.; Zhang, Y.; Zhang, T. Application of object-oriented classification method in the extraction of soil and water conservation measures. Sci. Soil Water Conserv. 2022, 20, 122–127.

- Li, Q.; Liu, J.; Mi, X.; Yang, J.; Yu, T. Object-oriented crop classification for GF-6 WFV remote sensing images based on Convolutional Neural Network. J. Remote Sens. 2021, 25, 549–558.

- Li, W.; Huang, W.; Dong, Y.; Chen, H.; Wang, J.; Shan, J. Estimation on winter wheat scab based on combination of temperature, humidity and remote sensing vegetation index. Trans. Chin. Soc. Agric. Eng. 2017, 33, 203–210.

- Gu, X.; Li, W.; Wang, L. Understanding vegetation changes in northern China and Mongolia with change vector analysis. SpringerPlus 2016, 5, 1780–1793.

- Pei, H.; Sun, T.; Wang, X. Object-oriented land use/cover classification based on texture features of Landsat 8 OLI image. Trans. Chin. Soc. Agric. Eng. 2018, 34, 248–255.

- Regniers, O.; Bombrun, L.; Lafon, V.; Germain, C. Supervised classification of very high resolution optical images using wavelet-based textural features. IEEE Trans. Geosci. Remote 2016, 54, 3722–3735.

- Wen, X.; Jia, M.; Li, X.; Wang, Z.; Zhong, C.; Feng, E. Identification of mangrove canopy species based on visible unmanned aerial vehicle images. J. Fores. Environ. 2020, 40, 486–496.

- Wang, Y.; Fan, W.; Liu, C. An object-based fusion of QUICKBIRD data and RADARSAT SAR data for classification analysis. J. Northeast Fores. Univ. 2016, 44, 44–49.

- Wu, Z.; Mao, Z.; Wang, Z.; Qiu, Y.; Shen, W. Shallow water depth retrieval from multi-scale multi-spectral satellite data: Take Sentinel-2A and Resource 3 as an example. Map. Spa. Geogr. Inform. 2019, 42, 12–16.

- Zhao, F.; Wu, X.; Wang, S. Object-oriented Vegetation Classification Method based on UAV and Satellite Image Fusion. Procedia Comput. Sci. 2020, 174, 609–615.

- Nie, Q.; Qi, K.; Zhao, Y. Object-oriented classification of high resolution image combining super-pixel segmentation. Bull. Surv. Map. 2021, 6, 44–49.

- Wan, J.; Zang, J.; Liu, S. Fusion and classification of SAR and optical image with consideration of polarization characteristics. Acta Opt. Sin. 2017, 37, 292–301.

- Weng, Y.; Tian, Q. Analysis and Evaluation of Method on Remote Sensing Data Fusion. Remote Sens. Inform. 2003, 3, 49–54.

- Li, W.; Jiang, N.; Ge, G. Analysis of Spectral Characteristics Based on Optical Remote Sensing and SAR Image Fusion. Agric. Sci. Technol. 2014, 15, 2035–2038+2040.

| Evaluation Indicators | Formula | Reference |
|---|---|---|
| Mean Value | | [6] |
| Standard Deviation | | [8] |
| Average Gradient | | [25] |
| Correlation Coefficient | | [25] |
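
The formulas for these four indicators did not survive in the table above, so the following is only a minimal sketch based on the definitions commonly used in image fusion evaluation; it is an assumption that the cited references use the same forms. The function names, the nested-list image representation, and the two small sample images are hypothetical and chosen for illustration only.

```python
import math

def flatten(img):
    """Return all pixel values of a 2-D image (list of rows) as one list."""
    return [v for row in img for v in row]

def mean_value(img):
    """Average brightness of the image."""
    px = flatten(img)
    return sum(px) / len(px)

def standard_deviation(img):
    """Population standard deviation of the pixel values."""
    px = flatten(img)
    mu = sum(px) / len(px)
    return math.sqrt(sum((v - mu) ** 2 for v in px) / len(px))

def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over forward differences (assumed form)."""
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))

def correlation_coefficient(fused, reference):
    """Pearson correlation between a fused image and a reference image."""
    f, r = flatten(fused), flatten(reference)
    mf, mr = sum(f) / len(f), sum(r) / len(r)
    cov = sum((a - mf) * (b - mr) for a, b in zip(f, r))
    var_f = sum((a - mf) ** 2 for a in f)
    var_r = sum((b - mr) ** 2 for b in r)
    return cov / math.sqrt(var_f * var_r)

# Hypothetical 3 x 3 images; the reference is exactly twice the fused image.
fused = [[10, 12, 14], [11, 13, 15], [12, 14, 16]]
reference = [[20, 24, 28], [22, 26, 30], [24, 28, 32]]

print(mean_value(fused))
print(standard_deviation(fused))
print(average_gradient(fused))
print(correlation_coefficient(fused, reference))
```

Under these definitions, a larger standard deviation and average gradient suggest more contrast and sharper detail, while a correlation coefficient close to 1 suggests that the fused image preserves the spectral pattern of the reference image.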

| Texture Feature Indicators | Formula | Reference |
|---|---|---|
| Homogeneity | | [28] |
| Entropy | | [28] |
| Angular Second Moment | | [28] |
| Contrast | | [28] |
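
The four texture indicators are typically derived from a gray-level co-occurrence matrix (GLCM). Since the formulas are missing from the table above, the sketch below uses the standard GLCM definitions as an assumption; the pixel offset, the symmetric normalization, the natural logarithm in the entropy term, and the function names `glcm` and `texture_features` are illustrative choices, not settings confirmed by the source.

```python
import math

def glcm(img, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one pixel offset.

    img holds integer gray levels in the range [0, levels).
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(img), len(img[0])
    for i in range(rows):
        for j in range(cols):
            ni, nj = i + dy, j + dx
            if 0 <= ni < rows and 0 <= nj < cols:
                a, b = img[i][j], img[ni][nj]
                counts[a][b] += 1.0
                counts[b][a] += 1.0  # count each pair in both directions
    total = sum(sum(row) for row in counts)
    return [[c / total for c in row] for row in counts]

def texture_features(p):
    """Homogeneity, entropy, angular second moment and contrast of a GLCM."""
    hom = ent = asm = con = 0.0
    n = len(p)
    for i in range(n):
        for j in range(n):
            v = p[i][j]
            hom += v / (1.0 + (i - j) ** 2)
            asm += v * v
            con += (i - j) ** 2 * v
            if v > 0:
                ent -= v * math.log(v)  # natural log is an assumption
    return {"homogeneity": hom, "entropy": ent, "asm": asm, "contrast": con}

# Hypothetical 2 x 2 patches with two gray levels.
uniform = texture_features(glcm([[1, 1], [1, 1]], levels=2))
checker = texture_features(glcm([[0, 1], [1, 0]], levels=2))
print(uniform)
print(checker)
```

A perfectly uniform patch gives homogeneity and angular second moment of 1 with zero entropy and contrast, while the alternating patch lowers homogeneity and raises contrast. This matches the usual reading of these indicators: smooth, regular objects score high on homogeneity and low on contrast.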

| Scale of Fusion | Mean Value | Standard Deviation | Average Gradient | Correlation Coefficient |
|---|---|---|---|---|
| 2 m × 2 m | 160.98 | 78.60 | 1.81 | 0.95 |
| 8 m × 8 m | 161.01 | 82.93 | 2.97 | 0.96 |
| 16 m × 16 m | 161.15 | 83.01 | 4.55 | 0.97 |
| 24 m × 24 m | 165.03 | 83.59 | 6.13 | 0.85 |

| Vegetation Type | Homogeneity | Entropy | Angular Second Moment | Contrast |
|---|---|---|---|---|
| winter wheat | 0.80 | 1.39 | 0.31 | 0.66 |
| rape | 0.72 | 1.12 | 0.32 | 0.70 |
| other vegetation | 0.60 | 1.27 | 0.21 | 1.33 |

| Classification Combinations | Training Sample | Image | Winter Wheat (hm²) | Rape (hm²) | Other Vegetation (hm²) |
|---|---|---|---|---|---|
| Combination One | SFI | RI16m | 22,783 | 2995 | 7386 |
| Combination Two | SFI | FI16m | 21,117 | 3069 | 7239 |
| Combination Three | SRI | RI16m | 23,148 | 3360 | 5835 |

| Classification Combinations | Training Sample | Image | Accuracy | Water (%) | Buildings and Roads (%) | Winter Wheat (%) | Rape (%) | Other Vegetation (%) | Overall Accuracy (%) | Kappa Coefficient |
|---|---|---|---|---|---|---|---|---|---|---|
| Combination One | SFI | RI16m | Producer Accuracy | 90.00 | 92.00 | 97.78 | 85.00 | 91.43 | 92.22 | 0.90 |
| | | | User Accuracy | 96.43 | 93.88 | 91.67 | 89.47 | 88.89 | | |
| Combination Two | SFI | FI16m | Producer Accuracy | 93.33 | 96.00 | 97.78 | 90.00 | 91.43 | 94.44 | 0.93 |
| | | | User Accuracy | 100 | 90.57 | 100 | 85.71 | 94.12 | | |
| Combination Three | SRI | RI16m | Producer Accuracy | 83.33 | 86.00 | 93.33 | 70.00 | 80.00 | 84.44 | 0.80 |
| | | | User Accuracy | 83.33 | 82.70 | 91.30 | 73.68 | 84.85 | | |
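
Producer accuracy, user accuracy, overall accuracy and the kappa coefficient reported above are all conventionally derived from a confusion matrix. The sketch below shows that derivation under the usual definitions; the confusion matrices behind the table are not given here, so the two-class matrix is hypothetical, and the orientation (rows as reference samples, columns as classified results) and the function name `accuracy_metrics` are assumptions.

```python
def accuracy_metrics(matrix):
    """Accuracy statistics from a square confusion matrix.

    Assumed orientation: matrix[i][j] is the number of samples whose
    reference class is i and whose classified class is j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diagonal = [matrix[i][i] for i in range(n)]

    # Producer accuracy: correct samples / reference samples of that class.
    producer = [diagonal[i] / row_totals[i] for i in range(n)]
    # User accuracy: correct samples / samples classified as that class.
    user = [diagonal[i] / col_totals[i] for i in range(n)]

    overall = sum(diagonal) / total
    # Chance agreement expected from the row and column totals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(n)) / total ** 2
    kappa = (overall - expected) / (1.0 - expected)
    return {"producer": producer, "user": user,
            "overall": overall, "kappa": kappa}

# Hypothetical two-class confusion matrix (not data from the study).
result = accuracy_metrics([[45, 5],
                           [10, 40]])
print(result)
```

In this toy case 85 of 100 samples are classified correctly, and the kappa coefficient is lower than the overall accuracy because it discounts the agreement expected by chance.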


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, W.; Zhang, H.; Li, W.; Ma, T. Extraction of Winter Wheat Planting Area Based on Multi-Scale Fusion. Remote Sens. 2023, 15, 164. https://doi.org/10.3390/rs15010164
