Comparative Assessment of Five Machine Learning Algorithms for Supervised Object-Based Classification of Submerged Seagrass Beds Using High-Resolution UAS Imagery

Abstract

1. Introduction

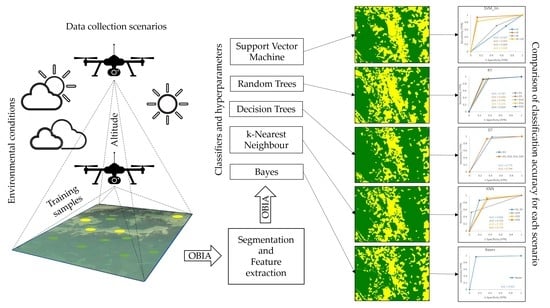

2. Materials and Methods

2.1. Data Collection and Processing

2.1.1. Study Area

2.1.2. UAS Flights

2.1.3. Environmental Conditions during UAS Flights

2.1.4. Production of Georeferenced Orthomosaics

2.2. Object-Based Image Analysis

2.2.1. Experimental Setup

2.2.2. Image Segmentation

2.2.3. Training and Validation Sample Selection

2.2.4. Feature Space

2.2.5. Classifiers and Hyperparameter Tuning

2.3. Accuracy Assessment

3. Results

3.1. Image Segmentation

3.2. Training and Validation Sample Selection

3.3. Feature Space

3.4. Supervised Classification

3.4.1. Classifier Performance with Optimal Hyperparameter Settings

3.4.2. Sensitivity to Hyperparameter Tuning

4. Discussion

4.1. Object-Based Image Analysis Workflow

4.2. Classifier Performance

4.3. Recommendations for Classifier Application and Related Hyperparameter Settings

4.4. Limitations

4.5. Implications for Coastal Conservation Efforts

4.6. Future Recommendations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Scenario | Level 1 | Level 2 | Level 3 |
|---|---|---|---|
| 100 m_fav | 31 | 51 | 501 |
| 30 m_fav | 91 | 101 | 1521 |
| 100 m_unfav | 61 | 91 | 701 |
| 30 m_unfav | 59 | 91 | 1701 |

| | 100 m_fav: Seagrass | 100 m_fav: Sand | 30 m_fav: Seagrass | 30 m_fav: Sand | 100 m_unfav: Seagrass | 100 m_unfav: Sand | 30 m_unfav: Seagrass | 30 m_unfav: Sand |
|---|---|---|---|---|---|---|---|---|
| Areal cover | 67.5% | 32.5% | 57.6% | 42.4% | 61.7% | 38.3% | 51.1% | 48.9% |
| Small sample (50) | 34 | 16 | 29 | 21 | 31 | 19 | 26 | 24 |
| Large sample (250) | 169 | 81 | 144 | 106 | 154 | 96 | 128 | 122 |
| Validation | 298 | 143 | 283 | 208 | 292 | 183 | 257 | 245 |
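The training sample counts in the table above are consistent with proportional allocation: each class appears to receive a share of the 50- or 250-sample total equal to its areal cover, rounded half up to a whole sample. The Python sketch below reproduces the training rows under that assumption; the helper `allocate_samples`, the round-half-up rule, and letting the last class absorb the rounding remainder are illustrative choices, not taken from the study. The validation counts do not all follow this rule (475 × 61.7% gives 293 rather than 292 for 100 m_unfav), so the sketch covers the training samples only.

```python
from decimal import Decimal, ROUND_HALF_UP


def allocate_samples(total, cover_pct):
    """Split `total` training samples across classes by areal cover.

    Hypothetical helper (assumption, not the study's code).
    `cover_pct` maps class name -> areal cover in percent, passed as a string
    so that Decimal keeps the value exact (e.g. "67.5"). Every class except
    the last is rounded half up; the last class receives the remainder so the
    counts always sum to `total`.
    """
    names = list(cover_pct)
    counts = {}
    for name in names[:-1]:
        share = Decimal(total) * Decimal(cover_pct[name]) / 100
        counts[name] = int(share.quantize(Decimal(1), rounding=ROUND_HALF_UP))
    counts[names[-1]] = total - sum(counts.values())
    return counts


# Areal cover per image, copied from the table above.
AREAL_COVER = {
    "100 m_fav": {"Seagrass": "67.5", "Sand": "32.5"},
    "30 m_fav": {"Seagrass": "57.6", "Sand": "42.4"},
    "100 m_unfav": {"Seagrass": "61.7", "Sand": "38.3"},
    "30 m_unfav": {"Seagrass": "51.1", "Sand": "48.9"},
}

if __name__ == "__main__":
    # Print the small (50) and large (250) training allocations per image.
    for image, cover in AREAL_COVER.items():
        for total in (50, 250):
            print(image, total, allocate_samples(total, cover))
```

Exact decimal arithmetic is used because binary floating point can place a value such as 25.55 just below the halfway point and round it the wrong way; assigning the remainder to the last class keeps each allocation summing to the requested total.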

| Scenario | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| Image | 100 m_fav | 100 m_fav | 30 m_fav | 30 m_fav | 100 m_unfav | 100 m_unfav | 30 m_unfav | 30 m_unfav |
| Samples | 250 | 50 | 250 | 50 | 250 | 50 | 250 | 50 |
| Dimensions | 12 | 9 | 14 | 8 | 9 | 3 | 11 | 10 |
| Separation distance | 0.65 | 1.12 | 1.49 | 1.61 | 0.12 | 0.63 | 0.17 | 0.23 |
| Features | Mean Layer 2, GLCM Homogen., Max. Diff., GLCM Correlat., Mean Layer 1, GLCM Entropy, Mean Layer 3, GLCM Mean, St. Dev. Layer 3, Brightness, St. Dev. Layer 2, St. Dev. Layer 1 | GLCM Correlat., Mean Layer 2, GLCM Homogen., Max. Diff., Mean Layer 1, GLCM Entropy, GLCM Mean, Mean Layer 3, Brightness | St. Dev. Layer 1, Mean Layer 2, GLCM Homogen., GLCM Correlat., Mean Layer 1, GLCM Entropy, Max. Diff., GLCM Mean, St. Dev. Layer 3, St. Dev. Layer 2, Brightness, GLCM Dissimilar., GLCM StdDev, Mean Layer 3 | Mean Layer 1, GLCM Homogen., Mean Layer 2, Max. Diff., GLCM Mean, GLCM Entropy, Brightness, GLCM Correlat. | GLCM Homogen., GLCM Correlat., Max. Diff., GLCM Mean, St. Dev. Layer 2, Mean Layer 1, GLCM Entropy, St. Dev. Layer 1, Mean Layer 2 | GLCM Correlat., St. Dev. Layer 2, St. Dev. Layer 3 | Mean Layer 1, Max. Diff., GLCM StdDev, Mean Layer 2, St. Dev. Layer 1, GLCM Mean, GLCM Correlat., GLCM Homogen., Brightness, St. Dev. Layer 2, GLCM Entropy | Mean Layer 2, Mean Layer 1, Max. Diff., GLCM StdDev, GLCM Mean, Brightness, GLCM Correlat., St. Dev. Layer 1, St. Dev. Layer 2, GLCM Entropy |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Thomasberger, A.; Nielsen, M.M.; Flindt, M.R.; Pawar, S.; Svane, N. Comparative Assessment of Five Machine Learning Algorithms for Supervised Object-Based Classification of Submerged Seagrass Beds Using High-Resolution UAS Imagery. Remote Sens. 2023, 15, 3600. https://doi.org/10.3390/rs15143600

Thomasberger A, Nielsen MM, Flindt MR, Pawar S, Svane N. Comparative Assessment of Five Machine Learning Algorithms for Supervised Object-Based Classification of Submerged Seagrass Beds Using High-Resolution UAS Imagery. Remote Sensing. 2023; 15(14):3600. https://doi.org/10.3390/rs15143600

Chicago/Turabian Style: Thomasberger, Aris, Mette Møller Nielsen, Mogens Rene Flindt, Satish Pawar, and Niels Svane. 2023. "Comparative Assessment of Five Machine Learning Algorithms for Supervised Object-Based Classification of Submerged Seagrass Beds Using High-Resolution UAS Imagery" Remote Sensing 15, no. 14: 3600. https://doi.org/10.3390/rs15143600

APA Style: Thomasberger, A., Nielsen, M. M., Flindt, M. R., Pawar, S., & Svane, N. (2023). Comparative Assessment of Five Machine Learning Algorithms for Supervised Object-Based Classification of Submerged Seagrass Beds Using High-Resolution UAS Imagery. Remote Sensing, 15(14), 3600. https://doi.org/10.3390/rs15143600