A Remote Sensing Image Fusion Method Combining Low-Level Visual Features and Parameter-Adaptive Dual-Channel Pulse-Coupled Neural Network

Abstract

1. Introduction

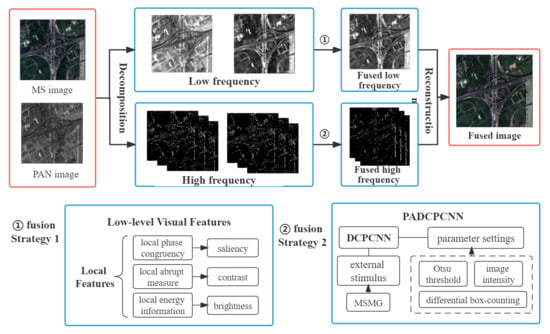

- (1) Low-frequency sub-band fusion based on low-level visual features (LLVF). Fusion rules for the low-frequency sub-band are usually built on a single local feature, which cannot effectively extract the feature information of the image. Because the human visual system understands an image through low-level visual features such as saliency, contrast, and brightness, a fusion rule that better matches the visual characteristics of the human eye is constructed by combining three local features: local phase congruency, local abrupt measure, and local energy information.

- (2) High-frequency sub-band fusion based on the parameter-adaptive dual-channel pulse-coupled neural network (PADCPCNN). Because the multi-scale morphological gradient (MSMG) integrates gradient information at multiple scales, it is used as the external stimulus of the dual-channel pulse-coupled neural network (DCPCNN), thereby enhancing the spatial correlation of DCPCNN. The DCPCNN parameters are represented adaptively by differences in the box dimension, the Otsu threshold, and the image intensity, which avoids complex manual parameter setting.

- (3) A remote sensing image fusion method combining LLVF and PADCPCNN. Combining the two fusion strategies above yields a novel fusion method in the non-subsampled shearlet transform (NSST) domain that more fully considers energy preservation and detail extraction in remote sensing images. To verify its effectiveness, five sets of remote sensing image data from different platforms and ground objects are used to compare the proposed method with 16 other methods, and the results are analyzed both qualitatively and quantitatively.
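To make the MSMG stimulus mentioned in contribution (2) more concrete, here is a minimal NumPy sketch. It assumes the commonly used definition in which the morphological gradient (dilation minus erosion) is computed at several scales and summed with weights 1/(2t+1); the flat square structuring element, the number of scales, the edge padding, and the function names are illustrative assumptions, not details taken from this paper.

```python
import numpy as np


def _windows(img, radius):
    """Return an (H, W, k, k) view of all k x k neighbourhoods (k = 2*radius + 1).

    The image is edge-padded so the output keeps the input size (assumed border rule).
    """
    padded = np.pad(img, radius, mode="edge")
    k = 2 * radius + 1
    return np.lib.stride_tricks.sliding_window_view(padded, (k, k))


def morphological_gradient(img, radius):
    """Grey-scale dilation minus erosion with a flat square structuring element."""
    win = _windows(img, radius)
    return win.max(axis=(-2, -1)) - win.min(axis=(-2, -1))


def msmg(img, scales=3):
    """Multi-scale morphological gradient: weighted sum of gradients at scales 1..scales.

    The weight 1 / (2t + 1) for scale t is an assumed (commonly used) choice.
    """
    img = np.asarray(img, dtype=np.float64)
    out = np.zeros_like(img)
    for t in range(1, scales + 1):
        out += morphological_gradient(img, t) / (2 * t + 1)
    return out


if __name__ == "__main__":
    step = np.zeros((8, 8))
    step[:, 4:] = 1.0          # vertical step edge
    print(msmg(step).round(3))  # response is largest next to the edge
```

In a pipeline like the one described here, a map of this kind would be computed for each high-frequency sub-band and fed to the corresponding DCPCNN channel as its external input.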

2. Methodology

2.1. Low-Level Visual Features

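- Phase congruency is a dimensionless measure, often used in image edge detection, which measures the saliency of image features well. The phase congruency at position $(x, y)$ can be expressed as

  $$PC(x,y)=\frac{\sum_{k}\sqrt{\left(\sum_{n}e_{n,\theta_k}(x,y)\right)^{2}+\left(\sum_{n}o_{n,\theta_k}(x,y)\right)^{2}}}{\varepsilon+\sum_{n}\sum_{k}A_{n,\theta_k}(x,y)}$$

  where $\varepsilon$ is a positive constant, $\theta_k$ is the orientation angle at $k$, and $A_{n,\theta_k}$ is the amplitude of the $n$-th Fourier component at angle $\theta_k$. $e_{n,\theta_k}(x,y)$ and $o_{n,\theta_k}(x,y)$ are the convolution results of the input image at $(x,y)$:

  $$\left[e_{n,\theta_k}(x,y),\; o_{n,\theta_k}(x,y)\right]=\left[I(x,y) * M_n^{e},\; I(x,y) * M_n^{o}\right]$$

  where $I(x,y)$ represents the pixel value at $(x,y)$, and $M_n^{e}$ and $M_n^{o}$ are two-dimensional Log-Gabor even-symmetric and odd-symmetric filters with scale $n$, respectively.

- Local abrupt measure mainly reflects the contrast information of the image and compensates for the insensitivity of phase congruency to image contrast. The expression of the abrupt measure is

  $$SCM(x,y)=\sum_{(x_0,y_0)\in\Omega_0}\left(I(x,y)-I(x_0,y_0)\right)^{2}$$

  where $\Omega_0$ represents a local region of size 3 × 3, and $(x_0,y_0)$ is a pixel in the local region $\Omega_0$. To calculate the contrast of $(x,y)$ in the neighborhood range $(2M+1)\times(2N+1)$, the local abrupt measure is proposed, and its expression is

  $$LSCM(x,y)=\sum_{a=-M}^{M}\sum_{b=-N}^{N}SCM(x+a,\,y+b)$$

- Local energy information reflects the intensity of image brightness variation, and over the same $(2M+1)\times(2N+1)$ neighborhood its equation can be expressed as

  $$LE(x,y)=\sum_{a=-M}^{M}\sum_{b=-N}^{N}\left(I(x+a,\,y+b)\right)^{2}$$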
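The two window-based features above (local abrupt measure and local energy) can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the abrupt measure is taken as the sum of squared differences between a pixel and its 3 × 3 neighbours, the first array axis is paired with `M` and the second with `N`, and the border handling (edge padding for the 3 × 3 step, zero padding for the window sums) is a choice made here rather than something the text specifies. Phase congruency is omitted because it needs a Log-Gabor filter bank.

```python
import numpy as np

_view = np.lib.stride_tricks.sliding_window_view


def box_sum(arr, M, N):
    """Sum over a (2M+1) x (2N+1) window centred on each pixel (zero-padded borders)."""
    padded = np.pad(arr, ((M, M), (N, N)), mode="constant")
    return _view(padded, (2 * M + 1, 2 * N + 1)).sum(axis=(-2, -1))


def abrupt_measure(img):
    """Sum of squared differences between each pixel and its 3 x 3 neighbourhood."""
    img = np.asarray(img, dtype=np.float64)
    win = _view(np.pad(img, 1, mode="edge"), (3, 3))      # shape (H, W, 3, 3)
    return ((win - img[..., None, None]) ** 2).sum(axis=(-2, -1))


def local_abrupt_measure(img, M=1, N=1):
    """Abrupt measure accumulated over a (2M+1) x (2N+1) neighbourhood."""
    return box_sum(abrupt_measure(img), M, N)


def local_energy(img, M=1, N=1):
    """Sum of squared pixel values over a (2M+1) x (2N+1) neighbourhood."""
    return box_sum(np.asarray(img, dtype=np.float64) ** 2, M, N)


if __name__ == "__main__":
    spot = np.zeros((5, 5))
    spot[2, 2] = 1.0                      # a single bright pixel
    print(abrupt_measure(spot))
    print(local_abrupt_measure(spot))
    print(local_energy(spot))
```

A low-frequency fusion rule would then combine these maps with phase congruency for each source sub-band and pick, per pixel, the coefficient with the larger combined activity.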

2.2. PADCPCNN

2.3. NSST-LLVF-PADCPCNN Method

2.4. Steps

- One luminance component $Y$ and two chromaticity components $U$ and $V$ are obtained by the YUV space transform of the multispectral image.

- The luminance component $Y$ and the preprocessed panchromatic image are each decomposed at multiple scales by the NSST, yielding their low-frequency sub-bands and their high-frequency sub-bands, the latter indexed by decomposition level and direction.

- The low-frequency sub-bands are fused with the low-level visual feature strategy according to Equations (6)–(8), giving the fused low-frequency sub-band coefficients.

- The high-frequency sub-bands are fused with the PADCPCNN model according to Equations (9)–(14), giving the fused high-frequency sub-band coefficients.

- The new luminance component is obtained by the inverse NSST of the fused low-frequency and high-frequency coefficients.

- The fused image is then obtained by the inverse YUV space transform of the new luminance component and the chromaticity components $U$ and $V$.
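The outer shell of these steps (forward YUV transform, luminance replacement, inverse YUV transform) can be sketched as follows. Several things here are assumptions: the multispectral image is taken to be a three-band RGB array already resampled to the panchromatic grid, the BT.601 YUV coefficients are used because the text does not say which YUV matrix applies, and `fuse_luminance_placeholder` is a hypothetical stand-in (a plain average) for the NSST decomposition, LLVF fusion, PADCPCNN fusion, and inverse NSST, none of which are implemented here.

```python
import numpy as np

# BT.601 RGB -> YUV matrix (assumed; the source does not name a specific YUV variant).
RGB_TO_YUV = np.array([
    [0.299, 0.587, 0.114],
    [-0.14713, -0.28886, 0.436],
    [0.615, -0.51499, -0.10001],
])
YUV_TO_RGB = np.linalg.inv(RGB_TO_YUV)


def rgb_to_yuv(rgb):
    """Forward YUV transform of an (H, W, 3) array."""
    return np.asarray(rgb, dtype=np.float64) @ RGB_TO_YUV.T


def yuv_to_rgb(yuv):
    """Inverse YUV transform of an (H, W, 3) array."""
    return np.asarray(yuv, dtype=np.float64) @ YUV_TO_RGB.T


def fuse_luminance_placeholder(y, pan):
    """HYPOTHETICAL stand-in for the NSST-domain fusion steps: a plain average."""
    return 0.5 * (y + pan)


def fuse_sketch(ms_rgb, pan, fuse=fuse_luminance_placeholder):
    """Replace the luminance of the multispectral image and transform back."""
    yuv = rgb_to_yuv(ms_rgb)                               # Y, U, V components
    y_new = fuse(yuv[..., 0], np.asarray(pan, dtype=np.float64))
    fused_yuv = np.concatenate([y_new[..., None], yuv[..., 1:]], axis=-1)
    return yuv_to_rgb(fused_yuv)                           # chromaticity U, V unchanged


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ms = rng.random((4, 4, 3))
    pan = rng.random((4, 4))
    print(fuse_sketch(ms, pan).shape)
```

Passing a different `fuse` callable is the natural extension point: any function that maps the luminance and the panchromatic image to a new luminance of the same shape can be dropped in.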

3. Experimental Design

3.1. Experimental Data

3.2. Compared Methods

3.3. Evaluation Indexes

- IE: It measures the amount of information contained in the fused image; the greater the information entropy, the richer the information. The mathematical expression of IE is

  $$IE=-\sum_{i=0}^{L-1}p_i\log_2 p_i$$

  where $L$ is the number of gray levels of the image and $p_i$ is the statistical probability of gray level $i$ in the gray histogram.

- MI: It measures the extent to which the fused image acquires information from the original image. A larger MI indicates that more information is retained from the original image. MI is computed from the joint grayscale histogram of the fused image and the reference image, together with the length and width of the image and the resolution of the image.

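- AG: It measures the clarity of the image; the greater the AG, the higher the clarity and the better the fusion quality. For an image $F$ of size $M \times N$, the mathematical expression of AG is

  $$AG=\frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\Delta F_x^{2}(i,j)+\Delta F_y^{2}(i,j)}{2}}$$

  where $\Delta F_x$ and $\Delta F_y$ represent the first-order differences of the image in the x- and y-directions, respectively.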

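- SF: It reflects the rate of grayscale change and the activity level of the image. A larger SF indicates a clearer image and higher fusion quality. The mathematical expression of SF is

  $$SF=\sqrt{RF^{2}+CF^{2}}$$

  where $RF$ and $CF$ represent the row spatial frequency and column spatial frequency of image $F$, respectively. For an image of size $M \times N$ they can be expressed as

  $$RF=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=2}^{N}\left(F(i,j)-F(i,j-1)\right)^{2}},\qquad CF=\sqrt{\frac{1}{MN}\sum_{i=2}^{M}\sum_{j=1}^{N}\left(F(i,j)-F(i-1,j)\right)^{2}}$$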

- SCC: It reflects the spatial correlation between the two images; the larger the correlation coefficient, the better the fusion effect.

- SD: It measures the spectral difference between the fused image and the reference image. The larger the SD, the more severe the spectral distortion.

- SAM: It measures the similarity between spectra by calculating the angle between two spectral vectors. The smaller the angle, the more similar the two spectra. The mathematical expression of SAM is

  $$SAM(v,\hat{v})=\arccos\left(\frac{\langle v,\hat{v}\rangle}{\lVert v\rVert_2\,\lVert\hat{v}\rVert_2}\right)$$

  where $v$ is the spectral pixel vector of the original image, and $\hat{v}$ is the spectral pixel vector of the fused image.

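- ERGAS: It reflects the degree of spectral distortion between the fused image and the reference image. The larger the ERGAS, the more severe the spectral distortion. The mathematical expression of ERGAS is

  $$ERGAS=100\,\frac{h}{l}\sqrt{\frac{1}{N}\sum_{i=1}^{N}\frac{RMSE^{2}(i)}{\mu^{2}(i)}}$$

  where $h/l$ is the ratio of the resolution of the panchromatic image to that of the multispectral image, $N$ denotes the number of bands, $RMSE(i)$ is the root-mean-square error between band $i$ of the fused image and of the reference image, and $\mu(i)$ denotes the mean value of band $i$ of the image.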

- Q4: It is a global evaluation index based on the hypercomplex correlation coefficient between the reference image and the fused image, which jointly measures spectral and spatial distortion. Its specific definition is detailed in reference [48].

- VIFF: It measures the fidelity of visual information between the fused image and each source image, based on the Gaussian scale mixture model, the distortion model, and the HVS model. Its specific definition is detailed in reference [49].
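Several of the indexes above have simple closed forms, so a short NumPy sketch may help when reproducing the evaluation. It covers only IE, AG, SF, SAM, and ERGAS, and it rests on assumptions that the text does not fix: 8-bit integer gray levels for IE, averaging over the valid first-order differences for AG, SAM reported in degrees and averaged over pixels, and band means taken from the reference image for ERGAS. Published implementations differ in these normalization details, so absolute values may not match the table.

```python
import numpy as np


def information_entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram of an integer image."""
    hist = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = hist / hist.sum()
    p = p[p > 0]                                   # 0 * log(0) is treated as 0
    return float(-(p * np.log2(p)).sum())


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) over all valid first-order differences."""
    f = np.asarray(img, dtype=np.float64)
    dx = f[:-1, 1:] - f[:-1, :-1]                  # difference along columns (x)
    dy = f[1:, :-1] - f[:-1, :-1]                  # difference along rows (y)
    return float(np.sqrt((dx ** 2 + dy ** 2) / 2.0).mean())


def spatial_frequency(img):
    """sqrt(RF^2 + CF^2) with row/column differences normalized by the pixel count."""
    f = np.asarray(img, dtype=np.float64)
    rf2 = ((f[:, 1:] - f[:, :-1]) ** 2).sum() / f.size
    cf2 = ((f[1:, :] - f[:-1, :]) ** 2).sum() / f.size
    return float(np.sqrt(rf2 + cf2))


def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle (degrees) between (H, W, B) reference and fused images."""
    r = np.asarray(ref, dtype=np.float64).reshape(-1, np.shape(ref)[-1])
    f = np.asarray(fused, dtype=np.float64).reshape(-1, np.shape(fused)[-1])
    cos = (r * f).sum(axis=1) / (np.linalg.norm(r, axis=1) * np.linalg.norm(f, axis=1) + eps)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean())


def ergas(ref, fused, ratio):
    """ERGAS for (H, W, B) images; `ratio` is the PAN-to-MS resolution ratio h/l."""
    r = np.asarray(ref, dtype=np.float64)
    f = np.asarray(fused, dtype=np.float64)
    rmse2 = ((r - f) ** 2).mean(axis=(0, 1))       # per-band squared RMSE
    mu2 = r.mean(axis=(0, 1)) ** 2                 # per-band squared mean (reference)
    return float(100.0 * ratio * np.sqrt((rmse2 / mu2).mean()))


if __name__ == "__main__":
    ramp = np.tile(np.arange(4.0), (4, 1))         # gray value increases along x
    print(average_gradient(ramp), spatial_frequency(ramp))
```

IE, AG, and SF need only the fused image, whereas SAM and ERGAS need a reference image with the same shape and band order as the fused result.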

4. Experimental Results and Analysis

4.1. Qualitative Evaluation

4.2. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ghassemian, H. A review of remote sensing image fusion methods. Inf. Fusion 2016, 32, 75–89.

- Arienzo, A.; Alparone, L.; Garzelli, A.; Lolli, S. Advantages of Nonlinear Intensity Components for Contrast-Based Multispectral Pansharpening. Remote Sens. 2022, 14, 3301.

- Kulkarni, S.C.; Rege, P.P. Pixel level fusion techniques for SAR and optical images: A review. Inf. Fusion 2020, 59, 13–29.

- Shao, Z.; Wu, W.; Guo, S. IHS-GTF: A fusion method for optical and synthetic aperture radar data. Remote Sens. 2020, 12, 2796.

- Batur, E.; Maktav, D. Assessment of surface water quality by using satellite images fusion based on PCA method in the Lake Gala, Turkey. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2983–2989.

- Quan, Y.; Tong, Y.; Feng, W.; Dauphin, G.; Huang, W.; Xing, M. A novel image fusion method of multi-spectral and sar images for land cover classification. Remote Sens. 2020, 12, 3801.

- Zhao, R.; Du, S. An Encoder–Decoder with a Residual Network for Fusing Hyperspectral and Panchromatic Remote Sensing Images. Remote Sens. 2022, 14, 1981.

- Wu, Y.; Feng, S.; Lin, C.; Zhou, H.; Huang, M. A Three Stages Detail Injection Network for Remote Sensing Images Pansharpening. Remote Sens. 2022, 14, 1077.

- Nair, R.R.; Singh, T. Multi-sensor medical image fusion using pyramid-based DWT: A multi-resolution approach. IET Image Process. 2019, 13, 1447–1459.

- Aishwarya, N.; Thangammal, C.B. Visible and infrared image fusion using DTCWT and adaptive combined clustered dictionary. Infrared Phys. Technol. 2018, 93, 300–309.

- Arif, M.; Wang, G. Fast curvelet transform through genetic algorithm for multimodal medical image fusion. Soft Comput. 2020, 24, 1815–1836.

- Wang, Z.; Li, X.; Duan, H.; Su, Y.; Zhang, X.; Guan, X. Medical image fusion based on convolutional neural networks and non-subsampled contourlet transform. Expert Syst. Appl. 2021, 171, 114574.

- Li, B.; Peng, H.; Luo, X.; Wang, J.; Song, X.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. Medical image fusion method based on coupled neural P systems in nonsubsampled shearlet transform domain. Int. J. Neural Syst. 2021, 31, 2050050.

- Chen, J.; Li, X.; Luo, L.; Mei, X.; Ma, J. Infrared and visible image fusion based on target-enhanced multiscale transform decomposition. Inf. Sci. 2020, 508, 64–78.

- Panigrahy, C.; Seal, A.; Mahato, N.K. Fractal dimension based parameter adaptive dual channel PCNN for multi-focus image fusion. Opt. Lasers Eng. 2020, 133, 106141.

- Jin, X.; Jiang, Q.; Yao, S.; Zhou, D.; Nie, R.; Lee, S.J.; He, K. Infrared and visual image fusion method based on discrete cosine transform and local spatial frequency in discrete stationary wavelet transform domain. Infrared Phys. Technol. 2018, 88, 1–12.

- Xu, Y.; Sun, B.; Yan, X.; Hu, J.; Chen, M. Multi-focus image fusion using learning based matting with sum of the Gaussian-based modified Laplacian. Digit. Signal Process. 2020, 106, 102821.

- Zhang, Y.; Jin, M.; Huang, G. Medical image fusion based on improved multi-scale morphology gradient-weighted local energy and visual saliency map. Biomed. Signal Process. Control 2022, 74, 103535.

- Khademi, G.; Ghassemian, H. Incorporating an adaptive image prior model into Bayesian fusion of multispectral and panchromatic images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 917–921.

- Wang, T.; Fang, F.; Li, F.; Zhang, G. High-quality Bayesian pansharpening. IEEE Trans. Image Process. 2018, 28, 227–239.

- Liu, Y.; Wang, Z. Simultaneous image fusion and denoising with adaptive sparse representation. IET Image Process. 2014, 9, 347–357.

- Deng, L.; Feng, M.; Tai, X. The fusion of panchromatic and multispectral remote sensing images via tensor-based sparse modeling and hyper-Laplacian prior. Inf. Fusion 2019, 52, 76–89.

- Huang, C.; Tian, G.; Lan, Y.; Peng, Y.; Ng, E.Y.K.; Hao, Y.; Che, W. A new pulse coupled neural network (PCNN) for brain medical image fusion empowered by shuffled frog leaping algorithm. Front. Neurosci. 2019, 13, 210.

- Yin, M.; Duan, P.; Liu, W.; Liang, X. A novel infrared and visible image fusion algorithm based on shift-invariant dual-tree complex shearlet transform and sparse representation. Neurocomputing 2017, 226, 182–191.

- Liu, Z.; Feng, Y.; Zhang, Y.; Li, X. A fusion algorithm for infrared and visible images based on RDU-PCNN and ICA-bases in NSST domain. Infrared Phys. Technol. 2016, 79, 183–190.

- Cheng, B.; Jin, L.; Li, G. Infrared and visual image fusion using LNSST and an adaptive dual-channel PCNN with triple-linking strength. Neurocomputing 2018, 310, 135–147.

- Li, H.; Qiu, H.; Yu, Z.; Zhang, Y. Infrared and visible image fusion scheme based on NSCT and low-level visual features. Infrared Phys. Technol. 2016, 76, 174–184.

- Zhang, Y.; Bai, X.; Wang, T. Boundary finding based multi-focus image fusion through multi-scale morphological focus-measure. Inf. Fusion 2017, 35, 81–101.

- Panigrahy, C.; Seal, A.; Mahato, N.K.; Bhattacharjee, D. Differential box counting methods for estimating fractal dimension of gray-scale images: A survey. Chaos Solitons Fractals 2019, 126, 178–202.

- Yin, M.; Liu, X.; Liu, Y.; Chen, X. Medical Image Fusion With Parameter-Adaptive Pulse Coupled Neural Network in Nonsubsampled Shearlet Transform Domain. IEEE Trans. Instrum. Meas. 2018, 68, 49–64.

- Chen, Y.; Park, S.K.; Ma, Y.; Ala, R. A New Automatic Parameter Setting Method of a Simplified PCNN for Image Segmentation. IEEE Trans. Neural Netw. 2011, 22, 880–892.

- Panigrahy, C.; Seal, A.; Mahato, N.K.; Krejcar, O.; Viedma, E.H. Multi-focus image fusion using fractal dimension. Appl. Opt. 2020, 59, 5642–5655.

- Singh, P.; Shankar, A.; Diwakar, M. Review on nontraditional perspectives of synthetic aperture radar image despeckling. J. Electron. Imaging 2022, 32, 021609.

- Singh, P.; Diwakar, M.; Shankar, A.; Shree, R.; Kumar, M. A Review on SAR Image and its Despeckling. Arch. Comput. Methods Eng. 2021, 28, 4633–4653.

- Liu, Y.; Liu, S.P.; Wang, Z.F. A general framework for image fusion based on multi-scale transform and sparse representation. Inf. Fusion 2015, 24, 147–164.

- Liu, Y.; Chen, X.; Peng, H.; Wang, Z. Multi-focus image fusion with a deep convolutional neural network. Inf. Fusion 2017, 36, 191–207.

- Sufyan, A.; Imran, M.; Shah, S.A.; Shahwani, H.; Wadood, A.A. A novel multimodality anatomical image fusion method based on contrast and structure extraction. Int. J. Imaging Syst. Technol. 2021, 32, 324–342.

- Du, Q.; Xu, H.; Ma, Y.; Huang, J.; Fan, F. Fusing infrared and visible images of different resolutions via total variation model. Sensors 2018, 18, 3827.

- Chen, J.; Li, X.; Wu, K. Infrared and visible image fusion based on relative total variation decomposition. Infrared Phys. Technol. 2022, 123, 104112.

- Liu, Y.; Chen, X.; Ward, R.K.; Wang, Z.J. Image Fusion With Convolutional Sparse Representation. IEEE Signal Process. Lett. 2016, 23, 1882–1886.

- Liu, Y.; Chen, X.; Ward, R.K.; Wang, Z.J. Medical Image Fusion via Convolutional Sparsity Based Morphological Component Analysis. IEEE Signal Process. Lett. 2019, 26, 485–489.

- Jian, L.; Yang, X.; Zhou, Z.; Zhou, K.; Liu, K. Multi-scale image fusion through rolling guidance filter. Future Gener. Comput. Syst. 2018, 83, 310–325.

- Tan, W.; Zhou, H.; Song, J.; Li, H.; Yu, Y.; Du, J. Infrared and visible image perceptive fusion through multi-level Gaussian curvature filtering image decomposition. Appl. Opt. 2019, 58, 3064–3073.

- Ma, J.; Zhou, Z.; Wang, B.; Zong, H. Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Phys. Technol. 2017, 82, 8–17.

- Tan, W.; Tiwari, P.; Pandey, H.M.; Moreira, C.; Jaiswal, A.K. Multimodal medical image fusion algorithm in the era of big data. Neural Comput. Appl. 2020, 1–21.

- Cheng, F.; Fu, Z.; Huang, L.; Chen, P.; Huang, K. Non-subsampled shearlet transform remote sensing image fusion combined with parameter-adaptive PCNN. Acta Geod. Cartogr. Sin. 2021, 50, 1380–1389.

- Hou, Z.; Lv, K.; Gong, X.; Zhi, J.; Wang, N. Remote sensing image fusion based on low-level visual features and PAPCNN in NSST domain. Geomat. Inf. Sci. Wuhan Univ. 2022. accepted.

- Garzelli, A.; Nencini, F. Hypercomplex quality assessment of multi/hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 662–665.

- Han, Y.; Cai, Y.; Cao, Y.; Xu, X. A new image fusion performance metric based on visual information fidelity. Inf. Fusion 2013, 14, 127–135.

| Image | Method | IE↑ | MI↑ | AG↑ | SF↑ | SCC↑ | SD↓ | SAM↓ | ERGAS↓ | Q4↑ | VIFF↑ |
|---|---|---|---|---|---|---|---|---|---|---|---|
| QuickBird | Curvelet | 3.988 | 7.977 | 1.505 | 3.208 | 0.894 | 2.338 | 2.747 | 10.673 | 0.853 | 0.587 |
| | DTCWT | 4.005 | 8.010 | 1.541 | 3.318 | 0.893 | 2.438 | 2.870 | 11.224 | 0.870 | 0.591 |
| | CNN | 3.812 | 7.625 | 1.524 | 3.288 | 0.865 | 4.214 | 7.550 | 24.836 | 0.923 | 0.444 |
| | CSE | 3.739 | 7.479 | 1.495 | 3.284 | 0.849 | 4.338 | 7.096 | 26.453 | 0.719 | 0.332 |
| | ASR | 3.799 | 7.599 | 0.822 | 2.025 | 0.881 | 2.297 | 2.430 | 10.998 | 0.899 | 0.470 |
| | CSR | 3.944 | 7.888 | 1.149 | 2.632 | 0.900 | 2.268 | 2.572 | 10.363 | 0.861 | 0.561 |
| | CSMCA | 4.019 | 8.037 | 1.330 | 2.962 | 0.888 | 2.385 | 2.903 | 10.626 | 0.398 | 0.631 |
| | TVM | 3.704 | 7.408 | 0.948 | 1.918 | 0.870 | 4.131 | 6.561 | 24.153 | 0.720 | 0.348 |
| | RTVD | 3.109 | 6.217 | 0.734 | 1.560 | 0.858 | 5.120 | 4.057 | 38.677 | 0.883 | 0.182 |
| | RGF | 4.051 | 8.102 | 1.558 | 3.318 | 0.862 | 2.049 | 1.841 | 8.213 | 0.749 | 0.559 |
| | MLGCF | 4.017 | 8.026 | 1.521 | 3.228 | 0.895 | 2.284 | 2.734 | 10.099 | 0.881 | 0.631 |
| | VSM-WLS | 4.042 | 8.085 | 1.583 | 3.401 | 0.896 | 2.439 | 2.909 | 10.687 | 0.801 | 0.655 |
| | EA-DCPCNN | 3.977 | 7.954 | 1.451 | 3.122 | 0.892 | 2.517 | 2.691 | 11.469 | 0.864 | 0.620 |
| | EA-PAPCNN | 4.007 | 8.015 | 1.524 | 3.322 | 0.888 | 2.560 | 2.940 | 12.127 | 0.884 | 0.520 |
| | WLE-PAPCNN | 4.262 | 8.514 | 1.552 | 3.359 | 0.884 | 1.720 | 1.746 | 7.025 | 0.892 | 0.715 |
| | LLVF-PAPCNN | 4.308 | 8.616 | 1.598 | 3.382 | 0.884 | 1.268 | 1.857 | 5.442 | 0.860 | 0.899 |
| | Proposed | 4.319 | 8.639 | 1.633 | 3.487 | 0.899 | 1.242 | 1.637 | 5.396 | 0.938 | 0.948 |
| SPOT-6 | Curvelet | 3.620 | 7.200 | 0.763 | 1.562 | 0.964 | 2.001 | 2.890 | 11.129 | 0.861 | 0.550 |
| | DTCWT | 3.622 | 7.213 | 0.787 | 1.666 | 0.965 | 2.036 | 3.089 | 11.415 | 0.869 | 0.564 |
| | CNN | 3.273 | 6.546 | 0.776 | 1.631 | 0.929 | 3.526 | 5.881 | 16.812 | 0.837 | 0.337 |
| | CSE | 3.601 | 5.931 | 0.677 | 1.447 | 0.957 | 1.991 | 3.133 | 10.730 | 0.734 | 0.641 |
| | ASR | 3.600 | 7.202 | 0.350 | 0.925 | 0.952 | 1.920 | 2.799 | 10.650 | 0.851 | 0.461 |
| | CSR | 3.533 | 7.066 | 0.475 | 1.194 | 0.963 | 1.937 | 2.452 | 10.985 | 0.816 | 0.501 |
| | CSMCA | 3.584 | 7.168 | 0.590 | 1.398 | 0.963 | 1.970 | 2.720 | 11.010 | 0.804 | 0.609 |
| | TVM | 3.038 | 6.076 | 0.436 | 0.999 | 0.937 | 3.227 | 4.933 | 29.552 | 0.561 | 0.260 |
| | RTVD | 2.736 | 5.472 | 0.355 | 0.845 | 0.957 | 4.550 | 6.344 | 44.935 | 0.280 | 0.192 |
| | RGF | 3.468 | 6.937 | 0.792 | 1.655 | 0.926 | 2.222 | 3.403 | 12.057 | 0.658 | 0.452 |
| | MLGCF | 3.625 | 7.251 | 0.772 | 1.579 | 0.964 | 1.919 | 2.839 | 10.160 | 0.842 | 0.589 |
| | VSM-WLS | 3.632 | 7.264 | 0.794 | 1.664 | 0.966 | 1.944 | 3.060 | 10.343 | 0.777 | 0.581 |
| | EA-DCPCNN | 3.618 | 7.235 | 0.709 | 1.541 | 0.967 | 1.741 | 3.037 | 10.340 | 0.698 | 0.604 |
| | EA-PAPCNN | 3.638 | 7.276 | 0.779 | 1.661 | 0.965 | 1.969 | 3.109 | 8.625 | 0.718 | 0.541 |
| | WLE-PAPCNN | 4.002 | 8.004 | 0.799 | 1.695 | 0.966 | 0.848 | 2.108 | 3.883 | 0.815 | 0.842 |
| | LLVF-PAPCNN | 4.021 | 8.042 | 0.809 | 1.712 | 0.967 | 0.630 | 1.965 | 3.441 | 0.814 | 0.958 |
| | Proposed | 4.023 | 8.047 | 0.813 | 1.712 | 0.968 | 0.601 | 1.903 | 3.328 | 0.878 | 0.954 |
| WorldView-2 | Curvelet | 5.385 | 10.771 | 2.667 | 4.778 | 0.920 | 8.608 | 0.704 | 4.095 | 0.781 | 0.563 |
| | DTCWT | 5.409 | 10.818 | 2.802 | 5.040 | 0.917 | 8.707 | 0.736 | 4.146 | 0.792 | 0.594 |
| | CNN | 5.209 | 10.417 | 2.796 | 5.037 | 0.869 | 16.035 | 1.629 | 6.942 | 0.727 | 0.519 |
| | CSE | 5.083 | 10.166 | 2.784 | 5.019 | 0.823 | 16.337 | 1.379 | 9.168 | 0.731 | 0.471 |
| | ASR | 5.685 | 11.370 | 1.362 | 2.961 | 0.875 | 7.203 | 0.787 | 3.273 | 0.801 | 0.723 |
| | CSR | 5.360 | 10.720 | 1.977 | 3.804 | 0.876 | 8.605 | 0.700 | 4.047 | 0.795 | 0.530 |
| | CSMCA | 5.386 | 10.771 | 2.450 | 4.560 | 0.918 | 8.786 | 0.744 | 4.116 | 0.792 | 0.553 |
| | TVM | 4.935 | 9.869 | 1.202 | 2.309 | 0.846 | 16.284 | 1.318 | 9.156 | 0.711 | 0.360 |
| | RTVD | 4.234 | 8.469 | 1.208 | 2.167 | 0.911 | 38.444 | 0.615 | 41.154 | 0.413 | 0.120 |
| | RGF | 5.116 | 10.234 | 2.799 | 5.041 | 0.827 | 8.729 | 0.868 | 4.081 | 0.754 | 0.474 |
| | MLGCF | 4.413 | 10.820 | 2.627 | 4.709 | 0.921 | 8.433 | 0.690 | 3.975 | 0.817 | 0.580 |
| | VSM-WLS | 5.439 | 10.878 | 2.742 | 4.914 | 0.919 | 8.735 | 0.751 | 4.109 | 0.804 | 0.629 |
| | EA-DCPCNN | 5.456 | 10.912 | 2.619 | 4.754 | 0.920 | 10.205 | 0.478 | 4.995 | 0.783 | 0.635 |
| | EA-PAPCNN | 5.435 | 10.873 | 2.809 | 5.057 | 0.916 | 8.629 | 0.743 | 3.590 | 0.807 | 0.635 |
| | WLE-PAPCNN | 5.735 | 11.470 | 2.823 | 5.079 | 0.916 | 2.898 | 0.451 | 1.969 | 0.884 | 0.594 |
| | LLVF-PAPCNN | 5.758 | 11.516 | 2.839 | 5.102 | 0.909 | 3.059 | 0.464 | 1.482 | 0.879 | 0.809 |
| | Proposed | 5.760 | 11.521 | 2.839 | 5.103 | 0.923 | 3.020 | 0.458 | 1.441 | 0.893 | 0.833 |
| WorldView-3 | Curvelet | 3.870 | 7.740 | 0.777 | 1.631 | 0.979 | 2.407 | 9.160 | 14.849 | 0.641 | 0.535 |
| | DTCWT | 3.874 | 7.748 | 0.788 | 1.661 | 0.978 | 2.124 | 9.257 | 14.891 | 0.632 | 0.546 |
| | CNN | 3.277 | 6.555 | 0.651 | 1.534 | 0.956 | 4.683 | 22.300 | 49.153 | 0.357 | 0.383 |
| | CSE | 3.290 | 6.793 | 0.632 | 1.455 | 0.959 | 4.632 | 21.682 | 49.419 | 0.341 | 0.205 |
| | ASR | 3.564 | 7.129 | 0.418 | 1.103 | 0.970 | 3.531 | 13.961 | 26.933 | 0.501 | 0.370 |
| | CSR | 3.774 | 7.548 | 0.531 | 1.331 | 0.978 | 2.340 | 7.571 | 14.489 | 0.695 | 0.534 |
| | CSMCA | 3.790 | 7.579 | 0.609 | 1.525 | 0.975 | 2.333 | 7.758 | 13.868 | 0.756 | 0.683 |
| | TVM | 3.229 | 6.459 | 0.449 | 1.180 | 0.963 | 4.640 | 23.004 | 49.765 | 0.409 | 0.283 |
| | RTVD | 3.049 | 6.097 | 0.398 | 0.993 | 0.962 | 4.846 | 6.014 | 43.436 | 0.395 | 0.258 |
| | RGF | 4.062 | 8.123 | 0.846 | 1.808 | 0.966 | 1.473 | 3.908 | 6.551 | 0.627 | 0.826 |
| | MLGCF | 3.887 | 7.774 | 0.792 | 1.695 | 0.978 | 2.241 | 8.799 | 12.765 | 0.668 | 0.618 |
| | VSM-WLS | 3.876 | 7.751 | 0.804 | 1.742 | 0.977 | 2.219 | 8.674 | 12.388 | 0.726 | 0.644 |
| | EA-DCPCNN | 3.674 | 7.528 | 0.680 | 1.582 | 0.975 | 2.558 | 5.498 | 14.371 | 0.706 | 0.645 |
| | EA-PAPCNN | 3.857 | 7.715 | 0.767 | 1.590 | 0.977 | 2.289 | 8.698 | 13.656 | 0.628 | 0.504 |
| | WLE-PAPCNN | 4.092 | 8.185 | 0.805 | 1.659 | 0.972 | 0.807 | 2.735 | 4.078 | 0.691 | 0.488 |
| | LLVF-PAPCNN | 4.094 | 8.188 | 0.850 | 1.815 | 0.972 | 0.614 | 2.495 | 3.570 | 0.758 | 0.929 |
| | Proposed | 4.101 | 8.202 | 0.851 | 1.855 | 0.981 | 0.518 | 2.484 | 2.574 | 0.759 | 0.943 |
| Pleiades | Curvelet | 4.089 | 8.177 | 1.276 | 2.582 | 0.822 | 2.484 | 2.030 | 8.004 | 0.579 | 0.453 |
| | DTCWT | 4.116 | 8.231 | 1.330 | 2.720 | 0.824 | 2.571 | 2.121 | 8.206 | 0.827 | 0.458 |
| | CNN | 4.522 | 9.043 | 1.350 | 2.732 | 0.756 | 1.932 | 2.806 | 6.370 | 0.830 | 0.657 |
| | CSE | 3.586 | 7.172 | 1.265 | 2.608 | 0.736 | 4.707 | 3.529 | 16.998 | 0.561 | 0.148 |
| | ASR | 3.929 | 7.858 | 0.679 | 1.773 | 0.784 | 2.341 | 1.954 | 8.183 | 0.807 | 0.446 |
| | CSR | 4.037 | 8.073 | 0.911 | 2.120 | 0.820 | 2.416 | 1.953 | 7.875 | 0.826 | 0.454 |
| | CSMCA | 4.191 | 8.383 | 1.094 | 2.343 | 0.812 | 2.384 | 2.069 | 7.477 | 0.752 | 0.611 |
| | TVM | 3.478 | 6.956 | 0.704 | 1.424 | 0.746 | 4.532 | 3.349 | 16.335 | 0.713 | 0.163 |
| | RTVD | 3.194 | 6.388 | 0.616 | 1.318 | 0.766 | 6.873 | 2.668 | 35.893 | 0.699 | 0.158 |
| | RGF | 4.048 | 8.096 | 1.367 | 2.719 | 0.772 | 3.817 | 3.471 | 9.207 | 0.728 | 0.488 |
| | MLGCF | 4.135 | 8.271 | 1.282 | 2.587 | 0.821 | 2.344 | 1.979 | 7.241 | 0.764 | 0.508 |
| | VSM-WLS | 4.157 | 8.314 | 1.345 | 2.727 | 0.827 | 2.567 | 2.191 | 7.670 | 0.825 | 0.504 |
| | EA-DCPCNN | 4.195 | 8.390 | 1.242 | 2.620 | 0.814 | 2.209 | 1.950 | 7.369 | 0.810 | 0.600 |
| | EA-PAPCNN | 4.135 | 8.269 | 1.309 | 2.688 | 0.819 | 2.608 | 2.174 | 8.162 | 0.836 | 0.408 |
| | WLE-PAPCNN | 4.408 | 8.818 | 1.349 | 2.753 | 0.785 | 2.008 | 2.321 | 5.020 | 0.840 | 0.641 |
| | LLVF-PAPCNN | 4.523 | 9.047 | 1.427 | 2.872 | 0.791 | 1.404 | 1.941 | 3.907 | 0.864 | 0.915 |
| | Proposed | 4.532 | 9.064 | 1.433 | 2.911 | 0.832 | 1.404 | 1.930 | 3.901 | 0.867 | 0.931 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hou, Z.; Lv, K.; Gong, X.; Wan, Y. A Remote Sensing Image Fusion Method Combining Low-Level Visual Features and Parameter-Adaptive Dual-Channel Pulse-Coupled Neural Network. Remote Sens. 2023, 15, 344. https://doi.org/10.3390/rs15020344
