Generalized Stereo Matching Method Based on Iterative Optimization of Hierarchical Graph Structure Consistency Cost for Urban 3D Reconstruction

Abstract

1. Introduction

- (1) Images from different time phases or different satellites show obvious radiometric differences and small ground-feature differences, both of which interfere with the correspondence of matching points. Radiometric differences produce inconsistent grayscale distributions on the same target surface, while small ground features such as vehicles, trees, and ponds differ between time phases.

- (2) Satellite images of urban scenes are more complex than those of natural scenes. Flat ground and building roofs are usually texture-less, and the similar intensity values of their pixels make matching ambiguous.

- (3) Because satellites image at high speed, the baselines of satellite images are longer than those of aerial images, which causes serious viewpoint differences and numerous occlusions between satellite stereo image pairs. A building shows different façades from different observation angles, and parts of the scene are occluded. In addition, the disparity usually changes sharply at the junction of a roof and a façade, or of a roof and the ground, which produces disparity discontinuity regions.

- (1) The constructed graph structure consistency (GSC) cost is applicable to stereo matching between image pairs acquired from different observation angles by different satellites or in different time phases, which makes it easier to obtain stereo information for a region. In addition, adaptively combining multi-scale GSC costs improves the matching of texture-less regions to some extent.

- (2) An iterative optimization process based on a visibility term and a disparity discontinuity term is proposed to continuously detect occlusions and optimize boundary disparities, which improves the matching of occluded and disparity discontinuity regions to some extent.

2. Study Data and Preprocessing

2.1. Study Data

2.2. Preprocessing

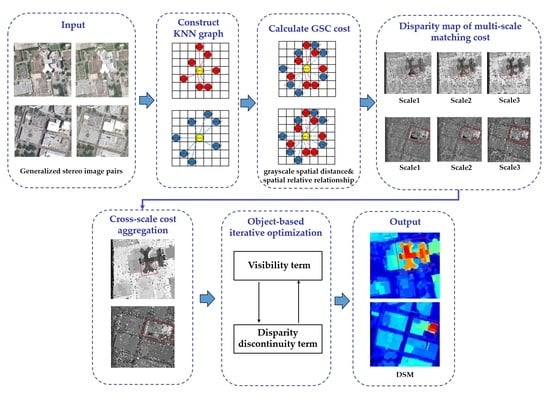

3. Methodology

3.1. Hierarchical Graph Structure Consistency Cost Construction

3.1.1. Graph Structure

3.1.2. Constructing Graph Structure Consistency Cost

3.1.3. Cross-Scale Cost Aggregation

3.2. Object-Based Iterative Optimization

3.2.1. Iterative Optimization Based on Visibility Term and Disparity Discontinuity Term

3.2.2. Disparity Refinement

3.3. IOHGSCM

| Algorithm 1 IOHGSCM |

| Input: Epipolar-constrained left image I_left and right image I_right |

| Output: Disparity map d_IOHGSCM and digital surface model DSM_IOHGSCM |

| 1 /* Hierarchical graph structure consistency cost construction */ |

| 2 Obtain left-view KNN graph and right-view KNN graph by setting ws and K |

| 3 Obtain graph structure consistency cost (left→right) and (left←right) |

| 4 Fuse the (left→right) and (left←right) graph structure consistency costs |

| 5 Truncated and weighted combination of GSC cost and gradient cost |

| 6 Obtain multi-scale cost |

| 7 Add a generalized Tikhonov regularizer into Equation (18) |

| 8 Obtain multi-scale cost aggregation result |

| 9 /* Object-based iterative update optimization */ |

| 10 Obtain superpixel segmentation cost function F(s,d) by SLIC |

| 11 for 1 ≤ t + 1 ≤ nInter do |

| Set |

| Compute , |

| Compute |

| end |

| 12 Optimize disparity map using fractal net evolution |
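Step 2 of Algorithm 1 builds a KNN graph from a window size ws and a neighbour count K. The following is a minimal sketch of one plausible reading, not the authors' implementation: it assumes that the neighbours of a pixel are the K pixels inside its ws × ws window whose intensity is closest to the centre pixel. The function name `knn_in_window` and the absolute-intensity similarity measure are assumptions made for illustration.

```python
import numpy as np


def knn_in_window(image, row, col, ws=13, k=101):
    """Return (rows, cols) of the k pixels in the ws x ws window around
    (row, col) whose intensity is closest to the centre pixel.

    Assumption: similarity is the absolute intensity difference to the
    centre pixel; the window is clipped at the image border.
    """
    half = ws // 2
    r0, r1 = max(row - half, 0), min(row + half + 1, image.shape[0])
    c0, c1 = max(col - half, 0), min(col + half + 1, image.shape[1])
    window = image[r0:r1, c0:c1].astype(np.float64)

    # Absolute intensity difference of every window pixel to the centre.
    diff = np.abs(window - float(image[row, col])).ravel()

    # Exclude the centre pixel itself from its own neighbour list.
    centre = (row - r0) * (c1 - c0) + (col - c0)
    diff[centre] = np.inf

    # Near the border the window may hold fewer than k candidates.
    k = min(k, diff.size - 1)
    order = np.argsort(diff, kind="stable")[:k]
    rr, cc = np.unravel_index(order, window.shape)
    return rr + r0, cc + c0
```

Calling the function once per pixel in the left view and once per pixel in the right view would give the two graphs that the later steps compare. The defaults mirror the first-scale values in the parameter table (ws = 13, K = 101).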

4. Results

4.1. Evaluation Metrics
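The result tables report RMSE and NMAD in metres. As a minimal sketch, assuming the standard definitions (RMSE as the root of the mean squared height error, NMAD as 1.4826 times the median absolute deviation of the errors from their median), the two metrics could be computed as below. The function names and the handling of non-finite pixels are assumptions for illustration.

```python
import numpy as np


def _height_errors(dsm, reference):
    """Per-pixel height error, ignoring pixels without a finite value."""
    err = np.asarray(dsm, dtype=np.float64) - np.asarray(reference, dtype=np.float64)
    return err[np.isfinite(err)]


def rmse(dsm, reference):
    """Root mean square error of the heights."""
    err = _height_errors(dsm, reference)
    return float(np.sqrt(np.mean(err ** 2)))


def nmad(dsm, reference):
    """Normalized median absolute deviation of the height errors."""
    err = _height_errors(dsm, reference)
    return float(1.4826 * np.median(np.abs(err - np.median(err))))
```

NMAD is far less sensitive to a few gross errors than RMSE, which is why the two values are usually read together.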

4.2. Effectiveness of Hierarchical Graph Structure Consistency Cost Construction

4.3. Effectiveness of Object-Based Iterative Optimization

4.4. Comparison with State-of-the-Art

5. Discussion

5.1. Comparison of Algorithm Time Consumption

5.2. Parameter Selection for KNN Graph

5.3. Evaluation of IOHGSCM

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset | Sensor | Date | Characteristics |
|---|---|---|---|
| Dataset 1 | SuperView-1/GF-2 | 4 May 2020; 2 April 2020 | Radiometric inconsistency |
| Dataset 2 | WorldView-3 | 21 May 2015; 15 June 2015 | Disparity discontinuities and occlusions |
| Dataset 3 | WorldView-3 | 21 May 2015; 15 June 2015 | Texture-less |
| Dataset 4 | WorldView-3 | 21 May 2015; 15 June 2015 | Repetitive patterns |
| Dataset 5 | WorldView-3 | 21 May 2015; 15 June 2015 | Disparity discontinuities and occlusions |
| Dataset 6 | WorldView-3 | 18 October 2014; 30 October 2014 | Disparity discontinuities and occlusions |
| Dataset 7 | WorldView-3 | 18 October 2014; 30 October 2014 | Texture-less |

| Description | Value |
|---|---|
| Number of similar pixels (K) at each scale of the multi-scale graph structure | 101, 101, 84 |
| Window size (ws) at each scale of the multi-scale graph structure | 13, 13, 13 |
| Truncation parameter of GSC cost | 4.0 |
| Truncation parameter of gradient cost | 2.0 |
| Scale parameter of GSC cost | 0.6 |
| Scale parameter of gradient cost | 0.4 |
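Step 5 of Algorithm 1 combines the GSC cost and the gradient cost after truncation. The following sketch assumes the common form in which each cost is clipped at its truncation parameter and the two are then summed with the scale parameters as weights. This form, the function name `combine_costs`, and the argument names are assumptions; the defaults are the values listed in the parameter table.

```python
import numpy as np


def combine_costs(gsc_cost, grad_cost,
                  tau_gsc=4.0, tau_grad=2.0,
                  w_gsc=0.6, w_grad=0.4):
    """Truncated, weighted sum of a GSC cost and a gradient cost.

    Assumption: cost = w_gsc * min(gsc, tau_gsc) + w_grad * min(grad, tau_grad).
    """
    # Clip each cost so that outliers cannot dominate the combined cost.
    gsc = np.minimum(np.asarray(gsc_cost, dtype=np.float64), tau_gsc)
    grad = np.minimum(np.asarray(grad_cost, dtype=np.float64), tau_grad)
    return w_gsc * gsc + w_grad * grad
```

Truncation bounds the penalty that any single pixel can contribute, which limits the influence of occluded or mismatched pixels on the cost volume.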

RMSE/NMAD (m) by method:

| Dataset | GSC + Gradient | Census + Gradient | Color + Gradient |
|---|---|---|---|
| Dataset 1 | 12.16/8.98 | 14.08/11.68 | 14.65/12.04 |
| Dataset 2 | 8.13/5.38 | 8.84/5.93 | 9.42/7.36 |
| Dataset 3 | 9.61/7.20 | 12.46/8.44 | 13.94/9.32 |
| Dataset 4 | 4.36/3.99 | 4.81/4.49 | 4.59/4.48 |
| Dataset 5 | 4.13/3.00 | 4.78/3.56 | 5.39/4.91 |
| Dataset 6 | 8.27/6.74 | 10.46/9.18 | 11.03/9.75 |
| Dataset 7 | 4.64/3.81 | 5.52/4.27 | 5.91/4.83 |

RMSE/NMAD (m) by scale:

| Dataset | Scale 1 | Scale 2 | Scale 3 | Aggregation |
|---|---|---|---|---|
| Dataset 2 | 8.13/5.38 | 7.02/4.36 | 7.94/5.07 | 6.04/3.28 |
| Dataset 3 | 9.61/7.20 | 8.33/5.91 | 9.29/6.75 | 7.17/4.87 |
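Steps 6 to 8 of Algorithm 1 aggregate the per-scale costs under a generalized Tikhonov regularizer. The sketch below assumes the usual cross-scale objective, in which the regularized costs z stay close to the per-scale costs c while adjacent scales are pulled together: minimize Σ_s (z_s − c_s)² + λ Σ_{s≥1} (z_s − z_{s−1})². Setting the gradient to zero gives a small tridiagonal linear system that is shared by every pixel and disparity. The objective, the function name, and the value of `lam` are assumptions, and the per-scale costs are assumed to have been resampled to a common shape beforehand.

```python
import numpy as np


def cross_scale_aggregate(costs, lam=0.3):
    """Solve the inter-scale regularized least-squares problem.

    costs: array of shape (S, ...) holding the cost of each of S scales,
           already resampled to a common shape (assumption).
    lam:   regularization strength (hypothetical value).
    Returns an array of the same shape with the regularized costs.
    """
    costs = np.asarray(costs, dtype=np.float64)
    n = costs.shape[0]

    # Tridiagonal system from the zero-gradient condition of the objective.
    a = np.zeros((n, n))
    for s in range(n):
        neighbours = (s > 0) + (s < n - 1)
        a[s, s] = 1.0 + lam * neighbours
        if s > 0:
            a[s, s - 1] = -lam
        if s < n - 1:
            a[s, s + 1] = -lam

    # One solve handles all pixels and disparities at once.
    flat = costs.reshape(n, -1)
    return np.linalg.solve(a, flat).reshape(costs.shape)
```

With `lam = 0` the costs are returned unchanged, and as `lam` grows the scales converge to their mean. In the usual cross-scale scheme the finest-scale slice of the result would be passed on to disparity selection.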

RMSE/NMAD (m):

| Dataset | Before | After |
|---|---|---|
| Dataset 1 | 8.86/5.92 | 4.31/2.41 |
| Dataset 2 | 6.04/3.28 | 2.18/1.29 |
| Dataset 3 | 7.17/4.87 | 2.67/1.96 |
| Dataset 4 | 3.78/3.59 | 3.17/2.96 |
| Dataset 5 | 3.54/2.32 | 2.97/1.48 |
| Dataset 6 | 6.49/4.75 | 3.53/2.53 |
| Dataset 7 | 4.15/3.11 | 3.06/1.82 |

RMSE (m) by method:

| Dataset | IOHGSCM | ARWSM | SGM | MeshSM | FCVFSM | HMSMNet | DSMNet | StereoNet | BGNet |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | 4.31 | 5.12 | 6.56 | 6.46 | 5.61 | 6.6 | 5.96 | 7.14 | 5.71 |
| Dataset 2 | 2.18 | 2.62 | 2.86 | 3.05 | 6.24 | 2.6 | 3.71 | 3.72 | 2.34 |
| Dataset 3 | 2.67 | 3.4 | 4.78 | 4.13 | 4.63 | 4.22 | 5.68 | 6.3 | 2.59 |
| Dataset 4 | 3.17 | 3.31 | 3.68 | 3.88 | 3.73 | 3.93 | 3.25 | 3.76 | 3.49 |
| Dataset 5 | 2.97 | 3.19 | 3.89 | 3.55 | 3.32 | 3.43 | 4.19 | 4.07 | 3.41 |
| Dataset 6 | 3.53 | 4.05 | 4.92 | 4.77 | 4.58 | 6.79 | 3.94 | 4.83 | 4.46 |
| Dataset 7 | 3.06 | 3.28 | 3.67 | 3.23 | 4.12 | 3.35 | 4.38 | 5.01 | 3.54 |

NMAD (m) by method:

| Dataset | IOHGSCM | ARWSM | SGM | MeshSM | FCVFSM | HMSMNet | DSMNet | StereoNet | BGNet |
|---|---|---|---|---|---|---|---|---|---|
| Dataset 1 | 2.41 | 2.93 | 4.1 | 3.26 | 3.48 | 4.77 | 3.97 | 4.42 | 3.6 |
| Dataset 2 | 1.29 | 1.36 | 1.47 | 1.34 | 2.83 | 1.39 | 2.64 | 2.98 | 1.34 |
| Dataset 3 | 1.96 | 2.39 | 3.52 | 2.59 | 3.22 | 2.94 | 3.78 | 4.47 | 1.93 |
| Dataset 4 | 2.96 | 2.99 | 3.37 | 3.86 | 3.69 | 3.98 | 3.33 | 3.46 | 3.37 |
| Dataset 5 | 1.48 | 1.31 | 1.71 | 1.69 | 1.57 | 1.79 | 2.56 | 2.74 | 1.75 |
| Dataset 6 | 2.53 | 2.67 | 3.54 | 3.26 | 3.48 | 4.35 | 2.59 | 2.81 | 3.13 |
| Dataset 7 | 1.82 | 1.94 | 2.46 | 1.78 | 2.79 | 1.88 | 2.63 | 3.75 | 2.04 |

| Method | IOHGSCM | ARWSM | SGM | MeshSM | FCVFSM | HMSMNet | DSMNet | StereoNet | BGNet |
|---|---|---|---|---|---|---|---|---|---|
| Time (s) | 19.12 | 8.99 | 6.83 | 27.22 | 45.56 | 3.92 | 2.02 | 2.17 | 1.13 |

RMSE (m) by method:

| Target | IOHGSCM | ARWSM | SGM | MeshSM | FCVFSM | HMSMNet | DSMNet | StereoNet | BGNet |
|---|---|---|---|---|---|---|---|---|---|
| Target 1 | 5.81 | 7.02 | 8.26 | 9.35 | 7.91 | 9.26 | 11.08 | 8.99 | 8.18 |
| Target 2 | 3.47 | 4.46 | 5.13 | 4.31 | 5.41 | 4.63 | 5.11 | 6.53 | 4.92 |
| Target 3 | 5.52 | 8.82 | 9.41 | 15.73 | 16.49 | 19.02 | 22.67 | 22.75 | 5.58 |
| Target 4 | 2.47 | 2.78 | 2.69 | 3.45 | 5.61 | 2.81 | 3.04 | 2.79 | 2.58 |
| Target 5 | 2.21 | 2.33 | 2.61 | 2.54 | 2.46 | 3.28 | 5.58 | 4.12 | 2.37 |
| Target 6 | 2.63 | 4.38 | 3.56 | 4.17 | 3.14 | 3.84 | 3.62 | 7.25 | 5.31 |

NMAD (m) by method:

| Target | IOHGSCM | ARWSM | SGM | MeshSM | FCVFSM | HMSMNet | DSMNet | StereoNet | BGNet |
|---|---|---|---|---|---|---|---|---|---|
| Target 1 | 2.43 | 2.74 | 2.68 | 2.77 | 2.64 | 2.83 | 3.06 | 2.73 | 2.91 |
| Target 2 | 1.73 | 2.35 | 3.55 | 2.32 | 2.40 | 3.07 | 2.84 | 4.47 | 3.16 |
| Target 3 | 5.31 | 5.87 | 6.27 | 7.15 | 6.76 | 6.87 | 10.17 | 10.96 | 5.25 |
| Target 4 | 0.61 | 1.04 | 1.10 | 1.16 | 2.14 | 1.18 | 1.12 | 1.10 | 0.66 |
| Target 5 | 0.86 | 0.98 | 1.11 | 1.04 | 1.15 | 1.57 | 3.46 | 2.83 | 0.94 |
| Target 6 | 1.82 | 3.67 | 2.82 | 3.14 | 2.39 | 2.83 | 2.57 | 5.56 | 3.89 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, S.; Chen, H.; Chen, W. Generalized Stereo Matching Method Based on Iterative Optimization of Hierarchical Graph Structure Consistency Cost for Urban 3D Reconstruction. Remote Sens. 2023, 15, 2369. https://doi.org/10.3390/rs15092369
