Burned Area Mapping Using Support Vector Machines and the FuzCoC Feature Selection Method on VHR IKONOS Imagery

Abstract

1. Introduction

- to investigate whether the quality and accuracy of burned area maps produced by an SVM classifier increase with the addition of higher-order features to the original VHR IKONOS spectral bands, and

- to compare the object-oriented and pixel-based classification approaches in order to identify which is more appropriate for operational burned area mapping.

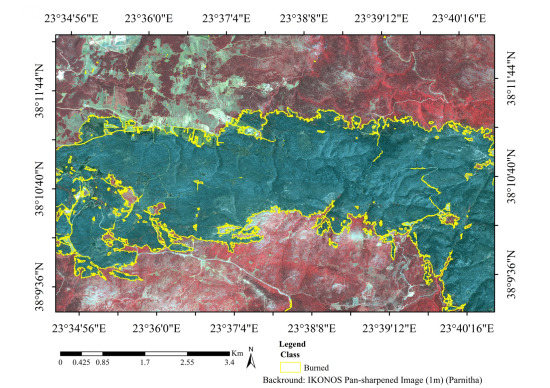

2. Study Area

3. Proposed Methodology

3.1. Step 1: Feature Generation for Pixel and Object-Based Classifications

3.1.1. Feature Sets for the Pixel-Based Classifications

| Feature Category | Window Sizes | Number of Features |
|---|---|---|
| Bands | - | 4 |
| Occurrence Measures (Mean, Entropy, Skewness, Variance) | 11 × 11, 15 × 15, 21 × 21 | 48 |
| Co-Occurrence Measures (Mean, Entropy, Homogeneity, Second moment, Variance, Dissimilarity, Correlation, Contrast) | 11 × 11, 15 × 15, 21 × 21 | 64 |
| LISA (Moran’s I, Getis-Ord Gi, Geary’s C) | 5 × 5 | 12 |
| PCA | - | 4 |
| IHS | - | 3 |
| Tasseled Cap | - | 3 |
| VIs (NDVI) | - | 1 |
| Band Ratio (BN = Blue/NIR) | - | 1 |
| Total | | 172 |
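The two simplest derived features in the table, NDVI and the Blue/NIR band ratio (BN), can be sketched per pixel as follows. This is a minimal illustration rather than the authors' processing chain: the function names, the handling of zero denominators, and the sample reflectance values are assumptions, and NDVI is taken in its standard form (NIR − Red)/(NIR + Red).

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one pixel."""
    total = nir + red
    # Assumption: return 0.0 where the denominator vanishes.
    return (nir - red) / total if total != 0 else 0.0


def bn_ratio(blue, nir):
    """Blue/NIR band ratio (BN) for one pixel."""
    # Assumption: return 0.0 where the NIR value is zero.
    return blue / nir if nir != 0 else 0.0


# Hypothetical reflectance values for a vegetated and a burned pixel.
vegetated = {"blue": 0.04, "red": 0.05, "nir": 0.45}
burned = {"blue": 0.06, "red": 0.10, "nir": 0.12}

for name, px in (("vegetated", vegetated), ("burned", burned)):
    print(
        name,
        round(ndvi(px["nir"], px["red"]), 3),
        round(bn_ratio(px["blue"], px["nir"]), 3),
    )
```

In this toy example the burned pixel has a lower NDVI and a higher BN than the vegetated one. Real values depend on the scene and on how the imagery was calibrated.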

3.1.2. Image Segmentation and Feature Extraction for Object-Based Classifications

| Feature Categories (eCognition Categorization) | Object Features | Number of Features |
|---|---|---|
| Customized (Indexes) | NDVI, NIR/Red, PC2/NIR, Blue/Red | 4 |
| Layer values | Mean, Standard Deviation, Skewness, Pixel-based, To-neighbors, To-scene, Ratio-to scene, Hue, Saturation, Intensity | 113 |
| Geometry | Density, Length and Width | 2 |
| Total | | 119 |
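As a rough illustration of the "Layer values" category, the sketch below computes the mean and standard deviation of one image layer for each segment. It does not reproduce eCognition's implementation: the function name, the flat input layout, the use of the population standard deviation, and the sample values are all assumptions.

```python
from collections import defaultdict
from statistics import mean, pstdev


def object_layer_stats(labels, values):
    """Return {segment_id: (mean, std)} for one image layer.

    labels and values are flat, equal-length sequences holding the
    segment id and the layer value of each pixel.
    """
    if len(labels) != len(values):
        raise ValueError("labels and values must have the same length")
    per_object = defaultdict(list)
    for seg_id, value in zip(labels, values):
        per_object[seg_id].append(value)
    # Assumption: population standard deviation; the exact definition
    # used by the segmentation software is not given in the text.
    return {seg: (mean(v), pstdev(v)) for seg, v in per_object.items()}


# Hypothetical 2 x 3 NIR layer flattened row by row, with two segments.
segment_ids = [1, 1, 2, 1, 2, 2]
nir_values = [10.0, 12.0, 30.0, 14.0, 34.0, 32.0]
stats = object_layer_stats(segment_ids, nir_values)
print(stats)
```

Each segment then contributes one feature vector to the classifier instead of one vector per pixel, which is the practical difference between the object-based and pixel-based feature sets.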

3.2. Step 2: Training Samples Selection for SVM Pixel- and Object-Based Classifications

3.2.1. Training Set for Pixel-Based Classifications

3.2.2. Training Set for Object-Based Classification

3.3. Step 3: Feature Selection for the Pixel and Object-Based Classifications

- IKONOSRGBNIR-PIXEL: The initial four bands of the IKONOS image.

- IKONOSFullSpace-PIXEL: All 172 available features considered at the pixel level.

- IKONOSFuzCoC-PIXEL: The features selected by the FuzCoC FS algorithm.

| Features Parnitha | Features Rhodes |
|---|---|
| PCA (Second PCA) | GLCM (Mean in the NIR band) (Window size 21 × 21) |
| Moran’s I (Blue) | Occurrence measures (Skewness in the NIR band) (Window size 15 × 15) |
| Occurrence measures (Skewness in the NIR band) (Window size 11 × 11) | GLCM (Correlation in the NIR band) (Window size 21 × 21) |
| GLCM (Mean in the NIR band) (Window size 15 × 15) | - |

| Features Parnitha | Features Rhodes |
|---|---|
| Ratio (Blue Band) | Ratio (Second PCA) |
| Max.Diff | Min Pixel value (RED Band) |
| Mean of outer border (NIR Band) | Mean of outer border (NIR Band) |
| - | Arithmetic Features (NIR/RED) |

- IKONOSOBJECT: All 119 available features considered for object-based classifications.

- IKONOSFuzCoC-OBJECT: The features selected by the FuzCoC FS algorithm.

3.4. Step 4: SVM Pixel and Object-Based Classification Models

- SVMFullSpace-PIXEL: The pixel-based classification map produced by applying the SVM to the dataset composed of all 172 features.

- SVMRGBNIR-PIXEL: The pixel-based classification map produced by applying the SVM on the original IKONOS image (four bands).

- SVMFuzCoC-PIXEL: The pixel-based classification map produced by applying the SVM on the augmented dataset including the higher-order features, after employing the FuzCoC FS methodology.

- SVMOBJECT: The object-based classification map produced by applying the SVM to the segmented image, using all 119 calculated object features.

- SVMFuzCoC-OBJECT: The object-based classification map produced by applying the SVM on the segmented image, after employing the FuzCoC FS methodology.

| Dataset | C (Parnitha) | γ (Parnitha) | C (Rhodes) | γ (Rhodes) |
|---|---|---|---|---|
| IKONOSRGBNIR-PIXEL | 128 | 0.125 | 32768 | $2^{3}$ |
| IKONOSFullSpace-PIXEL | 512 | 0.5 | 8 | 2 |
| IKONOSFuzCoC-PIXEL | 128 | 0.125 | 32768 | $2^{3}$ |
| IKONOSOBJECT | 2 | 0.5 | 8192 | $2^{-7}$ |
| IKONOSFuzCoC-OBJECT | 0.5 | 2 | 2048 | 0.5 |
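Every C and γ value in the table is an integer power of two, which is what an exponential grid search over the RBF-kernel parameters would produce. The sketch below checks this against a commonly suggested LIBSVM-style grid (C from 2^-5 to 2^15 and γ from 2^-15 to 2^3, both in exponent steps of 2). The grid itself is an assumption, since the search procedure is not described in this section, and the two γ entries for Rhodes that are not plain decimals are read here as 2^3 and 2^-7.

```python
import math

# (C, gamma) per dataset as listed in the table: Parnitha first, Rhodes second.
# Assumption: the non-decimal Rhodes gamma entries are read as 2**3 and 2**-7.
svm_params = {
    "IKONOSRGBNIR-PIXEL": [(128, 0.125), (32768, 2**3)],
    "IKONOSFullSpace-PIXEL": [(512, 0.5), (8, 2)],
    "IKONOSFuzCoC-PIXEL": [(128, 0.125), (32768, 2**3)],
    "IKONOSOBJECT": [(2, 0.5), (8192, 2**-7)],
    "IKONOSFuzCoC-OBJECT": [(0.5, 2), (2048, 0.5)],
}

# Assumed search grid (exponents of two); not stated in the text.
c_exponents = range(-5, 16, 2)
gamma_exponents = range(-15, 4, 2)


def power_of_two_exponent(value):
    """Return k such that value == 2**k, or raise if no integer k exists."""
    exponent = math.log2(value)
    if not exponent.is_integer():
        raise ValueError(f"{value} is not an integer power of two")
    return int(exponent)


# True only if every tabulated pair lies on the assumed grid.
on_grid = all(
    power_of_two_exponent(c) in c_exponents
    and power_of_two_exponent(g) in gamma_exponents
    for pairs in svm_params.values()
    for c, g in pairs
)
print(on_grid)
```

A check like this is mainly useful for catching transcription slips in parameter tables, since a value that falls off the grid is more likely a typo than a tuned result.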

4. Experimental Results

4.1. SVM Pixel-Based Classification Results for the Parnitha Dataset

| Classification | Class | PA | UA | OA | KIA | Pf |
|---|---|---|---|---|---|---|
| SVMFullSpace-PIXEL | Burned | 95.99 | 94.42 | 97.47 | 0.934 | 0.015 |
| | Unburned | 98.00 | 98.58 | | | |
| SVMRGBNIR-PIXEL | Burned | 91.09 | 93.24 | 95.95 | 0.894 | 0.018 |
| | Unburned | 97.67 | 96.89 | | | |
| SVMFuzCoC-PIXEL | Burned | 92.37 | 97.08 | 97.27 | 0.928 | 0.007 |
| | Unburned | 99.02 | 97.35 | | | |
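For readers less familiar with these accuracy measures, the sketch below shows how they are conventionally derived from a two-class confusion matrix, assuming the usual meanings of the column headers: producer's accuracy (PA), user's accuracy (UA), overall accuracy (OA), and the kappa index of agreement (KIA). Pf is left out because its definition is not given in this section. The function name and the pixel counts are hypothetical and do not correspond to any row of the table.

```python
def accuracy_measures(tp, fn, fp, tn):
    """Accuracy measures for a burned/unburned map.

    tp: burned reference pixels mapped as burned
    fn: burned reference pixels mapped as unburned
    fp: unburned reference pixels mapped as burned
    tn: unburned reference pixels mapped as unburned
    """
    n = tp + fn + fp + tn
    observed = (tp + tn) / n
    # Chance agreement from the reference totals and the map totals.
    expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n**2
    return {
        "PA_burned": tp / (tp + fn),
        "UA_burned": tp / (tp + fp),
        "PA_unburned": tn / (tn + fp),
        "UA_unburned": tn / (tn + fn),
        "OA": observed,
        "KIA": (observed - expected) / (1 - expected),
    }


# Hypothetical counts for a 300-pixel validation sample.
measures = accuracy_measures(tp=90, fn=10, fp=5, tn=195)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

The function returns fractions, whereas the tables report PA, UA, and OA as percentages and KIA on a 0 to 1 scale.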

4.2. SVM Object-Based Classification Results for the Parnitha Dataset

| Classification | Class | PA | UA | OA | KIA | Pf |
|---|---|---|---|---|---|---|
| SVMOBJECT | Burned | 94.64 | 94.56 | 97.17 | 0.926 | 0.015 |
| | Unburned | 98.08 | 98.11 | | | |
| SVMFuzCoC-OBJECT | Burned | 94.64 | 97.05 | 97.85 | 0.943 | 0.007 |
| | Unburned | 98.99 | 98.13 | | | |

4.3. SVM Pixel-Based Classification Results for the Rhodes Dataset

| Classification | Class | PA | UA | OA | KIA | Pf |
|---|---|---|---|---|---|---|
| SVMFullSpace-PIXEL | Burned | 95.20 | 90.36 | 89.97 | 0.766 | 0.075 |
| | Unburned | 79.32 | 89.02 | | | |
| SVMRGBNIR-PIXEL | Burned | 95.29 | 83.12 | 83.86 | 0.604 | 0.154 |
| | Unburned | 60.56 | 86.33 | | | |
| SVMFuzCoC-PIXEL | Burned | 89.47 | 91.82 | 87.59 | 0.722 | 0.060 |
| | Unburned | 83.77 | 79.62 | | | |

4.4. SVM Object-Based Classifications for the Rhodes Dataset

| Classification | Class | PA | UA | OA | KIA | Pf |
|---|---|---|---|---|---|---|
| SVMOBJECT | Burned | 87.24 | 84.30 | 79.26 | 0.477 | 0.114 |
| | Unburned | 59.30 | 64.90 | | | |
| SVMFuzCoC-OBJECT | Burned | 92.88 | 95.67 | 92.39 | 0.830 | 0.051 |
| | Unburned | 91.43 | 86.30 | | | |

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Dragozi, E.; Gitas, I.Z.; Stavrakoudis, D.G.; Theocharis, J.B. Burned Area Mapping Using Support Vector Machines and the FuzCoC Feature Selection Method on VHR IKONOS Imagery. Remote Sens. 2014, 6, 12005-12036. https://doi.org/10.3390/rs61212005
