Building Change Detection Using Old Aerial Images and New LiDAR Data

Abstract

1. Introduction

2. Data and Methodology

2.1. Data

2.2. Overview of the Proposed Method

2.3. Data Preprocessing

2.4. Change Detection

2.4.1. Change Indicator

2.4.2. Change Optimization Using Graph Cuts

2.5. Post-Processing

2.5.1. Positive Building Change Results Refinement

2.5.2. Negative Building Change Results Refinement

3. Experimental Results

3.1. Data Preprocessing Results

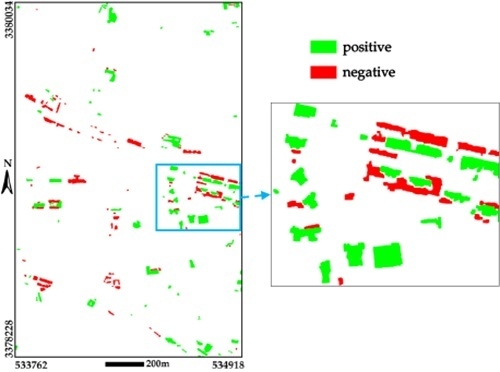

3.2. Building Change Detection Results

- (1) Buildings with complex roof structures or covered by vegetation are removed by the proposed method, which is the main cause of omissions (Figure 12).

- (2) Newly built low-rise buildings with small heights.

- (1) Some vegetated areas are only weakly green, which makes them hard to remove using the nEGI.

- (2) A newly built underground passage caused the ground to subside. As shown in Figure 13, the ground height changes by almost 5 m.
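The weak-green problem noted in item (1) can be illustrated with a minimal sketch. It assumes the commonly used definition of the normalized excess green index, nEGI = (2G - R - B) / (2G + R + B); this section does not state the formula, so treat the definition, the function names, and the threshold of 0.1 as assumptions made only for illustration.

```python
def negi(red, green, blue):
    """Normalized excess green index for one pixel (assumed definition)."""
    total = 2.0 * green + red + blue
    if total == 0:
        return 0.0  # black pixel: define the index as 0 to avoid division by zero
    return (2.0 * green - red - blue) / total


def is_vegetation(red, green, blue, threshold=0.1):
    """Hypothetical rule: flag a pixel as vegetation when nEGI exceeds a threshold."""
    return negi(red, green, blue) > threshold


# A strongly green pixel is flagged, a dull green one is not.
vivid = is_vegetation(50, 200, 50)   # nEGI = 300 / 500 = 0.6
dull = is_vegetation(90, 100, 85)    # nEGI = 25 / 375, about 0.067
```

Under these assumptions a dull green pixel falls below the threshold and would survive vegetation removal, which is consistent with the false detections described above.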

4. Discussion

4.1. Parameter Selection and Sensitivity Analysis

4.2. Comparison

4.3. Limitations of the Proposed Building Change Detection

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Du, P.; Liu, S.; Gamba, P.; Tan, K.; Xia, J. Fusion of difference images for change detection over urban areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1076–1086.

- Lu, D.; Mausel, P.; Brondizio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

- Brunner, D.; Bruzzone, L.; Lemoine, G. Change detection for earthquake damage assessment in built-up areas using very high resolution optical and SAR imagery. In Proceedings of the 2010 IEEE International Symposium Geoscience and Remote Sensing (IGARSS), Honolulu, HI, USA, 25–30 July 2010; pp. 3210–3213.

- Chesnel, A.L.; Binet, R.; Wald, L. Object oriented assessment of damage due to natural disaster using very high resolution images. In Proceedings of the 2007 IEEE International Symposium Geoscience and Remote Sensing (IGARSS), Barcelona, Spain, 23–28 July 2007; pp. 3736–3739.

- Liu, S.; Bruzzone, L.; Bovolo, F.; Du, P. Hierarchical unsupervised change detection in multitemporal hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 244–260.

- Marchesi, S.; Bovolo, F.; Bruzzone, L. A context-sensitive technique robust to registration noise for change detection in VHR multispectral images. IEEE Trans. Image Process. 2010, 19, 1877–1889.

- Tian, J.; Cui, S.; Reinartz, P. Building change detection based on satellite stereo imagery and digital surface models. IEEE Trans. Geosci. Remote Sens. 2014, 52, 406–417.

- Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

- Bontemps, S.; Bogaert, P.; Titeux, N.; Defourny, P. An object-based change detection method accounting for temporal dependences in time series with medium to coarse spatial resolution. Remote Sens. Environ. 2008, 112, 3181–3191.

- Pajares, G. A Hopfield neural network for image change detection. IEEE Trans. Neural Netw. 2006, 17, 1250–1264.

- Ghosh, S.; Bruzzone, L.; Patra, S.; Bovolo, F.; Ghosh, A. A context-sensitive technique for unsupervised change detection based on Hopfield-type neural networks. IEEE Trans. Geosci. Remote Sens. 2007, 45, 778–789.

- Pajares, G.; Ruz, J.J.; Jesús, M. Image change detection from difference image through deterministic simulated annealing. Pattern Anal. Appl. 2009, 12, 137–150.

- Walter, V. Object-based classification of remote sensing data for change detection. ISPRS J. Photogramm. Remote Sens. 2004, 58, 225–238.

- Im, J.; Jensen, J.; Tullis, J. Object-based change detection using correlation image analysis and image segmentation. Int. J. Remote Sens. 2008, 29, 399–423.

- Tang, Y.; Zhang, L.; Huang, X. Object-oriented change detection based on the Kolmogorov–Smirnov test using high-resolution multispectral imagery. Int. J. Remote Sens. 2011, 32, 5719–5740.

- Tian, J.; Reinartz, P.; d’Angelo, P.; Ehlers, M. Region-based automatic building and forest change detection on Cartosat-1 stereo imagery. ISPRS J. Photogramm. Remote Sens. 2013, 79, 226–239.

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection–approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56.

- Hirschmüller, H. Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

- Wu, B.; Zhang, Y.; Zhu, Q. Integrated point and edge matching on poor textural images constrained by self-adaptive triangulations. ISPRS J. Photogramm. Remote Sens. 2012, 68, 40–55.

- Jung, F. Detecting building changes from multitemporal aerial stereopairs. ISPRS J. Photogramm. Remote Sens. 2004, 58, 187–201.

- Tian, J.; Chaabouni-Chouayakh, H.; Reinartz, P.; Krauß, T.; d’Angelo, P. Automatic 3D change detection based on optical satellite stereo imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 586–591.

- Nebiker, S.; Lack, N.; Deuber, M. Building change detection from historical aerial photographs using dense image matching and object-based image analysis. Remote Sens. 2014, 6, 8310–8336.

- Guerin, C.; Binet, R.; Pierrot-Deseilligny, M. Automatic detection of elevation changes by differential DSM analysis: Application to urban areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4020–4037.

- Baltsavias, E. Airborne laser scanning: Basic relations and formulas. ISPRS J. Photogramm. Remote Sens. 1999, 54, 199–214.

- Murakami, H.; Nakagawa, K.; Hasegawa, H.; Shibata, T.; Iwanami, E. Change detection of buildings using an airborne laser scanner. ISPRS J. Photogramm. Remote Sens. 1999, 54, 148–152.

- Vu, T.T.; Matsuoka, M.; Yamazaki, F. LIDAR-based change detection of buildings in dense urban areas. In Proceedings of the 2004 IEEE International Symposium Geoscience and Remote Sensing (IGARSS), Anchorage, AK, USA, 20–24 September 2004; pp. 3413–3416.

- Choi, K.; Lee, I.; Kim, S. A feature based approach to automatic change detection from LiDAR data in urban areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 18, 259–264.

- Girardeau-Montaut, D.; Roux, M.; Marc, R.; Thibault, G. Change detection on points cloud data acquired with a ground laser scanner. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2005, 36, 30–35.

- Richter, R.; Kyprianidis, J.E.; Döllner, J. Out-of-core GPU-based change detection in massive 3D point clouds. Trans. GIS 2013, 17, 724–741.

- Barber, D.; Holland, D.; Mills, J. Change detection for topographic mapping using three-dimensional data structures. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1177–1182.

- Xu, H.; Cheng, L.; Li, M.; Chen, Y.; Zhong, L. Using octrees to detect changes to buildings and trees in the urban environment from airborne LiDAR data. Remote Sens. 2015, 7, 9682–9704.

- Vögtle, T.; Steinle, E. Detection and recognition of changes in building geometry derived from multitemporal laserscanning data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 428–433.

- Rutzinger, M.; Rüf, B.; Höfle, B.; Vetter, M. Change detection of building footprints from airborne laser scanning acquired in short time intervals. Int. Arch. Photogramm. Remote Sens. 2010, 38, 475–480.

- Teo, T.; Shih, T. Lidar-based change detection and change-type determination in urban areas. Int. J. Remote Sens. 2013, 34, 968–981.

- Pang, S.; Hu, X.; Wang, Z.; Lu, Y. Object-based analysis of airborne LiDAR data for building change detection. Remote Sens. 2014, 6, 10733–10749.

- Zhang, J.; Lin, X. Advances in fusion of optical imagery and LiDAR point cloud applied to photogrammetry and remote sensing. Int. J. Image Data Fusion 2016, 2016, 1–31.

- Vosselman, G.; Gorte, B.; Sithole, G. Change detection for updating medium scale maps using laser altimetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 34, 1–6.

- Awrangjeb, M. Effective generation and update of a building map database through automatic building change detection from LiDAR point cloud data. Remote Sens. 2015, 7, 14119–14150.

- Awrangjeb, M.; Fraser, C.; Lu, G. Building change detection from LiDAR point cloud data based on connected component analysis. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 393–400.

- Malpica, J.; Alonso, M. Urban changes with satellite imagery and lidar data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 18, 853–858.

- Malpica, J.; Alonso, M.; Papí, F.; Arozarena, A.; Martínez De Agirre, A. Change detection of buildings from satellite imagery and LiDAR data. Int. J. Remote Sens. 2013, 34, 1652–1675.

- Liu, Z.; Gong, P.; Shi, P.; Chen, H.; Zhu, L.; Sasagawa, T. Automated building change detection using UltraCamD images and existing CAD data. Int. J. Remote Sens. 2010, 31, 1505–1517.

- Chen, L.; Lin, L. Detection of building changes from aerial images and light detection and ranging (LiDAR) data. J. Appl. Remote Sens. 2010, 4.

- Qin, R. Change detection on LOD 2 building models with very high resolution spaceborne stereo imagery. ISPRS J. Photogramm. Remote Sens. 2014, 96, 179–192.

- Stal, C.; Tack, F.; De Maeyer, P.; De Wulf, A.; Goossens, R. Airborne photogrammetry and LiDAR for DSM extraction and 3D change detection over an urban area—A comparative study. Int. J. Remote Sens. 2013, 34, 1087–1110.

- Qin, R. An object-based hierarchical method for change detection using unmanned aerial vehicle images. Remote Sens. 2014, 6, 7911–7932.

- Besl, P.; McKay, N. A method for registration of 3-D shapes. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 239–256.

- Fitzgibbon, W. Robust registration of 2D and 3D point sets. Image Vis. Comput. 2003, 21, 1145–1153.

- Rusinkiewicz, S.; Levoy, M. Efficient variants of the ICP algorithm. In Proceedings of Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 145–152.

- Rusu, R.B.; Marton, Z.C.; Blodow, N.; Dolha, M.; Beetz, M. Towards 3D point cloud based object maps for household environments. Robot. Auton. Syst. 2008, 56, 927–941.

- Lu, G.; Wong, D. An adaptive inverse-distance weighting spatial interpolation technique. Comput. Geosci. 2008, 34, 1044–1055.

- Chaplot, V.; Darboux, F.; Bourennane, H.; Leguédois, S.; Silvera, N.; Phachomphon, K. Accuracy of interpolation techniques for the derivation of digital elevation models in relation to landform types and data density. Geomorphology 2006, 77, 126–141.

- Chaabouni-Chouayakh, H.; Reinartz, P. Towards automatic 3D change detection inside urban areas by combining height and shape information. Photogramm. Fernerkund. Geoinf. 2011.

- Boykov, Y.; Jolly, M.P. Interactive graph cuts for optimal boundary & region segmentation of objects in N-D images. In Proceedings of the International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; pp. 105–112.

- Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

- Chaabouni-Chouayakh, H.; Krauss, T.; d’Angelo, P.; Reinartz, P. 3D change detection inside urban areas using different digital surface models. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, 38, 86–91.

| Parameters | Description | Value | Set Mode |
|---|---|---|---|
| | Threshold for the squared coefficient of variation of the histogram | 1.1 | Experimentally |
| | Weight of grey-scale similarity in the data term | 0.6 | Experimentally |
| | Weight of the smooth term in the energy function | 0.75 | Experimentally |
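To show how the two weights in the table above could enter a graph-cut style energy, here is a minimal sketch. The exact data and smooth terms are not given in this section, so everything below is an assumption: the data term is a hypothetical convex combination of a height-based score and a grey-scale score (both in [0, 1]), the smooth term is a simple Potts penalty on 4-connected neighbours, and the function names are illustrative. The code only evaluates the energy of a given labeling; a graph-cut solver would search for the labeling that minimizes it.

```python
def combine_indicators(height_score, grey_score, grey_weight=0.6):
    """Hypothetical data-term input: weighted mix of two change scores in [0, 1]."""
    return (1.0 - grey_weight) * height_score + grey_weight * grey_score


def labeling_energy(change_score, labels, smooth_weight=0.75):
    """Energy of a binary labeling (1 = changed, 0 = unchanged) on a 2D grid.

    Data cost: labeling a pixel as changed is cheap when its score is high.
    Smooth cost: each pair of 4-connected neighbours with different labels
    adds `smooth_weight` (Potts model, assumed here for illustration).
    """
    rows, cols = len(labels), len(labels[0])
    energy = 0.0
    for r in range(rows):
        for c in range(cols):
            score = change_score[r][c]
            energy += (1.0 - score) if labels[r][c] == 1 else score
            if c + 1 < cols and labels[r][c] != labels[r][c + 1]:
                energy += smooth_weight  # right neighbour disagrees
            if r + 1 < rows and labels[r][c] != labels[r + 1][c]:
                energy += smooth_weight  # lower neighbour disagrees
    return energy


scores = [[0.9, 0.8],
          [0.2, 0.1]]
split = labeling_energy(scores, [[1, 1], [0, 0]])      # data 0.6 + 2 cuts * 0.75
unchanged = labeling_energy(scores, [[0, 0], [0, 0]])  # data 2.0, no cuts
```

In this toy grid the uniform labeling has slightly lower energy than the split one, which illustrates how a larger smooth weight favours spatially coherent change maps over isolated changed regions.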

| Type | Reference Number | Detected Number | True Detected Number | False Detected Number | Completeness | Correctness |
|---|---|---|---|---|---|---|
| Positive | 89 | 92 | 83 | 9 | 93.3% | 90.2% |
| Negative | 85 | 85 | 80 | 5 | 94.1% | 94.1% |
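The percentages in this table can be reproduced from the counts. The sketch below assumes the usual object-based definitions, which this section does not spell out: completeness is the share of reference changes that were correctly detected, and correctness is the share of detected changes that are true. The function names are illustrative.

```python
def completeness(true_detected, reference):
    """Percentage of reference changes that were correctly detected."""
    return 100.0 * true_detected / reference


def correctness(true_detected, detected):
    """Percentage of detected changes that are true changes."""
    return 100.0 * true_detected / detected


# Counts taken from the table: (reference, detected, true detected).
counts = {"Positive": (89, 92, 83), "Negative": (85, 85, 80)}
for kind, (reference, detected, true_detected) in counts.items():
    print(kind,
          round(completeness(true_detected, reference), 1),
          round(correctness(true_detected, detected), 1))
```

Rounded to one decimal place, these ratios match the Completeness and Correctness columns for both positive and negative changes.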

| Methods | Data Source (First Time) | Data Source (Second Time) | Completeness (%) | Correctness (%) |
|---|---|---|---|---|
| Xu et al. [31] | LiDAR data | LiDAR data | 94.81 | × |
| Pang et al. [35] | LiDAR data | LiDAR data | 97.8 | 91.2 |
| Chen and Lin [43] | polyhedral building model | LiDAR data and aerial imagery | 91.67 | 82.6 |
| Qin [44] | 3D model | stereo satellite imagery | 82.6 | × |
| Awrangjeb [38] | building database | LiDAR data | 99.6 | 63.2 |
| Tian et al. [7] | stereo satellite image | stereo satellite imagery | 93.33 | 87.5 |
| The proposed method | aerial imagery | LiDAR data | 93.5 | 92.15 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Du, S.; Zhang, Y.; Qin, R.; Yang, Z.; Zou, Z.; Tang, Y.; Fan, C. Building Change Detection Using Old Aerial Images and New LiDAR Data. Remote Sens. 2016, 8, 1030. https://doi.org/10.3390/rs8121030
