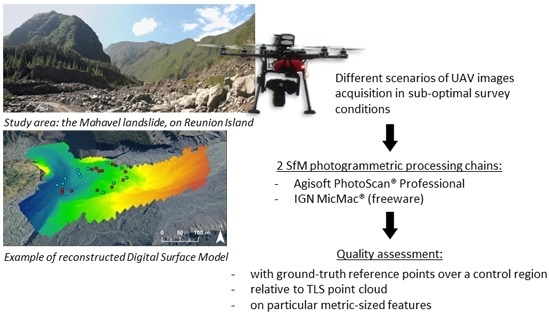

Assessing the Accuracy of High Resolution Digital Surface Models Computed by PhotoScan® and MicMac® in Sub-Optimal Survey Conditions

Abstract

1. Introduction

2. Scenario of the Survey and 3D Model Reconstruction

2.1. Description of the Study Area

2.2. Data Collection

3. Photogrammetric Processing Chain

3.1. PhotoScan Overview

- Camera alignment by bundle adjustment. Common tie points are detected and matched across the photographs in order to compute the external orientation parameters of each picture. The camera calibration parameters are refined at the same time, with lens distortion modelled by Brown’s distortion model [21], which corrects for both radial and tangential distortion. The “High” accuracy setting is selected (the software then works with the original-size photos) to obtain more accurate camera position estimates. The number of matching points (tie points) per image can be limited to optimize performance; the default value of this parameter (1000) is kept.

- Creation of the dense point cloud from the estimated camera positions and the pictures themselves. PhotoScan computes a depth map for each image. The reconstruction quality is set to “High” to obtain more detailed and accurate geometry.

- Reconstruction of a 3D polygonal mesh representing the object surface from the dense point cloud. The surface type can be set to “Arbitrary” (no assumption is made about the type of object being reconstructed) or “Height field” (2.5D reconstruction of planar surfaces). Given the complexity of the study area (blocks, terrace fronts), the surface type is set to “Arbitrary” for mesh construction, even though this increases memory consumption and processing time. The user can also specify the maximum number of polygons in the final mesh. In our study, this parameter is set to “High” (i.e., 1/5 of the number of points in the previously generated dense point cloud) to optimize the level of detail of the mesh.

- The reconstructed mesh can be textured following different mapping modes. In this study, we used the default “Generic” mode.
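
Brown’s model, used above for PhotoScan’s camera calibration, combines a radial polynomial in r² with tangential (decentering) terms. The sketch below applies it to normalized image coordinates (coordinates measured from the principal point and divided by the focal length). It assumes the widely used OpenCV-style form with three radial coefficients (k1 to k3) and two tangential ones (p1, p2); PhotoScan’s own coefficient naming and ordering may differ, so coefficient values should not be copied between programs without checking their conventions.

```python
import numpy as np


def brown_distort(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Apply Brown(-Conrady) radial + tangential distortion to normalized
    image coordinates (x, y). Accepts scalars or NumPy arrays.

    Assumed convention (OpenCV-style): p1 multiplies (r^2 + 2y^2) in y and
    2xy in x; p2 multiplies (r^2 + 2x^2) in x and 2xy in y.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r2 = x * x + y * y
    # Radial part: symmetric about the principal point.
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    # Tangential part: models lens elements that are not perfectly centred.
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d
```

With all coefficients at zero the function is the identity; a positive k1 pushes points away from the centre (pincushion), a negative k1 pulls them inward (barrel).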

3.2. MicMac Overview

- Tie point computation: the Pastis tool uses the SIFT++ algorithm [24] to generate tie point pairs. This algorithm computes invariant descriptors that can be used to identify points of interest and match them even under a variety of perturbing conditions such as changes in scale, rotation, illumination or viewpoint, and image noise. We used Tapioca, the simplified interface to Pastis, since the features it provides are sufficient for the purpose of our comparative study. Note also that the images were downscaled by a factor of 0.25.

- External orientation and intrinsic calibration: the Apero tool computes the external and internal orientations of the camera. The relative orientations were computed with the Tapas tool in two steps: first on a small subset of images, then on all images, using the calibration obtained in the first step as the initial value for the global orientation. The distortion model used in this module is Fraser’s radial model with decentric and affine parameters (12 degrees of freedom). Recently, Tournadre et al. (2015) [25] presented the latest developments of MicMac’s bundle adjustment, together with additional camera distortion models specifically designed to address issues arising in UAV linear photogrammetry. UAV surveys along a linear trajectory can indeed be problematic for 3D reconstruction, as they tend to induce “bowl effects” [25,26] caused by poor estimation of the camera’s internal parameters. This study therefore tests the refined radial distortion model “Four 15 × 2” [23,25] for camera distortion. Using GCPs, the relative orientations of the images are then transformed into an absolute orientation in the local coordinate system with “GCPBascule”. Finally, the Campari command performs a combined adjustment of the heterogeneous measurements (tie points and GCPs).

- Matching: from the oriented images, MicMac computes the 3D model and the orthorectified images. The computation is performed iteratively on a pyramid of sub-sampled images, from coarse to fine resolution, the result obtained at each resolution being used to predict the solution at the next step. The correlation window size is 3 × 3 pixels.

- Orthophoto generation: orthomosaics are generated with Tawny, an interface to the Porto tool. Tawny merges the individual rectified images generated by MicMac into a global orthophoto and can optionally apply radiometric equalization.
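
MicMac’s matching step scores candidate correspondences by correlation over a small window (3 × 3 pixels here). The sketch below illustrates normalized cross-correlation (NCC) over a 3 × 3 window with a brute-force search along one image row. It assumes a rectified image pair, so that correspondences lie on the same row; it is a simplified illustration of window correlation, not MicMac’s implementation, which differs in many respects (for example its coarse-to-fine multi-resolution scheme). The function names are hypothetical.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized patches, in [-1, 1].
    Returns 0.0 for flat (zero-variance) patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def best_disparity(left, right, row, col, max_disp, half=1):
    """Find the horizontal offset d in [0, max_disp] whose window in `right`,
    centred on (row, col - d), best matches the window centred on (row, col)
    in `left`. half=1 gives a 3 x 3 correlation window."""
    ref = left[row - half:row + half + 1, col - half:col + half + 1]
    best_d, best_score = 0, -np.inf
    for d in range(max_disp + 1):
        c = col - d
        if c - half < 0:  # candidate window would leave the image
            break
        cand = right[row - half:row + half + 1, c - half:c + half + 1]
        score = ncc(ref, cand)
        if score > best_score:
            best_d, best_score = d, score
    return best_d, best_score
```

Because NCC subtracts the mean and normalizes by the patch energy, it tolerates brightness and contrast differences between images; a 3 × 3 window keeps fine detail but gives noisier scores than larger windows.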

3.3. Images Selection

3.4. GCPs Tagging

3.5. Processing Scenarios

- Scenario 1: the process is performed with the automatic selection of 121 photographs (Selection #1), using full-resolution images, i.e., 4256 × 2832 pixels (12 Mpix), and 12 GCPs.

- Scenario 2: the process is performed with the manual selection of 109 photographs (Selection #2), using full-resolution images and 12 GCPs.

- Scenario 3: the process is performed with the same set of 109 selected photographs, using reduced-resolution images, i.e., 2128 × 1416 pixels (3 Mpix), and 12 GCPs. The images were down-sampled from 12 Megapixels (Mpix) to 3 Mpix by keeping one line and one sample out of two, without modifying the color content of the pixels (nearest-neighbor approach).

- Scenario 4: the process is performed with the set of 109 selected photographs, using full-resolution images and only six GCPs: GCP 1, GCP 2, GCP 6, GCP 7, GCP 8 and GCP 9. The goal of this scenario is to test the impact of the number of GCPs and of their distribution within the control region. Only the GCPs in the central part of the control region are therefore used, in order to assess the errors in the outer parts of the area [9]. The results of this scenario are thus affected both by the reduced number of GCPs and by their changed distribution.
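
The Scenario 3 down-sampling (one line and one sample out of two, no change to pixel values) corresponds to a strided slice. The sketch below assumes the image is already loaded as a NumPy array shaped (rows, columns) or (rows, columns, bands), and that the first row and column are among those kept, which the text does not specify. The function name is hypothetical.

```python
import numpy as np


def decimate_by_two(image: np.ndarray) -> np.ndarray:
    """Keep every second row and every second column (nearest-neighbour
    decimation). No averaging or interpolation is performed, so each kept
    pixel retains its original colour values. Returns a view of `image`;
    call .copy() if an independent array is needed."""
    return image[::2, ::2, ...]


# 4256 x 2832 (about 12 Mpix) becomes 2128 x 1416 (about 3 Mpix).
full = np.zeros((2832, 4256, 3), dtype=np.uint8)
small = decimate_by_two(full)
print(small.shape)
```

Halving the sampling in both directions divides the pixel count by four and doubles the ground footprint of each pixel. Unlike an area-averaging resize, this decimation does not low-pass filter the image first, so fine texture can alias, but the radiometry of every retained pixel is unchanged.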

4. Results and Discussion

4.1. Effects of the Refined Distortion Model on MicMac Processing Chain

4.2. Quality Assessment of PhotoScan and MicMac within the Control Region Using Ground-Truth Reference Points

4.3. Relative Quality Assessment of PhotoScan and MicMac with Respect to the TLS Point Cloud over the Whole Study Area

4.4. Quality Assessment of PhotoScan and MicMac on Particular Metric Features

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Delacourt, C.; Allemand, P.; Jaud, M.; Grandjean, P.; Deschamps, A.; Ammann, J.; Cuq, V.; Suanez, S. DRELIO: An unmanned helicopter for imaging coastal areas. J. Coast. Res. 2009.

- Mancini, F.; Dubbini, M.; Gattelli, M.; Stecchi, F.; Fabbri, S.; Gabbianelli, G. Using unmanned aerial vehicles (UAV) for high-resolution reconstruction of topography: The structure from motion approach on coastal environments. Remote Sens. 2013.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and assessment of spectrometric, stereoscopic imagery collected using a lightweight UAV spectral camera for precision agriculture. Remote Sens. 2013.

- Henry, J.-B.; Malet, J.-P.; Maquaire, O.; Grussenmeyer, P. The use of small-format and low-altitude aerial photos for the realization of high-resolution DEMs in mountainous areas: Application to the super-sauze earthflow (Alpes-de-Haute-Provence, France). Earth Surf. Proc. Landf. 2002, 27, 1339–1350.

- Fonstad, M.A.; Dietrich, J.T.; Courville, B.C.; Jensen, J.L.; Carbonneau, P.E. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Proc. Landf. 2013, 38, 421–430.

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. “Structure-from-motion” photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Woodget, A.S.; Carbonneau, P.E.; Visser, F.; Maddock, I.P. Quantifying submerged fluvial topography using hyperspatial resolution UAS imagery and structure from motion photogrammetry. Earth Surf. Proc. Landf. 2015, 40, 47–64.

- Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the 7th IEEE International Conference on Computer Vision (ICCV), Kerkyra, Corfu, Greece, 20–25 September 1999; pp. 1150–1157.

- James, M.R.; Robson, S. Straightforward reconstruction of 3D surfaces and topography with a camera: Accuracy and geoscience application. J. Geophys. Res. 2012.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Neitzel, F.; Klonowski, J. Mobile 3D mapping with a low-cost UAV system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 39–44.

- Küng, O.; Strecha, C.; Beyeler, A.; Zufferey, J.-C.; Floreano, D.; Fua, P.; Gervaix, F. The accuracy of automatic photogrammetric techniques on ultra-light UAV imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 125–130.

- Harwin, S.; Lucieer, A. Assessing the accuracy of georeferenced point clouds produced via multi-view stereopsis from unmanned aerial vehicle (UAV) imagery. Remote Sens. 2012, 4, 1573–1599.

- Anders, N.; Masselink, R.; Keegstra, S.; Suomalainen, J. High-res digital surface modeling using fixed-wing UAV-based photogrammetry. In Proceedings of the Geomorphometry, Nanjing, China, 16–20 October 2013.

- BRGM. Etude Géologique de L’éperon Rive Droite de la Rivière des Remparts Situé au Droit du Barrage de Mahavel. Evolution de la Morphologie du Barrage Après le Passage du Cyclone “Denise”; Technical Report TAN 66-A/12; BRGM: Orléans, France, 1966.

- Le Bivic, R.; Delacourt, C.; Allemand, P.; Stumpf, A.; Quiquerez, A.; Michon, L.; Villeneuve, N. Quantification d’une ile tropicale volcanique: La Réunion. In Proceedings of the 3ème Journée Thématique du Programme National de Télédétection Spatiale–Méthodes de Traitement des Séries Temporelles en Télédétection Spatiale, Paris, France, 13 November 2014.

- Topcon Positioning Systems, Inc. Topcon Hiper II Operator Manual; Part Number 7010-0982; Topcon Positioning Systems, Inc.: Livermore, CA, USA, 2010.

- Katz, D.; Friess, M. Technical note: 3D from standard digital photography of human crania—A preliminary assessment: Three-dimensional reconstruction from 2D photographs. Am. J. Phys. Anthropol. 2014, 154, 152–158.

- Van Damme, T. Computer vision photogrammetry for underwater archeological site recording in a low-visibility environment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 231–238.

- Ryan, J.C.; Hubbard, A.L.; Box, J.E.; Todd, J.; Christoffersen, P.; Carr, J.R.; Holt, T.O.; Snooke, N. UAV photogrammetry and structure from motion to assess calving dynamics at Store Glacier, a large outlet draining the Greenland ice sheet. Cryosphere 2015, 9, 1–11.

- Agisoft LLC. AgiSoft PhotoScan User Manual; Professional Edition v.1.1.5; Agisoft LLC: St. Petersburg, Russia, 2014.

- Pierrot-Deseilligny, M.; Clery, I. Apero, an open source bundle adjustment software for automatic calibration and orientation of set of images. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 269–276.

- Pierrot-Deseilligny, M. MicMac, Apero, Pastis and Other Beverages in a Nutshell! 2015. Available online: http://logiciels.ign.fr/IMG/pdf/docmicmac-2.pdf (accessed on 10 December 2015).

- Vedaldi, A. An Open Implementation of the SIFT Detector and Descriptor; UCLA CSD Technical Report 070012; University of California: Los Angeles, CA, USA, 2007.

- Tournadre, V.; Pierrot-Deseilligny, M.; Faure, P.H. UAV linear photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 327–333.

- James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Proc. Landf. 2014, 39, 1413–1420.

- Vallet, J.; Panissod, F.; Strecha, C.; Tracol, M. Photogrammetric performance of an ultra-light weight swinglet “UAV”. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 253–258.

- Javernick, L.; Brasington, J.; Caruso, B. Modeling the topography of shallow braided rivers using structure-from-motion photogrammetry. Geomorphology 2014, 213, 166–182.

- Heritage, G.L.; Milan, D.J.; Large, A.R.G.; Fuller, I.C. Influence of survey strategy and interpolation model on DEM quality. Geomorphology 2009, 112, 334–344.

- Wheaton, J.M.; Brasington, J.; Darby, S.E.; Sear, D.A. Accounting for uncertainty in DEMs from repeat topographic surveys: Improved sediment budgets. Earth Surf. Proc. Landf. 2009, 35, 136–156.

| Point | MicMac Delta-XY (cm) | MicMac Delta-Z (cm) | MicMac-rdm Delta-XY (cm) | MicMac-rdm Delta-Z (cm) |
|---|---|---|---|---|
| REF.1 | 2.8 | −9.2 | 2.9 | −0.3 |
| REF.2 | 2.6 | 5.6 | 1.7 | 6.8 |
| REF.3 | 5.8 | 10.6 | 3.7 | 1.5 |
| REF.4 | not visible | not visible | not visible | not visible |
| REF.5 | 4.4 | 5.2 | 4.0 | 0.4 |
| REF.6 | 2.0 | −9.9 | 2.6 | −1.0 |
| REF.7 | 5.3 | −8.6 | 5.6 | −4.8 |
| REF.8 | 3.3 | −7.6 | 3.9 | 0.0 |
| REF.9 | 1.8 | −5.0 | 2.2 | −3.1 |
| RMSE | 3.8 | 8.0 | 3.5 | 3.2 |
| Standard dev. σ | 1.5 | 8.2 | 1.2 | 3.4 |
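
The summary rows of the table above can be recomputed from the eight visible reference points (REF.4 excluded). Doing so reproduces all eight published values when the RMSE is taken as √(Σe²/n) and σ as the sample standard deviation (n − 1 in the denominator), which suggests these are the definitions used; the sketch below is a check under that assumption.

```python
import math
import statistics


def rmse(residuals):
    """Root-mean-square error: sqrt(sum(e^2) / n)."""
    return math.sqrt(sum(e * e for e in residuals) / len(residuals))


def sample_std(residuals):
    """Sample standard deviation, with n - 1 in the denominator."""
    return statistics.stdev(residuals)


# Residuals in cm at REF.1-3 and REF.5-9, copied from the table.
micmac_xy = [2.8, 2.6, 5.8, 4.4, 2.0, 5.3, 3.3, 1.8]
micmac_z = [-9.2, 5.6, 10.6, 5.2, -9.9, -8.6, -7.6, -5.0]
rdm_xy = [2.9, 1.7, 3.7, 4.0, 2.6, 5.6, 3.9, 2.2]
rdm_z = [-0.3, 6.8, 1.5, 0.4, -1.0, -4.8, 0.0, -3.1]

for name, values in [("MicMac Delta-XY", micmac_xy), ("MicMac Delta-Z", micmac_z),
                     ("MicMac-rdm Delta-XY", rdm_xy), ("MicMac-rdm Delta-Z", rdm_z)]:
    print(f"{name}: RMSE = {rmse(values):.1f} cm, sigma = {sample_std(values):.1f} cm")
```

When the mean residual is close to zero, the n − 1 denominator makes σ larger than the RMSE by about √(8/7) ≈ 1.07. This is why σ (3.4 cm) slightly exceeds the RMSE (3.2 cm) for the MicMac-rdm vertical residuals, whose mean is about −0.06 cm.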

| Scenario | PhotoScan® H-RMSE | PhotoScan® V-RMSE | PhotoScan® σh | PhotoScan® σv | MicMac® H-RMSE | MicMac® V-RMSE | MicMac® σh | MicMac® σv |
|---|---|---|---|---|---|---|---|---|
| Scenario 1: 121 images (automatically selected), 4256 × 2832 pixels (12 Mpix), 12 GCPs | 4.5 cm | 3.9 cm | 2.0 cm | 4.1 cm | 3.5 cm | 3.2 cm | 1.2 cm | 3.4 cm |
| Scenario 2: 109 images (manually selected), 4256 × 2832 pixels (12 Mpix), 12 GCPs | 3.4 cm (2 pixels) | 12.4 cm (7.3 pixels) | 1.6 cm | 10.3 cm | 3.0 cm (1.8 pixels) | 4.7 cm (2.8 pixels) | 1.6 cm | 4.4 cm |
| Scenario 3: 109 images, 2128 × 1416 pixels (3 Mpix), 12 GCPs | 5.8 cm (1.7 pixels) | 15.0 cm (4.4 pixels) | 3.3 cm | 11.9 cm | 3.2 cm (0.9 pixels) | 6.3 cm (1.8 pixels) | 1.7 cm | 6.3 cm |
| Scenario 4: 109 images, 4256 × 2832 pixels (12 Mpix), six GCPs | 4.4 cm | 7.3 cm | 1.7 cm | 6.0 cm | 4.7 cm | 4.8 cm | 2.3 cm | 3.9 cm |

| Scenario | TLS Cloud–PhotoScan DSM: mean difference | TLS Cloud–PhotoScan DSM: stand. dev. σ | TLS Cloud–MicMac DSM: mean difference | TLS Cloud–MicMac DSM: stand. dev. σ |
|---|---|---|---|---|
| Scenario 1 | 0.05 m | 0.66 m | 0.22 m | 1.08 m |
| Scenario 2 | 0.04 m | 0.66 m | 0.19 m | 1.10 m |
| Scenario 3 | 0.06 m | 0.68 m | 0.22 m | 1.12 m |
| Scenario 4 | 0.05 m | 0.65 m | 0.19 m | 1.09 m |
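
The procedure behind the TLS cloud versus DSM statistics is not detailed alongside the table. One simple way to obtain a mean difference and standard deviation of this kind is sketched below, assuming (hypothetically) that the DSM is a north-up raster with a known upper-left corner and square cells, that each TLS point is compared with the DSM cell it falls in, and that the difference is taken as TLS minus DSM, as the column headings suggest. All names and the grid layout are illustrative assumptions.

```python
import numpy as np


def cloud_to_dsm_differences(points, dsm, x0, y0, cell):
    """Vertical differences z_point - z_dsm for each point of a cloud.

    Hypothetical layout: `dsm` is a 2D array whose row 0 is the northern edge,
    (x0, y0) is the upper-left corner and `cell` the cell size (same units as
    the points). Nearest-cell lookup, no interpolation. Points falling outside
    the grid or on NaN (no-data) cells are dropped.
    """
    pts = np.asarray(points, dtype=float)
    cols = np.floor((pts[:, 0] - x0) / cell).astype(int)
    rows = np.floor((y0 - pts[:, 1]) / cell).astype(int)
    inside = (rows >= 0) & (rows < dsm.shape[0]) & (cols >= 0) & (cols < dsm.shape[1])
    dz = pts[inside, 2] - dsm[rows[inside], cols[inside]]
    return dz[~np.isnan(dz)]


def summarize(dz):
    """Mean difference and sample standard deviation of the differences."""
    return float(np.mean(dz)), float(np.std(dz, ddof=1))
```

Nearest-cell lookup keeps the sketch short; bilinear interpolation would be a natural refinement. On steep faces such as terrace fronts, any vertical (2.5D) comparison is highly sensitive to small horizontal offsets, which tends to inflate σ.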

| Profile | PhotoScan-MicMac RMS Offset | PhotoScan-TLS RMS Offset | MicMac-TLS RMS Offset |
|---|---|---|---|
| Vertical profile over a terrace front | 0.71 m | 0.36 m | 0.79 m |
| Vertical profile over blocks of rock | 0.06 m | 0.17 m | 0.17 m |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Jaud, M.; Passot, S.; Le Bivic, R.; Delacourt, C.; Grandjean, P.; Le Dantec, N. Assessing the Accuracy of High Resolution Digital Surface Models Computed by PhotoScan® and MicMac® in Sub-Optimal Survey Conditions. Remote Sens. 2016, 8, 465. https://doi.org/10.3390/rs8060465
