1. Introduction

The savanna biome covers approximately 25% of the world’s terrestrial landscape and contributes significantly to global net primary productivity and the carbon cycle [1,2]. These mixed grass–woody ecosystems constitute a multi-scale mosaic of bare soil, grass patches, shrubs and tree clumps. Detailed mapping of savanna land cover components is important for addressing fundamental problems in these ecosystems, such as soil erosion, bush encroachment, and forage and browse availability. However, separating savanna land cover components is difficult and requires fine-scale analyses. Traditional land cover classifications of these landscapes use generalized classes with mixed vegetation [3]. For various specialized studies of savanna ecosystems, there is a need for fine-scale discrimination of the principal land cover components, such as bare soil, grass, shrubs and trees. However, the high landscape heterogeneity of savannas, with gradual transitions between open and closed vegetation cover, and small patch sizes are major causes of misclassification [3,4]. Furthermore, limiting confusion between spectrally similar but compositionally different tree canopies, shrubs and grasses can be very challenging [5].

It is also important to consider seasonality when classifying savanna land cover components, due to the large contrast in vegetation state between the wet (summer) and dry (winter) periods [6]. As the environmental conditions depend on water availability, optimal discrimination of phenological changes in different vegetation types is only possible by analyzing both wet and dry season data [7,8]. Understanding seasonal vegetation dynamics is essential to distinguish between short-term fluctuations and long-term changes in savanna composition and productivity [9]. However, there are several challenges to overcome when discriminating land cover types from satellite imagery in tropical savanna regions. First, image selection is affected by the dense cloud cover and high atmospheric water vapor content that occur in the rainy period. These often prevent the use of wet season scenes or create gaps in the classification maps. Nevertheless, wet season images are often preferred, as they represent the peak of the growing season with well-developed vegetation cover and leaf-on conditions [8,10]. However, when considering fine-scale separation of savanna components, the high photosynthetic activity may obscure spectral differences between vegetation types [11]. The extended band sets of new satellite sensors, which record slight differences in vegetation reflectance, can potentially help to solve this problem [12]. On the other hand, dry season imagery is usually cloud-free and offers better contrast between senescing herbaceous vegetation and evergreen trees [13,14]. It might, however, be difficult to detect leafless deciduous trees in winter scenes, as the tree canopy is significantly smaller and less defined in leaf-off conditions. Furthermore, tree identification relies on leaf biochemical properties that correlate with spectral information, and this information is missing for deciduous trees in the winter period. Considering all the above-mentioned issues, it is important to know how accurately savanna land cover components can be classified using winter imagery and ground truth data collected in the dry season, in comparison to the commonly preferred maximum “greenness” images.

There are several remote sensing solutions useful for differentiating land cover components in heterogeneous landscapes. Combined airborne LiDAR and hyperspectral surveys, although arguably the best suited for this application, are expensive for large-scale studies, not to mention that the availability of LiDAR or hyperspectral infrastructure in Africa is limited. Very high resolution (VHR) satellite imagery, however, affords the possibility of regional-scale studies [15]. Moreover, new satellite sensors like WorldView-2 (WV-2) offer not only very high spatial resolution but also extended and innovative spectral bands. The combination of the red-edge, yellow, and two near-infrared bands of WV-2 provides additional valuable information for vegetation classification [16,17,18], which may be particularly useful when spectrally similar components like shrubs and trees need to be separated. Accordingly, several studies have demonstrated the benefits of using WV-2 imagery for land cover classification of diverse landscapes (e.g., [19,20,21]).

Most land use classification studies are based on a pixel-based approach. It is generally well accepted that pixel-based classification tends to perform better with images of relatively coarse spatial resolution [22,23]. However, fine-scale land cover classification based on VHR imagery increases the number of detectable class elements, and thus the within-class spectral variance. This can make the separation of spectrally mixed land cover types more difficult [24] and leads to an increase in misclassified pixels, creating a “salt-and-pepper” effect when using a pixel-based approach [25]. An alternative classification method is Object-Based Image Analysis (OBIA), in which an image is first segmented into internally homogeneous segments that represent spatial objects. Segments, compared to single pixels, can be described by a wider range of spectral and spatial features [5,26]. Furthermore, replacing the values of pixels belonging to the same segment by their segment mean lowers the variance of the complete set of pixels (see Huygens’ theorem in Edwards and Cavalli-Sforza (1965) [27]). As a result, several studies have shown that object-based approaches can be very useful for mapping vegetation structure and discriminating structural stages in vegetation [5,28,29,30]. Furthermore, several authors have argued that OBIA is better suited than pixel-based methods for classifying VHR imagery [23,31,32,33]. However, none of these studies tested the usability of OBIA for fine-scale land cover classification in a highly heterogeneous landscape such as the African savanna. Although OBIA has been shown to perform better with high resolution data, it remains unknown whether it outperforms the pixel-based approach when applied to a fine-scale mosaic of vegetation patches that often consist of only a few pixels.
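Huygens’ theorem splits the total sum of squared deviations of a set of values into a within-segment part and a between-segment part (total = within + between). Replacing each pixel by its segment mean removes the within-segment part, so the remaining variance is never larger than the original. The Python sketch below checks this numerically; the function names are illustrative, and the reflectance values and segment labels are hypothetical, not data from this study.

```python
from statistics import fmean


def sum_of_squares(values):
    """Sum of squared deviations of `values` from their overall mean."""
    m = fmean(values)
    return sum((v - m) ** 2 for v in values)


def segment_means(values, segment_ids):
    """Replace every pixel value by the mean value of the segment it belongs to."""
    groups = {}
    for v, s in zip(values, segment_ids):
        groups.setdefault(s, []).append(v)
    means = {s: fmean(vs) for s, vs in groups.items()}
    return [means[s] for s in segment_ids]


def huygens_decomposition(values, segment_ids):
    """Return (total, within, between) sums of squares.

    Huygens' theorem: total = within + between. Replacing pixels by their
    segment means keeps only the `between` term.
    """
    smoothed = segment_means(values, segment_ids)
    total = sum_of_squares(values)
    within = sum((v - m) ** 2 for v, m in zip(values, smoothed))
    # Averaging within segments leaves the overall mean unchanged, so the
    # sum of squares of the smoothed values is the between-segment term.
    between = sum_of_squares(smoothed)
    return total, within, between


if __name__ == "__main__":
    # Hypothetical single-band reflectances for 7 pixels in 2 segments
    pixels = [0.12, 0.15, 0.11, 0.40, 0.43, 0.38, 0.41]
    segments = [0, 0, 0, 1, 1, 1, 1]
    total, within, between = huygens_decomposition(pixels, segments)
    print(f"total={total:.5f} within={within:.5f} between={between:.5f}")
```

Because the within-segment term is non-negative, the between-segment term (all that survives averaging) can only equal or undercut the total.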

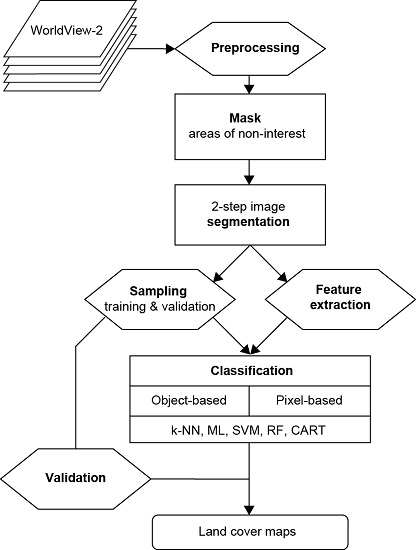

Besides the classification approach (pixel-based vs. OBIA), classification accuracy also largely depends on the algorithm used. Traditional methods like k-nearest neighbor (k-NN) or maximum likelihood (ML) have been used frequently in the past, but are nowadays increasingly being replaced by modern and robust supervised machine learning algorithms, including tree-based methods, artificial neural networks and support vector machines. Several studies have compared the performance of machine learning algorithms with OBIA or pixel-based classification [31,34,35]. However, it is still unclear which classifiers perform best with OBIA and pixel-based approaches, especially when used with VHR imagery for detailed separation of vegetation components in African savanna.
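As a reference point for the traditional classifiers mentioned above, k-NN assigns a pixel (or segment) the majority label of its k nearest training samples in feature space. The Python sketch below is a minimal illustration under stated assumptions: Euclidean distance, plain majority voting, a hypothetical function name `knn_classify`, and hypothetical three-band reflectance values that are not data from this study.

```python
from collections import Counter
from math import dist


def knn_classify(training, query, k=3):
    """Return the majority label among the k training samples closest to `query`.

    training: list of (feature_vector, label) pairs.
    Distance: Euclidean (math.dist). Counter.most_common orders equal counts
    by first appearance, and neighbours are sorted by distance, so a tied
    vote goes to the label of the nearest sample.
    """
    neighbours = sorted(training, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical training spectra (three bands) with class labels
    training = [
        ((0.05, 0.08, 0.45), "tree"),
        ((0.06, 0.09, 0.42), "tree"),
        ((0.07, 0.10, 0.35), "shrub"),
        ((0.08, 0.11, 0.33), "shrub"),
        ((0.20, 0.22, 0.28), "bare soil"),
        ((0.22, 0.25, 0.30), "bare soil"),
    ]
    print(knn_classify(training, (0.055, 0.085, 0.44)))
    print(knn_classify(training, (0.21, 0.23, 0.29)))
```

k-NN stores the training set rather than a fitted model, so every prediction scans all samples; this is one reason it scales poorly to the large pixel counts of VHR scenes.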

To our knowledge, there are no extensive studies exploring the best methods for fine-scale seasonal delineation of trees, shrubs, bare soil, and grasses in African savanna. This study is the first to comprehensively investigate how best to classify the land cover components of an African savanna, a biome characterized by a very high level of heterogeneity. The novelty of this study lies in the combination of several factors: the investigated biome, the fine-scale separation of land cover components, the context of seasonality, the set of tested classifiers, and the application of advanced satellite imagery. In particular, we examined the performance of selected traditional and machine learning algorithms with object- and pixel-based classification approaches applied to wet and dry season WorldView-2 imagery. We hypothesized that: (1) the OBIA approach performs better than the pixel-based method in the highly heterogeneous landscape of African savanna in both seasons; (2) more advanced machine learning algorithms outperform traditional classifiers; and (3) in the wet or peak productivity season, WorldView-2 scenes provide better classification results than in the leaf-off dry season.

4. Discussion

Findings of this study revealed that classification based on WV-2 imagery produces highly accurate results regardless of the classification approach used. The combination of very high spatial resolution and extended spectral bands (especially yellow and red-edge) of WorldView-2 constitutes an invaluable and unique dataset for discriminating the woody, herbaceous, and bare components of African savannas. Advantages of using WV-2 images for fine-scale land cover classification of urban and natural landscapes were also reported by other authors (e.g., [7,48,49]). However, this is the first time that WV-2 has been used for fine-scale classification of African savannas, demonstrating the effectiveness of this imagery in a highly heterogeneous landscape. With WV-2 images we were able to classify individual trees, tree clumps, shrub patches, grass and bare soil, moving one step beyond “traditional” savanna land cover mapping with mixed components towards a more discrete classification. This might not be possible with IKONOS or Landsat imagery [17,60]. WV-2 provides a degree of spatial detail and geometric precision comparable to aerial photographs [61] and multispectral information that enables more options for digital analysis [7]. The high accuracies achieved in this study can be explained by the fact that WorldView-2 provides not only high spatial resolution but also a unique band combination. Based on random forest feature importance, we found that the yellow and red-edge bands in the peak productivity season are important for discriminating vegetation components in the savanna ecosystem. The contribution of these bands to mapping vegetation components has been reported by several authors [7,62,63]. The yellow and red-edge bands are able to record variations in pigment concentration (e.g., chlorophyll, carotenoids, anthocyanins) in plants, enabling the detection of species or vegetation communities [7,17]. Only LiDAR and/or airborne hyperspectral imagery can produce similar or better classification results than WorldView-2. However, as these techniques are costly, require substantial preprocessing and are often not suitable for regional-scale mapping, the methodology used in this study provides a valuable alternative.
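Feature importance rests on the idea that disrupting an informative input should degrade accuracy. One common random forest variant, permutation importance (mean decrease in accuracy), shuffles one feature in each tree’s out-of-bag samples and averages the resulting accuracy drop; the text above does not state which importance measure was used. The Python sketch below illustrates only the permutation idea. For brevity it swaps in a simple nearest-centroid classifier instead of a random forest, and it uses hypothetical two-feature data in which feature 0 separates the classes and feature 1 is pure noise. All function names are illustrative.

```python
import random
from statistics import fmean


def fit_centroids(X, y):
    """Nearest-centroid 'model': per-class mean of every feature."""
    model = {}
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        model[c] = [fmean(col) for col in zip(*rows)]
    return model


def predict(model, x):
    """Assign x to the class with the closest centroid (squared Euclidean distance)."""
    return min(model, key=lambda c: sum((a - b) ** 2 for a, b in zip(x, model[c])))


def accuracy(model, X, y):
    return fmean(1.0 if predict(model, x) == label else 0.0 for x, label in zip(X, y))


def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Mean drop in accuracy when one feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)
            # Rebuild each sample with feature j replaced by a shuffled value
            X_perm = [tuple(x[:j]) + (v,) + tuple(x[j + 1:]) for x, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(fmean(drops))
    return importances


def make_samples(n, rng):
    """Hypothetical data: feature 0 separates the classes, feature 1 is noise."""
    X, y = [], []
    for i in range(n):
        label = "grass" if i % 2 == 0 else "shrub"
        informative = (0.2 if label == "grass" else 0.5) + rng.gauss(0, 0.03)
        noise = rng.gauss(0.3, 0.02)
        X.append((informative, noise))
        y.append(label)
    return X, y


if __name__ == "__main__":
    rng = random.Random(42)
    X_train, y_train = make_samples(100, rng)
    X_test, y_test = make_samples(100, rng)  # held-out data for the importance estimate
    model = fit_centroids(X_train, y_train)
    print(permutation_importance(model, X_test, y_test))
```

Shuffling the informative feature drops accuracy to roughly chance level, while shuffling the noise feature leaves it unchanged, which is the pattern an importance ranking relies on.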

In our study, both pixel- and object-based classifications generally produced high accuracies (≥77%) regardless of the season considered. However, the latter approach had a significantly higher accuracy for almost every classifier, with the highest overall accuracy of 93%. This is consistent with several other studies indicating the superiority of OBIA in a range of environments [5,31,64]. In particular, Whiteside et al. (2011) [5] concluded that OBIA outperforms pixel-based classification for medium and high resolution satellite imagery in Australian savannas. Moreover, the study of Gibbes et al. (2010) [64] indicates that OBIA has great potential for discriminating African woodland savanna from shrub-dominated and grassland patches using IKONOS high resolution imagery. Our study goes further, demonstrating that object-based classification of imagery characterized by a small pixel size and 8 spectral bands provides tools for fine-level differentiation of spectrally similar vegetation components in the African savanna ecosystem. In the object-based approach, thanks to hierarchical segmentation, very small objects with similar spectral information, like tree crowns, shrubs and shrub/tree patches, can be delineated, which is not possible with pixel-based methods. This leads to more thematically consistent mapping, eliminating the “salt-and-pepper” effect that appears on maps generated with the pixel-based method. This is evident when comparing our OBIA and pixel-based results for the best performing classifiers: trees and shrubs showed some of the largest improvements in user’s accuracy in both seasons. A better performance of OBIA over the pixel-based method with WorldView-2 imagery in detecting woody vegetation or even tree species was also reported by Ghosh and Joshi (2014) [7] and Immitzer et al. (2012) [62]. Furthermore, our results suggest that OBIA can be useful for crown shadow detection in the dry season, when the spectral information from shadowed areas is more consistent, as it is not confounded by the still strong photosynthetic signal of the underlying vegetation.
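Overall, user’s and producer’s accuracy are all derived from a confusion matrix. The Python sketch below assumes that rows hold the classified (map) labels and columns the reference labels; under that convention, user’s accuracy divides each diagonal entry by its row total and producer’s accuracy by its column total. The function name is illustrative and the matrix values are hypothetical, not results from this study.

```python
def accuracy_measures(matrix):
    """Compute overall, user's and producer's accuracy from a square confusion matrix.

    Convention assumed here: matrix[i][j] = number of samples classified as
    class i whose reference class is j (rows = map, columns = reference).
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    diagonal = [matrix[i][i] for i in range(n)]
    overall = sum(diagonal) / total
    # User's accuracy: correct / all samples mapped as that class (row total)
    users = [diagonal[i] / sum(matrix[i]) for i in range(n)]
    # Producer's accuracy: correct / all reference samples of that class (column total)
    producers = [diagonal[j] / sum(matrix[i][j] for i in range(n)) for j in range(n)]
    return overall, users, producers


if __name__ == "__main__":
    classes = ["tree", "shrub", "grass", "bare soil"]
    # Hypothetical counts (rows: classified, columns: reference)
    matrix = [
        [45, 5, 0, 0],
        [4, 38, 6, 0],
        [1, 5, 40, 2],
        [0, 2, 4, 48],
    ]
    overall, users, producers = accuracy_measures(matrix)
    print(f"overall accuracy: {overall:.3f}")
    for name, ua, pa in zip(classes, users, producers):
        print(f"{name:>9}: user's {ua:.3f}, producer's {pa:.3f}")
```

Reporting both measures per class shows whether a class suffers mainly from commission errors (low user’s accuracy) or omission errors (low producer’s accuracy).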

All tested classifiers (except ML in the dry season classification) performed significantly better with OBIA. This is consistent with the study of Ghosh and Joshi (2014) [7], in which SVM and RF classifiers produced much higher accuracy for fine-scale bamboo mapping with OBIA than with pixel-based methods. However, Duro et al. (2012) [34] did not find any statistically significant differences in the performance of CART, SVM and RF between the two classification methods using medium resolution imagery. These results might indicate that the difference in classifier performance between OBIA and pixel-based methods becomes more pronounced with increasing spatial resolution. Overall, SVM and RF outperformed the other classifiers in both approaches, regardless of the season. SVM and RF algorithms have previously been applied successfully in vegetation mapping with high and very high resolution imagery [34,62,65]. Both classifiers are non-parametric and thus do not assume a known statistical distribution of the data. This allows SVM and RF to outperform widely used classification methods based on maximum likelihood, as remotely sensed data usually have unknown distributions [52,66]. Furthermore, one of the most useful characteristics of SVM and RF for land cover classification is their ability to generalize well from a limited amount of training data [52,62]. Pal (2005) [67] and Duro et al. (2012) [34] reported that SVM and RF can produce similar classification accuracies, which supports our findings. However, to achieve the best classification results with SVM, the number of input features and the amount of training data should be well balanced, as the accuracy of SVM classification has been shown to decline with more features, especially when using a small training sample [7,68]. In contrast, RF handles collinearity among variables much better than SVM and can therefore produce highly accurate results with more variables included in the model.
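The contrast between parametric and non-parametric classifiers is easiest to see in code. A maximum likelihood classifier fits a Gaussian distribution to each class and assigns a sample to the class with the highest likelihood, so its decisions are only as good as that distributional assumption. The Python sketch below is a simplified version that treats bands as independent (diagonal covariance) and uses equal class priors; the standard remote sensing ML classifier uses the full covariance matrix. The function names and the two-band training values are hypothetical.

```python
from math import log, pi
from statistics import fmean, pvariance


def fit_gaussian_ml(X, y):
    """Estimate a per-class mean and variance for every band (assumes independent bands)."""
    model = {}
    for c in sorted(set(y)):
        columns = list(zip(*[x for x, label in zip(X, y) if label == c]))
        means = [fmean(col) for col in columns]
        variances = [pvariance(col, mu) for col, mu in zip(columns, means)]
        model[c] = (means, variances)
    return model


def gaussian_log_likelihood(x, means, variances):
    """Log of a product of independent one-dimensional normal densities."""
    return sum(
        -0.5 * (log(2 * pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, means, variances)
    )


def classify_ml(model, x):
    """Equal priors: pick the class whose Gaussian gives x the highest likelihood."""
    return max(model, key=lambda c: gaussian_log_likelihood(x, *model[c]))


if __name__ == "__main__":
    # Hypothetical two-band training samples
    X = [(0.10, 0.40), (0.12, 0.42), (0.11, 0.38), (0.30, 0.25), (0.32, 0.27), (0.29, 0.24)]
    y = ["tree", "tree", "tree", "bare soil", "bare soil", "bare soil"]
    model = fit_gaussian_ml(X, y)
    print(classify_ml(model, (0.11, 0.41)))
    print(classify_ml(model, (0.31, 0.26)))
```

If a class is multimodal in feature space, a single Gaussian per class describes it poorly, which is the assumption that non-parametric methods such as SVM and RF avoid. A band with zero variance within a class would also need regularization before this sketch could be applied.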

All surfaces show some degree of spectral reflectance anisotropy when illuminated by sunlight, which is described by the Bidirectional Reflectance Distribution Function (BRDF) [69]. It is therefore important to note that, given the varying viewing angles, the BRDF might have affected the classification accuracies presented in this study, as shown, for instance, by Sue et al. (2009) [69], Vanonckelen et al. (2013) [70] and Wu and Cihlar (1995) [71].

Our study demonstrates that it is possible to successfully distinguish savanna land cover components during peak vegetation productivity (March, end of summer) using VHR WV-2 imagery. Although the results of the dry season classifications were also satisfactory, their accuracies were on average 10% lower than during the wet season. The dry season result is nevertheless particularly useful, as wet season imagery, although providing the best classification accuracy, is often unavailable due to persistent cloud cover. Wet season imagery provided much better results in discriminating shrubs than dry season imagery. Shrubs are generally difficult to separate because their canopies are smaller than those of trees and poorly defined in shape (e.g., multiple stems and coppices). They are therefore often confused with either smaller trees or taller herbaceous vegetation. As shrub cover is well developed during the rainy season, its richer spectral information makes it easier to differentiate with 8-band VHR imagery like WV-2. Besides shrubs, bare soil also showed higher accuracies with the wet season imagery. This can be attributed to the fact that in the dry season bare soil might be confused with wilting grasses or burnt land. Interestingly, WV-2 imagery combined with OBIA was very successful (producer’s accuracy of 91%) in detecting tree cover in the dry season, when deciduous trees are mostly leafless. This is probably due to the small pixel size, which allows accurate delineation of leafless tree branches during the segmentation process, combined with a set of texture features that improve the classification results. Furthermore, as deciduous tree species are not the major contributors to canopy characteristics in the study area, the remaining evergreen species contrast more strongly in reflectance with the senescing grasses in the dry season, making them easier to classify. Similar results were found by Boggs (2010) [14], who reported that a combination of QuickBird imagery and OBIA produces highly accurate dry season tree cover detection in Kruger National Park.

A possible future improvement to the fine-scale classification of the savanna biome is to apply multi-temporal WV-2 images with OBIA. The multi-temporal approach to vegetation classification has been used successfully by several authors, proving superior to single-image or single-season classification [9,72]. Covering different phenological stages of vegetation could improve the recognition of grass, shrubs and trees in savanna. However, the application of fine-scale multi-temporal images requires very high resolution digital surface models, and the necessity of proper image alignment might constitute a limiting factor for the application of a multi-temporal approach.