Automated Detection of Buildings from Heterogeneous VHR Satellite Images for Rapid Response to Natural Disasters

Abstract

1. Introduction

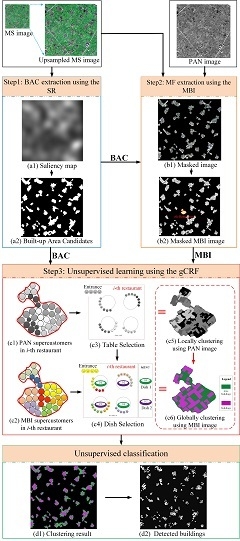

2. Proposed Method

2.1. Relationship between Components

2.2. Algorithm for VHR Satellite Images

2.3. Mathematical Description of Algorithm for VHR Satellite Images

2.3.1. SR

- (1) A frequency image $f$ of the upsampled MS image $I(x)$ is obtained by the Fourier transform:

  $$f = \mathcal{F}\left(I(x)\right),$$

  where $\mathcal{F}$ denotes the Fourier transform.

- (2) The SR $R(f)$ is defined as follows:

  $$R(f) = L(f) - h(f) \ast L(f),$$

  where $L(f) = \log\left(A(f)\right)$, $A(f) = \operatorname{abs}(f)$ is the amplitude spectrum, $h(f)$ is a $3 \times 3$ local average filter, and $\ast$ denotes convolution. Correspondingly, $R(f)$ carries the information of the built-up areas in the input image.

- (3) The saliency map $S(x)$ in the spatial domain is constructed by the inverse Fourier transform:

  $$S(x) = \mathcal{F}^{-1}\left[\exp\left(R(f) + P(f)\right)\right]^{2},$$

  where $\mathcal{F}^{-1}$ denotes the inverse Fourier transform and $P(f) = \operatorname{angle}(f)$ is the phase spectrum.
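Steps (1) to (3) can be sketched in NumPy as below. This is a minimal illustration, not the authors' code, and it rests on several assumptions: the input is a single band (the text does not say how the bands of the upsampled MS image are combined); the phase enters the exponent as $iP(f)$ and the squared magnitude is taken, which is the usual numerical reading of step (3); a small `eps` guards against $\log 0$; and the $3 \times 3$ average uses wrap-around padding because the DFT spectrum is periodic. The Gaussian smoothing and image downscaling used in the original spectral residual work are omitted because the text does not mention them. The function names are hypothetical.

```python
import numpy as np


def box_filter3(a: np.ndarray) -> np.ndarray:
    """3 x 3 local average h(f) * a. Wrap-around padding is used because
    the DFT spectrum is periodic."""
    p = np.pad(a, 1, mode="wrap")
    h, w = a.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def spectral_residual_saliency(img: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Saliency map S(x) of a single-band image I(x) via the spectral residual."""
    f = np.fft.fft2(img)                        # step (1): f = F(I(x))
    amplitude = np.abs(f)                       # A(f) = abs(f)
    phase = np.angle(f)                         # P(f) = angle(f)
    log_amp = np.log(amplitude + eps)           # L(f); eps guards against log(0)
    residual = log_amp - box_filter3(log_amp)   # step (2): R(f) = L(f) - h * L(f)
    # step (3): the phase enters as i*P(f); the squared magnitude gives a real map
    return np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.random((64, 64))
    img[20:28, 30:38] += 2.0  # toy bright block standing in for a roof
    sal = spectral_residual_saliency(img)
    print(sal.shape, float(sal.max()))
```

The output has the same shape as the input and is non-negative; in a pipeline like the one described, it would typically be thresholded to obtain candidate built-up regions.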

2.3.2. MBI

- (1) The brightness value $b(x)$ for pixel $x$ of the masked stacked image is calculated as follows:

  $$b(x) = \max_{1 \le k \le K}\left(band_k(x)\right),$$

  where $band_k(x)$ indicates the spectral value of pixel $x$ in the $k$th band and $K$ is the number of bands.

- (2) The white top-hat of the brightness image is defined as follows:

  $$MP_{W\text{-}TH}(d, s) = b - \gamma_{b}^{re}(d, s),$$

  where $\gamma_{b}^{re}(d, s)$ denotes the opening-by-reconstruction of the brightness image $b$, and $s$ and $d$ indicate the scale and direction of a linear structure element (SE), respectively. The differential morphological profiles (DMPs) of the white top-hat are then defined as follows:

  $$DMP_{W\text{-}TH}(d, s) = \left| MP_{W\text{-}TH}(d, s + \Delta s) - MP_{W\text{-}TH}(d, s) \right|,$$

  where $\Delta s$ is the interval of the profiles, with $s_{\min} \le s \le s_{\max}$. The SE sizes ($s_{\min}$, $s_{\max}$, and $\Delta s$) are determined according to the resolution of the image and the characteristics of buildings ($s_{\min} = 2$, $s_{\max} = 52$, and $\Delta s = 5$ in this paper).

- (3) The MBI of the built-up areas is defined as the average of their DMPs:

  $$MBI = \frac{\sum_{d}\sum_{s} DMP_{W\text{-}TH}(d, s)}{D \times S},$$

  where $D$ and $S$ denote the numbers of directions and scales of the profiles, respectively. Four directions are considered in this paper ($D = 4$), because increasing $D$ did not improve the accuracy of building detection. The number of scales is $S = (s_{\max} - s_{\min})/\Delta s + 1$.
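Steps (1) to (3) of the MBI can be sketched in pure NumPy as below. This is an illustrative sketch under stated assumptions, not the authors' implementation: brightness uses the per-pixel maximum over bands; the four linear SEs lie at 0°, 45°, 90°, and 135°, with offsets running from $-\lfloor s/2 \rfloor$; opening-by-reconstruction is implemented as erosion by the linear SE followed by geodesic reconstruction by dilation with a $3 \times 3$ square; and image borders use edge replication. Because $DMP_{W\text{-}TH}(d, s)$ needs the profile at $s + \Delta s$, one scale beyond $s_{\max}$ is computed. The defaults follow the parameters given in the text ($s_{\min} = 2$, $s_{\max} = 52$, $\Delta s = 5$, so $S = (52 - 2)/5 + 1 = 11$). All function names are hypothetical.

```python
import numpy as np


def brightness(bands: np.ndarray) -> np.ndarray:
    """Step (1): b(x) = max_k band_k(x) for a (K, H, W) band stack."""
    return np.asarray(bands, dtype=float).max(axis=0)


def _shift(a, dy, dx, r):
    """Return a[y + dy, x + dx] using edge replication; requires r >= |dy|, |dx|."""
    p = np.pad(a, r, mode="edge")
    h, w = a.shape
    return p[r + dy:r + dy + h, r + dx:r + dx + w]


def linear_se(direction_deg, length):
    """(dy, dx) offsets of a linear SE of `length` pixels at 0, 45, 90 or 135 degrees."""
    step = {0: (0, 1), 45: (-1, 1), 90: (1, 0), 135: (1, 1)}[direction_deg]
    ts = np.arange(length) - length // 2
    return [(int(t) * step[0], int(t) * step[1]) for t in ts]


def erode(a, offsets):
    """Grayscale erosion: minimum of `a` over the SE offsets."""
    r = max(max(abs(dy), abs(dx)) for dy, dx in offsets)
    return np.min([_shift(a, dy, dx, r) for dy, dx in offsets], axis=0)


def reconstruct_by_dilation(marker, mask):
    """Geodesic reconstruction: dilate `marker` (3 x 3) under `mask` until stable."""
    square = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    current = np.minimum(marker, mask)
    while True:
        dilated = np.max([_shift(current, dy, dx, 1) for dy, dx in square], axis=0)
        nxt = np.minimum(dilated, mask)
        if np.array_equal(nxt, current):
            return current
        current = nxt


def opening_by_reconstruction(b, direction_deg, length):
    return reconstruct_by_dilation(erode(b, linear_se(direction_deg, length)), b)


def mbi(b, s_min=2, s_max=52, delta_s=5, directions=(0, 45, 90, 135)):
    """Steps (2)-(3): mean of the white top-hat DMPs over D directions and S scales."""
    b = np.asarray(b, dtype=float)
    n_scales = (s_max - s_min) // delta_s + 1                 # S
    # DMP(d, s) needs MP(d, s + delta_s), so one extra scale is computed.
    scales = [s_min + i * delta_s for i in range(n_scales + 1)]
    total = np.zeros_like(b)
    for d in directions:
        # White top-hat MP_W-TH(d, s) = b - opening-by-reconstruction(d, s)
        mp = [b - opening_by_reconstruction(b, d, s) for s in scales]
        for i in range(n_scales):
            total += np.abs(mp[i + 1] - mp[i])                # DMP_W-TH(d, s)
    return total / (len(directions) * n_scales)               # divide by D x S


if __name__ == "__main__":
    bands = np.zeros((3, 20, 20))
    bands[:, 7:13, 7:13] = np.array([0.2, 1.0, 0.5])[:, None, None]  # toy 6 x 6 roof
    m = mbi(brightness(bands), s_min=2, s_max=12, delta_s=5)
    print(round(float(m[10, 10]), 3), float(m[0, 0]))
```

On this toy scene the roof scores about 0.33 and the flat background scores 0: only the 2-pixel SEs fit inside the 6 × 6 roof in every direction, so the top-hat jumps once between $s = 2$ and $s = 7$, giving one unit DMP per direction out of $D \times S = 12$ terms. Smaller scales are used here only so the example runs quickly.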

2.3.3. gCRF

2.4. Algorithm for Multiple Heterogeneous VHR Satellite Images

3. Experimental Results and Discussion

3.1. Experimental Setting

3.1.1. Experimental Data

3.1.2. Evaluation Method

3.2. Interaction between Components in Proposed Method

3.2.1. SR and gCRF

3.2.2. MBI and gCRF

3.2.3. MBI and SR

3.3. Performance Evaluation

3.3.1. Number of Images

3.3.2. gCRF_MBI Compared to Spectral-Based Methods

3.3.3. gCRF_MBI Compared to MBI-Based Methods

4. Discussion

4.1. Hierarchical Image Analysis Units

4.2. Feature Fusion in a Probabilistic Framework

4.3. Multiple Methods of Application

5. Conclusions

- (1) We propose a novel unsupervised classification framework for mapping buildings from multiple heterogeneous VHR satellite images by fusing two layers of image information in a unified hierarchical model. The first layer reshapes over-segmented superpixels into potential individual buildings. The second layer discriminates buildings from non-buildings using the MFs of the candidates. Owing to the flexible hierarchical structure of the probabilistic model, a separate model is learned for each image in the first layer, while a probabilistic distribution of buildings and non-buildings is inferred for all images in the second layer.

- (2) Compared with traditional methods, the combination of multiple features in the proposed method eliminates the need to fine-tune model parameters from one image to another. The proposed method is therefore better suited to automatically detecting buildings from multiple heterogeneous and uncalibrated VHR satellite images.

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Hoffmann, J. Mapping damage during the Bam (Iran) earthquake using interferometric coherence. Int. J. Remote Sens. 2007, 28, 1199–1216.

- Hussain, E.; Ural, S.; Kim, K.; Fu, C.S.; Shan, J. Building extraction and rubble mapping for city of Port-au-Prince post-2010 earthquake with GeoEye-1 imagery and Lidar data. Photogramm. Eng. Remote Sens. 2011, 77, 1011–1023.

- Matsuoka, M.; Yamazaki, F. Building damage mapping of the 2003 Bam, Iran Earthquake using Envisat/ASAR intensity imagery. Earthq. Spectra 2005, 21, S285–S294.

- Vu, T.; Ban, Y. Context-based mapping of damaged buildings from high-resolution optical satellite images. Int. J. Remote Sens. 2010, 31, 3411–3425.

- Ok, A.O. Automated detection of buildings from single VHR multispectral images using shadow information and graph cuts. ISPRS J. Photogramm. Remote Sens. 2013, 86, 21–40.

- Kim, T.; Muller, J. Development of a graph-based approach for building detection. Image Vis. Comput. 1999, 17, 3–14.

- Huyck, C.; Adams, B.; Cho, S.; Chung, H.; Eguchi, R.T. Towards rapid citywide damage mapping using neighborhood edge dissimilarities in very high-resolution optical satellite imagery—Application to the 2003 Bam, Iran earthquake. Earthq. Spectra 2005, 21, S255–S266.

- Ishii, M.; Goto, T.; Sugiyama, T.; Saji, H.; Abe, K. Detection of earthquake damaged areas from aerial photographs by using color and edge information. In Proceedings of the 5th Asian Conference on Computer Vision, Melbourne, Australia, 22–25 January 2002.

- Wang, D. A method of building edge extraction from very high resolution remote sensing images. Environ. Prot. Circ. Econ. 2009, 29, 26–28.

- Sirmacek, B.; Unsalan, C. Urban-area and building detection using SIFT keypoints and graph theory. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1156–1167.

- Miura, H.; Modorikawa, S.; Chen, S. Texture characteristics of high-resolution satellite images in damaged areas of the 2010 Haiti Earthquake. In Proceedings of the 9th International Workshop on Remote Sensing for Disaster Response, Stanford, CA, USA, 15–16 September 2011.

- Duan, F.; Gong, H.; Zhao, W. Collapsed houses automatic identification based on texture changes of post-earthquake aerial remote sensing image. In Proceedings of the 18th International Conference on Geoinformatics, Beijing, China, 18–20 June 2010.

- Pesaresi, M.; Benediktsson, J. A new approach for the morphological segmentation of high-resolution satellite imagery. IEEE Trans. Geosci. Remote Sens. 2001, 39, 309–320.

- Fauvel, M.; Benediktsson, J.; Chanussot, J. Spectral and spatial classification of hyperspectral data using SVMs and morphological profiles. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3804–3814.

- Shackelford, A.; Davis, C. A combined fuzzy pixel-based and object-based approach for classification of high-resolution multispectral data over urban areas. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2354–2363.

- Huang, X.; Zhang, L. Morphological building/shadow index for building extraction from high-resolution imagery over urban areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 161–172.

- Pesaresi, M.; Guo, H.; Blaes, X.; Ehrlich, D.; Ferri, S.; Gueguen, L.; Halkia, M.; Kauffmann, M.; Kemper, T.; Lu, L.; et al. A global human settlement layer from optical HR/VHR RS data: Concept and first results. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2102–2131.

- San, D.; Turker, M. Support vector machines classification for finding building patches from IKONOS imagery: The effect of additional bands. J. Appl. Remote Sens. 2014, 8, 083694.

- Pal, M.; Foody, G. Evaluation of SVM, RVM and SMLR for accurate image classification with limited ground data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1344–1355.

- Katartzis, A.; Sahli, H. A stochastic framework for the identification of building rooftops using a single remote sensing image. IEEE Trans. Geosci. Remote Sens. 2008, 46, 259–271.

- Benedek, C.; Descombes, X.; Zerubia, J. Building development monitoring in multitemporal remotely sensed image pairs with stochastic birth-death dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 33–50.

- Li, E.; Femiani, J.; Xu, S.; Zhang, X.; Wonka, P. Robust rooftop extraction from visible band images using higher order CRF. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4483–4495.

- Li, E.; Xu, S.; Meng, W.; Zhang, X. Building extraction from remotely sensed images by integrating saliency cue. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 906–919.

- Anees, A.; Aryal, J.; O’Reilly, M.; Gale, T.J.; Wardlaw, T. A robust multi-kernel change detection framework for detecting leaf beetle defoliation using Landsat 7 ETM+ data. ISPRS J. Photogramm. Remote Sens. 2016, 122, 167–178.

- Mao, T.; Tang, H.; Wu, J.; Jiang, W.; He, S.; Shu, Y. A generalized metaphor of Chinese restaurant franchise to fusing both panchromatic and multispectral images for unsupervised classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4594–4604.

- Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Li, S.; Tang, H.; Yang, X. Spectral residual model for rural residential region extraction from GF-1 satellite images. Math. Probl. Eng. 2016, 1–13.

- Huang, X.; Zhang, L. A multidirectional and multiscale morphological index for automatic building extraction from multispectral GeoEye-1 imagery. Photogramm. Eng. Remote Sens. 2011, 77, 721–732.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Ok, A.; Senaras, C.; Yuksel, B. Automated detection of arbitrarily shaped buildings in complex environments from monocular VHR optical satellite imagery. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1701–1717.

- Chang, C.; Lin, C. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–39.

- Shu, Y.; Tang, H.; Li, J.; Mao, T.; He, S.; Gong, A.; Chen, Y.; Du, H. Object-Based Unsupervised Classification of VHR Panchromatic Satellite Images by Combining the HDP and IBP on Multiple Scenes. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6148–6162.

- Li, S.; Tang, H.; He, S.; Shu, Y.; Mao, T.; Li, J.; Xu, Z. Unsupervised Detection of Earthquake-Triggered Roof-Holes from UAV Images Using Joint Color and Shape Features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1823–1827.

- Anees, A.; Aryal, J.; O’Reilly, M.; Gale, T.J. A Relative Density Ratio-Based Framework for Detection of Land Cover Changes in MODIS NDVI Time Series. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 3359–3371.

- Anees, A.; Aryal, J. Near-Real Time Detection of Beetle Infestation in Pine Forests Using MODIS Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 3713–3723.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Zhang, L.; Yang, K. Region-of-interest extraction based on frequency domain analysis and salient region detection for remote sensing image. IEEE Geosci. Remote Sens. Lett. 2013, 11, 916–920.

| Acronyms | Full Names |
|---|---|
| VHR | Very high resolution |
| BAC | Built-up area candidate |
| MS | Multispectral |
| PAN | Panchromatic |
| MBI | Morphological building index |
| MF | Morphological feature |
| SVM | Support vector machine |
| RVM | Relevance vector machine |
| SMLR | Sparse multinomial logistic regression |
| LSPC | Least squares probabilistic classifier |
| MRF | Markov random field |
| gCRF | Generalized Chinese Restaurant Franchise |
| SR | Spectral residual |
| DMP | Differential morphological profile |
| SE | Structure element |
| UTC | Coordinated Universal Time |
| MV | Majority vote |

| Satellite sensor | PAN resolution (m) | PAN band (µm) | MS resolution (m) | MS bands (µm) |
|---|---|---|---|---|
| QuickBird | 0.6 | 0.45–0.90 | 2.4 | 0.45–0.52, 0.52–0.60, 0.63–0.69, 0.76–0.90 |
| Pléiades | 0.5 | 0.47–0.83 | 2.0 | 0.43–0.55, 0.50–0.62, 0.59–0.71, 0.74–0.94 |

| Methods | Recall | Precision | F-Value |
|---|---|---|---|
| gCRF | 61.17% | 35.43% | 44.88% |
| SR+gCRF | 78.19% | 61.86% | 69.07% |
| MBI+SR+gCRF | 51.53% | 39.78% | 44.90% |
| The proposed method | 86.39% | 75.62% | 80.65% |

| Images | Recall | Precision | F-Value |
|---|---|---|---|
| Only QuickBird image | 86.39% | 75.62% | 80.65% |
| “generalized-images” (for QuickBird image) | 86.19% | 75.36% | 80.41% |
| Only Pléiades image | 80.73% | 72.73% | 76.52% |
| “generalized-images” (for Pléiades image) | 80.49% | 72.42% | 76.24% |
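Assuming the F-value in the two tables above is the standard F1 score, that is, the harmonic mean $F = 2RP/(R + P)$ of recall $R$ and precision $P$, each row can be checked from its own recall and precision. The short sketch below does that check; the function and variable names are illustrative only.

```python
def f_value(recall: float, precision: float) -> float:
    """Standard F1 score: harmonic mean of recall and precision (both in %)."""
    return 2 * recall * precision / (recall + precision)


# (method or image set, recall %, precision %, reported F-value %) from the tables
ROWS = [
    ("gCRF", 61.17, 35.43, 44.88),
    ("SR+gCRF", 78.19, 61.86, 69.07),
    ("MBI+SR+gCRF", 51.53, 39.78, 44.90),
    ("The proposed method", 86.39, 75.62, 80.65),
    ("Only QuickBird image", 86.39, 75.62, 80.65),
    ("generalized-images (QuickBird)", 86.19, 75.36, 80.41),
    ("Only Pléiades image", 80.73, 72.73, 76.52),
    ("generalized-images (Pléiades)", 80.49, 72.42, 76.24),
]

if __name__ == "__main__":
    for name, r, p, reported in ROWS:
        print(f"{name}: computed {f_value(r, p):.2f}%, reported {reported:.2f}%")
```

Every recomputed value agrees with the reported one to within about 0.01 percentage points, which is consistent with the recall and precision in the tables having been rounded before printing.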

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, S.; Tang, H.; Huang, X.; Mao, T.; Niu, X. Automated Detection of Buildings from Heterogeneous VHR Satellite Images for Rapid Response to Natural Disasters. Remote Sens. 2017, 9, 1177. https://doi.org/10.3390/rs9111177
