1. Introduction

Accurate topographic mapping is critical for environmental applications along many low-lying coasts, including inter-tidal zones, because elevation affects hydrodynamics and vegetation distribution [1]. Small elevation changes can alter sediment stability, nutrients, organic matter, tides, salinity, and vegetation growth, and may therefore cause significant vegetation transitions in relatively flat wetlands [2,3]. Furthermore, topographic information is a prerequisite for extracting vegetation structural characteristics such as canopy height, vegetation cover, and biomass from remote sensing data [4,5,6,7,8].

For decades, researchers have used various remote sensing techniques such as RADAR, Light Detection And Ranging (LiDAR), stereo photogrammetric mapping, altimetry, and GPS to map landscapes at various scales [9,10,11,12,13,14,15]. However, accurate topographic mapping in coastal areas remains challenging because of complex hydrodynamics, ever-changing landscapes, low elevation, and dense vegetation cover [2,3]. As a common tool for topographic mapping, airborne LiDAR has typical sensor measurement accuracies between 0.10 m and 0.20 m [3,7]. Previous studies based on airborne LiDAR commonly reported mean terrain errors from 0.07 m to 0.17 m in marshes [3] and as high as 0.31 m in areas with relatively tall vegetation [2,16]. Errors increase further with denser and taller vegetation and may reach a challenging “dead zone” if the marsh vegetation height is close to or beyond 2 m [3]. Because many airborne LiDAR missions collect data during winter, when many vegetation species die off or become sparser and flagging and laser penetration is therefore better, terrain mapping in seasons or wetland types with fuller vegetation biomass will produce even lower accuracies. These studies demonstrate severe errors in current coastal topographic mapping, which will have significant impacts on broad applications such as wetland habitat mapping [2], change monitoring [9], and modeling of flood [17], inundation [10], storm surge, and sea level rise [17].

Terrain mapping under dense vegetation in wetlands remains challenging because several characteristics of coastal environments complicate it. First, in low-relief topography, tidal and weather-induced variations in water level affect how much land is exposed to air. Second, dense vegetation with large coverage often leaves too little exposed ground for ground filtering and terrain interpolation. Third, the limited spectral information from high-density or high-resolution remote sensors, combined with the presence of water and high soil moisture, makes land cover mapping extremely difficult. Fourth, intense land dynamics and the inability to obtain data promptly hinder many applications such as wetland restoration. Therefore, there is a great need for more accurate and rapid topographic mapping in coastal environments.

In recent years, terrain correction has emerged as a new approach to improving terrain mapping in areas with dense vegetation. Hladik, Schalles and Alber [2] demonstrated an approach to correct wetland terrain from airborne LiDAR data by integrating hyperspectral images and GPS surveys of terrain samples. However, terrain correction based on multiple historical data sets is limited by data availability, resolution differences, time discrepancies, and restriction to unchanged areas. Discrepancies among the data, or seasonal and annual landscape changes, can introduce significant errors in ever-changing coastal environments affected by hydrodynamics, hazards, and human activities. Furthermore, LiDAR offers the best high-resolution terrain mapping [18,19] among current mapping technologies, but the equipment is costly. Frequent deployment of airborne LiDAR for surveys is cost-prohibitive, which calls for an affordable method for rapid and accurate measurements that does not rely on outdated historical data [2,3].

Recent developments in unmanned aerial vehicles (UAVs) provide a promising solution for general mapping applications. Salient features of UAV systems and data include low cost, ultra-high spatial resolution, non-intrusive surveying, high portability, flexible mapping schedules, rapid response to disturbances, and ease of multi-temporal monitoring [20]. In particular, the expedited data acquisition process and the absence of any need for ground mounting locations make UAVs a favorable surveying method given the challenging mobility and accessibility in wetland environments.

In the past few decades, the UAV community has made significant progress in developing lightweight sensors and UAV systems [21,22] and in improving data preprocessing [23], registration [24,25,26,27], and image matching [28,29,30]. As a result, low-cost systems and automated mapping software have allowed users to conveniently apply UAVs to various applications including change detection [31], leaf area index (LAI) mapping [32], vegetation cover estimation [33], vegetation species mapping [34], water stress mapping [35], power line surveys [36], building detection and damage assessment [37,38], and comparison with terrestrial LiDAR surveys [39]. For more details, Watts et al. [40] gave a thorough review of UAV technologies with an emphasis on hardware and technical specifications. Colomina and Molina [20] reviewed general UAV usage in photogrammetry and remote sensing. Klemas [4] reviewed UAV applications in coastal environments.

Despite these successful applications, challenges remain, especially when deploying UAVs to map densely vegetated areas. Difficulties in deriving under-canopy terrain are common for point-based ground-filtering processes. UAV terrain mapping is therefore best suited to areas with sparse or no vegetation, such as sand dunes and beaches [41], coastal flat landscapes dominated by beaches [42], and arid environments [43]. Thus far, only a few studies have examined the efficacy of UAVs for topographic mapping over vegetated areas. Jensen and Mathews [5], for instance, constructed a digital terrain model (DTM) from UAV images for a woodland area and reported an overestimation error of 0.31 m. Their study site was relatively flat with sparse vegetation, where the ground-filtering algorithm picked up points from nearby exposed ground areas. Simpson et al. [44] used the LAStools suite to conduct ground filtering of UAV data in a burned tropical forest and showed that the impact of DTM resolution varied with vegetation type and that high errors remained in tall vegetation. More recently, Gruszczyński, Matwij and Ćwiąkała [39] introduced a ground-filtering method for UAV mapping in areas covered by low vegetation in a partially mowed grass field. Successful application of these ground-filtering methods depends on the assumption that adjusting the size of search windows can help pick up nearby ground points in sparsely vegetated and relatively flat areas with fragmented vegetation distribution. However, ground filtering in dense and tall vegetation was considered “pointless” because of the difficulty of penetration and the lack of ground points [39]. Thus, current developments in the UAV community provide no solution to the challenge of terrain mapping in densely vegetated coastal environments.

The goal of this study is to alleviate the difficulties of terrain mapping under dense vegetation cover and to develop a rapid mapping solution that does not depend on historical data. Because researchers often use external GPS units with higher accuracy to register UAV point clouds based on targets, and because the vegetation crown is visible under most hydrological conditions, this research proposes an algorithmic framework that corrects terrain based on land cover classification by integrating GPS samples with vegetation crown points measured from the UAV. Among the three main UAV types (photogrammetric, multispectral, and hyperspectral), the photogrammetric UAV is the most commonly used, with relatively low cost and a natural color camera. Many users therefore have access to photogrammetric UAV systems and often face the challenge of high-resolution mapping with limited spectral bands, which has a significant impact on classification accuracy. On the other hand, high-resolution images contain a tremendous amount of textural information, which is not always directly usable as input bands for traditional classification but can be exploited through logical reasoning based on texture and object-oriented analyses. Therefore, this research developed a novel object-oriented classification ensemble algorithm that integrates texture and contextual indicators with multi-input classifications. Evaluated using data for a coastal region in Louisiana, the validated approach provides a field survey solution for applying UAVs to terrain mapping in densely vegetated environments.

3. Methodology

3.1. Overview of an Object-Oriented Classification Ensemble Algorithm for Class and Terrain Correction

This section presents an object-oriented classification ensemble algorithm that applies photogrammetric UAV and GPS sampling for improved terrain mapping in densely vegetated environments. The conceptual workflow includes four main stages, as illustrated in Figure 3. Stage 1 conducts UAV mapping and generates an initial DTM using the well-established Structure from Motion (SfM) method. Stage 2 compares the classification result based on the orthophoto with those based on the orthophoto and a point statistic layer to derive two optimal classification results. Stage 3 uses the object-oriented classification ensemble method to conduct classification correction based on a set of spectral, texture, and contextual indicators. Stage 4 assigns a terrain correction factor to each class based on elevation samples from GPS surveys and conducts the final terrain correction. The ensemble method used here refers to several integrations at different stages: the multiple classification results, the analytical use of indicators, and the mechanism of integrating UAV and GPS surveys. The following sections illustrate and validate the algorithm through a densely vegetated coastal wetland restoration site.

3.2. Stage 1: Generation of Initial DTM

To ensure the quality of matching points from stereo mapping, a preprocessing step first screens the images by removing those of poor quality (e.g., images blurred by motion or out of focus) and enhancing sharpness, contrast, and saturation. The Pix4Dmapper software then processes the images to construct matching points, an orthophoto, and a DTM by combining the SfM algorithm with the Multi-View Stereo photogrammetry algorithm (SfM-MVS) [47]. The SfM-MVS method first applies the Scale Invariant Feature Transform (SIFT) to detect features in images with wide bases and then identifies the correspondences between features through high-dimensional descriptors from the SIFT algorithm. After elimination of erroneous matches, SfM uses bundle-adjustment algorithms to construct the 3D structure. The purpose of MVS is to increase the density of the point cloud by using images from wider baselines. In addition, Pix4Dmapper supports 3D georeferencing through manual input of GCP coordinates acquired from the GPS surveys. In the first step, the software automatically aligns the UAV images and generates a DSM, an orthophoto, and matching points based on the SfM-MVS algorithms. Pix4Dmapper provides an interactive approach to selecting the corresponding targets in the 3D point cloud for registration. A classification function then classifies matching points into terrain vs. object based on the minimum object height, minimum object length, and maximum object length, and interpolates the identified terrain points into a DTM. In some situations, terrain in water areas may contain abnormal elevation outliers caused by mismatched noisy points. Users should inspect the quality of the DTM and eliminate these outliers. For detailed information about SfM-MVS and Pix4Dmapper, please refer to Smith, Carrivick and Quincey [47].

3.3. Stage 2: Multi-Input Classification Comparison

One major challenge in this study is to conduct land classification based on photogrammetric UAV data without depending on historical data, which limits the data inputs to the three bands of the orthophoto and the matching points. To overcome this limitation and utilize the detailed textural and contextual characteristics in high-resolution and high-density data, this research explores the use of statistics and morphological characteristics derived from the matching points, including point density and maximum, minimum, maximum–minimum, and mean elevation, to enhance classification.
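As an illustration of the point statistic layers named above, the following minimal sketch bins (x, y, z) matching points into square cells and computes point density and maximum, minimum, maximum–minimum, and mean elevation per cell. The function name `point_statistics`, the dictionary layout, and the sample coordinates are assumptions made for this example; they are not the tools or data used in the study.

```python
from collections import defaultdict

def point_statistics(points, cell_size):
    """Bin (x, y, z) points into square cells and summarize elevation per cell.

    Returns a dict keyed by (column, row) cell index. Cells without points are
    simply absent, which corresponds to "no point density" in the text.
    """
    cells = defaultdict(list)
    for x, y, z in points:
        cells[(int(x // cell_size), int(y // cell_size))].append(z)

    stats = {}
    for key, zs in cells.items():
        stats[key] = {
            "density": len(zs),            # number of matching points in the cell
            "max": max(zs),
            "min": min(zs),
            "range": max(zs) - min(zs),    # maximum-minimum elevation
            "mean": sum(zs) / len(zs),
        }
    return stats

# Hypothetical points (metres) gridded at a 2 cm cell size.
sample_points = [(0.005, 0.005, 0.10), (0.015, 0.010, 0.30), (0.030, 0.010, 0.50)]
layers = point_statistics(sample_points, cell_size=0.02)
```

Each statistic can then be rasterized as one additional band alongside the three orthophoto bands.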

For classification algorithms, Ozesmi and Bauer [48] provided a thorough review of various classification methods for coastal and wetland studies. Among these methods, the support vector machine (SVM) classifier, a machine-learning algorithm, has demonstrated high robustness and reliability in numerous successful applications even with limited sample sizes, which is critical for sample-based studies given the challenging field accessibility in wetland environments [49,50,51,52]. Therefore, this research selected SVM to classify the UAV-based orthophoto and compared the results with those obtained by adding one of the statistical layers to the classification inputs, in order to identify two optimal classification results for the multi-classification ensemble in the following steps.

3.4. Stage 3: Classification Correction

Stage 3 uses an object-oriented classification ensemble method to improve classification based on classifications from multiple inputs and a set of indicators. The classification results from Stage 2, which use a traditional classification method, may reach accuracies that are acceptable for many classification applications (e.g., over 80%). However, when applied to coastal topographic mapping for terrain correction, this statistically acceptable percentage of errors may produce widely distributed artificial spikes and ditches in the terrain and therefore needs correction. A recent trend for improving classification is to utilize classification results from multiple classifiers [53,54]. A commonly used ensemble strategy is majority voting, which assigns the final class to the one receiving the most votes from the classifiers [50]. However, the limited spectral bands of photogrammetric UAVs restrict this strategy, because changing classifiers is unlikely to significantly correct spectrally confused areas. Therefore, this research explores a comprehensive approach that integrates spectral, texture, and contextual statistics, as well as results from multiple classifications, to improve classification.
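For reference, the majority-voting baseline mentioned above can be sketched as follows. This shows the conventional ensemble strategy, not the method developed in this research. The function name `majority_vote` and the tie-breaking rule (ties go to the earliest classifier in the list) are assumptions for this example.

```python
from collections import Counter

def majority_vote(label_maps):
    """Combine same-shaped label rasters by per-pixel majority vote.

    label_maps is a list of 2D lists of class labels, one per classifier.
    Ties are resolved in favour of the earliest classifier in the list
    (an assumption of this sketch).
    """
    rows, cols = len(label_maps[0]), len(label_maps[0][0])
    result = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            votes = [m[r][c] for m in label_maps]
            counts = Counter(votes)
            top = max(counts.values())
            # First label, in classifier order, that reaches the top count.
            out_row.append(next(v for v in votes if counts[v] == top))
        result.append(out_row)
    return result

# Three hypothetical one-row classifications of two pixels.
a = [["water", "ground"]]
b = [["water", "low_vegetation"]]
c = [["ground", "low_vegetation"]]
combined = majority_vote([a, b, c])
```

Because every voter sees the same three spectral bands, pixels that are spectrally confused tend to be mislabeled by all voters at once, which is why voting alone offers little correction in this setting.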

For UAV applications, the orthophoto is the main source for land cover classification. Adding layers from topographic statistics may improve classification in certain areas but reduce accuracy in others, especially when large gaps exist in the matching points because of a lack of texture or shadow effects resulting from low contrast or sunlight illumination. Compared with traditional pixel-based classification, the object-oriented ensemble approach provides a promising solution because of its flexibility in integration and logical realization.

Figure 4 conceptualizes the method to integrate multiple classification results with other indicators.

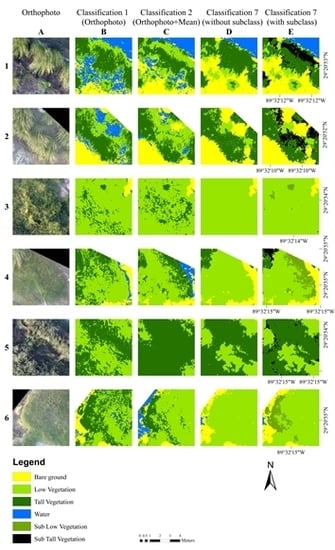

Figure 5 illustrates the need for classification correction and the effect of analytical steps on six representative plots to demonstrate the scenarios and procedures in the following sections.

Let Classification 1 represent the classification based on the orthophoto and Classification 2 denote the other optimal classification result, which in this experiment is the classification based on the orthophoto and the mean statistic layer. Other useful information for the following steps includes the point density and the maximum, minimum, maximum–minimum, and mean elevations derived from the matching points, texture (variance) from the natural color images, and the band with high contrast in brightness values between vegetated and shadowed areas. Among the three RGB bands, the red band of the orthophoto provides the highest contrast in troubled areas based on visual examination of individual bands in this experiment and hence differentiates dark and bright objects.

After comparative classification experiments, Classification 1 based on the orthophoto and Classification 2 based on the orthophoto and mean elevation serve as the multi-classification inputs. The four main correction steps shown on the right side of Figure 4 correspond to four main types of class confusion observed in Classification 2 in this experiment.

(1) Correction of water

In coastal environments, water is frequently present in wetlands and in areas with highly saturated soil. Water-related errors often result from the following phenomena. First, users should carefully examine DTM quality, especially in water areas, and remove outliers as stated in the UAV mapping section. For example, two mismatched pixels created two sharp artificial drops in water areas in this experiment. Because of the lack of nearby matching points and the interpolation process, the DTM, classification, and object-oriented statistics further propagated and expanded these errors. Therefore, a manual preprocessing step removed these points before DTM interpolation. Second, to acquire high-quality pictures, UAV field data collection usually occurs in sunny weather with relatively calm water surfaces, which results in no matching points (no point density value) in some water areas because of a lack of texture. After the mismatched points are removed, the interpolation process may derive reasonable elevation values from nearby points. Therefore, part of these water areas may have the correct classification label, and the absence of point density may be a useful indicator for other misclassified water areas where applicable. Third, because of the confusion between shallow water and dark ground surfaces with high moisture, as demonstrated in Plots 1 and 2 in Figure 5, many misclassified water pixels may scatter over land in fragmented shapes or salt-and-pepper patterns. At this study site, these errors are usually located in dark soil or shadowed areas between vegetation leaves, and they need correction first because later processes may compare object elevations with nearby ground surfaces. Users can extract and correct these errors based on object size, elevation, and other characteristics such as the absence of point density. This study used the region group tool in ArcGIS to form objects. To estimate the elevation of nearby ground, this research uses the zonal statistics tool based on the objects and the mean ground elevation calculated with a 3 × 3 kernel from the ground elevation image. This mean elevation calculation expands the edges of the ground elevation layer into the boundary of water objects so that the zonal statistics return valid values. Fourth, some areas with shallow water (a few centimeters) next to land have dense matching points from the bathymetry and may therefore be misclassified as ground. Nevertheless, visual inspection of the DTM in these areas reflected reasonable topography in this experiment, indicating no need for class and terrain correction. However, users should assess the needs at their own sites, as water conditions may differ in color, clarity, waves, bed texture, etc.
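The edge-expanding effect of the 3 × 3 mean described above can be sketched in a few lines. The sketch assumes that non-ground cells are stored as `None` and that the mean is taken over valid neighbours only; the function name `focal_mean_3x3` is hypothetical, and this is not the ArcGIS implementation.

```python
def focal_mean_3x3(grid):
    """Mean of the valid (non-None) cells in each 3 x 3 neighbourhood.

    A cell with no valid neighbour stays None. Cells that border valid
    ground cells receive a value, so the ground elevation layer grows by
    one pixel into adjacent objects.
    """
    rows, cols = len(grid), len(grid[0])
    out = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            values = [
                grid[y][x]
                for y in range(max(r - 1, 0), min(r + 2, rows))
                for x in range(max(c - 1, 0), min(c + 2, cols))
                if grid[y][x] is not None
            ]
            if values:
                out[r][c] = sum(values) / len(values)
    return out

# Hypothetical ground elevation image: left column is ground, the rest is not.
ground_elev = [[0.2, None, None],
               [0.4, None, None]]
nearby_ground = focal_mean_3x3(ground_elev)
```

In this example the middle column, which borders ground, receives the mean of the two ground cells, while the right column remains `None` because no ground lies within one pixel of it.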

Users can apply the above characteristics to correct water-related classification errors. The analytical processes in Figure 4 show those that were effective in this experiment. From the result of Classification 2, the correction procedures first extract the pixels labeled as water to form objects and then change an object to ground if the object is too small, too high, or has no matching points (point density < 1). Based on observation of the water pixels and the terrain model, this experiment applied five pixels for object size and 0.22 m (NAVD 88) for the elevation threshold. The no-point-density condition has no impact on large objects of classified water from actual water areas, as part of each such object likely has some matching points. Corrected Classification 3 is the corrected classification result for the next process.
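The water correction rule can be sketched as a region-grouping pass followed by a per-object test. The sketch below makes several assumptions that the text does not fix: objects are 4-connected, "too small" means at most five pixels, "too high" means a mean DTM elevation above 0.22 m, and "no matching points" means every pixel of the object has zero point density. The function names are hypothetical, and the breadth-first grouping stands in for the ArcGIS region group tool.

```python
from collections import deque

def label_objects(mask):
    """Group 4-connected True cells into objects; returns a list of pixel lists."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, pixels = deque([(r, c)]), []
                while queue:
                    y, x = queue.popleft()
                    pixels.append((y, x))
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                objects.append(pixels)
    return objects

def correct_water(classes, dtm, density, max_size=5, max_elev=0.22):
    """Relabel water objects as ground if too small, too high, or without points."""
    out = [row[:] for row in classes]  # leave the input raster untouched
    mask = [[label == "water" for label in row] for row in classes]
    for pixels in label_objects(mask):
        mean_elev = sum(dtm[y][x] for y, x in pixels) / len(pixels)
        no_points = all(density[y][x] == 0 for y, x in pixels)
        if len(pixels) <= max_size or mean_elev > max_elev or no_points:
            for y, x in pixels:
                out[y][x] = "ground"
    return out
```

A six-pixel water body at low elevation with at least one matching point keeps its label under these rules, whereas an isolated water pixel on land is relabeled as ground.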

(2) Correction of ground

When correcting confusion between vegetation types, the procedure needs to compare an object's elevation with nearby ground pixels to estimate vegetation height. However, some shadowed pixels in tall vegetation were misclassified as ground, as demonstrated in Plot 2 in Figure 5, which resulted in underestimated vegetation height and hence a failure to separate tall and low vegetation. Therefore, the goal of this step is to correct these ground-labeled pixels to vegetation. Unlike exposed ground areas, which are typically larger, these objects formed from misclassified ground pixels are fragmented in shape and scattered between leaves, with elevations much higher than nearby ground surfaces but close to those of the tall vegetation. Therefore, this step changes objects from ground to tall vegetation if an object is too small and its elevation difference from nearby tall vegetation is smaller than a threshold. Based on observation of the extracted ground objects, this experiment used ten pixels for object size and 0.2 m for the elevation threshold.
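A minimal sketch of this ground correction rule follows, operating on per-object attributes that are assumed to have been computed beforehand (for example by region grouping and zonal statistics). The attribute names, the use of an absolute elevation difference, and the inclusive size comparison are assumptions for this example.

```python
def correct_ground(objects, max_size=10, max_diff=0.2):
    """Return a new label for each object.

    Each object is a dict with the hypothetical keys:
      label            current class label
      size             object size in pixels
      mean_elev        mean object elevation (m)
      nearby_tall_elev mean elevation of nearby tall vegetation (m),
                       or None when no tall vegetation is nearby
    """
    labels = []
    for obj in objects:
        near = obj["nearby_tall_elev"]
        is_small = obj["size"] <= max_size
        is_close = near is not None and abs(obj["mean_elev"] - near) < max_diff
        if obj["label"] == "ground" and is_small and is_close:
            labels.append("tall_vegetation")
        else:
            labels.append(obj["label"])
    return labels

# Hypothetical objects: a shadowed gap in the canopy and a large mudflat.
example = [
    {"label": "ground", "size": 4, "mean_elev": 1.45, "nearby_tall_elev": 1.50},
    {"label": "ground", "size": 250, "mean_elev": 0.30, "nearby_tall_elev": 1.50},
]
new_labels = correct_ground(example)
```

Both conditions must hold together, so a large exposed ground area is never relabeled even if interpolation has raised its elevation.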

(3) Correction of tall vegetation with low contrast

One type of severe confusion between tall vegetation and low vegetation occurs in clusters of tall vegetation, as illustrated in Plots 1 and 2 in Figure 5. Further examination reveals a common characteristic of low contrast caused by shadow or sparse leaves on edges, which often causes gaps in the point cloud with no point density. The spectral band with the larger contrast, which is the red band in this true-color orthophoto, helps differentiate objects by contrast. However, ground areas among tall vegetation may have no point density, and interpolation then overestimates their elevations in the topographic statistics. These errors lead to misclassification as low vegetation in Classification 2. When the elevation statistic layer is not involved, however, these areas are classified as ground or water in Classification 1. Therefore, the method extracts the pixels that are classified as low vegetation in the current result but as ground or water in Classification 1 for further analysis.

If an object of low vegetation in this collection has no nearby ground pixels (no value in nearby ground elevation), it is likely an isolated ground area among tall vegetation that is causing the problem above. Therefore, these pixels of low vegetation are first corrected to ground. The corrected classification is then used to extract low vegetation with no point density, and the objects with mean spectral values in the red band below seventy are reclassified as tall vegetation. Note that the contrast threshold applies to an extracted subset of potentially troubled areas rather than the entire image; correctly classified healthy low vegetation among tall vegetation is usually not in this subset and hence will not cause problems. Corrected Classification 5 concludes the correction of tall vegetation with low contrast.

(4) Correction of taller low vegetation

One type of widely distributed error in low vegetation, as demonstrated in Plots 3 and 4 in Figure 5, is that low vegetation at relatively higher elevation is classified as tall vegetation when a topographic layer is used in classification. However, these areas cause no trouble when only the orthophoto is used. Thus, the correction procedures extract the pixels classified as tall vegetation in the current result but as low vegetation in Classification 1. Among these tall vegetation candidates, the objects with variance less than or equal to a threshold are corrected to low vegetation. This experiment used twenty as the threshold, chosen by examining the data. These procedures correct a significant number of errors in this category.
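The disagreement-based extraction and variance test in this step can be sketched as below. The sketch assumes per-object attributes are already available; the keys `current`, `class1`, and `variance` and the function name are hypothetical.

```python
def correct_taller_low_vegetation(objects, max_variance=20):
    """Relabel low-texture tall vegetation candidates as low vegetation.

    An object is a candidate only when the current result says tall
    vegetation while Classification 1 (orthophoto only) says low vegetation.
    Candidates with texture variance <= max_variance become low vegetation.
    """
    labels = []
    for obj in objects:
        is_candidate = (obj["current"] == "tall_vegetation"
                        and obj["class1"] == "low_vegetation")
        if is_candidate and obj["variance"] <= max_variance:
            labels.append("low_vegetation")
        else:
            labels.append(obj["current"])
    return labels

# Hypothetical objects with texture variance taken from the orthophoto.
candidates = [
    {"current": "tall_vegetation", "class1": "low_vegetation", "variance": 12},
    {"current": "tall_vegetation", "class1": "low_vegetation", "variance": 85},
]
relabeled = correct_taller_low_vegetation(candidates)
```

Restricting the variance test to areas where the two classifications disagree keeps the threshold from affecting tall vegetation that both inputs agree on.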

(5) Correction of low vegetation labeled as tall vegetation

In this last step, the classification correction processes gradually extract and correct classification labels by examining different spectral and contextual information available from photogrammetric UAV imagery. As in the above analyses, the thresholds are derivable from the subset areas of interest. One major type of error remaining in the classification is low vegetation mislabeled as tall vegetation, as shown in Plot 5 in Figure 5. Many errors in this category share a relatively dark color in contrast to well-grown tall vegetation, and some are low vegetation located in the shadows of tall vegetation. However, the white parts of the targets produce high reflectance and are confused with tall vegetation, which needs correction before the correction of low vegetation. Thus, the method corrects tall vegetation objects with mean reflectance values in the red band greater than or equal to 200 to ground. Considering that tall vegetation in this study usually occurs in larger clusters, tall vegetation objects smaller than or equal to 400 pixels should be low vegetation. This procedure corrects some portion of well-grown low vegetation. The following procedure confirms well-grown tall vegetation objects with variances greater than or equal to 100 as tall vegetation and excludes them from further examination. From the remaining collection, tall vegetation objects with an average elevation difference from nearby low vegetation greater than or equal to 0.03 m are excluded as tall vegetation, since low vegetation usually has an elevation similar to that of nearby low vegetation. With the updated low vegetation and tall vegetation, the objects with an elevation difference from nearby low vegetation of less than −0.09 m (lower than nearby low vegetation) are corrected to low vegetation. Finally, the method changes tall vegetation objects with variance less than 40, or with reflectance values in the red band smaller than 30 or larger than 72, to low vegetation. This concludes the classification correction processes, as no significant errors remain according to visual inspection.
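One possible reading of this rule sequence, written as an ordered cascade for a single tall vegetation object, is sketched below. The attribute names are hypothetical. The sketch also simplifies the text in one respect: the elevation difference from nearby low vegetation is treated as a fixed input, whereas the text recomputes it after the low and tall vegetation labels are updated.

```python
def correct_tall_object(obj):
    """Apply the step (5) rules in order to one tall vegetation object.

    obj is a dict with the hypothetical keys:
      red_mean          mean red-band value of the object
      size              object size in pixels
      variance          texture variance of the object
      elev_diff_to_low  mean elevation minus that of nearby low vegetation (m)
    """
    if obj["red_mean"] >= 200:        # bright white target parts
        return "ground"
    if obj["size"] <= 400:            # tall vegetation occurs in larger clusters
        return "low_vegetation"
    if obj["variance"] >= 100:        # well-grown tall vegetation, confirmed
        return "tall_vegetation"
    if obj["elev_diff_to_low"] >= 0.03:
        return "tall_vegetation"      # clearly above nearby low vegetation
    if obj["elev_diff_to_low"] < -0.09:
        return "low_vegetation"       # lower than nearby low vegetation
    if obj["variance"] < 40 or obj["red_mean"] < 30 or obj["red_mean"] > 72:
        return "low_vegetation"
    return "tall_vegetation"

# Hypothetical large, flat, dark object at the same level as nearby low vegetation.
label = correct_tall_object(
    {"red_mean": 50, "size": 900, "variance": 30, "elev_diff_to_low": 0.0}
)
```

The order matters: an object confirmed or excluded by an earlier rule never reaches the later, more permissive tests.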

3.5. Stage 4: Terrain Correction

Theoretically, there are two main approaches to integrating field surveys with remotely sensed images for terrain correction in vegetated areas. The first approach relies on dense and well-distributed GPS surveys to generate an interpolated DEM or TIN model for use within the ground-filtering process. In densely vegetated areas, this approach requires full coverage and a dense GPS survey of the study site for better control, which can be challenging for wetland field surveys given the limitations of accessibility, weather, and cost. The second approach first runs the ground-filtering process on the matching points to generate a DTM and then uses land-cover classification and terrain correction factors derived from GPS points for each class to conduct terrain correction. The second approach allows flexible sampling numbers and locations, has potential for mapping large areas and upscaling, and has proven effective [2]. Therefore, this research adopts terrain correction factors based on land-cover classification.

Given a set of field samples for each class, the terrain correction procedure starts by calculating the average elevation difference between the GPS surveys and a terrain model. This terrain model can be a DTM derived directly from the UAV terrain mapping process or from statistic maps. Since the goal of this research is to refine the poorly mapped DTM from the UAV system, the terrain difference is calculated by subtracting the initial DTM values from the GPS survey values, so that negative values indicate ground lower than the values mapped in the DTM and hence a subtractive terrain correction factor. The final terrain correction factor for a class is the mean elevation difference over all samples located in that class. Finally, the terrain correction process applies the terrain correction factor according to the classification map and produces a final corrected DTM.
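The calculation described above can be sketched as follows: the correction factor of a class is the mean of (GPS elevation − initial DTM elevation) over the samples in that class, and the corrected DTM adds that factor to every pixel of the class. The function names and the sample values are made up for illustration, and leaving classes without samples unchanged is an assumption of this sketch.

```python
def correction_factors(samples):
    """Mean (GPS - DTM) elevation difference per class.

    samples is an iterable of (class_label, gps_elevation, dtm_elevation).
    A negative factor means the true ground is lower than the mapped DTM.
    """
    sums, counts = {}, {}
    for label, gps, dtm in samples:
        sums[label] = sums.get(label, 0.0) + (gps - dtm)
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def apply_correction(dtm, classes, factors):
    """Add each pixel's class factor to the initial DTM.

    Classes without a factor are left unchanged (assumption of this sketch).
    """
    return [
        [z + factors.get(label, 0.0) for z, label in zip(z_row, c_row)]
        for z_row, c_row in zip(dtm, classes)
    ]

# Hypothetical GPS samples: (class, GPS elevation, initial DTM elevation) in metres.
gps_samples = [
    ("tall_vegetation", 0.30, 1.60),
    ("tall_vegetation", 0.40, 1.50),
    ("low_vegetation", 0.50, 0.80),
    ("ground", 0.20, 0.20),
]
factors = correction_factors(gps_samples)
corrected = apply_correction(
    [[1.50, 0.90, 0.25]],
    [["tall_vegetation", "low_vegetation", "ground"]],
    factors,
)
```

With these made-up samples, the tall vegetation factor is −1.2 m and the low vegetation factor is −0.3 m, so the example row is lowered to 0.30 m and 0.60 m in the vegetated pixels while the ground pixel stays at 0.25 m.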

5. Discussion

With a flight height of 42 m, the UAV mapping generated dense point clouds with an average point sampling distance of 1.37 cm and a registration RMSE of 0.008 m. The standard deviation in bare ground areas is 0.029 m, which is comparable to the approximately 0.029 m reported by Mancini, Dubbini, Gattelli, Stecchi, Fabbri and Gabbianelli [42] and better than the 3.5–5.0 standard deviation achieved by Goncalves and Henriques [41]. These results indicate reliable mapping accuracy and accurate registration between GPS measurements and the DTM. In addition, the experiment shows that dense point mapping from UAV can produce DTMs with 2 cm resolution and centimeter-level vertical accuracy comparable to RTK GPS control in non-vegetated areas. However, significant overestimation, amounting to approximately 80% of vegetation height, was present in densely vegetated areas (80.1% for tall vegetation and 81.6% for low vegetation). This shows that large areas of dense vegetation are problematic when applying UAVs in coastal environments and therefore need further correction.

Before assigning terrain correction factors, this research compares multiple classification methods to identify the optimal one given the limited spectral information from photogrammetric UAV. Classification with a single SVM classifier yielded 83.98% accuracy based on the orthophoto. Adding the mean elevation statistic layer significantly improves the accuracy to 93.2%. This result shows that adding topographic information to spectral information can significantly improve on classification based solely on the orthophoto. In addition, adding the minimum or maximum statistic layer produced comparable classification accuracies because of their high correlation and the small sampling size of 2 cm used in this research. Which of these three statistic layers provides the best classification could be site-dependent. The proposed method based on multi-classification and object-oriented analysis further improves the classification accuracy to 96.12%. More corrections are observable in particular areas, such as the edges of land cover objects and areas with low contrast, which are spectrally confused by traditional classification methods but are missed by the assessment results because of sampling limitations in remote sensing. Furthermore, the developed hybrid analytical method differs from existing ensemble methods [53,54] by incorporating comprehensive texture, contextual, and logic information, which allows correction of the spectral confusion created by traditional classification methods.

Terrain correction based on the best single classification method significantly corrects the overestimation in both low and tall vegetation, and the proposed method further improves the accuracy, especially in areas with spectral confusion. The strategy of extracting subclasses from the analytical procedures for adjusted terrain correction factors shows a slight improvement in topographic mapping. The final corrected DTM based on the new method produces a mean accuracy of −2 cm for low grass areas, which is better than the 5 cm achieved by Gruszczyński, Matwij and Ćwiąkała [39]. Correction in tall vegetation reduced mean terrain errors from 1.305 m to 0.057 m. Furthermore, it is worth noting that the Spartina alterniflora at this site grows on banks in a much denser and taller condition than in most other reported experiments in frequently inundated wetlands. Areas with taller and denser vegetation are relatively more challenging for topographic mapping than areas covered by low and sparse vegetation.

While addressing the challenge of classifying UAV data with limited spectral information, this research produced several findings and encountered challenges that are worth discussing. The following texture or contextual statistics proved useful for UAV data from natural color cameras: maximum elevation, minimum elevation, vegetation height (maximum–minimum), mean elevation, point density, the spectral band with relatively larger contrast, and variance calculated from the orthophoto. The first three elevation statistics are usable as direct input for pixel-based classification, while the others may be more meaningful at the object level or in analytical steps. Given the dense point clouds produced by UAV mapping, point density did not help classification when used directly as an additional input band. However, areas on land without matching points are useful indicators for extracting problem areas that need correction because of shadow, low contrast, or lack of texture. Among the three RGB bands of the most commonly used natural color cameras, the red band showed the largest contrast in brightness in this experiment. When applying UAV to densely vegetated coastal environments, another major challenge is the lack of matching points (gaps) in shadows and areas with low contrast. The most representative locations are the edges of tall vegetation with a relatively darker tone and areas shadowed by nearby taller objects.
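As an illustration of the elevation statistics listed above, the following sketch bins matched points into square cells and derives the maximum, minimum, mean, vegetation height (maximum–minimum), point density, and a gap mask for cells without matching points. It is a simplified example under stated assumptions: the function name, the grid origin, and the convention that row indices increase with y are choices made here for illustration, not the processing chain used in this research.

```python
import numpy as np

def grid_statistics(x, y, z, cell_size, n_rows, n_cols, x_min=0.0, y_min=0.0):
    """Per-cell elevation statistics from a point cloud (illustrative sketch)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    z = np.asarray(z, dtype=float)

    # Map each point to a cell; row index increases with y (an assumption).
    col = np.floor((x - x_min) / cell_size).astype(int)
    row = np.floor((y - y_min) / cell_size).astype(int)
    inside = (row >= 0) & (row < n_rows) & (col >= 0) & (col < n_cols)
    flat = row[inside] * n_cols + col[inside]
    z_in = z[inside]

    n_cells = n_rows * n_cols
    count = np.bincount(flat, minlength=n_cells)
    z_sum = np.bincount(flat, weights=z_in, minlength=n_cells)

    # Unbuffered in-place reductions handle repeated cell indices correctly.
    z_max = np.full(n_cells, -np.inf)
    z_min = np.full(n_cells, np.inf)
    np.maximum.at(z_max, flat, z_in)
    np.minimum.at(z_min, flat, z_in)

    empty = count == 0
    z_max[empty] = np.nan
    z_min[empty] = np.nan
    with np.errstate(invalid="ignore", divide="ignore"):
        z_mean = z_sum / count  # empty cells become NaN

    shape = (n_rows, n_cols)
    return {
        "max": z_max.reshape(shape),
        "min": z_min.reshape(shape),
        "mean": z_mean.reshape(shape),
        "height": (z_max - z_min).reshape(shape),
        "count": count.reshape(shape),
        "gap": empty.reshape(shape),  # True where no matching points exist
    }

# Three hypothetical points on a 2 x 2 grid of 1 m cells.
stats = grid_statistics([0.5, 0.6, 1.5], [0.5, 0.4, 0.5], [1.0, 3.0, 2.0],
                        cell_size=1.0, n_rows=2, n_cols=2)
```

In this sketch the `gap` layer corresponds to the areas without matching points, which the text identifies as indicators of shadow, low contrast, or lack of texture.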

6. Conclusions

This research aims to solve the problem of topographic mapping under dense vegetation through a cost-effective photogrammetric UAV system. By integrating GPS surveys with the UAV-derived points from the vegetation crown, this research developed an object-oriented classification ensemble algorithm for classification and terrain correction based on multiple classifications and a set of indicators derivable from the matching points and orthophoto. The research validated the method at a wetland restoration site, where dense tall vegetation and water cover the lower portion of the curved surface and dense low vegetation spreads across the relatively higher areas.

Overall, the research results show that it is feasible to map terrain under a dense vegetation canopy by integrating UAV-derived points from the vegetation crown with GPS field surveys. The developed method offers a timely and cost-effective way to apply UAV to coastal topographic mapping under dense vegetation. The most promising applications of this method are high-resolution monitoring of sediment dynamics caused by hazards, wetland restoration, and human activities, and multi-disciplinary integration with the work of wetland ecologists and sedimentologists. The sizes of intensively surveyed UAV study sites often match those of the sampling sites used by these researchers. For example, users can apply UAV mapping to mangrove and salt marsh wetlands for high-resolution 3D inundation modeling by integrating it with the hourly water level recorders installed by wetland ecologists.

Terrain estimation under dense vegetation is one of the most challenging issues in terrain mapping. At this early stage, the goal of this research is to test whether it is possible to derive meaningful terrain estimates by applying a photogrammetric UAV to densely vegetated wetlands, and to demonstrate a successful solution that can serve as a strategic reference for other users. To apply the method to other study sites, users can follow the general processes and tools outlined in Figure 3. To tailor the classification correction to their own classification schemes, users can adopt the method by generating the two optimal classification results and all the listed indicators, observing the confusion patterns between each pair of classes, and analyzing how to use those indicators to correct the classification with reference to the logic used in this research. Since UAV provides very high resolution mapping with detailed texture, users can determine all thresholds by observing the derived subset images. Examination methods may include, but are not limited to, displaying the map of statistics with classified or contrasting colors, visual comparison with orthophoto images, trial and error experiments, histogram examination, and examining the values of selected samples (such as water and targets). In addition, replicating this method at other sites is not a matter of success or failure but rather of matching the invested effort to the desired level of improvement. For example, users may choose to apply the method to correct only one or two of the most troublesome classification errors, depending on their needs.

A potential limitation of the data collection, as some may point out, is the limited number of images and overlap. Although more overlap is generally better, few studies have quantified the minimum overlap rates required for UAV mapping. Traditional photogrammetric stereo mapping requires 60% overlap along flight lines and 30–40% overlap between flight lines. This experiment has overlap rates of around 74% and 55%, respectively, and an average of five images for each matching point. Moreover, visual inspection of the point matching results indicates no problems in areas with clear images; areas with no matching points are mostly shadowed areas or areas with low contrast or high moisture, which is not an issue solvable by increasing the number of images. Nevertheless, a future study is planned to quantify the uncertainties induced by data acquisition settings such as overlap rates, flight height, flight speed, and imaging time. Other directions for future studies include validation of the method in different types of landscapes; development of local terrain correction factors based on local vegetation status, nearby elevation samples, or bare ground elevations; and examination of the impact of resampling cell sizes of these elevation models to alleviate the heterogeneity of crown structure. Another direction is to develop more robust and global solutions.
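For readers planning flights, the relationship between overlap rate and image spacing can be sketched as follows. The example uses the standard photogrammetric relation, overlap = 1 − spacing / footprint, where the footprint is the ground coverage of one image in the direction considered. The 100 m footprint is a hypothetical value chosen for illustration and is not a parameter of this experiment.

```python
def overlap_fraction(footprint_m, spacing_m):
    """Overlap between adjacent images, from ground footprint and spacing."""
    if footprint_m <= 0:
        raise ValueError("footprint_m must be positive")
    return max(0.0, 1.0 - spacing_m / footprint_m)

def spacing_for_overlap(footprint_m, overlap):
    """Spacing between exposures (or flight lines) for a target overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)

# Hypothetical 100 m footprint in both directions (assumed value).
forward_spacing = spacing_for_overlap(100.0, 0.74)  # along flight lines
side_spacing = spacing_for_overlap(100.0, 0.55)     # between flight lines
```

Under this assumed footprint, the overlap rates reported above correspond to exposure spacings of roughly 26 m along flight lines and 45 m between flight lines.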