1. Introduction

Due to periodical imaging from the earth, satellite sensors have become a powerful and unique tool for multi-temporal analysis. Accurate image geo-referencing is an important pre-processing step for this analysis [

1], in order to avoid errors due to misregistration [

2], because, even small geometric errors could have a significant effect on retrieval of subsequent information [

3]. Most modern satellite imagery is supplied with a sufficient and reliable Rational Polynomial Coefficients (RPC) [

4], and sub-pixel geo-referencing accuracies can be achieved by bias-compensating these coefficients using a few Ground Control Points (GCP) [

5,

6]. However, old archive imagery are a main part of the data sources for multi-temporal analysis. RPCs have not been available for these imagery, and if we could find any, they would not be reliable. Therefore, the Rational Function Model (RFM) can only be applied to the old archive imagery in a terrain dependent manner. However, if an accurately geo-referenced master image is available for the region of interest, old archive imagery can be co-registered with the master image [

7].

Despite the popularity and multiple achievements of terrain independent RFMs and their widespread use for high resolution satellite images, the terrain dependent RFMs were not really welcomed [

8,

9,

10]. At least, this can be due to two main reasons: (1) Third-order 3D RFMs encompass 80 parameters [

11], none of which are totally independent [

12,

13]. The existing correlation among these parameters causes the problem be ill-posed [

13,

14]. This, in turn, results in a steady fall in accuracy and reliability, and, in some cases, even solving the equation system might be impossible [

15]. (2) Even if the zero-order term of polynomials in the denominator of RFMs is supposed to be equal to the fixed value of 1.00, at least 39 Ground Control points (GCPs) are still needed to solve the remaining 78 parameters. Obviously, the degree of freedom would be zero in this case; consequently, the results are not quite reliable [

16]. In some resources [

17], the number of required GCPs for an accurate and reliable solution of terrain dependent RFMs is mentioned as the twice of the minimum sufficient GCPs, i.e., 78 points. In many cases, not only this many control points, but also occasionally the minimum required GCPs are not available either. Thus, some researchers restricted the use of terrain dependent RFMs only to the usages where accuracy is not a major concern [

17,

18,

19], and some others are totally against its utilization [

5,

9].

There are two main strategies to encounter the ill-posed problems [

20]: (1) variational regularization; and (2) variable selection. Regularization of the normal equation plays an important role in the reduction of the multi-collinearity of rational polynomial coefficients (RPC) and improving the model’s accuracy. Nevertheless, in this strategy besides the essential parameters a set of unnecessary ones are estimated as well. In fact, this strategy is attempting to cutting down the negative effect of the inessential parameters on estimating the effectual ones as much as possible. However, unwanted parameters will exist in the model and some GCPs are required to estimate them as well, which indicates an inefficient use of the available GCPs. Conversely, in the second strategy, the unnecessary parameters are put aside; consequently, apart from solving the problem of the ill-posed normal equation, the number of existing unknown parameters will be reduced [

12,

21,

22]. Thus, fewer GCPs are required to solve the model, and, due to the increase in the degree of freedom, the final results will be more reliable [

12,

22,

23]. Moreover, owing to the absence of unnecessary parameters and reducing the multiple correlations between the remaining parameters, the final model would be more interpretable [

20].

The main issue in this strategy is to determine the optimal structure of the model through distinguishing the effectual and unnecessary parameters. The strategy accepted in commercial software such as PCI-Geomatica [

24] for solving terrain dependent RFM is to use similar structure in the RFM. It means that, regardless of the zero-order term in the denominator polynomials, the structure of the four polynomials in the RFM is considered alike, and the maximum possible number of parameters regarding to the available GCPs are employed in all polynomials. Notwithstanding the least possible computational cost, it is evident that, in this strategy, achieving the most accurate results for a given number of GCPs is quite unlikely. Considering that the image space distortions depends not only on the interior and exterior orientation parameters of the imaging system, but also on other factors such as terrain relief [

16], doubtlessly, the optimal structure of the RFM would be different for each scene.

Thus far, a wide variety of studies has been conducted in order to identify the optimal structure of RFMs, which generally fall into two main groups: linear algebra-oriented (LAO) and artificial intelligence-oriented (AIO). Among the LAO studies, the detection of the optimal structure of the RFM based on correlation analysis [

21], eigenvalue of structure matrix [

5], scatter matrix [

25], multi-collinearity analysis [

26], and nested regression [

20] can be mentioned; and, from the AIO researches, the use of GA [

22], modified GA [

12], and PSO algorithm [

23] to identify the optimal structure of the RFM can be pointed out.

One of the common features of the LAO methods is the sequential choice of parameters. This means that the quality of a parameter is firstly evaluated and its presence in the final structure is decided, then the next parameter is considered. In this way, not all the possible combinations of the parameters are taken into account; and consequently, the final structure would largely depend on the order of the evaluation of the parameters. Thus, the recognized optimal structure would probably not be global because variable selection is a non-deterministic polynomial (NP) problem whose search space is very large [

27]. Alternatively, the AIO methods effectively investigate the different combinations of the parameters through taking advantage of the meta-heuristic search algorithms; therefore, they essentially have a higher potential to achieve the global optimal structure [

12,

22]. In comparison with the LAO methods, one other privilege of AIO methods is the possibility of determining the optimal structure of the RFM for a desirable number of GCPs. However, the computational cost of these algorithms is excessively high, which is a serious drawback from a practical point of view [

20].

This paper relies on the technical knowledge of prevailing geometric conditions on the formation of satellite imagery and the effect of external factors (e.g., terrain relief) on the image. A knowledge-based search strategy is proposed to designate the optimal structure of terrain dependent RFMs in the geo-referencing of satellite imagery. Compared to the previous studies, the major advantage of the proposed method can be sought in establishing a balance among the four elements of accuracy, reliability, flexibility, and computational cost.

2. The Geometry of Linear Pushbroom Imaging

In the linear pushbroom imaging, by means of a row of detectors, which are arranged in the focal plane of the sensor and across the flight direction, a narrow strip of the object space is recorded at every instant,

Figure 1. With the sensor’s moving forward, consecutive strips of the object space are recorded one after another; thus, its thorough coverage is provided [

16].

In this way, the imaging geometry is parallel along the trajectory of linear pushbroom sensors [

28]. Considering the rather short acquisition time of the imagery (for example, about 1 s for an IKONOS scene), the velocity, and orientation of the sensor can be assumed constant during the capture of the scene [

29,

30,

31,

32]. Hence, modeling the along-track image component via the 3D affine model is easy [

32,

33,

34]. Implicit in the model are two individual projections, one scaled-orthogonal, and the other skew-parallel (

Figure 2a). If the angle of the velocity vector and the optical axis of the sensor changes during the acquisition time, a series of time-dependent terms are required to be added to the 3D affine model [

28,

35]. Eventually, if the position of all ground points is transferred to a common height plane in the object space, the eight-term model can be effectively reduced to six parameters [

36,

37], which, is the case for images with few terrain reliefs. However, if the terrain relief is considerable, it is essential to provide a 3D affine model with one or more quadratic terms [

28].

In contrast, the imaging geometry is perspective across the sensor’s trajectory [

28], as can be seen in

Figure 2b. Assuming a constant orientation during the capture of the scene, the across-track component of the image is successfully modeled via 3D projective model by some researchers [

38,

39].

3. Rational Function Model

In the RFM, the relationship of image space components (

r,

c) is stated according to the division of two 3D polynomials in object space components (

X,

Y,

Z) [

40].To reduce the computational errors and to improve the numerical stability of the equations, image and object coordinates are both normalized to the range of −1.00 to +1.00 [

11]. The direct RFM is given in Equation (1) [

40]:

where

r and

c are row and column components of the image space, respectively;

X,

Y, and

Z are the points’ coordinates in the object space; and

Pi is a polynomial in the ground coordinates. The maximum power of each ground coordinate is usually limited to 3, as well as the total power of all ground coordinates [

14].

In such a case, each polynomial is of 20-terms cubic form (Equation (2)):

where

aijk stands for the polynomial coefficients called rational polynomial coefficients (RPCs). The order of the terms is trivial and may differ in different literature. The above order is adopted from [

11].

The distortions caused by the projection geometry can be represented by the ratios of the first-order terms (refer to

Section 2), while corrections such as earth curvature, atmospheric refraction, lens distortion, etc., can be well approximated by the second-order terms [

14,

41]. Significant high-frequency dynamic changes in sensor acceleration and orientation, coupled with large rotation angles, are the main reasons for implementing the third-order and even higher order terms. Fortunately, the relatively smooth trajectory of space borne sensors coupled with the narrow-angle viewing optics, means that such influences are unlikely to be encountered [

5].

There are two different computational scenarios for RFMs. If the physical sensor model is available, an approximation of the model could be provided by RFMs; which is a terrain-independent solution and can be used as the replacement sensor model. On the other hand, in the terrain-dependent approach, the unknown parameters of RFMs are estimated using a set of GCPs [

14,

17,

42].

In the terrain-dependent scenario, it is common to consider the zero-order parameter of the denominator polynomials be equal to the fixed value of 1.00, in order to avoid the singularity of the normal equation matrix [

5]. Therefore, the model’s parameters decrease to 78 and, to solve them, at least 39 GCPs would be required. When the number of available GCPs is less than the limit, some parameters must be set aside. In the conventional term selection method, the model is estimated with the maximum possible number of parameters. In this way, parameters are included in a sequential manner, while a similar structure (with the exception of the zero-order term of denominator polynomials) is assumed for all polynomials, as it is implemented in PCI-Geomatica software [

24]. Therefore, in order to use up “

n” parameters in the structure of each polynomial, “2 ×

n − 1” GCPs are required to solve the RFM.

4. Proposed Method

According to

Section 2 and

Section 3, it can be noted that the first-order terms of the RFM play the most important role in the modeling of the image space distortions. In the vendor-provided RPCs, the coefficient of the zero- and first-order terms in both the numerator and the denominator polynomials are larger by many orders of magnitude than those of the second- and third-order terms [

14], which in fact refers to the same thing. In the case of limited available GCPs, the relative priority of compeer terms depends on the conditions of the scene being modeled. Regarding the different geometry of the along-track and the across-track components, the number of required parameters in the two denominator polynomials is different. Moreover, increasing the field of view of the sensor leads to one or more second-order terms that is required in order to model the earth curvature, the off-nadir viewing angle of the sensor, and the lens distortion effects. The utilization of the third-order terms in the model’s structure depends on the availability of the sufficient GCPs. Accordingly, the following search strategy is proposed for a given number of available GCPs. In the first step, a simplified version of the RFM is considered as Equation (3).

where (

X,

Y,

Z) are the object space coordinates; (

r,

c) are the image space coordinates;

ai,

bi,

ci, and

di are the unknown coefficients of the model;

P1 and

P3 are the numerator polynomials of the row and column components; and

P2 and

P4 are the denominator polynomials of the row and column components, respectively.

Since the zero-order term of the numerator polynomials has an effective contribution, this term is used for both the row and the column components in the final structure of the RFM. All possible combinations of the remaining 12 parameters in the structure of Equation (3) are evaluated separately for each of the row and the column components and the optimal structure of the RFM are detected. The search space of the first step is illustrated in

Figure 3.

In the first step, the maximum number of the search cases is equal to 4095, which can be calculated using Equation (4).

where

N1 is the maximum number of the search cases in the first step of the proposed method.

Thereafter, the necessary first- and second-order terms are determined. In the second step, the feasibility of the enrichment of the detected structure is evaluated by means of adding the third-order terms to the numerator polynomials. Therefore, the functional form of the RFM being searched would be as Equation (5).

where {

Piopt} is the optimal structure of the

i-th (for

i = 1, 2, 3, and 4) polynomial of Equation (3), which is detected in the first step.

Afterwards, all possible combinations of these 10 added third-order terms to the numerator polynomials are searched in the presence of the optimal structures form the first step. Search space of the second step is illustrated in

Figure 4.

In the second step, the maximum number of the search cases is equal to 1050, which can be calculated from Equation (6).

where

N2 is the maximum number of the search cases in the second step of the proposed method.

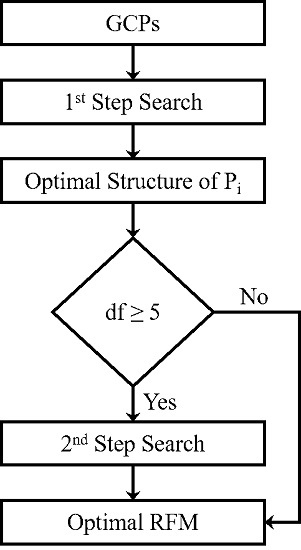

Obviously, the feasibility of executing the second step search depends on the number of available GCPs and the number of terms of the optimal structures from the first step. According to repetitive experiments, it is suggested that only if the first step had been completed with at least five degrees of freedom (

df), the second step to be executed. Thus, the flowchart of the proposed method would be as in

Figure 5.

In this paper, the Goodness-of-Fit (GoF) of the created model for each structure to be searched was used to detect the optimal structure of RFM [

20]. GoF of a statistical model describes how well the model fits a set of observations [

43]. The coefficient of determination of a linear regression between the observed and estimated values is used as the statistical measure of GoF, Equation (7).

where

n is the number of observation,

is the

i-th observation value,

is the mean of all the observation values, and

is the regression result of the

i-th observation. The value of

R2 is in the range of 0 to 1. The greater the value of

R2 is, the better the regression line approximates the observation data, and the more suitable the model [

44].

In addition to

R2, the degree of freedom of the created model was considered as a measure of the internal reliability of the model. Thus, the final benefit function for assessing different structures in the proposed search scheme can be written as Equation (8), which has to be maximized.

where

is the determination coefficient of

j-th structure (

Sj),

dfj is the degree of freedom of the

Sj, and

B(

Sj) is the benefit factor of the

Si.

5. Data Used

Four different datasets in Iran have been used in this article, including a SPOT-1A and a SPOT-1B imagery over Isfahan city, an IKONOS-Geo image, and one GeoEye-Geo image covering Hamedan and Uromieh cities, respectively. SPOT-3 Level lA is raw data that have been corrected radiometrically, while Level 1B data has been corrected additionally for certain known geometric distortions (Earth rotation and curvature, mirror look angles and orbit characteristics). Thus, SPOT-3 Level 1B image is partially rectified and approximates to an orthographic view of the Earth, but with the displacements due to relief remaining in the image. Furthermore, in this level, after these geometric corrections have been applied, the image is resampled at regular intervals of 10 m [

16]. To produce a Geo product, Ikonos-2 and GeoEye-1 images use a correction process that removes image distortions introduced by the collection geometry and then resamples the imagery to a uniform ground sample distance and a specified map projection. Geometric information and the number of available GCPs of each dataset are shown in

Table 1, along with the elevation relief of each dataset.

GCPs for Isfahan dataset were measured, in 1996, using differential GPS techniques based on the use of 3 Ashtech 12 dual frequency GPS sets. The accuracy of these points is estimated to be of the order of sub-meters. Their corresponding image co-ordinates were measured with an approximate precision of 0.5 pixels. For Hamedan and Uromieh datasets, GCPs were extracted from 1:2000 scale digital topographic maps produced by the National Cartographic Center (NCC) of Iran with 0.6 m planimetric and 0.5 m altimetric accuracies, respectively. The points are distinct features such as buildings, pool corners, walls, and road junctions. Their corresponding image coordinates were measured with an approximate precision of one pixel. These accuracies are lower than the potentially achievable accuracies from GeoEye-1 imagery. However, no better ground information was available for this dataset.

6. Results

In order to evaluate the accuracy of the proposed method, as it is impacted by the number of utilized GCPs, several experiments were performed on each dataset used. In each test, the root mean square error (rmse) of independent check points (CP), the mean, standard deviation (std.), and maximum absolute (max.) of residuals across the image’s rows (δr) and columns (δc), the condition number of the normal equation matrix (cond), the number of coefficients in

P1,

P2,

P3, and

P4 polynomials, and the degree of freedom (

df) of the equation system are provided in

Table 2,

Table 3,

Table 4 and

Table 5, for both the first and the second steps.

As can be seen from the results given in

Table 2,

Table 3,

Table 4 and

Table 5, the method is able to achieve sub-pixel accuracies even if a few GCPs (i.e., only 4–6 points) are available. In addition, the results of the proposed method are robust with respect to the number and combination of ground control points, and by changing the number of control points, there were no significant changes in the results. The comparison can be made with the results obtained with the conventional RFM and optimally structured RFM using GA [

22], which are given in

Table 6,

Table 7,

Table 8 and

Table 9. For a fair comparison, number and combination of GCPs were adopted similar to those of

Table 2,

Table 3,

Table 4 and

Table 5. The GA, however, did not converge to an acceptable result for fewer than eight GCPs in all datasets. Therefore, these results were excluded from

Table 6,

Table 7,

Table 8 and

Table 9. For each experiment, the GA was performed 10 times and the best results were reported. The number of parents, the population of generations, and maximum number of iterations were set to 20, 200, and 500, respectively. A detail description of GA optimization of RFMs is available in [

22]. Comparisons of the results in

Table 2,

Table 3,

Table 4 and

Table 5 with

Table 6,

Table 7,

Table 8 and

Table 9 show that accuracies obtained from the proposed method are considerably better than those obtained by conventional RFMs. Although the accuracies form the proposed method are a little better than those obtained by the GA-optimized RFMs, but the proposed algorithm benefits from remarkably higher degrees of freedom leading to more reliable results. In addition, the computational cost of the proposed method is significantly lower than that of GA algorithm, which confirms its effective performance.

In another experiment, low order polynomials (i.e., first and second order polynomials in

X,

Y, and

Z) were utilized for georeferencing the satellite imagery (

Table 10,

Table 11,

Table 12 and

Table 13). Normalized image and ground coordinates were used to estimate the models’ coefficients. Again, number and combination of GCPs were adopted similar to those of

Table 2,

Table 3,

Table 4 and

Table 5.

According to

Table 10,

Table 11,

Table 12 and

Table 13, first-order polynomials could not supply sub-pixel accuracies for SPOT-1A and SPOT-1B images, and the maximum absolute residual errors across the column numbers of Geoeye imagery are greater than one pixel, despite its sub-pixel accuracies. Second-order polynomials could not also provide sub-pixel accuracies for SPOT-1A imagery.

As the final experiment, the accuracy of the proposed method was examined with respect to the RPCs provided with Geoeye imagery. In this regard, first the RPCs were bias-compensated using four GCPs. Then, a regular grid of 100 image points was established, which is called original image points (OIPs) in this paper. Afterwards, these points were projected to the ground space for arbitrary heights in the range of the ground elevations by utilizing the compensated RPCs. Finally, these ground points were back-projected to the image space by means of the optimally structured RFM using the proposed method, which is called computed image points (CIPs). The mean, standard deviation (std.), and maximum absolute (max.) of discrepancies between the OIPs and CIPs across the image’s rows (δr) and columns (δc) are provided in

Table 14, along with their root mean square error (rmse).

7. Discussion

According to

Table 2,

Table 3,

Table 4 and

Table 5, the proposed method is able to achieve sub-pixel accuracies even if the number of available GCPs is limited; so that, sub-pixel accuracies were obtained using only six GCPs in all datasets. Scatter plot of residual errors shows that no systematic errors have occurred (

Figure 6). Moreover, the results from the proposed method are robust with respect to the number of available GCPs, so that there is no considerable change in the results with changing the number of GCPs. Obtained condition numbers illustrate that the normal equation matrices of the proposed method are pretty well-conditioned.

For the last three datasets (i.e., SPOT-1B, IKONOS-Geo, and GeoEye-Geo) which are geometrically corrected, the proposed method could achieve almost one-pixel accuracies using only four GCPs. However, for the first dataset (i.e., SPOT-1A) that is geometrically raw, it could not achieve an accuracy better than seven pixels using even five GCPs, which indicates more parameters are required in the structure of RFM to modeling the image space’s distortions.

In comparison with the results obtained from GA-optimized RFMs (

Table 6,

Table 7,

Table 8 and

Table 9), accuracies of the proposed method are competitive and even slightly better. It is worth mentioning that GA can theoretically provide the most accurate result if no computation limit is considered during the optimization process. However, in practice, some stopping criteria (e.g., a critical value for the fitness function or a certain number of iterations) are always utilized to complete the optimization process, which limits the achievable accuracies in comparison with the theoretical ones. In order to better compare these two methods, their accuracies are graphically displayed in

Figure 7.

Considering the capability of the GA to globally search the solution space [

45], it can be said that the results from the proposed method are to a great extent close to the global optimal solution. Undoubtedly, the final confirmation of the latter result requires more probing in the future studies. In addition, the proposed method can omit a larger number of parameters compared with the GA-optimized RFMs. Therefore, the optimal RFM has a higher number of degrees of freedom, resulting in a higher accuracy. On the other hand, according to

Table 6,

Table 7,

Table 8 and

Table 9, the average number of iterations of the GA was 350 iterations. Bearing in mind the fact that the population of each generation was considered to be 200 individuals, in each run the cost function has been evaluated on average 70,000 times; that is, 14 times slower than the proposed method. It should be noted that the results of the GA-optimized RFMs shown in

Table 3 are provided for 10 times execution of the algorithm, which by multiplying these two rates, the computational cost if the GA would increase to 140 times.

In comparison with the results obtained from the conventional RFMs shown in

Table 6,

Table 7,

Table 8 and

Table 9, the proposed method has always achieved better accuracies using fewer parameters. Therefore, the proposed algorithm benefits from higher degrees of freedom leading to more reliable results. Accuracies obtained from these two methods are graphically compared in

Figure 8.

According to

Figure 8, upon increasing the number of GCPs, the accuracy of conventional RFMs shows an upward trend and after that comes down. It can be noted that increasing the number of GCPs and consequently the number of estimable parameters cover the under-parameterization problem. However, increasing the number of parameters in the model results in the increment risk of the over-parameterization. Consequently, due to the involvement of unnecessary parameters in the model as well as the inherent correlation of some parameters, the overall accuracy of the model will be decreased, whereas this trend is not ever seen in the results of the proposed method, and this method is able to recognize the optimally structured RFMs using any arbitrary number of GCPs.

According to

Table 12 and

Table 13, first-order polynomials have provided sub-pixel accuracies for Ikonos and Geoeye-1 imagery. This could be due to the fact that these two sensors have a narrow instantaneous field of view (IFOV) and swath width, which leads their geometry to be more similar to a parallel projection. Therefore, a 3D affine model (which is equivalent to a first-order polynomial in

X,

Y, and

Z) is well suited for georeferencing these imagery, as it is confirmed in previous studies [

26]. However, it is not the case for sensors with a rather wide IFOV and swath width such as SPOT-3; as the first-order polynomials did not provide sub-pixel accuracies for SPOT-1A and SPOT-1B images.

As the final point, considering the sampling rate and the number of detectors for images used in this research(

Table 1), the required time interval to acquire a square scene for SPOT-3, IKONOS and Geoeye-1 sensors are approximately 9, 2 and 3.5 s, respectively. Since the proposed method has provided accurate and reliable results for SPOT-3 imagery, then the proposed method can be well utilized for other sensors with a shorter acquisition time interval.

8. Conclusions

Identifying the optimal structure of terrain dependent RFMs not only decreases the number of required GCPs, but also increases the model’s accuracy through reducing the multi-collinearity of RPCs and avoiding the ill-posed problem. Global optimization algorithms such as GA, evaluate different combinations of the parameters effectively. Thus, they have a high ability to detect the optimal structure of RFMs. However, one drawback of these algorithms is their high computational cost. To address this shortcoming, a knowledge-based search strategy is proposed in this article to identify the optimal structure of terrain dependent RFMs for geo-referencing of satellite imagery. The backbone of the proposed method is relying on the technical knowledge of the conditions controlling acquiring satellite images, as well as the effect of external factors (e.g., terrain relief) on the image space’s distortions. The capability of the proposed method in identifying the optimal structure of RFMs was evaluated, and its results were assessed in comparison with both GA-optimized and conventional RFMs.

According to the results, the proposed method achieved competitive and even better accuracies with a lower computational cost. It is worth mentioning that the speed of two methods was only compared based on the number of times which the cost function has been evaluated, while the search mechanism of the proposed method is much simpler than the GA. Therefore, the proposed method is in reality faster than the aforementioned ratio. Moreover, the proposed method could omit a larger number of parameters. Therefore, the proposed algorithm benefits from higher degrees of freedom leading to more reliable results. Future studies will be focused on the orthophoto and DEM generation using an optimally structured RFM based on the proposed method, as well as the epipolar resampling of linear pushbroom imagery.